Indexing - PowerPoint PPT Presentation

1 / 54

Title:

Indexing

Description:

... 'Dallas', read all tuples from secondary storage and check if city='Dallas' for each one (scan) ... 'Dallas', we can now find the index entry for 'Dallas' ... – PowerPoint PPT presentation

Number of Views:276

Avg rating:3.0/5.0

Title: Indexing

1

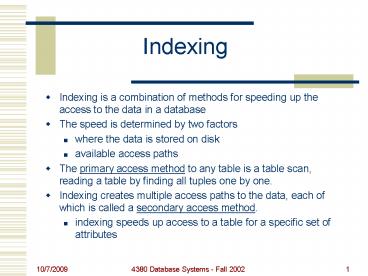

Indexing

- Indexing is a combination of methods for speeding

up the access to the data in a database - The speed is determined by two factors

- where the data is stored on disk

- available access paths

- The primary access method to any table is a table

scan, reading a table by finding all tuples one

by one. - Indexing creates multiple access paths to the

data, each of which is called a secondary access

method. - indexing speeds up access to a table for a

specific set of attributes

2

Indexing

- Example ITEMS(itemid, description, price, city)

- to find all items in Dallas, read all tuples

from secondary storage and check if cityDallas

for each one (scan) - may read many extra tuples that are not part of

the answer - suppose instead we create index itemcity for the

city attribute of the items relation - itemcity, Dallast1,t5,t10,

Bostont2,t3,t15, - to find all items for Dallas, we can now find

the index entry for Dallas and get the ids of

just the tuples we want - many fewer tuples are read from secondary storage

3

Disk terminology

rotation

Platters (2 platters 4 read/write surfaces

Track Can read four tracks from four surfaces at

the same time (all tracks at the same radius are

called a cylinder)

Read/write heads one for each surface

Disk arm controller

4

Disk terminology

- Reading data from a disk involves

- seek time -- move heads to the correct

track/cylinder - rotational latency -- wait until the required

data spins under the read/write heads (average is

about half the rotation time) - transfer time -- transfer the required data

(read/write data to/from a buffer) - Tracks contain the same amount of information

even though they have different circumferences

A block or a page is the smallest unit of data

transfer for a disk. Read a block / write a block

track

Block/page

5

Disk Space - final frontier (1999)

- Disk is much cheaper than memory (1GB 15 - 20)

- A fast disk today

- 40 - 10 GB per disk

- 4.9 ms average read seek time

- 2.99 ms average latency

- 10,000 rpm

- 280 - 452 Mbits/sec transfer rate

- 7.04 bits per square inch density

- 12 - 3 heads, 6 - 2 disk platters

- Disk is non-volatile storage that

- survives power failures

6

Disk space - the next generation (2002)

- Ultrastar 146Z10 by IBM (up to 146GB)

- 825 Mbs transfer rate

- 15 recoding zones, 12-2 data heads, 6-1 data

disks - Max areal density 26,263 Mbits/sq inch

- 4.7 ms seek time

- Average latency 3pm

- 10,000 rpm

7

Reading from disk

- Reading data from disk is extremely slow

(compared to reading from memory) - To read a page from disk

- find the page on disk (seeklatency times)

- transfer the data to memory/buffer (total bytes

transfer rate) - Assume the average page size is 4KB. To retrieve

a single row/tuple, we need to load the page that

contains it - assume 300 Mbits/sec transfer rate, to read a

page 4KB32764bits0.03Mbits hence we take

1/10000 of a second - 4.9 ms (seek) 2.99 ms (latency) 0.1 ms

(transfer time) 7.99 ms (seek and latency times

dominate!)

8

Reading from disk

- Assume the database saves a number of memory

slots (each holding exactly one page), which are

called buffers - To read / modify / write tuple t

- DISK (read it from disk, write it to buffer)

- DB (read it from buffer, modify)

- DISK (write it to disk, free the buffer space)

Buffer slots This buffer can hold 4 pages at any

time

9

Tablespaces

- Age old wisdom if you store a set of pages in

contiguous pages / blocks on disk, then the

transfer time will improve greatly (reduce seek

and latency times) - A tablespace is an allocation of space in

secondary storage - when creating a tablespace, a DBMS requests

contiguous blocks of disk space from the OS - the tablespace appears as a single file to the OS

- the management of physical addresses in a

tablespace is performed by the DBMS - a DBMS can have many tablespaces

- when a table is created, it is placed in a

tablespace, or partitioned between multiple

tablespaces

10

Tablespaces

- CREATE TABLESPACE tspace1

- DATAFILE diskafile1.dat SIZE 20M,

- DATAFILE diskafile2.dat SIZE 40M

- CREATE TABLE temp1 (

- TABLESPACE file1

- STORAGE (initial 6144, next 6144,

- minextents 1, maxextents 5)

- )

- CREATE TABLE temp2 (

- TABLESPACE file2

- STORAGE (initial 12144, next 6144,

- minextents 1, maxextents 5)

- )

tspace1

file1

file2

temp1

temp2

Actual data

11

Tablespaces

- Create table -- assign the tuples in the table

to a file in a tablespace - when a table is created, a chunk of space is

assigned to this table, the size of this chunk is

given by the INITIALEXTENT - when the initial extent becomes full, a new chunk

is allocated, the size of all next chunks is

given by the NEXTEXTENT - can also specify

- maxextents, minextents

- pctincrease (increase the size of extents at each

step) - pctfree (how much of the extent must be left free

12

Data Storage on pages

- Layout of a single disk page (assume fixed size

rows) - To find a specific row in a page, must know

- page number (or block number) BBBBBBBB

- offset (slot number of record within the page)

SSSS - file name (which datafile/tablespace) FFFF

- ROWID is then a unique number BBBBBBBB.SSSS.FFFF

for a row - B,S,F are hexadecimal numbers

...

Header info

row directory

Free space

Data rows

Row N

Row N-1

Row 1

1

2

N

...

13

Pseudocolumns

- Since each tuple has a unique rowid, we can refer

to the tuples with their rowid field

- However, rowid may change if the tuple is stored

at a different location (the valueof its primary

key is a better identifier)

14

Storage of tuples

- The order of tuples in disk may be

- Arbitrary, the order of insertion of tuples. This

is called a HEAP file. - SORTED with respect to a column.

- A sorted file can be searched using binary search

technique - To preserve the sorted order, a number of empty

slots can be preserved for each disk page (based

on some fill factor), and overflow pages can be

used for cases when a specific page is too full

15

Indexing Concepts

- Indexing speeds up access to data residing on

disk - disk access is much slower than main memory

access, by orders of magnitude - goal minimize the number of disk accesses

- Primary access methods rely on the physical

location of data as stored in a relation - finding all tuples with value x requires

reading the entire relation (heap) or binary

search (sorted) - Secondary access methods use a directory to

enable tuples to be found more quickly based on

the value of one or more attributes (keys) in a

tuple

16

Secondary Index

- To create a simple index on column A of table T,

make a list of pairs of the form - (attribute A value, tuple rowid)

- for each tuple in T

- example secondary index for the SSN attribute

- SSN ROWIDs (RID)

- 111-11-1111 AAAAqYAABAAAEPvAAH

- 222-22-2222 AAAAqYAABAAAEPvAAD

- 333-33-3333 AAAAqYAABAAAEPvAAG

- . . . .

- This index is large and stored on the disk

17

Secondary Index

- Suppose a disk page can contain 200 index entries

from a secondary index - To store a secondary index for a relation with 1

million tuples assuming no duplicate values

requires - 1,000,000 / 200 5,000 disk pages

- To find a particular Person tuple in the SSN

index given his or her SSN, you must on average

scan half of the index (5,000 / 2 2500 disk

accesses) - If 20 tuples of the Person relation fit on a

page, then sequential scan of the relation itself

needs to read on average half the relation

(50,000 / 2 25,000 disk accesses) - In this case, the secondary index helps a lot

18

Efficiency

- Need to organize the index information in a way

that makes it efficient to access and search - scanning the index from the beginning is not good

enough - Sorting the secondary index helps, but is not

sufficient - Solution 1 build a tree index

- Solution 2 hash the index

19

Tree Indices

- Want to minimize number of disk accesses.

- each tree node requires a disk access

- therefore, trees that are broad and shallow are

preferred over trees that are narrow and deep - Balanced binary search tree, AVL tree, etc. that

are useful in main memory are too narrow and deep

for secondary storage. - Need an m-way tree where m is large.

- also need a tree that is balanced

20

B-Tree

- A B -Tree of order d is a tree in which

- each node has between d and 2d key values

- the keys values within a node are ordered

- each key in a node has a pointer immediately

before and after it - leaf nodes pointer following a key is pointer to

record with that key - interior nodes pointers point to other nodes in

the tree - the length of the path from root to leaf is the

same for every leaf (balanced) - the root may have fewer keys and pointers

21

Example B -Tree

53

11 30

66 78

66 69 71 76

2 7

11 15 22

30 41

53 54 63

78 84 93

B-Tree of order 2 each node can hold up to four

keys

22

Searching in B-Trees

- Search(T, K) / searching for tuple with key

value K in tree T / - if T is a non-leaf node

- search for leftmost key K in node T such

that K lt K - if such a K exists

- ptr pointer in T immediately before K

- return the result of Search(ptr, K)

- if no such K exists

- ptr rightmost pointer in node T

- return the result of Search(ptr, K)

- else if T is a leaf node

- search for K in T

- if found, return the pointer following K

- else return NULL / K not in tree T /

23

Insert Algorithm

- To insert a new tuple with key K and address

rowid - use a modified Search algorithm to look for the

leaf node into which key K should be inserted - insert key K followed by address rowid into the

proper place in this leaf node to maintain order

and rebalance the tree if necessary - Rebalancing the tree

- if the leaf node has room for K and rowid, then

no rebalancing is needed - if the leaf node has no room for K and rowid,

then it is necessary to create a new node and

rebalance the tree

24

Rebalancing Algorithm

- Assume that K and rowid are to be inserted into

leaf node L, but L has no more room. - create a new empty node

- put K and rowid in their proper place among the

entries in L to maintain the key sequence order

-- there are 2d1 keys in this sequence - leave the first d keys with their rowids in node

L and move the final d1 keys with their rowids

to the new node - copy the middle key K from the original sequence

into the parent node followed by a pointer to the

new node - put them immediately after the pointer to node L

in the parent node - apply this algorithm recursively up the tree as

needed

25

Insert Example

Insert record with key 57 BTree of order 2

26

Another Insert Example

Insert record with key 65 BTree of order 2

27

Insertion Algorithm (1)

Insert (T, K, rowid, child) / insert new tuple

with key K and address rowid into tree T / /

child is NULL initially / / handle an

interior node of the B-Tree / if T is a

non-leaf node find j such that Kj ? K ? Kj1

for keys K1, , Kn in T ptr pointer between

Kj and Kj1 Insert (ptr, K, rowid, child) if

child is NULL then return / must insert key

and child pointer into T / if T has space for

another key and pointer put child.key and

child.ptr into T at proper place child

NULL return

28

Insert Algorithm (2)

else / must split node T / construct

sequence of keys and pointers from T

with child.key and child.ptr inserted at

proper place first d keys and d1 pointers

from sequence stay in T last d keys and d1

pointers from sequence move to a new node

N child.key middle key from

sequence child.ptr pointer to N if T is

root create new node containing pointer to T,

child.key, and child.ptr make this node

the new root node of the B-Tree return If T

is a leaf node / handle leaf node of the B-Tree

/ if T has space for another key and

rowid put K and rowid into T at proper

place return

29

Insert Algorithm (3)

else / must split leaf node T / construct

sequence of keys and pointers from T with K

and rowid inserted at proper place first d

keys and d1 pointers from sequence stay in

T last d1 keys and d2 pointers from sequence

move to a new node N child.key first

key in new node N child.ptr pointer to

N if T was root create new node containing

pointer to T, child.key, and

child.ptr make this node the new root node of

the B-Tree return

30

Deletion

- Assume that a tuple with key K and address rowid

is to be deleted from leaf node L. There is a

problem if after removing K and rowid from L it

has fewer than d keys remaining. To fix this - if a neighbor node has at least d1 keys, then

evenly redistribute the keys and rowids with the

neighbor node and adjust the separator key in the

parent node - otherwise, combine node L with a neighbor node

and discard the empty node - the parent node now needs one less key and node

pointer, so recursively apply this algorithm up

the tree until all nodes have enough keys and

pointers

31

Deletion Example

Redistribute between the second and third leaf

nodes.

53

11 30

66 78

30

22

66 69 71 76

2 7

53 54 63

78 84 93

30 41

11 15 22

41

Delete key 30

B-Tree of order 2

32

Another Deletion Example

Cannot redistribute, so combine the left two leaf

nodes

53

11 30

66 78

66 69 71 76

2 7

11 15

30 41

53 54 63

78 84 93

2 11 15

B-Tree of order 2 Delete 7 from the B-Tree

33

Another Deletion Example

53

30

66 78

66 69 71 76

11 15

30 41

53 54 63

78 84 93

2 11 15

B-Tree of order 2 Delete 7 from the B-Tree

34

Deletion Algorithm (1)

Delete (Parent, T, K, oldchild) / delete key K

from Tree T / / Parent is parent node for T,

initially NULL / / oldchild is discarded child

node, initially NULL / / handle an interior

node of the B-Tree / if T is a non-leaf

node find j such that Kj ? K ? Kj1 for keys

K1, , Kn in T ptr pointer between Kj and

Kj1 Delete (T, ptr, K, oldchild) if oldchild

is NULL then return / must handle discarded

child node of T / remove oldchild and adjacent

key from T if T still has enough keys and

pointers oldchild NULL return

35

Deletion Algorithm (2)

/ must fix node T / get a sibling node S of T

using Parent if S has entry keys / redistribute

S and T / redistribute keys adjacent

pointers evenly between S T K middle

unused key from the redistribution replace the

key in Parent between the pointers to S and T

with K oldchild NULL return else / merge

S and T / R S or T, whichever is to the

right of the other oldchild R copy key from

Parent node that is immediately before R to

the end of the node on the left move all keys

adjacent pointers from R to node to the

left discard node R return

36

Deletion Algorithm (3)

/ handle leaf node of the B-Tree / if T is a

leaf node if T has extra keys remove key K

from T oldchild NULL return / must fix

node T / get a sibling node S of T using

Parent if S has entry keys / redistribute S and

T / redistribute keys and adjacent pointers

evenly between S T K first key from node S

or T, whichever is to the right of the

other replace the key in Parent between the

pointers to S and T with K oldchild

NULL return

37

Deletion Algorithm (4)

else / merge S and T / R S or T,

whichever is to the right of the

other oldchild R move all keys and

adjacent rowids from R to the node on the

left discard node R return

38

Analysis of B-Trees

- Every access to a node is an access to disk and

hence is expensive. - Analysis of Find

- if there are n tuples in the tree, the height of

the tree, h, is bounded by h ceil(logd (n)) - example d 50, tree contains 1 million records,

then h 4 - Analysis of Insert and Delete

- finding the relevant node required h accesses

- rebalancing required O(h) accesses

- therefore, the total is O(logd n) accesses

39

B-tree

- The create index command creates a B-tree index

- CREATE INDEX age_idx ON people(age)

- TABLESPACE file1

- PCTFREE 70

- PCTFREE defines how full each node should be

- Optimal operation is usually with nodes about 70

full - To reduce disk accesses for sequential

processing, pointers are added to the leaf nodes

that point to the previous and next leaf nodes

40

A B-Tree Example

- Givens

- disk page has capacity of 4K bytes

- each rowid takes 6 bytes and each key value takes

2 bytes - each node is 70 full

- need to store 1 million tuples

- Leaf node capacity

- each (key value, rowid) pair takes 8 bytes

- disk page capacity is 4K, so (41024)/8 512

(key value, rowid) pairs per leaf page - in reality there are extra headers and pointers

that we will ignore - Hence, the degree for the tree is about 256

41

Example Continued

- If all pages are 70 full, each page has about

- 5120.7 359 entries

- To store 1 million tuples, requires

- 1,000,000 / 359 2786 pages at the leaf level

- 2789 / 359 8 pages at next level up

- 1 root page pointing to those 8 pages

- Hence, we have a B-tree with 3 levels, and a

total of 278681 2795 disk pages

42

Duplicate Key Values

- Duplicate key values in a B-tree can be handled.

- (key, rowid) pairs for same key value can span

multiple index nodes - Search algorithm needs to be changed

- find leftmost entry at the leaf level for the

searched item, then scan the index from left to

right following leaf level pointers - The insertion and deletion algorithms also

require small changes - they are more costly and hence not always

implemented in practice

43

Bitmap Index

- For some attribute x with possible values A,B and

C - create a list of all tuples in the relation and

store their rowids at some known location - build an index for each value, for example for

value A - the bitmap contains a 1 at location k if tuple

k has value A for this attribute - otherwise it contains a 0

- indices with a lot of 0s are called sparse and

can be compressed

44

Bitmap Example

1 0 0 1 0 . . .

Bitmap for A10

Bitmap for A15

0 1 1 0 1 . . .

. . .

Tuple List

. . .

Tuples

45

Querying with Bitmap Index

- Suppose have bitmap indices on attributes x and y

- Find if xA or xB, take the bitmaps for

both values and do a logical or - Find if xA and yltgtB, compute the logical

inverse of bitmap for yB and then do a logical

and with bitmap for xA - Bitmaps depend on the actual row ids of tuples

- If a tuple is deleted, its location can be

removed or swapped by another tuple (costly if

the index is compressed) - Too many updates or attributes with too many

values lead to bitmaps that are not cost effective

46

B-tree index on attributes A1,,Ak

Primary access methods Heap tuples are placed

in the order they are inserted Cluster tuples

with the same values for attributes A1,,Ak are

placed close to each other on disk Hash tuples

with the same hash value are placed close to each

other on disk

Row directory

Tuple 11, Tuple 12, , Tuple 20

Secondary access methods The primary

access method can be anything. Additional indexes

are created with entries that point to actual

tuples

Row directory

Tuple 1, Tuple 2, , Tuple 10

47

Clusters

- A cluster is a primary access method, it changes

the placement of tuples on disk - CREATE CLUSTER personnel

- (department_number integer)

- SIZE 512

- STORAGE (INITIAL 100K NEXT 50K)

- In ORACLE, a cluster can be generated for many

tables containing the same set of attributes - All tuples in different tables from the same

cluster will be placed closed to each other on

disk (i.e. on the same page and on consecutive

pages)

48

Adding tables to a cluster

- CREATE CLUSTER movies.actorcluster

- (actorid INTEGER)

- CREATE INDEX actorcluster_idx

- ON CLUSTER actorcluster

- CREATE TABLE movies.actors (

- actorid INTEGER NOT NULL,

- stagename VARCHAR2(50) NOT NULL,

- datesofwork VARCHAR2(10),

- birthname VARCHAR2(20),

- firstname VARCHAR2(20),

- CONSTRAINT actor_pidx PRIMARY KEY (actorid)

- ) CLUSTER actorcluster(actorid)

- CREATE TABLE movies.casts (

- filmid INTEGER NOT NULL,

- actorid INTEGER NOT NULL,

- roletype VARCHAR2(30) NOT NULL,

- FOREIGN KEY (filmid)

- REFERENCES movies.films(filmid)

- ON DELETE CASCADE,

- FOREIGN KEY (actorid)

- REFERENCES movies.actors(actorid)

- ON DELETE CASCADE,

- CONSTRAINT cast_pidx

- PRIMARY KEY (filmid, actorid, role)

- ) CLUSTER actorcluster(actorid)

49

Clusters

- Each table may belong to at most one cluster.

- Suppose we retrieve an employee tuple with

deptno10. We find a page with this employee and

read it into memory. - If there are 20 employees in the department 10,

then chances are that all these employees are on

the same page. - To find all employees in department 10 through

20, we can simply read the necessary pages. - A cluster is not an index, but we can also create

a B-tree index on a cluster - CREATE INDEX idx_personnel ON CLUSTER personnel

50

Hashing

- Hashing is another index method that changes the

way tuples are placed on disk - A hash index on attribute A allocates an initial

set of pages to store the relation

1

Hash function h ranges between 1 and n

New tuple T with key A

2

3

. .

If multiple tuples map to the same location/page,

this is called a collision. These tuples are

placed in an overflow page.

h(T. A)

n

51

Hashing

- The number of key values is given by HASHKEYS

- Hashing is useful for finding a tuple with a

given key value - Hashing is not as useful for ranges or key values

or for sequential processing of tuples in key

order - In the best case, a tuple is found with one disk

access - In the average case, expect 1.2 disk accesses or

more (because of overflow pages)

52

Extensible Hashing

- Assume that we originally allocate 2n pages for

the hash - Distribute tuples according to hash function mod

2 - hash the key to produce a bit string and then use

the least significant bit - If a disk page becomes full, double the directory

size instead of creating overflow buckets

Page 0 Page 1 Hash directory

0 1

tuples

53

Extensible Hashing

- Insert into a full Page 1 double the directory

size

The full page is split into two. Its contents

are rehashed between the original page and the

new page, using one additional bit from the

string produced by the hash function

Page 0 Page 1 Page 2 Page 3 Hash directory

00 01

10 11

New Page

Just created a new directory entry for Page 3.

Since Page 1 is not full, this directory entry

points to Page 1. The contents of Page 1 will

be rehashed with Page 3 when Page 1 becomes

full rather than creating a new page.

54

Extensible Hashing

- As the hash directory size grows it must be

stored on the disk - At most two disk accesses are needed to retrieve

any tuple - this is a better upper bound than for B-Trees

- However, extensible hashing is not as good as

B-Trees for range queries and sequential

processing where you want to process all the

tuples of a relation - Consequently, B-Trees are used more frequently

than Extensible Hashing