Objectives of Test Planning - PowerPoint PPT Presentation


1

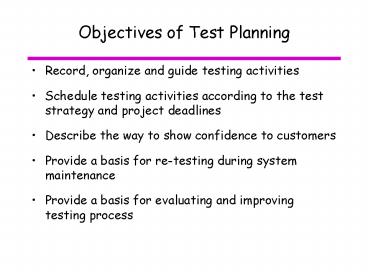

Objectives of Test Planning

- Record, organize and guide testing activities
- Schedule testing activities according to the test strategy and project deadlines
- Describe the way to show confidence to customers
- Provide a basis for re-testing during system maintenance
- Provide a basis for evaluating and improving the testing process

2

IEEE 829 Standard Test Plan

- Revision 1998

- Describes the scope, approach, resources, and schedule of intended testing activities
- Identifies
  - test items,
  - features to be tested,
  - testing tasks,
  - who will do each task, and
  - any risks requiring contingency planning.

3

IEEE 829 Test Plan Outline

- Test plan identifier

- Introduction (refers to the project plan, quality assurance plan, configuration management plan, etc.)
- Test items: identify test items, including their version/revision level (e.g., requirements, design, code, etc.)
- Features to be tested

4

IEEE 829 Test Plan Outline

- Features not to be tested

- Testing approach
  - Significant constraints on testing (test item availability, testing-resource availability, deadlines)
- Item pass/fail criteria
- Suspension criteria and resumption requirements

5

IEEE 829 Test Plan Outline

- Test deliverables (e.g., test design specifications, test case specifications, test procedure specifications, test logs, test incident reports, test summary report)
- Testing tasks

- Environmental needs

- Responsibilities

6

IEEE 829 Test Plan Outline

- Staffing and training needs

- Schedule

- Risks and contingencies

- Approvals

- References

7

IEEE 829 Test Design Specification

- Test design specification identifier

- Features to be tested
  - Features addressed by the document
- Approach refinements
- Test identification
  - Identifier and description of the test cases associated with the design
- Features pass/fail criteria
  - Pass/fail criteria for each feature

8

IEEE 829 Test Case Specification

- Test case specification identifier

- Test items
  - Items and features to be exercised by the test case
- Input specifications
  - Input required to execute the test case: databases, files, etc.
- Output specifications
- Environmental needs
- Special procedural requirements
- Inter-case dependencies

9

IEEE 829 Test Procedure Outline

- Purpose
- Special requirements
- Procedure steps
  - Log: how to log results
  - Set up: how to prepare for testing
  - Start: how to begin procedure execution
  - Proceed: procedure actions
  - Measure: how test measurements will be made
  - Shut down: how to suspend the testing procedure
  - Restart: how to resume the testing procedure
  - Stop: how to bring execution to an orderly halt
  - Wrap up: how to restore the environment
  - Contingencies: how to deal with anomalous events during execution

10

IEEE 829 Test Log Outline

- Test log identifier
- Description
  - Information on all the entries in the log
- Activity and event entries
  - Execution description
  - Procedure results: observable results (e.g., messages), outputs
  - Environmental information specific to the entry
  - Anomalous events (if any)
  - Incident report identifiers (identifiers of any test incident reports generated)

11

IEEE 829 Test Incident Report (1)

- Test incident report identifier

- Summary: items involved, references to linked documents (e.g., procedure, test case, log)
- Incident description
  - Inputs
  - Expected results
  - Actual results
  - Date and time
  - Anomalies
  - Procedure step
  - Environment
  - Attempts to repeat
  - Testers
  - Observers

12

IEEE 829 Test Incident Report (2)

- Impact on testing process
  - S (Show stopper): testing totally blocked; bypass needed
  - H (High): major portion of the test is partially blocked; test can continue with severe restrictions; bypass needed
  - M (Medium): test can continue but with minor restrictions; no bypass needed
  - L (Low): testing not affected; problem is insignificant

13

IEEE 829 Test Summary Report

- Test summary report identifier

- Summary: summarize the evaluation of the test items; references to plans, logs, incident reports
- Variances of test items (from specification), plan, procedure, ...
- Comprehensive assessment: of the testing process against the comprehensiveness criteria specified in the test plan
- Summary of results: issues (resolved, unresolved)
- Evaluation: overall evaluation of each test item, including its limitations
- Summary of activities
- Approvals: names and titles of all persons who must approve the report

14

System Testing

- Performed after the software has been assembled.

- Test of entire system, as customer would see it.

- High-order testing criteria should be expressed in the specification in a measurable way.

15

System Testing

- Check whether the system satisfies requirements for
  - Functionality
  - Reliability
  - Recovery
  - Multitasking
  - Device and configuration
  - Security
  - Compatibility
  - Stress
  - Performance
  - Serviceability
  - Ease/correctness of installation

16

System Testing

- Acceptance tests
  - System tests carried out by customers or under the customers' supervision
  - Verify whether the system works according to the customers' expectations
- Common types of acceptance tests
  - Alpha testing: end-user testing performed on a system that may have incomplete features, within the development environment
    - Performed by an in-house testing panel including end users
  - Beta testing: end-user testing performed within the user environment

17

Functional Testing

- Ensure that the system supports its functional requirements.
- Test cases are derived from the statement of requirements, in either
  - traditional form, or
  - use cases.

18

Deriving Test Cases from Requirements

- Involves clarifying and restating the requirements to put them into a testable form.
  - Obtain a point-form formulation
    - Enumerate single requirements
    - Group related requirements
- For each requirement:
  - Create a test case that demonstrates the requirement.
  - Create a test case that attempts to falsify the requirement.
    - For example, try something forbidden.
  - Test boundaries and constraints when possible.

19

Deriving Test Cases from Requirements

- Example: requirements for a video rental system
  - The system shall allow rental and return of films.
  - 1. If a film is available for rental, then it may be lent to a customer.
    - 1.1 A film is available for rental until all copies have been simultaneously borrowed.
  - 2. If a film was unavailable for rental, then returning the film makes it available.
  - 3. The return date is established when the film is lent and must be shown when the film is returned.
  - 4. It must be possible for an inquiry on a rented film to reveal the current borrower.
  - 5. An inquiry on a member will reveal any films they currently have on rental.

20

Deriving Test Cases from Requirements

- Test situations for requirement 1
  - Attempt to borrow an available film.
  - Attempt to borrow an unavailable film.
- Test situations for requirement 1.1
  - Attempt to borrow a film for which there are multiple copies, all of which have been rented.
  - Attempt to borrow a film for which all copies but one have been rented.

21

Deriving Test Cases from Requirements

- Test situations for requirement 2
  - Borrow an unavailable film.
  - Return a film and borrow it again.
- Test situations for requirement 3
  - Borrow a film, return it, and check dates.
  - Check the date on a non-returned film.

22

Deriving Test Cases from Requirements

- Test situations for requirement 4
  - Inquiry on a rented film.
  - Inquiry on a returned film.
  - Inquiry on a film that has just been returned.
- Test situations for requirement 5
  - Inquiry on a member with no films.
  - Inquiry on a member with one film.
  - Inquiry on a member with multiple films.
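A minimal Python sketch of how some of these test situations could be automated. `VideoStore`, its methods, and the film, member, and date values are hypothetical stand-ins for the real system; each test either demonstrates a requirement or attempts to falsify it by trying something forbidden.

```python
class VideoStore:
    """Hypothetical, in-memory model of the video rental requirements."""

    def __init__(self):
        self.copies = {}  # film -> number of copies owned
        self.loans = {}   # film -> list of (member, return_date) pairs

    def add_film(self, film, copies):
        self.copies[film] = copies
        self.loans[film] = []

    def is_available(self, film):
        # Requirement 1.1: available until all copies are simultaneously borrowed.
        return len(self.loans[film]) < self.copies[film]

    def lend(self, film, member, return_date):
        # Requirement 1: only an available film may be lent.
        if not self.is_available(film):
            raise ValueError(f"{film!r} is not available for rental")
        # Requirement 3: the return date is established when the film is lent.
        self.loans[film].append((member, return_date))

    def return_film(self, film, member):
        # Requirement 3: the return date is shown when the film comes back.
        for i, (borrower, return_date) in enumerate(self.loans[film]):
            if borrower == member:
                del self.loans[film][i]
                return return_date
        raise ValueError(f"{member!r} has not borrowed {film!r}")

    def borrowers(self, film):
        # Requirement 4: an inquiry on a rented film reveals the borrower(s).
        return [member for member, _ in self.loans[film]]

    def films_on_rental(self, member):
        # Requirement 5: an inquiry on a member reveals their rented films.
        return sorted(f for f, loans in self.loans.items()
                      if any(m == member for m, _ in loans))


def lend_is_refused(store, film, member):
    """Falsification helper: True if the system refuses the loan."""
    try:
        store.lend(film, member, "2030-01-01")
    except ValueError:
        return True
    return False


def test_requirement_1_and_1_1():
    store = VideoStore()
    store.add_film("Metropolis", copies=2)
    store.lend("Metropolis", "ann", "2030-01-08")       # all copies but one rented
    assert store.is_available("Metropolis")
    store.lend("Metropolis", "bob", "2030-01-08")       # all copies rented
    assert lend_is_refused(store, "Metropolis", "cat")  # try something forbidden


def test_requirement_2():
    store = VideoStore()
    store.add_film("Nosferatu", copies=1)
    store.lend("Nosferatu", "ann", "2030-01-08")        # film becomes unavailable
    assert not store.is_available("Nosferatu")
    assert store.return_film("Nosferatu", "ann") == "2030-01-08"  # req. 3
    store.lend("Nosferatu", "bob", "2030-01-15")        # borrow it again
    assert store.borrowers("Nosferatu") == ["bob"]      # req. 4
```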

23

Deriving Test Cases from Use Cases

- For all use cases:
  - Develop a graph of scenarios
  - Determine all possible scenarios
  - Analyze and rank scenarios
  - Generate test cases from scenarios to meet a coverage goal
  - Execute test cases

24

Scenario Graph

- Generated from a use case
- Nodes correspond to points where the system waits for an event
  - environment event, system reaction
- There is a single starting node
- The end of the use case is a finish node
- Edges correspond to event occurrences
  - May include conditions and looping edges
- Scenario
  - Path from the starting node to a finish node

25

Use Case Scenario Graph (1)

- Title: User login
- Actors: User
- Precondition: System is ON
- 1. User inserts a card
- 2. System asks for personal identification number (PIN)
- 3. User types PIN
- 4. System validates user identification
- 5. System displays a welcome message to user
- 6. System ejects card
- Postcondition: User is logged in

[Figure: scenario graph for the User login use case: main-course nodes 1 to 6 plus the alternative-course nodes, with edges labeled "1a: card is not valid", "4a: PIN invalid and attempts < 4", and "4b: PIN invalid and attempts = 4".]

26

Use Case Scenario Graph (2)

- Alternatives
  - 1a: Card is not valid
    - 1a.1: System emits alarm
    - 1a.2: System ejects card
  - 4a: User identification is invalid AND number of attempts < 4
    - 4a.1: Ask for PIN again and go back
  - 4b: User identification is invalid AND number of attempts = 4
    - 4b.1: System emits alarm
    - 4b.2: System ejects card


27

Scenarios

- Paths from start to finish

- The number of times loops are taken needs to be restricted to keep the number of scenarios finite.
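The loop bound can be made concrete with a small path enumerator. This sketch assumes a hand-made adjacency encoding of the User login scenario graph. The node names follow the use case steps, while `LOGIN_GRAPH`, `enumerate_scenarios`, and the synthetic `END` node are illustrative. The enumerator caps how often any node may be revisited.

```python
from typing import Dict, List

# Hypothetical encoding of the "User login" scenario graph:
# node -> successor nodes; "END" is the finish node.
LOGIN_GRAPH: Dict[str, List[str]] = {
    "1": ["2", "1a.1"],          # card valid / 1a: card is not valid
    "1a.1": ["1a.2"],            # emit alarm, then eject card
    "1a.2": ["END"],
    "2": ["3"],
    "3": ["4"],
    "4": ["5", "4a.1", "4b.1"],  # PIN valid / 4a: retry / 4b: give up
    "4a.1": ["3"],               # ask for PIN again and go back (loop edge)
    "4b.1": ["4b.2"],
    "4b.2": ["END"],
    "5": ["6"],
    "6": ["END"],
}


def enumerate_scenarios(graph, start="1", finish="END", max_visits=2):
    """Return every start-to-finish path in which no node is visited more
    than max_visits times; the bound keeps the set of scenarios finite."""
    scenarios = []

    def walk(node, path, visits):
        if node == finish:
            scenarios.append(path)
            return
        for nxt in graph[node]:
            if nxt == finish or visits.get(nxt, 0) < max_visits:
                walk(nxt, path + [nxt], {**visits, nxt: visits.get(nxt, 0) + 1})

    walk(start, [start], {start: 1})
    return scenarios
```

With `max_visits=1` only the three loop-free scenarios remain (main course, 1a, 4b). Each extra allowed visit adds two scenarios that go round the 4a retry loop once more. The graph ignores the attempt counter in the 4a/4b guards, so the bound is a testing choice rather than the system's real limit.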

28

Scenario Ranking

- If there are too many scenarios to test:
  - Ranking may be based on criticality and frequency
  - Can use the operational profile, if available
    - Operational profile: statistical measurement of typical user activity of the system
    - Example: what percentage of users would typically be using any particular feature at any time
- Always include the main scenario
  - It should be tested first
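One simple way to combine criticality and frequency is to rank by their product. The sketch below assumes that weighting, a 1 to 5 criticality scale, and invented frequencies standing in for an operational profile. It always puts the main scenario first.

```python
def rank_scenarios(scenarios, main="main"):
    """Order scenarios by criticality x frequency, with the main scenario first.

    scenarios: list of dicts with keys "name", "criticality", "frequency".
    """
    by_risk = sorted(scenarios,
                     key=lambda s: s["criticality"] * s["frequency"],
                     reverse=True)
    # Stable sort: moves the main scenario to the front, keeps the rest in order.
    by_risk.sort(key=lambda s: s["name"] != main)
    return [s["name"] for s in by_risk]


# Hypothetical values: frequency from an assumed operational profile,
# criticality on an assumed 1 (low) to 5 (high) scale.
LOGIN_SCENARIOS = [
    {"name": "card_invalid", "criticality": 2, "frequency": 0.02},
    {"name": "pin_retry", "criticality": 2, "frequency": 0.07},
    {"name": "pin_blocked", "criticality": 5, "frequency": 0.01},
    {"name": "main", "criticality": 3, "frequency": 0.90},
]
```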

29

Test Case generation

- Satisfy a coverage goal, for example:
  - All branches in the graph of scenarios (minimal coverage goal)
  - All scenarios
  - The n most critical scenarios
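For the minimal goal (all branches), a greedy set-cover heuristic picks scenarios until every edge of the scenario graph is covered. The scenario list is a hypothetical hand encoding of the User login paths with the 4a loop taken at most once. Greedy selection is a heuristic and is not guaranteed to find the smallest covering set in general.

```python
# Hypothetical User login scenarios (4a retry loop taken at most once).
LOGIN_SCENARIOS = [
    ["1", "2", "3", "4", "5", "6", "END"],                          # main course
    ["1", "1a.1", "1a.2", "END"],                                   # 1a
    ["1", "2", "3", "4", "4b.1", "4b.2", "END"],                    # 4b
    ["1", "2", "3", "4", "4a.1", "3", "4", "5", "6", "END"],        # 4a, then main
    ["1", "2", "3", "4", "4a.1", "3", "4", "4b.1", "4b.2", "END"],  # 4a, then 4b
]


def branches(path):
    """Edges (branches) of the scenario graph traversed by one path."""
    return set(zip(path, path[1:]))


def select_branch_covering(scenarios):
    """Greedy set cover: repeatedly pick the scenario that covers the most
    not-yet-covered branches until every branch is covered."""
    uncovered = set().union(*(branches(p) for p in scenarios))
    chosen = []
    while uncovered:
        best = max(scenarios, key=lambda p: len(branches(p) & uncovered))
        chosen.append(best)
        uncovered -= branches(best)
    return chosen
```

Here three of the five scenarios cover all 14 branches, while the "all scenarios" goal would need all five.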

30

Example of Test Case

- Test case: TC1
  - Goal: Test the main course of events for the ATM system.
  - Scenario reference: 1
  - Setup: Create a card 2411 with PIN 5555 as valid user identification; System is ON
  - Course of test case
  - Pass criteria: User is logged in
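TC1 can be written as an executable test. The `Atm` class is a hypothetical implementation of the User login use case, included only so the test has something to run against. The test encodes TC1's setup, course, and pass criteria.

```python
class Atm:
    """Hypothetical ATM implementing the User login use case (not from the slides)."""

    MAX_ATTEMPTS = 4  # 4a: attempts < 4 -> ask again; 4b: attempts = 4 -> alarm

    def __init__(self, accounts):
        self.accounts = accounts  # card number -> PIN (valid user identifications)
        self.logged_in = False
        self.alarm = False
        self.card_ejected = False
        self.messages = []

    def login(self, card, typed_pins):
        """Run the use case for one inserted card and the PINs the user types."""
        if card not in self.accounts:                  # 1a: card is not valid
            self.alarm = True                          # 1a.1: emit alarm
        else:
            for attempts, pin in enumerate(typed_pins, start=1):  # steps 2-3
                if pin == self.accounts[card]:         # step 4: validation succeeds
                    self.logged_in = True
                    self.messages.append("Welcome")    # step 5
                    break
                if attempts >= self.MAX_ATTEMPTS:      # 4b.1: emit alarm
                    self.alarm = True
                    break
                # 4a.1: otherwise ask for the PIN again and go back
        self.card_ejected = True                       # step 6 / 1a.2 / 4b.2
        return self.logged_in


def test_tc1_main_course():
    # Setup: card 2411 with PIN 5555 is a valid user identification.
    atm = Atm({"2411": "5555"})
    # Course of test case: insert card 2411, type PIN 5555.
    logged_in = atm.login("2411", ["5555"])
    # Pass criteria: user is logged in (card ejected, no alarm).
    assert logged_in and atm.card_ejected and not atm.alarm
```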

31

Forced-Error Test (FET)

- Objective: to force the system into all error conditions
  - Basis: the set of error messages for the system
- Checks
  - Consistency of error-handling design and communication methods
  - Detection and handling of common error conditions
  - System recovery from each error condition
  - Correction of unstable states caused by errors

32

Forced-Error Test (FET)

- Verification of error messages to ensure that
  - The message matches the type of error detected.
  - The description of the error is clear and concise.
  - The message does not contain spelling or grammatical errors.
  - The user is offered reasonable options for getting around or recovering from the error condition.

33

Forced-Error Test (FET)

- How to obtain a list of error conditions?
  - Obtaining the list of error messages from the developers
  - Interviewing the developers
  - Inspecting the string data in a resource file
  - Gathering information from specifications
  - Using a utility to extract strings out of the binary or scripting sources
  - Analyzing every possible event with an eye to error cases
  - Using your experience
  - Using a standard valid/invalid input test matrix

34

Forced-Error Test (FET)

- For each error condition:
  - Force the error condition.
  - Check the error detection logic.
  - Check the handling logic.
    - Does the application offer adequate forgiveness and allow the user to recover from mistakes gracefully?
    - Does the application itself handle the error condition gracefully?
    - Does the system recover gracefully?
    - When the system is restarted, do all services restart successfully?

35

Forced-Error Test (FET)

- Check the error communication
  - Determine whether an error message appears
  - Analyze the accuracy of the error message
  - Note that the communication can be in another medium, such as an audio or visual cue
- Look for further problems
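The forced-error loop can be sketched as a table that maps an input forcing each error condition to the error type that should be detected. Everything here (`parse_amount`, the error codes, the message texts, the limit of 500) is hypothetical, and the check that a message offers a way to recover is deliberately crude.

```python
# Hypothetical error catalogue for a withdrawal-amount field (not from the slides).
ERROR_MESSAGES = {
    "not_a_number": "The amount must be a whole number. Please re-enter the amount.",
    "not_positive": "The amount must be greater than zero. Please re-enter the amount.",
    "over_limit": "The amount exceeds the daily limit of 500. Enter a smaller amount.",
}


class AmountError(ValueError):
    def __init__(self, code):
        super().__init__(ERROR_MESSAGES[code])
        self.code = code


def parse_amount(text, limit=500):
    """Hypothetical function under test."""
    try:
        amount = int(text)
    except ValueError:
        raise AmountError("not_a_number") from None
    if amount <= 0:
        raise AmountError("not_positive")
    if amount > limit:
        raise AmountError("over_limit")
    return amount


# Forced-error table: an input that forces each condition -> expected error type.
FORCED_ERRORS = {"abc": "not_a_number", "0": "not_positive", "501": "over_limit"}


def run_forced_error_tests():
    problems = []
    for bad_input, expected in FORCED_ERRORS.items():
        try:
            parse_amount(bad_input)                     # force the error condition
        except AmountError as err:
            if err.code != expected:                    # check detection logic
                problems.append((bad_input, "wrong error: " + err.code))
            elif "enter" not in str(err).lower():       # crude: is a way out offered?
                problems.append((bad_input, "message offers no recovery option"))
        else:
            problems.append((bad_input, "error not detected"))
        if parse_amount("100") != 100:                  # does the system recover?
            problems.append((bad_input, "valid input rejected after error"))
    # Every catalogued error message should have been forced at least once.
    never_forced = set(ERROR_MESSAGES) - set(FORCED_ERRORS.values())
    return problems, never_forced
```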

36

Usability Testing

- Checks the ability to learn and use the system to perform the required tasks
- Usability requirements are usually not explicitly specified
- Factors influencing ease of use of the system
  - Accessibility: Can users enter, navigate, and exit the system with relative ease?
  - Responsiveness: Can users do what they want, when they want, in an intuitive/convenient way?
  - Efficiency: Can users carry out tasks in an optimal fashion with respect to time, number of steps, etc.?
  - Comprehensibility: Can users quickly grasp how to use the system, its help functions, and associated documentation?

37

Usability Testing

- Typical activities for usability testing
  - Controlled experiments in simulated working environments using novice and expert end users
  - Post-experiment protocol analysis by human factors experts, psychologists, etc.
- Main objective: collect data to improve the usability of the software

38

Installability Testing

- Focus on requirements related to installation
  - relevant documentation
  - installation processes
  - supporting system functions

39

Installability Testing

- Examples of test scenarios
  - Install and check under the various options given (e.g., minimum setup, typical setup, custom setup).
  - Install and check under minimum configuration.
  - Install and check on a clean system.
  - Install and check on a dirty (loaded) system.
  - Install upgrades targeted to an operating system.
  - Install upgrades targeted to new functionality.
  - Reduce the amount of free disk space during installation.
  - Cancel installation midway.
  - Change the default target installation path.
  - Uninstall and check whether all program files and (empty) install directories have been removed.

40

Installability Testing

- Test cases should include
  - Start/entry state
  - Requirement to be tested (goal of the test)
  - Install/uninstall scenario (actions and inputs)
  - Expected outcome (final state of the system)
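A sketch of an uninstall check written in this test-case shape, assuming a hypothetical installer: it snapshots a clean directory, installs, uninstalls, and reports anything left behind. `fake_install` and `fake_uninstall` stand in for the real product's installer, and `InstallTestCase` is an illustrative record type.

```python
import os
import tempfile
from dataclasses import dataclass, field


@dataclass
class InstallTestCase:
    start_state: str                               # start / entry state
    goal: str                                      # requirement to be tested
    scenario: list = field(default_factory=list)   # actions and inputs
    expected_outcome: str = ""                     # final state of the system


def snapshot(root):
    """All files and directories below root, as relative paths."""
    entries = set()
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            entries.add(os.path.relpath(os.path.join(dirpath, name), root))
    return entries


# Hypothetical installer/uninstaller standing in for the product's real ones.
def fake_install(target):
    os.makedirs(os.path.join(target, "app", "bin"))
    with open(os.path.join(target, "app", "bin", "tool"), "w") as f:
        f.write("binary")


def fake_uninstall(target, forget_empty_dir=False):
    os.remove(os.path.join(target, "app", "bin", "tool"))
    os.rmdir(os.path.join(target, "app", "bin"))
    if not forget_empty_dir:
        os.rmdir(os.path.join(target, "app"))


def leftovers_after_uninstall(install, uninstall):
    """Install into a clean directory, uninstall, and report what is left behind."""
    with tempfile.TemporaryDirectory() as target:
        before = snapshot(target)
        install(target)
        uninstall(target)
        return snapshot(target) - before


UNINSTALL_CASE = InstallTestCase(
    start_state="clean system",
    goal="uninstall removes all program files and install directories",
    scenario=["install with default options", "uninstall"],
    expected_outcome="no files or directories left behind",
)
```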

41

Serviceability Testing

- Focus on maintenance requirements
  - Change procedures (for various adaptive, perfective, and corrective service scenarios)
  - Supporting documentation
  - All system diagnostic tools

42

Performance/Stress/Load Testing

- Performance testing
  - Evaluate compliance with specified performance requirements for
    - Throughput
    - Response time
    - Memory utilization
    - Input/output rates
    - etc.
  - Look for resource bottlenecks

43

Performance/Stress/Load Testing

- Stress testing: focus on system behavior at, near, or beyond capacity conditions
  - Push the system to failure
  - Often done in conjunction with performance testing
  - Emphasis near specified load and volume boundaries
  - Checks for graceful failures and non-abrupt performance degradation

44

Performance/Stress/Load Testing

- Load testing: verifies handling of a particular load while maintaining acceptable response times
  - Done in conjunction with performance testing

45

Performance Testing Phases

- Planning phase

- Testing phase

- Analysis phase

46

Performance Testing Process: Planning Phase

- Define objectives, deliverables, expectations
- Gather system and testing requirements
  - environment and resources
  - workload (peak, low)
  - acceptable response time
- Select performance metrics to collect
  - e.g., transactions per second (TPS), hits per second, concurrent connections, throughput, etc.
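Once latencies are recorded, the selected metrics are straightforward to compute. This sketch assumes one latency per completed transaction and a fixed measurement window; the nearest-rank 95th percentile is one of several common percentile definitions, and `performance_metrics` is an illustrative name.

```python
def performance_metrics(latencies_s, duration_s):
    """Summarize one test run.

    latencies_s: response time of each completed transaction, in seconds.
    duration_s: wall-clock length of the measurement window, in seconds.
    """
    ordered = sorted(latencies_s)
    n = len(ordered)
    rank = (95 * n + 99) // 100  # nearest-rank 95th percentile (integer ceiling)
    return {
        "transactions": n,
        "tps": n / duration_s,                 # transactions per second
        "mean_response_s": sum(ordered) / n,
        "p95_response_s": ordered[rank - 1],
        "max_response_s": ordered[-1],
    }
```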

47

Performance Testing Process: Planning Phase

- Identify tests to run and decide when to run them
  - Often, selected functional tests are used as the test suite
  - Use an operational profile to match typical usage
- Decide how to run the tests
  - Baseline test
  - 2x/3x/4x baseline tests
  - Longevity (endurance) test
- Decide on a tool/application service provider option to generate loads (replicate numerous instances of test cases)
- Write the test plan, design user scenarios, create test scripts
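Load generation by replicating test cases can be sketched with a thread pool, one worker per simulated user, running the baseline and its multiples. `run_load` and `baseline_multiples` are illustrative names. Python threads suit I/O-bound test cases (such as network requests); for real measurements a dedicated load-generation tool is the usual choice.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(test_case, users, iterations):
    """Replicate test_case across `users` concurrent workers.

    Returns one latency (in seconds) per executed test case.
    """
    def virtual_user(_):
        latencies = []
        for _ in range(iterations):
            start = time.perf_counter()
            test_case()
            latencies.append(time.perf_counter() - start)
        return latencies

    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(virtual_user, range(users)))
    return [lat for user in per_user for lat in user]


def baseline_multiples(test_case, baseline_users, factors=(1, 2, 3, 4), iterations=5):
    """Run the baseline load and its 2x/3x/4x multiples; map factor -> latencies."""
    return {f: run_load(test_case, baseline_users * f, iterations) for f in factors}
```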

48

Performance Testing Process: Testing Phase

- Testing phase
  - Generate test data
  - Set up test bed
    - System under test
    - Test environment performance monitors
  - Run tests
  - Collect results data

49

Performance Testing Process: Analysis Phase

- Analyze results to locate the source of problems
  - Software problems
  - Hardware problems
- Change the system to optimize performance
  - Software optimization
  - Hardware optimization
- Design additional tests (if the test objective is not met)

50

Configuration Testing

- Configuration testing: test all supported hardware and software configurations
- Factors
  - Hardware: processor, memory
  - Operating system: type, version
  - Device drivers
  - Run-time environments: JRE, .NET
- Consists of running a set of tests under different configurations, exercising the main set of system features

51

Configuration Testing

- Huge number of potential configurations
- Need to select configurations to be tested
  - Decide the type of hardware needed to be tested
  - Select hardware brands, models, and device drivers to test
  - Decide which hardware features, modes, and options are possible
  - Pare down the identified configurations to a manageable set
    - e.g., based on popularity, age
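Paring down by popularity can be sketched as: enumerate every combination of factor levels, score each by its estimated share of the installed base, and keep the top N. The factor levels and market shares are invented, and multiplying shares assumes the factors are independent, which real configurations often are not.

```python
from itertools import product

# Hypothetical factor levels with invented installed-base shares.
FACTORS = {
    "os": {"Windows 11": 0.6, "Windows 10": 0.3, "Ubuntu 22.04": 0.1},
    "runtime": {"JRE 17": 0.7, "JRE 11": 0.3},
    "memory": {"8 GB": 0.5, "16 GB": 0.4, "4 GB": 0.1},
}


def pare_down(factors, budget):
    """Return the `budget` most popular configurations (assumes independent factors)."""
    names = list(factors)
    scored = []
    for combo in product(*(factors[n].items() for n in names)):
        popularity = 1.0
        for _level, share in combo:
            popularity *= share
        scored.append((popularity, dict(zip(names, (level for level, _ in combo)))))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [config for _, config in scored[:budget]]
```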

52

Compatibility Testing

- Compatibility testing: test for
  - compatibility with other system resources in the operating environment
    - e.g., software, databases, standards, etc.
  - source- or object-code compatibility with different operating environment versions
  - compatibility/conversion testing
    - when conversion procedures or processes are involved

53

Security Testing

- Focus on vulnerabilities to unauthorized access or manipulation
- Objective: identify any vulnerabilities and protect the system's
  - Data
    - Integrity
    - Confidentiality
    - Availability
  - Network computing resources

54

Security Testing: Threat Modelling

- Used to evaluate a software system for security issues
  - Identify areas of the software susceptible to being exploited for security attacks
- Threat modelling steps
  - Assemble a threat modelling team (developers, testers, security experts)
  - Identify assets (what could be of interest to attackers)
  - Create an architecture overview (major technological pieces and how they communicate, trust boundaries between pieces)
  - Decompose the application (identify how/where data flows through the system and what the data protection mechanisms are)
    - Based on data flow and state diagrams

55

Security Testing: Threat Modelling

- Threat modelling steps
  - Identify the threats
    - Consider each component as a target:
      - How could components be improperly viewed/attacked/modified?
      - Is it possible to prevent authorized users from accessing components?
      - Could anyone gain access and take control of the system?
  - Document the threats (description, target, form of attack, countermeasure)
  - Rank the threats based on
    - Damage potential
    - Reproducibility
    - Exploitability
    - Affected users
    - Discoverability
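The five ranking criteria are commonly abbreviated DREAD. Below is a sketch of ranking by the average rating, assuming a 1 to 10 scale per criterion; the threats and their ratings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    description: str
    damage: int           # each criterion on an assumed 1 (low) .. 10 (high) scale
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def score(self):
        """Average of the five ranking criteria."""
        return (self.damage + self.reproducibility + self.exploitability
                + self.affected_users + self.discoverability) / 5


def rank_threats(threats):
    return sorted(threats, key=Threat.score, reverse=True)


# Hypothetical threats and ratings, for illustration only.
THREATS = [
    Threat("Revealing error message on login page", 3, 10, 5, 6, 9),
    Threat("SQL injection through login form", 8, 9, 7, 10, 8),
    Threat("Hard-coded administrator password", 10, 10, 6, 10, 4),
]
```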

56

Security Testing: Common System Vulnerabilities

- Buffer overflow

- Command line (shell) execution

- Backdoors

- Web scripting language weakness

- Password cracking

- Unprotected access

- Information leaks

- Hard coding of id/password information

- Revealing error messages

- Directory browsing

57

Security Testing: Buffer Overflow

- One of the most commonly exploited vulnerabilities
- Caused by
  - The fact that in x86 systems, a program stack mixes data (local function variables) with control information (return addresses), and injected executable code can be placed on it
  - The way the program stack is allocated in x86 systems (the stack grows downward, from higher to lower addresses)
  - A lack of boundary checks when allocating buffer space in memory in program code (a typical bug)

58

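Security Testing: Buffer Overflow

- `void parse(char *arg)`
  - `char param[1024];`
  - `int localdata;`
  - `strcpy(param, arg);` (no bounds check: an `arg` of 1024 or more characters overflows `param`)
  - `.../...`
  - `return;`
- `int main(int argc, char *argv[])`
  - `parse(argv[1]);`
  - `.../...`

Stack layout, as drawn on the slide (top to bottom):
- Exit address (4 bytes)
- Main stack (N bytes)
- Return address (4 bytes)
- param (1024 bytes)
- localdata (4 bytes)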

59

Security Testing: SQL Injection

- Security attack consisting of
  - Entering unexpected SQL code in a form in order to manipulate a database in unanticipated ways
  - Attacker's expectation: back-end processing is supported by an SQL database
- Caused by
  - The ability to string multiple SQL statements together and to execute them in a batch
  - Using text obtained from the user interface directly in SQL statements

60

Security Testing: SQL Injection

- Example: the designer intends to complete this SQL statement with values obtained from two user interface fields:
  - `SELECT * FROM bank WHERE LOGIN = 'id' AND PASSWORD = 'password'`
- A malicious user enters
  - Login: `ADMIN`
  - Password: `anything' OR 'x'='x`
- Result
  - `SELECT * FROM bank WHERE LOGIN = 'ADMIN' AND PASSWORD = 'anything' OR 'x'='x'`
  - Because `AND` binds more tightly than `OR`, the condition is always true and every row is returned.

61

Security Testing: SQL Injection

- Avoidance
  - Do not copy text directly from input fields through to SQL statements.
    - Input sanitizing (define acceptable field contents with regular expressions)
    - Escape/quote-safe the input (using predefined quote functions)
    - Use bound parameters (e.g., `prepareStatement`)
  - Limit database access
    - Use stored procedures for database access
  - Configure error reporting (so as not to give away too much information)
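The example query and the bound-parameter defence can be reproduced with Python's built-in `sqlite3` module and an in-memory database. The table contents and function names are invented; `?` placeholders play the role that `prepareStatement` plays in Java.

```python
import sqlite3


def make_bank_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE bank (LOGIN TEXT, PASSWORD TEXT)")
    conn.executemany("INSERT INTO bank VALUES (?, ?)",
                     [("ADMIN", "s3cret"), ("alice", "wonderland")])
    return conn


def login_vulnerable(conn, login, password):
    # BAD: user-interface text is pasted directly into the SQL statement.
    query = ("SELECT * FROM bank WHERE LOGIN = '" + login +
             "' AND PASSWORD = '" + password + "'")
    return conn.execute(query).fetchall()


def login_bound(conn, login, password):
    # GOOD: bound parameters; the input is treated as data, never parsed as SQL.
    return conn.execute("SELECT * FROM bank WHERE LOGIN = ? AND PASSWORD = ?",
                        (login, password)).fetchall()


ATTACK = "anything' OR 'x'='x"
```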

62

Security Testing: Techniques

- Penetration testing: try to penetrate a system by exploiting crackers' methods
  - Look for default accounts that were not protected by system administrators
- Password testing: using password-cracking tools
  - Example: passwords should not be words in a dictionary

63

Security Testing: Techniques

- Buffer overflows: systematic testing of all buffers
  - Sending large amounts of data
  - Checking boundary conditions on buffers
    - Data that is exactly the buffer size
    - Data with length (buffer size - 1)
    - Data with length (buffer size + 1)
  - Writing escape and special characters
  - Ensuring safe string functions are used
- SQL injection
  - Entering invalid characters in form fields (escape, quotes, SQL comments, ...)
  - Checking error messages
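The boundary cases can be generated mechanically. The sketch assumes a hypothetical `bounded_copy` that emulates copying a C string into a fixed-size array, so it must also leave room for the terminating NUL byte. That is why data exactly the buffer size is the interesting failure point: it is the case that makes `strcpy` in the `parse` example write one byte past `param`.

```python
def boundary_lengths(buffer_size):
    """Lengths worth probing for a buffer of buffer_size bytes."""
    return [buffer_size - 1, buffer_size, buffer_size + 1]


def bounded_copy(data, buffer_size):
    """Hypothetical routine under test: emulates copying a C string into a
    char[buffer_size] array, which needs room for the terminating NUL."""
    if len(data) + 1 > buffer_size:
        raise ValueError(f"{len(data)} chars do not fit in a {buffer_size}-byte buffer")
    return data


def probe_buffer(copy_fn, buffer_size, fill="A"):
    """Feed boundary-length inputs plus one very large input;
    map each input length -> whether copy_fn accepted it."""
    outcome = {}
    for n in boundary_lengths(buffer_size) + [buffer_size * 100]:
        try:
            copy_fn(fill * n, buffer_size)
            outcome[n] = True
        except ValueError:
            outcome[n] = False
    return outcome
```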

64

Concurrency Testing

- Investigate simultaneous execution of multiple tasks/threads/processes/applications
- Potential sources of problems
  - interference among the executing sub-tasks
  - interference when multiple copies are running
  - interference with other executing products
- Tests designed to reveal possible timing errors, force contention for shared resources, etc.
  - Problems include deadlock, starvation, race conditions, memory

65

Multitasking Testing

- Difficulties
  - Test reproducibility not guaranteed
    - varying order of task execution
    - the same test may find a problem in one run and fail to find any problem in other runs
    - tests need to be run several times
  - Behavior can be platform dependent (hardware, operating system, ...)
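Because a single run proves little, concurrency tests are usually repeated. This sketch forces contention on a shared counter from several threads and counts failing runs; the names are illustrative. With the lock in place every run should pass, whereas without it updates can be lost on some runs but not others.

```python
import threading


def increment_many(counter, lock, times):
    for _ in range(times):
        with lock:  # without this lock, concurrent updates can be lost
            counter["value"] += 1


def concurrency_test(threads=8, times=1000):
    """Force contention on a shared counter; pass if no update was lost."""
    counter, lock = {"value": 0}, threading.Lock()
    workers = [threading.Thread(target=increment_many, args=(counter, lock, times))
               for _ in range(threads)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return counter["value"] == threads * times


def run_repeatedly(test, runs=20):
    """Thread interleaving differs between runs, so repeat and count failures."""
    return sum(1 for _ in range(runs) if not test())
```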

66

Multitasking Testing

- Logging can help detect problems: log when
  - tasks start and stop
  - resources are obtained and released
  - particular functions are called
  - ...
- System model analysis can be effective for finding some multitasking issues at the specification level
  - using FSM-based approaches, SDL, TTCN, UCM, ...
  - intensively used in telecommunications

67

Recovery Testing

- Ability to recover from failures and exceptional conditions associated with hardware, software, or people
  - Detecting failures or exceptional conditions
  - Switchovers to standby systems
  - Recovery of execution state and configuration (including security status)
  - Recovery of data and messages
  - Replacing failed components
  - Backing out of incomplete transactions
  - Maintaining audit trails
  - External procedures
    - e.g., storing backup media or various disaster scenarios
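Backing out incomplete transactions can be tested by forcing a failure between two related updates and checking that neither survives. This sketch uses an in-memory SQLite database through Python's `sqlite3` module, whose connection context manager commits on success and rolls back when an exception escapes. The account table, `transfer`, and `recovery_test` are hypothetical.

```python
import sqlite3


def make_accounts_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER)")
    conn.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn


def transfer(conn, src, dst, amount, crash_midway=False):
    # `with conn` commits on success and rolls back if an exception escapes.
    with conn:
        conn.execute("UPDATE account SET balance = balance - ? WHERE id = ?", (amount, src))
        if crash_midway:
            raise RuntimeError("simulated failure between the two updates")
        conn.execute("UPDATE account SET balance = balance + ? WHERE id = ?", (amount, dst))


def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM account"))


def recovery_test():
    """Force a failure mid-transaction and check the partial update was backed out."""
    conn = make_accounts_db()
    before = balances(conn)
    try:
        transfer(conn, 1, 2, 30, crash_midway=True)
    except RuntimeError:
        pass
    return balances(conn) == before
```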

68

Reliability Testing

- Popularized by the Cleanroom development approach (from IBM)
- Application of statistical techniques to data collected during system development and operation (an operational profile) to specify, predict, estimate, and assess the reliability of software-based systems
- Reliability requirements may be expressed in terms of
  - Probability of no failure in a specified time interval
  - Expected mean time to failure (MTTF)
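The two forms of requirement are linked if one assumes a constant failure rate $\lambda$. This exponential failure model is an assumption and is not stated on the slide:

$$
R(t) = e^{-\lambda t}, \qquad
\mathrm{MTTF} = \int_0^{\infty} R(t)\,dt = \frac{1}{\lambda}, \qquad
\text{so} \qquad R(t) = e^{-t/\mathrm{MTTF}}.
$$

For example, under this model a requirement of MTTF = 1000 hours implies a probability of about $e^{-0.1} \approx 0.905$ of no failure during a 100-hour interval.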

69

Reliability Testing: Statistical Testing

- Statistical testing based on a usage model
  - Development of an operational usage model of the software
  - Random generation of test cases from the usage model
  - Interpretation of test results according to mathematical and statistical models to yield measures of software quality and test sufficiency

70

Reliability Testing: Statistical Testing

- Usage model
  - Represents possible uses of the software
  - Can be specified under different contexts, for example
    - normal usage context
    - stress conditions
    - hazardous conditions
    - maintenance conditions
  - Can be represented as a transition graph where
    - Nodes are usage states
    - Arcs are transitions between usage states

71

Reliability Testing: Statistical Testing

- Example: security alarm system
  - For use on doors, windows, boxes, etc.
  - Has a detector that sends a trip signal when motion is detected
  - Activated by pressing the Set button
  - Light in the Set button is illuminated when the security alarm is on
  - An alarm is emitted if a trip signal occurs while the device is active
  - A 3-digit code must be entered to turn off the alarm
  - If a mistake is made while entering the code, the user must press the Clear button before retrying
  - Each unit has a hard-coded deactivation code

72

Security alarm Stimuli and Responses

73

Reliability Testing: Statistical Testing

- Usage model for alarm system

74

Usage Model for Alarm system with probabilities

- Usage probability assumptions
  - Trip stimulus probability is 0.05 in states Ready, Entry Error, 1_OK, and 2_OK.
  - Other stimuli that cause a state change have equal probabilities.
- Results in a Markov chain

75

Reliability Testing: Statistical Testing

- Usage probabilities are obtained from
  - field data,
  - estimates from customer interviews,
  - instrumentation of prior versions of the system
- For the approach to be effective, probabilities must reflect future usage

76

Reliability Testing: Statistical Testing

- Usage model analysis
  - Based on standard calculations on a Markov chain
  - Possible to obtain estimates for
    - Long-run occupancy of each state: the usage profile as a percentage of time spent in each state
    - Occurrence probability: probability of occurrence of each state in a random use of the software
    - Occurrence frequency: expected number of occurrences of each state in a random use of the software
    - First occurrence: for each state, the expected number of uses of the software before it will first occur
    - Expected sequence length: the expected number of state transitions in a random use of the software, i.e., the average length of a use case or test case
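For a small usage chain, two of these quantities can be computed directly. This sketch assumes an invented five-state chain (not the alarm model from the slides). As an assumption, it links the exit state back to the start so that a long-run distribution exists, and it uses plain power iteration rather than a linear solver.

```python
# Hypothetical usage chain: state -> {next state: probability}.
# Assumption: Exit links back to Start so the chain is recurrent.
USAGE_CHAIN = {
    "Start": {"Ready": 1.0},
    "Ready": {"Armed": 0.5, "Exit": 0.5},
    "Armed": {"Alarm": 0.2, "Ready": 0.8},
    "Alarm": {"Ready": 1.0},
    "Exit": {"Start": 1.0},
}


def long_run_occupancy(chain, iterations=2000):
    """Stationary distribution by power iteration: fraction of time in each state."""
    pi = {s: 1.0 / len(chain) for s in chain}
    for _ in range(iterations):
        nxt = dict.fromkeys(chain, 0.0)
        for state, p in pi.items():
            for target, q in chain[state].items():
                nxt[target] += p * q
        pi = nxt
    return pi


def expected_sequence_length(chain, exit_state="Exit"):
    """Expected number of transitions from Start to Exit in one use.

    1 / pi[Exit] is the mean recurrence time of Exit (Exit -> ... -> Exit);
    subtracting 1 removes the artificial Exit -> Start arc.
    """
    return 1.0 / long_run_occupancy(chain)[exit_state] - 1.0
```

For this chain the exact values are a long-run occupancy of 2/5.2 (about 0.385) for Ready and an expected sequence length of 4.2 transitions.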

77

Reliability Testing: Statistical Testing

- Test case generation
  - Traverse the usage model, guided by the transition probabilities
  - Each test case
    - starts at the initial node and ends at an exit node
    - consists of a succession of stimuli
  - Test cases are random walks through the usage model
    - Random selection is used at each state to determine the next stimulus
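A random-walk generator follows directly from this description. The usage model below is invented for illustration (states, stimuli, and probabilities are assumptions). Each arc carries the stimulus that triggers it, so a generated test case is the sequence of stimuli along the walk.

```python
import random

# Hypothetical usage model: state -> list of (stimulus, next state, probability).
USAGE_MODEL = {
    "Start": [("power_on", "Ready", 1.0)],
    "Ready": [("set", "Armed", 0.5), ("power_off", "Exit", 0.5)],
    "Armed": [("trip", "Alarm", 0.2), ("set", "Ready", 0.8)],
    "Alarm": [("enter_code", "Ready", 1.0)],
}


def generate_test_case(model, start="Start", exit_state="Exit", rng=random):
    """Random walk from the initial node to the exit node, guided by the
    transition probabilities; returns the sequence of stimuli."""
    state, stimuli = start, []
    while state != exit_state:
        arcs = model[state]
        stimulus, state, _ = rng.choices(arcs, weights=[p for _, _, p in arcs])[0]
        stimuli.append(stimulus)
    return stimuli
```

Passing a seeded `random.Random` instance as `rng` makes a generated suite reproducible.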

78

Randomly generated test case

79

Reliability Testing: Statistical Testing

- Measures of test sufficiency (when to stop testing?)
  - Usage chain: the usage model that generates test cases
    - used to determine each state's long-run occupancy
  - Testing chain: used during testing to track actual state traversals
    - Add a counter to each arc, initialized to 0
    - Increment the counter of an arc whenever a test case executes the transition
  - Discriminant: difference between the usage chain and the testing chain (the degree to which the testing experience is representative of the expected usage)
  - Testing can stop when the discriminant plateaus

80

Reliability Testing: Statistical Testing

- Reliability measurement
  - Failure states: added to the testing chain as failures occur during testing
  - Software reliability: probability of taking a random walk through the testing chain from invocation to termination without encountering a failure state
  - Mean time to failure (MTTF): average number of test cases until a failure occurs
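As a rough cross-check before building a full testing chain, both measures can be estimated by simple counting over executed test cases. This is a naive estimator (an assumption, not the testing-chain calculation described on the slide): reliability as the fraction of failure-free test cases and MTTF as test cases per observed failure.

```python
def reliability_estimates(outcomes):
    """Naive estimates from a list of test-case outcomes (True = passed).

    Simple counting, used here only for illustration; the statistical testing
    approach computes reliability from the testing chain instead.
    """
    n = len(outcomes)
    failures = outcomes.count(False)
    reliability = (n - failures) / n                   # failure-free fraction
    mttf = n / failures if failures else float("inf")  # test cases per failure
    return reliability, mttf
```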

81

Example: All tests pass

82

Example with failure cases

83

Regression Testing

- Purpose: in a new version of the software, ensure that the functionality of previous versions has not been adversely affected
  - Example: in release 4 of the software, verify that all (unchanged) functionality of versions 1, 2, and 3 still works
- Why is it necessary?
  - One of the most frequent occasions when software faults are introduced is when the software is modified

84

Regression Test Selection (1)

- In version 1 of the software, choose a set of tests (usually at the system level) that has the best coverage given the resources that are available to develop and run the tests
- Usually take the system tests that were run manually prior to the release of version 1, and create a version of them that can run automatically
  - boundary tests
  - tests that revealed bugs
  - tests for customer-reported bugs
- Depends on the tools available, etc.

85

Regression Test Selection (2)

- With a new version N of the software, the regression test suite will need updating
  - new tests, for new functionality
  - updated tests, for previous functionality that has changed
  - deleted tests, for functionality that has been removed
- There is a tendency toward unbounded growth of regression tests
  - Periodic analyses are needed to keep the size of the test suite manageable, even for automated execution

86

Regression Test Management (1)

- The regression packages for the previous version(s) must be preserved as long as the software version is being supported
  - Suppose that version 12 of the software is currently being developed
  - If versions 7, 8, 9, 10, and 11 of the software are still being used by customers and are officially supported, all five of these regression packages must be kept
- Configuration management of regression suites is essential!

87

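Configuration Management of Regression Suites

- Software version 7: regression suite 7
- Software version 8: regression suite 8
- Software version 9: regression suite 9
- Software version 10: regression suite 10
- Software version 11: regression suite 11
- Software version 12: under development
- Regression suites include all functionality up to the indicated version number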

88

When to run the regression suite?

- The version 11 suite would be run on version 12 of the software prior to the version 12 release
  - It may be run at all version 12 recompilations during development
- If a problem report results in a bug fix in version 9 of the software, the version 9 suite would be run to ensure the bug fix did not introduce another fault
  - If the fix is propagated to versions 10, 11, and 12, then the version 10 regression suite would run against product version 10, and the version 11 regression suite would run against product version 11 and the new version 12
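The rule on this slide can be written as a small planning function. It assumes integer version numbers with one regression suite per released version, and that the version under development has no suite of its own yet and reuses the latest released one. `regression_plan` is an illustrative name.

```python
def regression_plan(fixed_in, propagated_to, dev_version):
    """List the (regression suite, product version) runs needed after a bug fix.

    Assumes consecutive integer versions, one suite per released version, and
    no suite yet for dev_version (it reuses the suite of dev_version - 1).
    """
    plan = []
    for version in [fixed_in, *propagated_to]:
        suite = version - 1 if version == dev_version else version
        plan.append((suite, version))
    return plan
```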

89

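Bug fixes in prior releases

[Figure: software versions 7 to 11, each paired with its regression suite, and version 12 under development; labels mark where the bug fix is made ("bug fix here"), the propagated "updates", and the regression suites that "must be re-run".]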

90

Constraints on Regression Execution

- Ideal: re-run the entire regression test suite for each recompile of a new software version, or for each bug fix in a previous version
- Reality: test execution and (especially) result analysis may take too long, especially with large regression suites
  - Test resources are often shared with new-functionality testing
  - It may be possible to re-run the entire test suite only at significant project milestones
    - example: prior to product release

91

Test selection

- When a code modification takes place, can we run only the regression tests related to the code modification?
- Issues
  - How far does the effect of a change propagate through the system?
  - Traceability: keeping a link that stores the relationship between a test and the parts of the software covered by the test
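With traceability recorded as a mapping from tests to the software parts they cover, selecting the regression tests for a change is a set intersection. The test ids and part names are hypothetical. This only finds tests that cover the changed parts directly and does not answer the propagation question.

```python
# Hypothetical traceability data: test id -> parts of the software it covers.
TRACEABILITY = {
    "T1": {"login", "session"},
    "T2": {"rental", "inventory"},
    "T3": {"inventory", "reports"},
    "T4": {"session"},
}


def select_regression_tests(traceability, changed_parts):
    """Return the tests whose covered parts intersect the changed parts."""
    return sorted(test for test, covered in traceability.items()
                  if covered & changed_parts)
```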

92

Change Impact Analysis

- Module firewall strategy: if module M7 changes, retest all modules with a use or used-by relationship with M7

[Figure: module graph with modules M1 to M10, illustrating the firewall around the changed module M7.]

93

OO Class Firewalls

- Suppose B is a superclass of A, and B is modified. Then
  - B should be retested
  - A should be retested if B has an effect on inherited members of A
- Suppose A is an aggregate class that includes B, and B is modified. Then A and B should be retested.
- Suppose class A is associated with class B (by access to data members or message passing), and B is modified. Then A and B should be retested.
- The transitive closure of such relationships also needs to be retested.
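The transitive-closure rule can be sketched as a breadth-first search over reversed dependency edges. The class graph is hypothetical, and the sketch is conservative: it always retests subclasses, whereas the slide retests a subclass only if the change affects its inherited members.

```python
from collections import deque

# Hypothetical class relationships: class -> classes it depends on, whether by
# inheritance (subclass -> superclass), aggregation (aggregate -> part), or
# association (client -> class whose data or messages it uses).
DEPENDS_ON = {
    "A": {"B"},       # A inherits from B
    "C": {"A"},       # C aggregates A
    "D": {"C", "E"},  # D is associated with C and E
    "B": set(),
    "E": set(),
    "F": {"E"},
}


def class_firewall(depends_on, modified):
    """Modified class plus every class that transitively depends on it."""
    dependents = {cls: set() for cls in depends_on}
    for cls, targets in depends_on.items():
        for target in targets:
            dependents[target].add(cls)
    to_retest, queue = {modified}, deque([modified])
    while queue:
        for cls in dependents[queue.popleft()]:
            if cls not in to_retest:
                to_retest.add(cls)
                queue.append(cls)
    return to_retest
```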

94

Example

[Figure: class diagram with classes A to O connected by inheritance, association, and aggregation relationships; the legend marks the modified class, the firewall, and the classes to re-test.]