Parallel Algorithms for Unstructured and Dynamically Varying Problems - PowerPoint PPT Presentation

1 / 56

Title:

Parallel Algorithms for Unstructured and Dynamically Varying Problems

Description:

assigning them to PEs; each PE perform computation associated with subdomain ... proportional to perim subdomain. for fixed-size subdomain perim min if square ... – PowerPoint PPT presentation

Number of Views:73

Avg rating:3.0/5.0

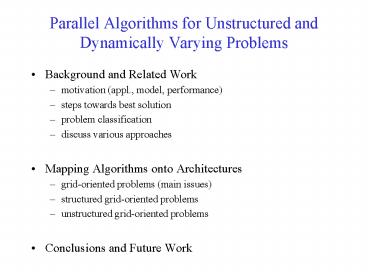

Title: Parallel Algorithms for Unstructured and Dynamically Varying Problems

1

Parallel Algorithms for Unstructured and Dynamically Varying Problems

- Background and Related Work

- motivation (applications, models, performance)

- steps towards best solution

- problem classification

- discuss various approaches

- Mapping Algorithms onto Architectures

- grid-oriented problems (main issues)

- structured grid-oriented problems

- unstructured grid-oriented problems

- Conclusions and Future Work

2

Motivation

- Unstructured and dynamic problems

- abstractions of various phenomena

- astrophysics, molecular biology, chemistry

- fluid dynamics, electromagnetics

- large, computationally intensive

- Applications

- solutions to boundary integral equations

- wave propagation, fluid flow

- transitions from 3-D to 2-D

- prediction (Schrödinger 1st, 2nd)

- local density approximations

- large least-squares problems

- image processing, molecular biology, astrophysics

3

Motivation (continued)

- Goal: model real events

- simulations, prediction, impact

- accurate solutions

- Need high performance solutions

- performance evaluations

- hard to compare (different parameters)

- metrics (execution time, speedup, efficiency, isoefficiency, convergence rate)

- simple, accurate, fast, effective
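Two of the metrics listed above have standard closed forms: speedup S_p = T_1 / T_p and efficiency E_p = S_p / p. A minimal Python sketch; the timings are hypothetical and are not data from the presentation:

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """Speedup S_p = T_1 / T_p."""
    return t_serial / t_parallel


def efficiency(t_serial: float, t_parallel: float, p: int) -> float:
    """Efficiency E_p = S_p / p; 1.0 means perfect linear speedup."""
    return speedup(t_serial, t_parallel) / p


# Hypothetical timings in seconds (illustrative only, not measured data)
t1 = 120.0
for p, tp in {2: 64.0, 4: 35.0, 8: 20.0}.items():
    print(f"p={p}: speedup={speedup(t1, tp):.2f}, efficiency={efficiency(t1, tp, p):.2f}")
```

Isoefficiency and convergence rate need a model of how work and overhead scale with p, so they are not shown here.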

4

Performance of Parallel Algorithms

- Theoretical order analysis (not enough)

- best solutions only for restricted applications

- unrealistic assumptions about resources

- number of PEs, speed, problem size

- Empirical testing (optimal solutions differ)

- need to consider various factors

- FlattKen89: for a given overhead and problem size, parallel execution time is minimal for a unique number of processors

- studies conclude that convergence rate and parallel efficiency determine granularity and choice of algorithm

- BarrHick93: graph (best solution vs. time)

- Performance degradation (loss degree)
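To illustrate the FlattKen89 observation, the sketch below assumes a simple cost model, T(p) = W/p + c*p (perfectly divisible work W plus an overhead that grows linearly with the number of processors p). This model is an assumption for illustration, not the one analysed in the paper; for it, the continuous minimum lies at p* = sqrt(W/c), and the sketch searches integer p directly.

```python
import math


def parallel_time(p: int, work: float, overhead_per_pe: float) -> float:
    # Assumed model: work divides perfectly over p PEs; overhead grows linearly with p
    return work / p + overhead_per_pe * p


def best_processor_count(work: float, overhead_per_pe: float, p_max: int) -> int:
    # Exhaustive search over 1..p_max; T(p) is convex, so it falls and then rises
    return min(range(1, p_max + 1), key=lambda p: parallel_time(p, work, overhead_per_pe))


work, c = 10_000.0, 1.0  # hypothetical values
p_star = best_processor_count(work, c, p_max=1024)
print(p_star, math.sqrt(work / c))
```

Adding processors beyond p* makes the overhead term dominate, so execution time grows again.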

5

Steps towards Best Performance Solution

- Depends upon the best choice of

- parallel algorithm (simple, accurate, fast)

- parallel architecture (fast, effective)

- mapping (detection, partitioning, scheduling)

- Mapping -- goals (minimize factors)

- computation time (numerical efficiency)

- communication (communication/computation ratio)

- load imbalance (effective use of resources)

- overhead (synchronization, communication, scheduling)

- identify stochastic behavior (algorithm design)

- best performance (choice of parameters)

- find dominant component

- Mapping -- considerations

- problem domain distribution (pattern, density, size)

- interconnection network topology, characteristics of each component

6

Problem Classification

- Pattern of data points distribution

- structured (uniform)

- synchronous

- unstructured (nonuniform)

- loosely synchronous

- asynchronous

- Density of data points

- dense (high density)

- sparse (low density)

- Solution grids (mathematical description, data structure)

- structured, unstructured, semistructured

7

Problem Classification: Depending on the Pattern of Data Point Distribution

| Distribution | Regularity in space | Regularity in time | Structured | Unstructured |
|---|---|---|---|---|
| uniform | regular | regular | synchronous | -- |
| nonuniform | irregular | regular | -- | loosely synchronous |
| nonuniform | irregular | irregular | -- | asynchronous |

8

Problem Classification: Depending on the Density of Data Point Distribution

| Density | Structured | Unstructured |
|---|---|---|
| high | dense | dense |
| low | (sparse) | sparse |

9

Structured Problems

- synchronous (regular in space and time)

- uniform distribution of data points

- parallelism easy to detect, express, implement

- naturally expressed (vector, matrix)

- compilers can map constructs and operations

- e.g., QCD simulations, chemistry, ...

10

Unstructured Problems

- loosely synchronous (irregular space, regular time)

- asynchronous (irregular space and time)

- nonuniform distribution of data points

- irregularities difficult to detect, express

- irregularities hard to implement (develop)

- need flexible hardware, fast communication

11

Loosely Synchronous Problems

- Dominant methodology for irregular scientific simulations

- irregularities static; data parallelism over sparse structures

- irregularities not too hard to detect, express

- hierarchical data structures, sparse matrices

- success expressing irregularities yields performance gains

- irregularities hard to implement

- high-level data structures, geometric decomposition

- run better on MIMD than on SIMD

- different data points, distinct algorithms

- e.g., periodically interacting heterogeneous objects

- time-driven simulations, statistical physics, particle dynamics

- adaptive meshes, biology, image processing

- Monte Carlo, clustering algorithms, N-body problems

- spatial structure may change dynamically

- need synchronization at each iteration step

- need adaptive algorithms

12

Asynchronous Problems

- irregularities hard to detect, express, implement

- hard to parallelize (unless embarrassingly parallel)

- e.g., event-driven simulation, chess, market analysis

- irregularities dynamic (cannot be exploited)

- cannot use simple mappings (communication, decomposition)

- object-oriented approach (flexible communication)

- statistical methods for load balancing

13

Grid-Oriented Problems

- Structured grids

- simple (same procedure at each node)

- low overhead

- hard to create (complex domains)

- Unstructured grids

- easy to create and adapt, effective

- no need to propagate local features

- large overhead, more storage

- solution difficult

- convergence rate may be slow

- Semistructured grids

- domain is an unstructured union of structured subdomains

- used in GroppKeyes92, PAFMA (LeaBoa92)

14

- Figures about grids

15

Dense and Sparse Problems

- Structured, Unstructured

- various approaches

16

Dense Problems

- Matrix problems

- well or not well determined solutions

- indices not necessarily in linear order

- Vandermonde, Toeplitz, orthogonal structure

- well-conditioned: accurate regardless of computation method

- ill-conditioned: can be highly accurate if the algorithm computes small residuals and the solution is determined by the right-hand side

- role of ill-conditioning and condition estimators

- Edel93 paradox

- improved multiply, transpose, inverse

17

Sparse Problems

- undirected weighted graphs

- compressed row storage

- vector of rows, nested (column, value) pairs

- different multiply, transpose, inverse

- determine eigenvalues, eigenvectors of sparse matrices

- using O(n) matrix-vector multiplication (Yau93)
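The compressed-row idea can be sketched as follows. The standard compressed sparse row (CSR) layout is assumed here as one concrete instance of "compressed rows"; the function names are illustrative. Each product touches only the stored nonzeros, so it costs O(n) when every row holds a bounded number of entries.

```python
from typing import List, Tuple

CSR = Tuple[List[float], List[int], List[int]]  # (values, column indices, row pointers)


def dense_to_csr(a: List[List[float]]) -> CSR:
    values: List[float] = []
    col_idx: List[int] = []
    row_ptr = [0]
    for row in a:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # row i occupies values[row_ptr[i]:row_ptr[i + 1]]
    return values, col_idx, row_ptr


def csr_matvec(m: CSR, x: List[float]) -> List[float]:
    values, col_idx, row_ptr = m
    y = []
    for i in range(len(row_ptr) - 1):
        s = 0.0
        for k in range(row_ptr[i], row_ptr[i + 1]):  # touch stored nonzeros only
            s += values[k] * x[col_idx[k]]
        y.append(s)
    return y


a = [[4.0, 0.0, 0.0, 1.0],
     [0.0, 3.0, 0.0, 0.0],
     [0.0, 0.0, 2.0, 0.0],
     [1.0, 0.0, 0.0, 5.0]]
m = dense_to_csr(a)
print(m[2], csr_matvec(m, [1.0, 1.0, 1.0, 1.0]))
```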

18

- Figures about performance of PDE sparse solvers on hypercubes

19

Dense Approaches to Sparse Problems

- Sparse problems contain dense blocks

- extract and process regular structure

- e.g., FEBA algorithm for sparse matrix-vector multiplication (Agar92)

- direct matrix factorization

- decomposition into smaller dense blocks, performance loss, communication router (Kratzer92); mapping for QR differs from mapping for Cholesky

20

Sparse Approaches to Dense Problems

- Recently successful, though they seem counterintuitive

- General methods (Edel93, Freu92, Reev93)

- access matrix only through matrix-vector multiplication

- look for preconditioners

- replace O(n^2) matrix-vector multiplication with approximations (multipole, multigrid)

- PAFMA (LeaBoa92)

- nonuniform problem divided into uniform regions

- take advantage of regular communication patterns

- processor assignment in subregions with density variance: when (1) load imbalance dominates, assign by dense regions; (2) communication dominates, assign by sparse regions

21

Stochastic Nature of Parallel Algorithms

- Variability in run-time behavior of algorithms

- Solution unpredictable, multiple paths

- path nondeterministic, optimal path chosen at run time; results diverge (number of pivots, solution time, alternate optima)

- Race conditions (time-dependent decisions)

- in algorithm design

- same problem, strategy, operating conditions

- alternate optima, different timing of events, incoming variables, choices, sequence of points traversed

- e.g., self-scheduled nonuniform problems

- highly efficient machine utilization

22

Stochastic Nature of Parallel Algorithms (continued)

- Some examples

- parallel network simplex: good load balance, variability in run-time behavior

- branch-and-bound for integer programming

- good bounds affect the portion of the search tree explored

- loops without dependencies among their iterations, but sensitive to other iterations, OS, application

- Factoring (HumSch, Fly92): scheduling with good load balance, low overhead, scalable, resistant to iteration variance

23

Mapping Algorithms onto Architectures

- Parallelism detection

- depends upon algorithm and problem nature

- independent of architecture

- study explicit and implicit data dependencies

- partitioning (problem decomposition)

- split task into processes, identify shared objects

- allocation (distribution of tasks to processors)

- influenced by memory organization, interconnect

- scheduling (ordering tasks on processors)

- depends upon interconnect and PE characteristics

24

Mapping Goals (partitioning, scheduling)

- minimize communication: exploit locality

- minimize load imbalance: adaptive refinement

25

Partitioning

- Granularity of processes coarse enough for the target machine without losing parallelism

- partitioning technique (Sarkar89)

- start with an initial fine granularity; use heuristics to merge processes until the coarsest partition is reached

- use a cost function (depends upon critical path, overhead)

26

Scheduling

- Goal: spread load evenly on PEs (efficiency)

- static vs. dynamic allocation

- low overhead, inflexible vs. high overhead, flexible

- centralized vs. distributed scheduling

- centralized mediation (SmithSchnabel92): a mix

- hierarchical mediation strategy

- Note: compiler and automatic tools for partitioning, scheduling

- run time (flexible, large overhead)

- compile time (low overhead, needs technology, good if easy to estimate)

27

The Mapping Problem

- Assigning tasks to PEs s.t. minimal

- Model (HammondSchreiber92)

- given: equal work on each PE; a task graph G (vertices are processes, edges are interprocess communication); a PE graph H with d the shortest-path distance

- find a surjection from tasks onto PEs such that the communication load is minimized

- good results need partitioning, allocation, and scheduling considered together (if treated in isolation: poor mappings, non-optimal time)
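A toy instance of this mapping model, under stated assumptions: task-graph edges carry hypothetical communication weights, the PE graph is taken to be a 2-dimensional hypercube (4 PEs, linked when their labels differ in one bit, so d is the Hamming distance), and the communication load of a mapping is the sum of weight x d over all edges. With as many tasks as PEs, a surjection is a bijection, and exhaustive search is feasible only for tiny cases because the number of candidates grows factorially.

```python
from itertools import permutations
from typing import Dict, List, Tuple

Edge = Tuple[int, int, float]  # (task u, task v, communication weight)


def hypercube_distance(a: int, b: int) -> int:
    # Shortest-path distance between hypercube nodes = Hamming distance of their labels
    return bin(a ^ b).count("1")


def mapping_cost(task_edges: List[Edge], mapping: Dict[int, int]) -> float:
    # Communication load: each edge weight multiplied by the PE-graph distance it travels
    return sum(w * hypercube_distance(mapping[u], mapping[v]) for u, v, w in task_edges)


def best_mapping(task_edges: List[Edge], n_tasks: int):
    # Exhaustive search over all one-to-one task-to-PE assignments (n_tasks! candidates)
    best = min(permutations(range(n_tasks)),
               key=lambda perm: mapping_cost(task_edges, dict(enumerate(perm))))
    mapping = dict(enumerate(best))
    return mapping, mapping_cost(task_edges, mapping)


# Hypothetical task graph: two heavily communicating pairs joined by light edges
edges = [(0, 1, 4.0), (2, 3, 4.0), (1, 2, 1.0), (0, 3, 1.0)]
print(mapping_cost(edges, {0: 0, 1: 3, 2: 1, 3: 2}))  # heavy pairs placed two hops apart
print(best_mapping(edges, 4))
```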

28

The Mapping Problem (continued)

- Mapping of communication pattern: efficient strategy (Guptaschenfeld93)

- switching locality (sparse nature of communication graphs)

- each process switches its communication among a small set of other processes; ICN PEs grouped in small clusters with intercluster and intracluster connectivity

- identify this partitioning problem with bounded l-contraction of a graph (RamKri9): partitioning of the vertex set into subsets such that no subset contains more than l vertices and every subset has at least as many vertices as the number of subsets it is connected to

- simulated annealing gives good results

29

- Figures of an example

30

Mapping of Grid-Oriented Problems

- grid points geometrically adjacent

- partitioning grid into subdomains (subgrids)

- assigning them to PEs; each PE performs the computation associated with its subdomain

- dependency communication restricted to perimeters of subdomains

- Model (RooseDries93): structured grid

- time integration of finite-difference, finite-volume discretizations of PDEs on a structured grid

- 2D structured grid partitioned into subdomains of equal size

- each PE locally updates interior grid points

- boundary grid points need neighbor information

31

- Figures about grid partitioning and communication requirements

32

Analysis (communication, load balance, numerical efficiency)

- Communication overhead depends upon

- size of subdomains (large subdomains, small overhead): perimeter-to-area ratio in 2D, surface-to-volume ratio in 3D

- communication volume proportional to perimeter of subdomain

- for a fixed-size subdomain, perimeter is minimal if square

- blockwise partitioning into square subregions leads to minimum communication volume

- communication requirements not always isotropic

- machine characteristics

- influence communication-to-computation ratio

- problem characteristics

- dense problems: load imbalance predominates

- sparse problems: communication predominates
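The perimeter argument can be checked by counting, for a hypothetical n x n grid with a 5-point stencil, the grid edges cut by a partition (each cut edge means one value exchanged per iteration). Row strips and square blocks on the same number of PEs are compared; this is a counting sketch with illustrative names, not the RooseDries93 model.

```python
from typing import Callable

Owner = Callable[[int, int], int]


def strip_owner(n: int, p: int) -> Owner:
    # p horizontal strips of n/p rows each (assumes p divides n)
    return lambda i, j: i * p // n


def block_owner(n: int, q: int) -> Owner:
    # q x q square blocks, i.e. p = q*q PEs (assumes q divides n)
    b = n // q
    return lambda i, j: (i // b) * q + (j // b)


def cut_edges(n: int, owner: Owner) -> int:
    # Count 5-point-stencil neighbor pairs whose two points live on different PEs
    cut = 0
    for i in range(n):
        for j in range(n):
            if i + 1 < n and owner(i, j) != owner(i + 1, j):
                cut += 1
            if j + 1 < n and owner(i, j) != owner(i, j + 1):
                cut += 1
    return cut


n = 16
print("strips:", cut_edges(n, strip_owner(n, 16)))  # 15 cut lines of length 16
print("blocks:", cut_edges(n, block_owner(n, 4)))   # 3 + 3 cut lines of length 16
```

In general, strips cut (p - 1) * n edges and square blocks 2 * (sqrt(p) - 1) * n, so blocks cut fewer edges for every perfect-square p > 1.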

33

Analysis (continued)

- Load Imbalance

- minimal when block partitioning into square subdomains

- work per grid point may vary (cannot predict load imbalance)

- mathematical models differ in various parts of the domain

- boundary regions: work differs from interior cells

- cells distributed almost equally among processors, achieved when subdomains are square

34

Analysis (continued)

- Numerical Efficiency

- accuracy of results differs (depending on algorithm, problem nature)

- numerical properties of inherently parallel algorithms not affected by partitioning strategy (e.g., Jacobi relaxation, as opposed to Gauss-Seidel, which has better convergence properties)

- Runge-Kutta: update overlap regions only after a complete integration step (omitting some communication); high speedup, small convergence degradation; blocks determined by PEs and not by domain geometry

- block tridiagonal systems (Thomas algorithm: sequential, pipelined); parallel solvers use Gaussian elimination, cyclic reduction; distribution of sequential parts over PEs at the expense of increased communication
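The remark about inherently parallel algorithms can be demonstrated for Jacobi relaxation on a hypothetical 1D model problem -u'' = f with fixed end values. Because a Jacobi sweep reads only values from the previous iterate, a block-partitioned version with one ghost cell per side reproduces the unpartitioned iterates exactly, whatever the number of blocks. Gauss-Seidel, by contrast, reads values updated earlier in the same sweep, so its iterates depend on the order in which blocks are processed. This is a serial simulation with illustrative names; no message passing is involved.

```python
from typing import List


def jacobi_sweep(u: List[float], f: List[float], h: float) -> List[float]:
    # One Jacobi sweep for -u'' = f with fixed (Dirichlet) end values: reads only old values
    new = u[:]
    for i in range(1, len(u) - 1):
        new[i] = 0.5 * (u[i - 1] + u[i + 1] + h * h * f[i])
    return new


def partitioned_jacobi(u: List[float], f: List[float], h: float,
                       iters: int, n_parts: int) -> List[float]:
    # Serial simulation of n_parts subdomains, each holding one ghost cell per side
    n = len(u)
    bounds = [1 + k * (n - 2) // n_parts for k in range(n_parts + 1)]
    for _ in range(iters):
        old = u[:]  # stands in for the halo exchange of the previous iterate
        new = u[:]
        for k in range(n_parts):
            lo, hi = bounds[k], bounds[k + 1]
            local = old[lo - 1:hi + 1]  # owned points plus ghost cells
            for i in range(1, len(local) - 1):
                g = lo + i - 1  # global index of local point i
                new[g] = 0.5 * (local[i - 1] + local[i + 1] + h * h * f[g])
        u = new
    return u


n, h = 10, 1.0 / 9
f = [1.0] * n
ref = [0.0] * n
for _ in range(50):
    ref = jacobi_sweep(ref, f, h)
print(partitioned_jacobi([0.0] * n, f, h, iters=50, n_parts=3) == ref)
```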

35

Analysis (continued)

- Numerical Efficiency (continued)

- domain decomposition algorithms for PDEs (contain algorithmic overhead)

- Schwarz domain decomposition: overlapping subdomains (iterative process, approximate boundaries)

- Schur complement: non-overlapping subdomains (borders computed first)

36

Hierarchical Nature of Multigrid Algorithms

- Obtaining acceptable performance levels requires optimization techniques that address the characteristics of each architectural class (Matheson Tarjan93)

- model study for each architectural class

- Conclusions

- fine-grain machines (high variable communication cost): optimize domain-to-PE topology mapping

- medium-grain machines (high fixed communication cost): optimize domain partition (well-shaped, minimal perimeter, small number of neighbors)

- parallel algorithms require accurate subspace decomposition and large PEs to provide practical alternatives to standard algorithms

37

Optimal Partitioning -- structured grids

- Structured problems (use structured grids)

- unstructured problems (structured on subdomains)

- Regular computations on a 2D mesh, MIMD (Lee90)

- workload and communication pattern same at each point

- communication depends upon total communication required and the actual pattern of communication (communication neighbors, underlying architecture)

- CCR (communication-to-computation ratio) an important factor in performance evaluation (given a stencil, generate the best shape)

- CCR depends upon stencil, partition shape, grid (e.g., diamond: 5-point, hexagon: 7-point, square: 9-point star)

- (CCR max does not guarantee minimum execution time; CCR is a better performance indicator in shared memory than in message passing; CCR proportional to aspect ratio of partition)

38

Optimal Partitioning -- structured grids (continued)

- Optimal partitionings (ReedAdamsPatrick87)

- formalize relationship of stencil, shape, underlying architecture

- isolated evaluation of components yields suboptimal performance

- message passing: small vs. large packets give opposite orderings of results (stencil, shape)

- type of interconnection network important (grid-to-network mapping must support the interpartition communication pattern; otherwise performance degrades)

39

- Figures about stencil

40

Mapping Algorithms for Unstructured Grid-Oriented Problems

- Grids: structured or unstructured; static or adaptive; single-level or multilevel (multigrid)

- Partitioning

- static: grid size and work fixed during computation

- dynamic: grid size and work change

- iterative static (quasi-dynamic): uses adaptive refinement (grid fixed for each iteration; load balancing after each grid refinement adds overhead)

- Mapping unstructured grids (complex)

- partition grid into subgrids; if communication is

- low, use fine grids (subgrids PEs)

- high, use coarse grids (subgrids >> PEs)

41

- Figures about Element Airfoil

42

Requirements for Mapping

- Subgrid dependency topology should match machine communication topology

- easy for a fully connected machine; in general, nearest-neighbor mapping (fast, decreases risk of link contention)

- mutually dependent subgrids mapped onto PEs that communicate easily (fast, close)

- time for local computation depends upon the number of grid points (if each point has the same amount of work, then each subgrid should have the same number of points)

- time for interface operations depends on the points on the boundary of the subgrid (length of boundary and number of adjacent subgrids should be minimized)

43

Classification of Partitioning Algorithms for Unstructured Grids

- Optimization techniques (cost function)

- heuristic techniques

- clustering techniques

- graph partitioning

- geometry based techniques

44

Optimization techniques

- Minimize cost function: mapping communication, load imbalance

- Global combinatorial optimization problem

- grid of n points mapped onto p processors

- search space cardinality (impractical)

- total enumeration, symmetry, branch-and-bound (not practical enough)

- Suboptimal solutions

- simulated annealing (expensive) (Kirkpatrick83)

- genetic algorithms (robust, large amount of time)

45

Heuristic Techniques

- Simplify model (emphasize critical aspects)

- do not minimize communication and load imbalance simultaneously (model one term explicitly while the other implicitly guides the search)

- e.g., explicitly minimize communication while implicitly providing equally sized subgrids

46

Clustering

- Form clusters with high intra-cluster dependencies and low inter-cluster communication

- nearest-neighbor mapping algorithm (Sadayappan, et al.90)

- group grid points into clusters

- assign clusters to PEs such that nearest-neighbor points are assigned to the same or nearest PE

- explicitly minimize load imbalance (boundary refinement)

- implicitly minimize communication by search strategy

- bandwidth reduction algorithms (Farhat92)

- reduce local bandwidth at the expense of bad aspect ratio (sparse matrix technique of reordering the equations and unknowns)

47

Graph Partitioning

- Grid-oriented problems described by dependency graphs

- grid partitioning problems formulated as graph partitioning problems

- (each vertex corresponds to a grid point; vertex weight = point weight; edge weight = communication volume)

- given: an undirected graph G(V, E) with vertex weights (equal), edge weights, and p positive integers n1, ..., np such that n1 + ... + np = |V|

- partition the vertex set V into p disjoint subsets of sizes n1, ..., np (or n1 ≈ ... ≈ np) such that the sum of weights of edges connecting different subsets is minimal

- NP-complete (heuristics, near-optimal solutions)

- Kernighan-Lin algorithms (graph with equal vertex weights partitioned into 2 or more subgraphs)

- parallelized graph partitioning algorithm for message-passing MIMD (Gilbert, et al.87)

48

Spectral Bisection Algorithm

- Based upon results from spectral graph theory, in which eigenvectors of a matrix are used to bisect a graph

- applied to the interdependency graph of a grid

- initial algorithm (Pothen, et al.90)

- graph bisected using the eigenvector corresponding to the 2nd smallest eigenvalue of the Laplacian matrix of the graph

- algorithm for weighted vertices and edges (HendricksonLeland93)

- graph partitioned into eight subgraphs at once, applied recursively to subgraphs

- improved algorithm for various edge weights (RooseDri93)

- better partitions for dynamic unstructured grids with good load balance

- reduces remapping of elements by extending the initial graph with virtual vertices
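A minimal sketch of the basic bisection step described above: compute the eigenvector for the second-smallest Laplacian eigenvalue (the Fiedler vector) and split the vertices at its median. To stay dependency-free, the sketch assumes power iteration on the shifted matrix shift*I - L with the constant eigenvector projected out; that solver choice and the function names are assumptions (practical codes typically use Lanczos-type eigensolvers), and vertex/edge weights are ignored.

```python
import random
from typing import List, Set, Tuple


def fiedler_vector(n: int, edges: List[Tuple[int, int]],
                   iters: int = 2000, seed: int = 0) -> List[float]:
    adj: List[List[int]] = [[] for _ in range(n)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    # 2 * max degree bounds the Laplacian spectrum, so shift*I - L is positive semidefinite
    shift = 2 * max(len(a) for a in adj)
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        mean = sum(x) / n
        x = [xi - mean for xi in x]  # remove the constant eigenvector (eigenvalue 0 of L)
        # y = (shift*I - L) x, with (L x)_i = deg(i) * x_i - sum of x_j over neighbors j
        y = [shift * x[i] - (len(adj[i]) * x[i] - sum(x[j] for j in adj[i]))
             for i in range(n)]
        norm = sum(v * v for v in y) ** 0.5
        x = [v / norm for v in y]
    return x


def spectral_bisection(n: int, edges: List[Tuple[int, int]]) -> Tuple[Set[int], Set[int]]:
    x = fiedler_vector(n, edges)
    order = sorted(range(n), key=lambda i: x[i])
    first = set(order[: n // 2])  # median split gives two halves of equal size
    return first, set(range(n)) - first


path = [(i, i + 1) for i in range(5)]  # path graph on 6 vertices
print(spectral_bisection(6, path))
```

Applying `spectral_bisection` recursively to each half gives the recursive variant; the eight-way and weighted extensions cited above are not covered by this sketch.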

49

- Figures about RSB algorithms

50

Geometry Based Techniques

- Partitioning based on geometrical information

- geometrical information about grid points

- grid bisected into subgrids with equal numbers of grid points (applied recursively)

- less expensive, produces acceptable partitionings

- spectral bisection algorithm better for large numbers of grid points and higher accuracy (expensive)

- ORB, orthogonal recursive bisection (half of the bodies in each subspace)

- bisection along x, y, z coordinates

- largest dimension chosen to split

- used by FMA, AFMA
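ORB as described above (median split along the largest dimension, applied recursively) fits in a few lines. Points are plain coordinate tuples with unit weight here; weighted bodies, as in FMA-style codes, would be split at the weighted median instead, which this sketch does not do. The function name is illustrative.

```python
from typing import List, Sequence

Point = Sequence[float]


def orb(points: List[Point], levels: int) -> List[List[Point]]:
    """Orthogonal recursive bisection into up to 2**levels parts of (nearly) equal size."""
    if levels == 0 or len(points) < 2:
        return [points]
    dims = len(points[0])
    # Split along the coordinate with the largest extent
    extent = [max(pt[d] for pt in points) - min(pt[d] for pt in points) for d in range(dims)]
    axis = extent.index(max(extent))
    ordered = sorted(points, key=lambda pt: pt[axis])
    mid = len(ordered) // 2  # median cut: half of the bodies in each subspace
    return orb(ordered[:mid], levels - 1) + orb(ordered[mid:], levels - 1)


# Hypothetical 8 x 2 grid of points, elongated along x
pts = [(float(x), float(y)) for x in range(8) for y in range(2)]
for part in orb(pts, levels=2):
    print(sorted({x for x, _ in part}))
```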

51

Geometry Based Techniques (continued)

- Inertial recursive bisection

- partitionings do not depend upon the coordinate system used but upon problem characteristics

- grid is bisected orthogonal to the principal inertia direction

- the principal inertia direction of a set of objects is the direction for which the rotational moment of inertia is minimal when that direction is taken as the rotation axis

- expensive but accurate results
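A two-dimensional sketch of one inertial bisection step. For points in the plane with unit weights, the principal inertia direction is the eigenvector of the 2 x 2 scatter matrix with the largest eigenvalue, whose angle has the closed form theta = 0.5 * atan2(2*Sxy, Sxx - Syy). Restricting to 2D and to unit weights are assumptions of this sketch; the function name is illustrative.

```python
import math
from typing import List, Tuple

Point2 = Tuple[float, float]


def inertial_bisection(points: List[Point2]) -> Tuple[List[Point2], List[Point2]]:
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    sxx = sum((x - cx) ** 2 for x, _ in points)
    syy = sum((y - cy) ** 2 for _, y in points)
    sxy = sum((x - cx) * (y - cy) for x, y in points)
    # Principal axis = direction of largest spread = axis of minimal rotational inertia
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    dx, dy = math.cos(theta), math.sin(theta)
    # Project onto that axis and cut at the median; the cut line is orthogonal to the axis
    order = sorted(range(n), key=lambda i: (points[i][0] - cx) * dx + (points[i][1] - cy) * dy)
    half = n // 2
    return [points[i] for i in order[:half]], [points[i] for i in order[half:]]


# Hypothetical point cloud stretched along the diagonal y = x
cloud = [(float(t), t + s) for t in range(8) for s in (-0.2, 0.2)]
left, right = inertial_bisection(cloud)
print(sorted({x for x, _ in left}), sorted({x for x, _ in right}))
```

Rotating or translating the cloud leaves the resulting partition unchanged, which is the coordinate-independence property noted above.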

52

- Figures about partitionings

53

Comparison between Spectral Bisection and Coordinate Bisection

54

Optimal Partitioning Techniques

- Static load balancing (Berger87)

- binary decomposition (rectangles with equal work, easy data structure)

- optimal depth determined by mapping communication costs onto different architectures

- cardinality (quality of mapping): edges of the problem graph that fall on edges of the processor graph, divided by total edges (cardinality = 1 means minimum communication)

- regular domains are easy (map with binary Gray code onto hypercube)

- irregular domains are difficult (neighbor regions not on neighbor nodes)
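For a regular domain, the Gray-code mapping can be checked against the cardinality measure defined above. The sketch assumes a ring of 2^d regions placed on a d-dimensional hypercube; the ring and the function names are illustrative.

```python
from typing import Dict, List, Tuple


def gray(i: int) -> int:
    # Binary-reflected Gray code: consecutive values differ in exactly one bit
    return i ^ (i >> 1)


def cardinality(problem_edges: List[Tuple[int, int]], mapping: Dict[int, int]) -> float:
    # Fraction of problem-graph edges that land on hypercube links (labels one bit apart)
    on_links = sum(1 for u, v in problem_edges if bin(mapping[u] ^ mapping[v]).count("1") == 1)
    return on_links / len(problem_edges)


d = 3
n = 2 ** d
ring = [(i, (i + 1) % n) for i in range(n)]  # 1D periodic chain of regions
print(cardinality(ring, {i: gray(i) for i in range(n)}))  # Gray-code placement
print(cardinality(ring, {i: i for i in range(n)}))        # plain binary placement
```

The plain binary placement puts half of the neighbor pairs on non-adjacent nodes, which is the kind of degradation the irregular-domain bullet describes.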

55

Optimal Partitioning Techniques (continued)

- Dynamic load balancing

- Nearest-neighbor (move gradually towards balance)

- partial workload information, modify domains iteratively

- uses statistical methods for analysis (too slow)

- Hierarchical master-slave (DragonGustafson89)

- ideal balance at synchronization points (hypercube)

- divide and conquer (time, space, effectiveness)

- all nodes same work within the granularity of task size

- each node in a subcube knows the extreme values

- if work redistribution is needed: the subcube with less work takes its neighbor's extreme particles, the subcube with more work removes its extreme particles, and a new boundary is established

- process moves to a finer level
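One simple instance of nearest-neighbor balancing is first-order diffusion, in which each PE repeatedly exchanges a fraction of its load difference with its neighbors. The sketch assumes a ring of PEs, continuously divisible load, and a diffusion coefficient alpha = 0.25 (on a ring, the iteration converges for 0 < alpha < 0.5); it is not necessarily the scheme used in the works cited above.

```python
from typing import List


def diffusion_step(load: List[float], alpha: float = 0.25) -> List[float]:
    # Each PE moves alpha times its load difference to/from each ring neighbor
    p = len(load)
    return [load[i] + alpha * (load[(i - 1) % p] + load[(i + 1) % p] - 2.0 * load[i])
            for i in range(p)]


load = [100.0] + [0.0] * 7  # hypothetical: all work starts on PE 0
for _ in range(200):
    load = diffusion_step(load)
print([round(w, 3) for w in load])  # every PE approaches 100 / 8 = 12.5
```

Each step uses only neighbor information, and total load is conserved because every amount one PE sends is received by its neighbor.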

56

Scheduling Techniques (improving load balancing)

- Factoring (parallel loop iterations) (Hummel, et al.92)

- minimize synchronization (coordinated global scheduling)

- iterations dynamically scheduled in decreasing chunks (sizes decrease exponentially rather than linearly)

- granularity large (self-scheduling), small (static scheduling)

- Tiling (statistical methods) (Ramsadayapan90)

- minimize communication

- study stencil, partition shape, interconnection topology

- Fractiling (factoring + tiling) (Hummel93)

- load balancing (minimize synchronization, minimize communication)

- chunk size (factoring rule)

- chunk shape (tiling)

- exploit properties found in fractals
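The factoring rule can be sketched as a chunk-size schedule: in each batch, half of the remaining iterations are split evenly into p chunks, so chunk sizes shrink geometrically. The fixed factor of 2 (half of the remaining iterations per batch) is an assumption here, and the function only computes sizes; it does not perform the run-time scheduling itself.

```python
import math
from typing import List


def factoring_chunks(n_iters: int, p: int) -> List[int]:
    """Chunk sizes in the order they would be handed out to p workers."""
    chunks: List[int] = []
    remaining = n_iters
    while remaining > 0:
        # One batch: p equal chunks covering about half of the remaining iterations
        size = max(1, math.ceil(remaining / (2 * p)))
        for _ in range(p):
            if remaining == 0:
                break
            take = min(size, remaining)
            chunks.append(take)
            remaining -= take
    return chunks


print(factoring_chunks(100, 4))
```

For 100 iterations on 4 workers this gives four chunks each of sizes 13, 6, 3, 2, and 1: large early chunks keep scheduling overhead low, and small late chunks even out the finishing times.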