Simultaneous Multithreading (SMT) - PowerPoint PPT Presentation



1

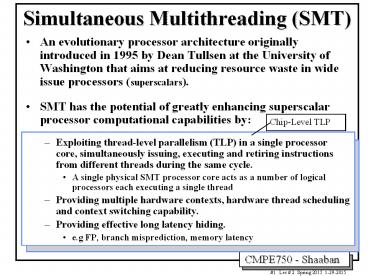

Simultaneous Multithreading (SMT)

- An evolutionary processor architecture, originally introduced in 1995 by Dean Tullsen at the University of Washington, that aims at reducing resource waste in wide-issue processors (superscalars).

- SMT has the potential of greatly enhancing superscalar processor computational capabilities by:

- Exploiting thread-level parallelism (TLP) in a single processor core, simultaneously issuing, executing and retiring instructions from different threads during the same cycle.

- A single physical SMT processor core acts as a number of logical processors, each executing a single thread.

- Providing multiple hardware contexts, hardware thread scheduling and context switching capability.

- Providing effective long-latency hiding.

- e.g. FP, branch misprediction, memory latency

Chip-Level TLP

2

SMT Issues

- SMT CPU performance gain potential.

- Modifications to Superscalar CPU architecture to support SMT.

- SMT performance evaluation vs. Fine-grain multithreading, Superscalar, Chip Multiprocessors.

- Hardware techniques to improve SMT performance:

- Optimal level one cache configuration for SMT.

- SMT thread instruction fetch, issue policies.

- Instruction recycling (reuse) of decoded instructions.

- Software techniques:

- Compiler optimizations for SMT.

- Software-directed register deallocation.

- Operating system behavior and optimization.

- SMT support for fine-grain synchronization.

- SMT as a viable architecture for network processors.

- Current SMT implementation: Intel's Hyper-Threading (2-way SMT) microarchitecture and performance in compute-intensive workloads.

Ref. Papers SMT-1, SMT-2

SMT-3

SMT-7

SMT-4

SMT-8

SMT-9

3

Evolution of Microprocessors

General Purpose Processors (GPPs)

Multiple Issue (CPI < 1)

Superscalar/VLIW/SMT/CMP

Pipelined (single issue)

Multi-cycle

Original (2002) Intel Predictions: 15 GHz

1 GHz to ???? GHz

IPC

T = I x CPI x C

Source: John P. Chen, Intel Labs

Single-issue Processor = Scalar Processor

Instructions Per Cycle (IPC) = 1/CPI
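The execution-time relation on this slide (T = I x CPI x C, with IPC = 1/CPI) can be checked with a few lines of Python. This is only an illustrative sketch: the function names and the 1-billion-instruction, 1 GHz workload are assumptions made for the example, not figures from the slides.

```python
def cpu_time(instructions, cpi, cycle_time_s):
    """Execution time T = I x CPI x C, in seconds."""
    return instructions * cpi * cycle_time_s


def ipc_from_cpi(cpi):
    """Instructions per cycle is the reciprocal of cycles per instruction."""
    return 1.0 / cpi


# Hypothetical workload: 1e9 instructions on a 1 GHz clock (C = 1 ns).
scalar_time = cpu_time(1_000_000_000, 1.0, 1e-9)       # single-issue, CPI = 1
superscalar_time = cpu_time(1_000_000_000, 0.5, 1e-9)  # multiple issue, CPI < 1

print(f"scalar: {scalar_time:.2f} s, superscalar: {superscalar_time:.2f} s")
print(f"IPC at CPI = 0.25: {ipc_from_cpi(0.25):.1f}")
```

With the clock rate (1/C) no longer scaling quickly, the remaining lever in this equation is CPI, which is what multiple-issue and multithreaded designs target.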

4

Microprocessor Frequency Trend

Reality Check: Clock frequency scaling is slowing down! (Did silicon finally hit the wall?)

Why? 1- Power leakage 2- Clock distribution delays

Result: Deeper Pipelines, Longer stalls, Higher CPI (lowers effective performance per cycle)

Chip-Level TLP

- Possible Solutions?

- Exploit Thread-Level Parallelism (TLP) at the chip level (SMT/CMP)

- Utilize/integrate more-specialized computing elements other than GPPs

T = I x CPI x C

5

Parallelism in Microprocessor VLSI Generations

(ILP)

(TLP)

Superscalar/VLIW: CPI < 1

Multiple micro-operations per cycle (multi-cycle non-pipelined)

Simultaneous Multithreading (SMT): e.g. Intel's Hyper-Threading

Chip-Multiprocessors (CMPs): e.g. IBM Power 4, 5; Intel Pentium D, Core 2; AMD Athlon 64 X2, Dual Core Opteron; Sun UltraSparc T1 (Niagara)

Single-issue Pipelined: CPI = 1

AKA Operation-Level Parallelism

Not Pipelined: CPI >> 1

Chip-Level Parallel Processing

Even more important due to slowing clock rate

increase

Thread-Level Parallelism (TLP)

Single Thread

Improving microprocessor generation performance

by exploiting more levels of parallelism

6

Microprocessor Architecture Trends

General Purpose Processor (GPP)

Single Threaded

CMPs

(Single or Multi-Threaded)

(e.g. IBM Power 4/5, AMD X2, X3, X4, Intel Core 2)

(SMT)

Chip-level TLP

e.g. Intel's HyperThreading (P4)

SMT/CMPs

e.g. IBM Power5, 6, 7; Intel Pentium D; Sun Niagara (UltraSparc T1); Intel Nehalem (Core i7)

7

CPU Architecture Evolution

- Single Threaded/ Single Issue Pipeline

- Traditional 5-stage integer pipeline.

- Increases throughput: ideal CPI = 1

8

CPU Architecture Evolution

- Single-Threaded/Superscalar Architectures

- Fetch, issue, execute, etc. more than one instruction per cycle (CPI < 1).

- Limited by instruction-level parallelism (ILP).

Due to single thread limitations

9

Hardware-Based Speculation

Commit or Retirement

(In Order)

FIFO

Usually implemented as a circular buffer

Instructions to issue in Order Instruction Queue

(IQ)

Speculative Execution + Tomasulo's Algorithm

Next to commit

Speculative Tomasulo

Store Results

Speculative Tomasulo-based Processor

4th Edition page 107 (3rd Edition page 228)

10

Four Steps of Speculative Tomasulo Algorithm

- 1. Issue (In-order): Get an instruction from the Instruction Queue.

- If a reservation station and a reorder buffer slot are free, issue the instruction and send the operands and the reorder buffer number for the destination (this stage is sometimes called "dispatch").

- 2. Execution (out-of-order): Operate on operands (EX).

- When both operands are ready, then execute; if not ready, watch the CDB for the result; when both operands are in the reservation station, execute; checks RAW (sometimes called "issue").

- 3. Write result (out-of-order): Finish execution (WB).

- Write on the Common Data Bus (CDB) to all awaiting FUs and the reorder buffer; mark the reservation station available.

- 4. Commit (In-order): Update registers, memory with reorder buffer result.

- When an instruction is at the head of the reorder buffer and the result is present, update the register with the result (or store to memory) and remove the instruction from the reorder buffer.

- A mispredicted branch at the head of the reorder buffer flushes the reorder buffer (cancels speculated instructions after the branch).

- Instructions issue in order, execute (EX) and write result (WB) out of order, but must commit in order.
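The in-order commit rule can be illustrated with a toy reorder buffer. This is a minimal sketch under simplifying assumptions: reservation stations, the CDB and memory are not modeled, at most one instruction commits per call, and the names `ReorderBuffer`, `issue`, `write_result` and `commit` are hypothetical, chosen only for this example.

```python
from collections import deque


class ReorderBuffer:
    """Toy reorder buffer: in-order issue, out-of-order finish, in-order commit."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = deque()   # FIFO; the head is the oldest instruction
        self.registers = {}      # architectural register state

    def issue(self, dest, is_branch=False):
        """Allocate a slot in program order; None means no free slot (stall)."""
        if len(self.entries) >= self.capacity:
            return None
        entry = {"dest": dest, "value": None, "done": False,
                 "is_branch": is_branch, "mispredicted": False}
        self.entries.append(entry)
        return entry

    def write_result(self, entry, value=None, mispredicted=False):
        """Record a finished result; may be called in any order."""
        entry["value"] = value
        entry["done"] = True
        entry["mispredicted"] = mispredicted

    def commit(self):
        """Try to commit the instruction at the head of the buffer."""
        if not self.entries or not self.entries[0]["done"]:
            return "stall"
        head = self.entries.popleft()
        if head["is_branch"]:
            if head["mispredicted"]:
                self.entries.clear()   # cancel speculated instructions
                return "flush"
            return "branch"
        self.registers[head["dest"]] = head["value"]
        return "commit"


rob = ReorderBuffer(capacity=4)
a = rob.issue("r1")
b = rob.issue("r2")
rob.write_result(b, 20)   # the younger instruction finishes first
first = rob.commit()      # head (a) has no result yet, so nothing commits
rob.write_result(a, 10)
second = rob.commit()     # a commits
third = rob.commit()      # then b commits, preserving program order
print(first, second, third, rob.registers)
```

Because registers are only written at commit, discarding the buffer on a mispredicted branch leaves the architectural state untouched.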

Stage 0 Instruction Fetch (IF) No changes,

in-order

Includes data MEM read

No write to registers or memory in WB

No WB for stores or branches

i.e Reservation Stations

Successfully completed instructions write to

registers and memory (stores) here

Mispredicted Branch Handling

4th Edition pages 106-108 (3rd Edition pages

227-229)

11

Advanced CPU Architectures

- VLIW: Intel/HP IA-64

- Explicitly Parallel Instruction Computing (EPIC)

- Strengths:

- Allows for a high level of instruction parallelism (ILP).

- Takes a lot of the dependency analysis out of HW and places focus on smart compilers.

- Weaknesses:

- Limited by instruction-level parallelism (ILP) in a single thread.

- Keeping Functional Units (FUs) busy (control hazards).

- Static FU scheduling limits performance gains.

- Resulting overall performance heavily depends on compiler performance.

12

Superscalar Architecture Limitations

Issue Slot Waste Classification

- Empty or wasted issue slots can be defined as either vertical waste or horizontal waste:

- Vertical waste is introduced when the processor issues no instructions in a cycle.

- Horizontal waste occurs when not all issue slots can be filled in a cycle.
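The two kinds of waste can be counted directly from a per-cycle issue trace. The sketch below is illustrative only; the function name `classify_issue_waste` and the six-cycle trace are assumptions made up for the example.

```python
def classify_issue_waste(issued_per_cycle, issue_width):
    """Split wasted issue slots into vertical and horizontal waste."""
    vertical = 0
    horizontal = 0
    for issued in issued_per_cycle:
        if not 0 <= issued <= issue_width:
            raise ValueError("issued count must be between 0 and issue_width")
        if issued == 0:
            vertical += issue_width             # whole cycle wasted
        else:
            horizontal += issue_width - issued  # partially filled cycle
    return {
        "total": issue_width * len(issued_per_cycle),
        "used": sum(issued_per_cycle),
        "vertical": vertical,
        "horizontal": horizontal,
    }


# Hypothetical trace for a 4-issue superscalar: instructions issued per cycle.
trace = [4, 0, 2, 1, 0, 3]
result = classify_issue_waste(trace, issue_width=4)
print(result)
```

In this made-up trace, 10 of 24 slots are used; the two empty cycles account for 8 slots of vertical waste and the partially filled cycles for 6 slots of horizontal waste.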

Why not 8-issue?

Example: 4-Issue Superscalar, Ideal IPC = 4, Ideal CPI = .25

Instructions Per Cycle = IPC = 1/CPI

Also applies to VLIW

Result of issue slot waste: Actual Performance << Peak Performance

13

Sources of Unused Issue Cycles in an 8-issue

Superscalar Processor.

(wasted)

Ideal Instructions Per Cycle, IPC = 8. Here real IPC is about 1.5

(CPI = 1/8)

(18.75% of ideal IPC)

Average IPC = 1.5 instructions/cycle issue rate

Real IPC << Ideal IPC: 1.5 << 8

81% of issue slots wasted

Processor busy represents the utilized issue slots; all others represent wasted issue slots. 61% of the wasted cycles are vertical waste, the remainder are horizontal waste. Workload: SPEC92 benchmark suite.
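The percentages on this slide follow from the measured IPC and the issue width. A short sketch of the arithmetic, using the slide's figures (IPC of about 1.5 on an 8-issue machine, 61% of the waste vertical); the function name is an assumption for illustration.

```python
def issue_slot_utilization(real_ipc, issue_width):
    """Fraction of issue slots actually used."""
    return real_ipc / issue_width


utilization = issue_slot_utilization(1.5, 8)  # fraction of ideal IPC achieved
wasted = 1.0 - utilization                    # fraction of issue slots wasted
vertical = 0.61 * wasted                      # completely empty cycles
horizontal = wasted - vertical                # partially filled cycles

print(f"utilized:   {utilization:.2%}")
print(f"wasted:     {wasted:.2%}")
print(f"vertical:   {vertical:.2%} of all issue slots")
print(f"horizontal: {horizontal:.2%} of all issue slots")
```

Under these figures, roughly half of all issue slots are lost to cycles in which nothing issues at all, which is the part of the waste that any form of multithreading can attack.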

SMT-1

Source: "Simultaneous Multithreading: Maximizing On-Chip Parallelism," Dean Tullsen et al., Proceedings of the 22nd Annual International Symposium on Computer Architecture, June 1995, pages 392-403.

14

Superscalar Architecture Limitations

- All possible causes of wasted issue slots, and latency-hiding or latency-reducing techniques that can reduce the number of cycles wasted by each cause.

Main Issue: One thread leads to limited ILP (cannot fill issue slots)

Solution: Exploit Thread-Level Parallelism (TLP) within a single microprocessor chip

- Simultaneous Multithreaded (SMT) Processor:

- The processor issues and executes instructions from a number of threads, creating a number of logical processors within a single physical processor.

- e.g. Intel's HyperThreading (HT): each physical processor executes instructions from two threads.

- Chip-Multiprocessors (CMPs):

- Integrate two or more complete processor cores on the same chip (die).

- Each core runs a different thread (or program).

- Limited ILP is still a problem in each core (Solution: combine this approach with SMT).

How?

AND/OR

SMT-1


15

Advanced CPU Architectures

- Single Chip Multiprocessors (CMPs)

- Strengths:

- Create a single processor block and duplicate.

- Exploits Thread-Level Parallelism.

- Takes a lot of the dependency analysis out of HW and places focus on smart compilers.

- Weaknesses:

- Performance within each processor still limited by individual thread performance (ILP).

- High power requirements using current VLSI processes.

- Almost entire processor cores are replicated on chip.

- May run at lower clock rates to reduce heat/power consumption.

AKA Multi-Core Processors Each Single Threaded

at chip level

e.g. IBM Power 4/5; Intel Pentium D, Core Duo, Core 2 (Conroe), Core i7; AMD Athlon 64 X2, X3, X4, Dual/quad Core Opteron; Sun UltraSparc T1 (Niagara)

16

Advanced CPU Architectures

Single Chip Multiprocessor (CMP)

Or 4-way

Shared L2 Or L3 Cache

CMP with n cores

17

Current Dual-Core Chip-Multiprocessor (CMP)

Architectures

Two Dies Shared Package Private Caches Private

System Interface

Single Die Private Caches Shared System Interface

Single Die Shared L2 Cache

L2 Or L3

On-chip crossbar/switch

FSB

Cores communicate using shared cache (Lowest communication latency). Examples: IBM POWER4/5; Intel Pentium Core Duo (Yonah), Conroe; Sun UltraSparc T1 (Niagara); Quad Core AMD K10 (shared L3 cache)

Cores communicate using on-chip interconnects (shared system interface). Examples: AMD Dual Core Opteron, Athlon 64 X2; Intel Itanium2 (Montecito)

Cores communicate over external Front Side Bus (FSB) (Highest communication latency). Example: Intel Pentium D

Source: Real World Technologies, http://www.realworldtech.com/page.cfm?ArticleID=RWT101405234615

18

Advanced CPU Architectures

- Fine-grained or Traditional Multithreaded Processors

- Multiple hardware contexts (PC, SP, and registers).

- Only one context or thread issues instructions each cycle.

- Performance limited by Instruction-Level Parallelism (ILP) within each individual thread:

- Can reduce some of the vertical issue slot waste.

- No reduction in horizontal issue slot waste.

- Example Architecture: The Tera Computer System (Cray MTA 2)

19

Fine-grain or Traditional Multithreaded

Processors The Tera (Cray MTA 2) Computer System

- The Tera computer system is a shared memory multiprocessor that can accommodate up to 256 processors.

- Each Tera processor is fine-grain multithreaded:

- Each processor can issue one 3-operation Long Instruction Word (LIW) every 3 ns cycle (333 MHz) from among as many as 128 distinct instruction streams (hardware threads), thereby hiding up to 128 cycles (384 ns) of memory latency.

- In addition, each stream can issue as many as eight memory references without waiting for earlier ones to finish, further augmenting the memory latency tolerance of the processor.

- A stream implements a load/store architecture with three addressing modes and 31 general-purpose 64-bit registers.

- The instructions are 64 bits wide and can contain three operations: a memory reference operation (M-unit operation or simply M-op for short), an arithmetic or logical operation (A-op), and a branch or simple arithmetic or logical operation (C-op).
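The latency-hiding figure quoted for the Tera processor is simple arithmetic on the cycle time and the number of streams. The sketch below assumes a simplified reading in which the processor issues from a different stream each cycle, so a given stream issues again only after all the others have had a turn; the function names are hypothetical.

```python
def clock_mhz(cycle_ns):
    """Clock frequency in MHz for a given cycle time in nanoseconds."""
    return 1000.0 / cycle_ns


def latency_hidden_ns(streams, cycle_ns):
    """Time between two issues of the same stream when every stream
    takes one turn in between (simplified round-robin assumption)."""
    return streams * cycle_ns


print(f"clock:          {clock_mhz(3):.0f} MHz")
print(f"latency hidden: {latency_hidden_ns(128, 3)} ns")
```

With a 3 ns cycle and 128 streams this reproduces the 333 MHz and 384 ns figures given above.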

128 threads per core

From one thread

Source: http://www.cscs.westminster.ac.uk/seamang/PAR/tera_overview.html

20

SMT Simultaneous Multithreading

- Multiple Hardware Contexts (or threads) running at the same time (HW context: ISA registers, PC, and SP etc.).

- A single physical SMT processor core acts (and reports to the operating system) as a number of logical processors, each executing a single thread.

- Reduces both horizontal and vertical waste by having multiple threads keeping functional units busy during every cycle.

- Builds on top of current time-proven advancements in CPU design: superscalar, dynamic scheduling, hardware speculation, dynamic HW branch prediction, multiple levels of cache, hardware pre-fetching etc.

- Enabling Technology: VLSI logic density in the order of hundreds of millions of transistors/chip.

- Potential performance gain is much greater than the increase in chip area and power consumption needed to support SMT.

- Improved Performance/Chip Area/Watt (Computational Efficiency) vs. single-threaded superscalar cores.

Thread state

2-way SMT processor: 10-15% increase in area vs. 100% increase for dual-core CMP

21

SMT

- With multiple threads running, penalties from long-latency operations, cache misses, and branch mispredictions will be hidden:

- Reduction of both horizontal and vertical waste and thus improved Instructions Issued Per Cycle (IPC) rate.

- Functional units are shared among all contexts during every cycle:

- More complicated register read and writeback stages.

- More threads issuing to functional units results in higher resource utilization.

- CPU resources may have to be resized to accommodate the additional demands of the multiple threads running.

- (e.g. cache, TLBs, branch prediction tables, rename registers)

Thus SMT is an effective long latency-hiding technique

context = hardware thread

22

SMT Simultaneous Multithreading

n Hardware Contexts

One n-way SMT Core

Modified out-of-order Superscalar Core

23

The Power Of SMT

Time (processor cycles)

Superscalar

Traditional Multithreaded

Simultaneous Multithreading

(Fine-grain)

Rows of squares represent instruction issue slots. Box with number x: instruction issued from thread x. Empty box: slot is wasted.

24

SMT Performance Example

- 4 integer ALUs (1 cycle latency)

- 1 integer multiplier/divider (3 cycle latency)

- 3 memory ports (2 cycle latency, assume cache hit)

- 2 FP ALUs (5 cycle latency)

- Assume all functional units are fully-pipelined

25

SMT Performance Example (continued)

4-issue (single-threaded)

2-thread SMT

- 2 additional cycles for SMT to complete program 2

- Throughput:

- Superscalar: 11 inst/7 cycles = 1.57 IPC

- SMT: 22 inst/9 cycles = 2.44 IPC

- SMT is 2.44/1.57 = 1.55 times faster than superscalar for this example

i.e. 2nd thread

Ideal speedup = 2
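The throughput comparison can be reproduced from the instruction and cycle counts on this slide. This is a small arithmetic sketch; the helper name `ipc` is an assumption for illustration.

```python
def ipc(instructions, cycles):
    """Average instructions completed per cycle."""
    return instructions / cycles


superscalar_ipc = ipc(11, 7)   # one thread on the 4-issue superscalar
smt_ipc = ipc(22, 9)           # two threads on the 2-thread SMT
speedup = smt_ipc / superscalar_ipc

print(f"superscalar IPC: {superscalar_ipc:.2f}")
print(f"SMT IPC:         {smt_ipc:.2f}")
print(f"speedup:         {speedup:.3f}")
```

Dividing the rounded IPC values (2.44/1.57) gives the slide's 1.55; the unrounded ratio is 14/9, about 1.56. Either way the speedup falls short of the ideal 2 because the second thread adds two cycles.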

26

Modifications to Superscalar CPUs to Support SMT

i.e thread state

- Necessary Modifications:

- Multiple program counters (PCs), ISA registers and some mechanism by which one fetch unit selects one each cycle (thread instruction fetch/issue policy).

- A separate return stack for each thread for predicting subroutine return destinations.

- Per-thread instruction issue/retirement, instruction queue flush, and trap mechanisms.

- A thread id with each branch target buffer entry to avoid predicting phantom branches.

- Modifications to Improve SMT performance:

- A larger rename register file, to support logical registers for all threads plus additional registers for register renaming (may require additional pipeline stages).

- A higher available main memory fetch bandwidth may be required.

- Larger data TLB with more entries to compensate for increased virtual to physical address translations.

- Improved cache to offset the cache performance degradation due to cache sharing among the threads and the resulting reduced locality.

- e.g. Private per-thread vs. shared L1 cache.

Resize some hardware resources for performance

SMT-2

Source: "Exploiting Choice: Instruction Fetch and Issue on an Implementable Simultaneous Multithreading Processor," Dean Tullsen et al., Proceedings of the 23rd Annual International Symposium on Computer Architecture, May 1996, pages 191-202.

27

SMT Implementations

- Intel's implementation of Hyper-Threading (HT) Technology (2-thread SMT):

- Originally implemented in its NetBurst microarchitecture (P4 processor family).

- Current Hyper-Threading implementation: Intel's Nehalem (Core i7, introduced 4th quarter 2008), 2, 4 or 8 cores per chip, each 2-thread SMT (4-16 threads per chip).

- IBM POWER 5/6: Dual cores, each 2-thread SMT.

- The Alpha EV8 (4-thread SMT), originally scheduled for production in 2001.

- A number of special-purpose processors targeted towards network processor (NP) applications.

- Sun UltraSparc T1 (Niagara): Eight processor cores, each executing from 4 hardware threads (32 threads total).

- Actually, not SMT but fine-grain multithreaded (each core issues one instruction from one thread per cycle).

- Current technology has the potential for at least 4-8 simultaneous threads per core (based on transistor count and design complexity).

28

A Base SMT Hardware Architecture.

In-Order Front End

Out-of-order Core

Modified Superscalar Speculative Tomasulo

Fetch/Issue

SMT-2


29

Example SMT Vs. Superscalar Pipeline

Based on the Alpha 21164

SMT

Two extra pipeline stages added for register read/write to account for the size increase of the register file

- The pipeline of (a) a conventional superscalar

processor and (b) that pipeline modified for an

SMT processor, along with some implications of

those pipelines.

SMT-2


30

Intel Hyper-Threaded (2-way SMT) P4 Processor

Pipeline

SMT-8

Source: Intel Technology Journal, Volume 6, Number 1, February 2002.

31

Intel P4 Out-of-order Execution Engine Detailed

Pipeline

Hyper-Threaded (2-way SMT)

SMT-8


32

SMT Performance Comparison

- Instruction throughput (IPC) from simulations by

Eggers et al. at The University of Washington,

using both multiprogramming and parallel

workloads

8-issue 8-threads

IPC

i.e Fine-grained

IPC

(MP Chip-multiprocessor)

Multiprogramming workload: multiple single-threaded programs (multi-tasking)

Parallel workload: single multi-threaded program

33

Possible Machine Models for an 8-way

Multithreaded Processor

- The following machine models for a multithreaded CPU that can issue 8 instructions per cycle differ in how threads use issue slots and functional units:

- Fine-Grain Multithreading:

- Only one thread issues instructions each cycle, but it can use the entire issue width of the processor. This hides all sources of vertical waste, but does not hide horizontal waste.

- SM:Full Simultaneous Issue:

- This is a completely flexible simultaneous multithreaded superscalar: all eight threads compete for each of the 8 issue slots each cycle. This is the least realistic model in terms of hardware complexity, but provides insight into the potential for simultaneous multithreading. The following models each represent restrictions to this scheme that decrease hardware complexity.

- SM:Single Issue, SM:Dual Issue, and SM:Four Issue:

- These three models limit the number of instructions each thread can issue, or have active in the scheduling window, each cycle.

- For example, in a SM:Dual Issue processor, each thread can issue a maximum of 2 instructions per cycle; therefore, a minimum of 4 threads would be required to fill the 8 issue slots in one cycle.

- SM:Limited Connection:

- Each hardware context is directly connected to exactly one of each type of functional unit.

- For example, if the hardware supports eight threads and there are four integer units, each integer unit could receive instructions from exactly two threads.

- The partitioning of functional units among threads is thus less dynamic than in the other models, but each functional unit is still shared (the critical factor in achieving high utilization).
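The difference between these models can be sketched by asking how many of the 8 issue slots get filled in one cycle, given how many instructions each thread has ready. This is a rough illustration, not the simulator used in the paper: functional-unit constraints and the Limited Connection model are ignored, the fine-grain case optimistically picks the thread with the most ready instructions, and the function names and the `ready` vector are made up for the example.

```python
def slots_filled(ready_per_thread, issue_width=8,
                 per_thread_limit=None, fine_grain=False):
    """Issue slots filled in one cycle under a simplified machine model."""
    if fine_grain:
        # Only one thread may issue this cycle (best-case thread assumed).
        return min(max(ready_per_thread, default=0), issue_width)
    if per_thread_limit is None:
        per_thread_limit = issue_width   # full simultaneous issue
    offered = sum(min(r, per_thread_limit) for r in ready_per_thread)
    return min(offered, issue_width)


def min_threads_to_fill(issue_width, per_thread_limit):
    """Fewest threads needed to fill every slot (ceiling division)."""
    return -(-issue_width // per_thread_limit)


# Hypothetical number of ready instructions in each of eight threads.
ready = [3, 1, 2, 0, 4, 1, 2, 2]

print("fine-grain:", slots_filled(ready, fine_grain=True))
print("full issue:", slots_filled(ready))
print("dual issue:", slots_filled(ready, per_thread_limit=2))
print("single issue:", slots_filled(ready, per_thread_limit=1))
print("threads needed for dual issue:", min_threads_to_fill(8, 2))
```

Even with the best single thread, the fine-grain model leaves slots empty in this cycle (horizontal waste), while the simultaneous models draw on several threads to fill them.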

i.e SM Eight Issue

Most Complex

SMT-1

i.e. Partition functional units among threads


34

Comparison of Multithreaded CPU Models Complexity

- A comparison of key hardware complexity features of the various models (H = high complexity).

- The comparison takes into account:

- the number of ports needed for each register file,

- the dependence checking for a single thread to issue multiple instructions,

- the amount of forwarding logic,

- and the difficulty of scheduling issued instructions onto functional units.

SMT-1


35

Simultaneous Vs. Fine-Grain Multithreading

Performance

4.8?

IPC

3.1

6.4

IPC

Workload SPEC92

- Instruction throughput as a function of

the number of threads. (a)-(c) show the

throughput by thread priority for particular

models, and (d) shows the total throughput for

all threads for each of the six machine models.

The lowest segment of each bar is the

contribution of the highest priority thread to

the total throughput.

SMT-1


36

Simultaneous Multithreading (SM) Vs. Single-Chip

Multiprocessing (MP)

IPC

- Results for the multiprocessor (MP) vs. simultaneous multithreading (SM) comparisons. The multiprocessor always has one functional unit of each type per processor. In most cases the SM processor has the same total number of each FU type as the MP.

SMT-1


37

Impact of Level 1 Cache Sharing on SMT

Performance

- Results for the simulated cache configurations, shown relative to the throughput (instructions per cycle) of the 64s.64p configuration.

- The caches are specified as:

- [total I cache size in KB][private or shared].[D cache size][private or shared]

- For instance, 64p.64s has eight private 8 KB I caches and a shared 64 KB data cache.

64s.64p: 64K instruction cache shared, 64K data cache private (8K per thread)

Notation: Instruction.Data

Best overall performance of configurations considered achieved by 64s.64s (64K instruction cache shared, 64K data cache shared)
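The cache notation can be decoded mechanically. The sketch below assumes the eight-thread machine used on this slide, so a private cache of a given total size is split evenly into eight per-thread caches; `parse_cache_config` and the dictionary layout are hypothetical names chosen for this example.

```python
import re


def parse_cache_config(spec, threads=8):
    """Decode a spec such as '64p.64s' into I-cache and D-cache layouts."""
    match = re.fullmatch(r"(\d+)([ps])\.(\d+)([ps])", spec)
    if match is None:
        raise ValueError(f"unrecognized cache spec: {spec!r}")

    def describe(total_kb, sharing):
        total_kb = int(total_kb)
        if sharing == "s":
            # One cache shared by all threads.
            return {"shared": True, "caches": 1, "kb_each": total_kb}
        # Private: the total size is divided evenly among the threads.
        return {"shared": False, "caches": threads,
                "kb_each": total_kb // threads}

    i_size, i_sharing, d_size, d_sharing = match.groups()
    return {"icache": describe(i_size, i_sharing),
            "dcache": describe(d_size, d_sharing)}


for spec in ("64p.64s", "64s.64p", "64s.64s"):
    print(spec, parse_cache_config(spec))
```

For 64p.64s this yields eight private 8 KB instruction caches and one shared 64 KB data cache, matching the example in the text.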

SMT-1


38

The Impact of Increased Multithreading on Some Low-Level Metrics for Base SMT Architecture

Renaming Registers

IPC

So?

More threads supported may lead to more demand on hardware resources (e.g. here D and I miss rates increased substantially, and thus need to be resized)

SMT-2


39

Possible SMT Thread Instruction Fetch Scheduling

Policies

- Round Robin:

- Instruction from Thread 1, then Thread 2, then Thread 3, etc. (e.g. RR.1.8: each cycle one thread fetches up to eight instructions; RR.2.4: each cycle two threads fetch up to four instructions each)

- BR-Count:

- Give highest priority to those threads that are least likely to be on a wrong path by counting branch instructions that are in the decode stage, the rename stage, and the instruction queues, favoring those with the fewest unresolved branches.

- MISS-Count:

- Give priority to those threads that have the fewest outstanding data cache misses.

- ICount:

- Highest priority assigned to thread with the lowest number of instructions in static portion of pipeline (decode, rename, and the instruction queues).

- IQPOSN:

- Give lowest priority to those threads with instructions closest to the head of either the integer or floating point instruction queues (the oldest instruction is at the head of the queue).

Instruction Queue (IQ) Position
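The contrast between round robin and ICOUNT thread selection can be sketched in a few lines. This is an illustration under assumptions, not the paper's implementation: threads are numbered from 0, ties in ICOUNT are broken by lower thread id, and the function names and example counts are hypothetical.

```python
def round_robin_select(num_threads, cycle, threads_per_cycle=2):
    """Pick the next threads in fixed rotation, ignoring pipeline state."""
    start = (cycle * threads_per_cycle) % num_threads
    return [(start + i) % num_threads for i in range(threads_per_cycle)]


def icount_select(front_end_counts, threads_per_cycle=2):
    """Pick the threads with the fewest instructions in decode, rename
    and the instruction queues (ties broken by lower thread id)."""
    ranked = sorted(front_end_counts,
                    key=lambda t: (front_end_counts[t], t))
    return ranked[:threads_per_cycle]


# Hypothetical per-thread instruction counts in the pipeline front end.
counts = {0: 12, 1: 3, 2: 7, 3: 3}

print("round robin, cycle 0:", round_robin_select(4, cycle=0))
print("round robin, cycle 1:", round_robin_select(4, cycle=1))
print("ICOUNT:", icount_select(counts))
```

Round robin would fetch for thread 0 in cycle 0 even though it already has the most instructions queued; ICOUNT instead favors the threads that are moving through the pipeline quickly.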

SMT-2


40

Instruction Throughput For Round Robin

Instruction Fetch Scheduling

IPC = 4.2

RR.2.8

RR with best performance

Best overall instruction throughput achieved using round robin: RR.2.8 (in each cycle two threads each fetch a block of 8 instructions)

SMT-2

Workload SPEC92


41

Instruction throughput Thread Fetch Policy

ICOUNT.2.8

All other fetch heuristics provide speedup over round robin. Instruction Count ICOUNT.2.8 provides most improvement: 5.3 instructions/cycle vs 2.5 for unmodified superscalar.

Workload SPEC92


SMT-2

ICOUNT Highest priority assigned to thread with

the lowest number of instructions in static

portion of pipeline (decode, rename, and the

instruction queues).

42

Low-Level Metrics For Round Robin 2.8, ICOUNT 2.8

Renaming Registers

ICOUNT improves on the performance of Round Robin by 23% by reducing Instruction Queue (IQ) clog by selecting a better mix of instructions to queue

SMT-2

43

Possible SMT Instruction Issue Policies

- OLDEST FIRST: Issue the oldest instructions (those deepest into the instruction queue, the default).

- OPT LAST and SPEC LAST: Issue optimistic and speculative instructions after all others have been issued.

- BRANCH FIRST: Issue branches as early as possible in order to identify mispredicted branches quickly.

Instruction issue bandwidth is not a bottleneck

in SMT as shown above


SMT-2

ICOUNT.2.8 Fetch policy used for all issue

policies above

44

SMT Simultaneous Multithreading

- Strengths:

- Overcomes the limitations imposed by low single thread instruction-level parallelism.

- Resource-efficient support of chip-level TLP.

- Multiple threads running will hide individual control hazards (i.e. branch mispredictions) and other long latencies (i.e. main memory access latency on a cache miss).

- Weaknesses:

- Additional stress placed on memory hierarchy.

- Control unit complexity.

- Sizing of resources (cache, branch prediction, TLBs etc.)

- Accessing registers (32 integer + 32 FP for each HW context):

- Some designs devote two clock cycles for both register reads and register writes.

Deeper pipeline