Binary Classification Problem: Learn a Classifier from the Training Set


1

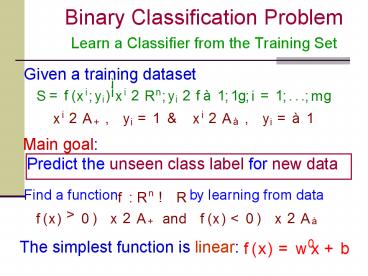

Binary Classification Problem: Learn a Classifier from the Training Set

Given a training dataset $S = \{(x_i, y_i) \mid x_i \in \mathbb{R}^n,\ y_i \in \{-1, 1\},\ i = 1, \dots, m\}$

Main goal: Predict the unseen class label for new data

2

Binary Classification Problem: Linearly Separable Case

(Figure: two linearly separable classes, labeled Benign and Malignant.)

3

Support Vector Machines: Maximizing the Margin between Bounding Planes

4

Why Do We Maximize the Margin? (Based on Statistical Learning Theory)

- The Structural Risk Minimization (SRM) principle

- The expected risk will be less than or equal to the empirical risk (training error) + the VC (error) bound
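The slide states the bound only in words. One classical form of Vapnik's VC bound says that, with probability at least $1 - \eta$, the expected risk is at most the empirical risk plus $\sqrt{(h(\ln(2m/h) + 1) - \ln(\eta/4))/m}$, where h is the VC dimension and m is the number of training points. The Python sketch below evaluates this bound. The function names are illustrative and do not come from the slides. It shows the SRM trade-off: for the same training error, a smaller h gives a smaller bound.

```python
import math


def vc_confidence(h: int, m: int, eta: float = 0.05) -> float:
    """VC confidence term sqrt((h * (ln(2m/h) + 1) - ln(eta/4)) / m).

    h   -- VC dimension of the hypothesis class
    m   -- number of training points
    eta -- the bound holds with probability at least 1 - eta
    """
    if h <= 0 or m <= 0 or not 0.0 < eta < 1.0:
        raise ValueError("need h > 0, m > 0 and 0 < eta < 1")
    return math.sqrt((h * (math.log(2 * m / h) + 1) - math.log(eta / 4)) / m)


def vc_risk_bound(empirical_risk: float, h: int, m: int, eta: float = 0.05) -> float:
    """Upper bound on the expected risk: empirical risk + VC confidence term."""
    return empirical_risk + vc_confidence(h, m, eta)


# Same training error, increasing capacity: the bound grows with h.
for h in (10, 100, 1000):
    print(h, round(vc_risk_bound(0.05, h, m=10_000), 3))
```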

5

Summary of the Notations

6

Support Vector Classification

(Linearly Separable Case, Primal)

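The hyperplane $(w, b)$ that solves the minimization problem

$\min_{(w, b) \in \mathbb{R}^{n+1}} \tfrac{1}{2}\|w\|_2^2$ subject to $y_i(\langle w, x_i \rangle + b) \ge 1$, $i = 1, \dots, m$

realizes the maximal margin hyperplane with geometric margin $\gamma = 1/\|w\|_2$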
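The next sketch makes the geometric margin concrete on hypothetical toy data. The functional margin is $\min_i y_i(\langle w, x_i \rangle + b)$. Dividing it by $\|w\|$ gives the geometric margin. When the functional margin is exactly 1, as the primal constraints enforce at the optimum, the geometric margin equals $1/\|w\|$. The data and helper names are assumptions chosen for illustration.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def functional_margin(w, b, X, y):
    """Smallest value of y_i * (<w, x_i> + b) over the training set."""
    return min(yi * (dot(w, xi) + b) for xi, yi in zip(X, y))


def geometric_margin(w, b, X, y):
    """Distance from the hyperplane <w, x> + b = 0 to the closest point
    (negative if some point is misclassified)."""
    return functional_margin(w, b, X, y) / math.sqrt(dot(w, w))


# Hypothetical toy data: the maximal margin hyperplane is x1 + x2 = 2.
X = [(2.0, 2.0), (3.0, 3.0), (0.0, 0.0), (-1.0, -1.0)]
y = [1, 1, -1, -1]
w, b = (0.5, 0.5), -1.0   # canonical form: functional margin exactly 1

print(functional_margin(w, b, X, y))   # 1.0
print(geometric_margin(w, b, X, y))    # approximately 1.4142, i.e. 1 / ||w||
```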

7

Support Vector Classification

(Linearly Separable Case, Dual Form)

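The dual problem of the previous MP: $\max_{\alpha \in \mathbb{R}^m} \sum_{i=1}^m \alpha_i - \tfrac{1}{2}\sum_{i=1}^m \sum_{j=1}^m y_i y_j \alpha_i \alpha_j \langle x_i, x_j \rangle$ subject to $\sum_{i=1}^m y_i \alpha_i = 0$, $\alpha_i \ge 0$, $i = 1, \dots, m$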

Don't forget

8

Dual Representation of SVM

(Key to Kernel Methods)

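The hypothesis is determined by $(\alpha^*, b^*)$: since $w^* = \sum_{i=1}^m \alpha_i^* y_i x_i$, we have $h(x) = \operatorname{sgn}\big(\sum_{i=1}^m \alpha_i^* y_i \langle x_i, x \rangle + b^*\big)$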
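Under the dual representation $w = \sum_i \alpha_i y_i x_i$, a prediction needs only the $\alpha_i$, b, and inner products with the training points. The Python sketch below uses hypothetical helper names. The two-point dataset is illustrative, and its maximal margin solution $\alpha = (1/4, 1/4)$, $b = -1$ was worked out by hand.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def dual_decision(x, X, y, alpha, b, kernel=dot):
    """f(x) = sum_i alpha_i * y_i * K(x_i, x) + b.

    Only points with alpha_i > 0 (the support vectors) contribute.
    """
    return sum(a * yi * kernel(xi, x) for xi, yi, a in zip(X, y, alpha) if a > 0) + b


def predict(x, X, y, alpha, b, kernel=dot):
    return 1 if dual_decision(x, X, y, alpha, b, kernel) >= 0 else -1


def primal_weights(X, y, alpha):
    """Recover w = sum_i alpha_i * y_i * x_i (meaningful for the linear kernel only)."""
    n = len(X[0])
    return [sum(a * yi * xi[j] for xi, yi, a in zip(X, y, alpha)) for j in range(n)]


# Hypothetical two-point problem.
X = [(2.0, 2.0), (0.0, 0.0)]
y = [1, -1]
alpha, b = [0.25, 0.25], -1.0
print(primal_weights(X, y, alpha))                 # [0.5, 0.5]
print(dual_decision((3.0, 1.0), X, y, alpha, b))   # 1.0
```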

9

Soft Margin SVM

(Nonseparable Case)

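- If the data are not linearly separable:

  - the primal problem is infeasible

  - the dual problem is unbounded above

- Introduce a slack variable $\xi_i \ge 0$ for each training point: $y_i(\langle w, x_i \rangle + b) + \xi_i \ge 1$

- The inequality system is always feasible, e.g. $w = 0$, $b = 0$, $\xi_i = 1$ for all $i$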
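For a fixed $(w, b)$, the smallest slack that satisfies $y_i(\langle w, x_i \rangle + b) + \xi_i \ge 1$ and $\xi_i \ge 0$ is $\xi_i = \max(0, 1 - y_i(\langle w, x_i \rangle + b))$. The sketch below computes these slacks on hypothetical data. Each value reads as follows:

- $\xi_i = 0$: the point satisfies the margin.
- $0 < \xi_i \le 1$: the point lies inside the margin but is not misclassified. With $\xi_i = 1$ it sits exactly on the separating plane.
- $\xi_i > 1$: the point is misclassified.

The second call shows the always-feasible choice $w = 0$, $b = 0$. The function name is illustrative.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def slacks(w, b, X, y):
    """Smallest feasible slack for each point: xi_i = max(0, 1 - y_i(<w, x_i> + b))."""
    return [max(0.0, 1.0 - yi * (dot(w, xi) + b)) for xi, yi in zip(X, y)]


# Hypothetical data: (1, 1) sits on the separating plane, (3, 3) is on the wrong side.
X = [(2.0, 2.0), (1.0, 1.0), (0.0, 0.0), (3.0, 3.0)]
y = [1, 1, -1, -1]
print(slacks((0.5, 0.5), -1.0, X, y))   # [0.0, 1.0, 0.0, 3.0]
print(slacks((0.0, 0.0), 0.0, X, y))    # [1.0, 1.0, 1.0, 1.0]
```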

10


11

Robust Linear Programming: Preliminary Approach to SVM

12

Support Vector Machine Formulations

(Two Different Measures of Training Error)

2-Norm Soft Margin

1-Norm Soft Margin (Conventional SVM)
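The two formulations differ only in how the slacks enter the objective. This Python comparison assumes the common conventions $\tfrac{1}{2}\|w\|^2 + C\sum_i \xi_i$ for the 1-norm and $\tfrac{1}{2}\|w\|^2 + \tfrac{C}{2}\sum_i \xi_i^2$ for the 2-norm. The slide's exact scaling of C may differ. The values and function names are hypothetical.

```python
def one_norm_objective(w, xi, C):
    """(1/2)||w||^2 + C * sum_i xi_i: slack is penalized linearly."""
    return 0.5 * sum(wj * wj for wj in w) + C * sum(xi)


def two_norm_objective(w, xi, C):
    """(1/2)||w||^2 + (C/2) * sum_i xi_i^2: slack is penalized quadratically."""
    return 0.5 * sum(wj * wj for wj in w) + 0.5 * C * sum(s * s for s in xi)


w = (0.5, 0.5)
xi = [0.0, 1.0, 0.0, 3.0]   # hypothetical slack values
print(one_norm_objective(w, xi, C=1.0))   # 4.25
print(two_norm_objective(w, xi, C=1.0))   # 5.25
```

The point with slack 3 contributes 3 to the 1-norm penalty but 4.5 to the 2-norm penalty. The squared measure therefore punishes large violations more heavily.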

13

Tuning Procedure: How to Determine C?

(Figure: tuning results over a range of values of C.)

The final value of the parameter is the one with the maximum testing set correctness!

14

Lagrangian Dual Problem

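Primal: $\min_{w} f(w)$ subject to $g(w) \le 0$, $h(w) = 0$

Dual: $\max_{\alpha, \beta} \theta(\alpha, \beta)$ subject to $\alpha \ge 0$

where $\theta(\alpha, \beta) = \inf_{w} L(w, \alpha, \beta)$ and $L(w, \alpha, \beta) = f(w) + \alpha^\top g(w) + \beta^\top h(w)$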

15

1-Norm Soft Margin SVM Dual Formulation

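The Lagrangian for the 1-norm soft margin:

$L(w, b, \xi, \alpha, r) = \tfrac{1}{2}\langle w, w \rangle + C\sum_{i=1}^m \xi_i - \sum_{i=1}^m \alpha_i\big[y_i(\langle w, x_i \rangle + b) - 1 + \xi_i\big] - \sum_{i=1}^m r_i \xi_i$

where $\alpha_i \ge 0$ and $r_i \ge 0$.

The partial derivatives with respect to the primal variables equal zero:

$\frac{\partial L}{\partial w} = w - \sum_{i=1}^m y_i \alpha_i x_i = 0, \quad \frac{\partial L}{\partial b} = -\sum_{i=1}^m y_i \alpha_i = 0, \quad \frac{\partial L}{\partial \xi_i} = C - \alpha_i - r_i = 0$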

16

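Substitute $w = \sum_{i=1}^m y_i \alpha_i x_i$, $\sum_{i=1}^m y_i \alpha_i = 0$ and $C - \alpha_i - r_i = 0$

in $L(w, b, \xi, \alpha, r)$ to obtain

$\theta(\alpha, r) = \sum_{i=1}^m \alpha_i - \tfrac{1}{2}\sum_{i=1}^m \sum_{j=1}^m y_i y_j \alpha_i \alpha_j \langle x_i, x_j \rangle$

where $\alpha_i \ge 0$

and $r_i = C - \alpha_i \ge 0$, so that $0 \le \alpha_i \le C$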

17

Dual Maximization Problem for 1-Norm Soft Margin

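Dual: $\max_{\alpha \in \mathbb{R}^m} \sum_{i=1}^m \alpha_i - \tfrac{1}{2}\sum_{i=1}^m \sum_{j=1}^m y_i y_j \alpha_i \alpha_j \langle x_i, x_j \rangle$ subject to $\sum_{i=1}^m y_i \alpha_i = 0$, $0 \le \alpha_i \le C$, $i = 1, \dots, m$

- The corresponding KKT complementarity conditions: $\alpha_i\big[y_i(\langle w, x_i \rangle + b) - 1 + \xi_i\big] = 0$ and $\xi_i(\alpha_i - C) = 0$, $i = 1, \dots, m$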

18

Slack Variables for 1-Norm Soft Margin SVM

- Non-zero slack can only occur when $\alpha_i = C$

- The contribution of an outlier to the decision rule will be at most $C$

- The trade-off between accuracy and regularization is directly controlled by $C$

- The points for which $0 < \alpha_i < C$ lie on the bounding planes
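The KKT complementarity conditions let us read each point's role directly from its dual variable. The Python sketch below labels each point on that basis, using illustrative helper names and tolerance. It also recovers b from any point with $0 < \alpha_k < C$. Such a point satisfies $y_k(\langle w, x_k \rangle + b) = 1$, which gives $b = y_k - \sum_i \alpha_i y_i \langle x_i, x_k \rangle$.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def categorize(alpha, C, tol=1e-8):
    """Label each training point by its dual variable (1-norm soft margin, 0 <= alpha_i <= C)."""
    labels = []
    for a in alpha:
        if a <= tol:
            labels.append("non-support")  # alpha_i = 0: xi_i = 0, on or beyond its bounding plane
        elif a >= C - tol:
            labels.append("bound")        # alpha_i = C: the only case where xi_i > 0 can occur
        else:
            labels.append("margin")       # 0 < alpha_i < C: lies exactly on a bounding plane
    return labels


def bias_from_margin_sv(k, X, y, alpha, kernel=dot):
    """b = y_k - sum_i alpha_i y_i K(x_i, x_k), valid when 0 < alpha_k < C."""
    return y[k] - sum(a * yi * kernel(xi, X[k]) for xi, yi, a in zip(X, y, alpha))


print(categorize([0.0, 0.4, 1.0], C=1.0))   # ['non-support', 'margin', 'bound']
# Hypothetical two-point problem with alpha = (1/4, 1/4): both points are margin SVs.
print(bias_from_margin_sv(0, [(2.0, 2.0), (0.0, 0.0)], [1, -1], [0.25, 0.25]))   # -1.0
```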

19

Two-Spiral Dataset (94 White Dots, 94 Red Dots)

20

Learning in Feature Space

(Could Simplify the Classification Task)

- Learning in a high-dimensional space could degrade generalization performance

- This phenomenon is called the curse of dimensionality

- We may not even know the dimensionality of the feature space

- There is no free lunch

- We must deal with a huge and dense kernel matrix

- A reduced kernel can avoid this difficulty
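The reduced kernel idea fits in a few lines. Instead of the full $m \times m$ kernel matrix, take a random subset of $\bar m \ll m$ points and build the rectangular $m \times \bar m$ matrix $K(A, \bar A')$. This Python sketch assumes a uniformly random subset and a Gaussian kernel. The function names and synthetic data are hypothetical, and the sketch only builds the matrix, not a complete reduced SVM.

```python
import math
import random


def gaussian_kernel(x, z, mu=1.0):
    return math.exp(-mu * sum((a - b) ** 2 for a, b in zip(x, z)))


def reduced_kernel(X, m_bar, mu=1.0, seed=0):
    """Rectangular m x m_bar kernel matrix against a random reduced set of m_bar points."""
    rng = random.Random(seed)
    X_bar = rng.sample(X, m_bar)   # reduced set: m_bar << m rows of the data
    K = [[gaussian_kernel(x, xb, mu) for xb in X_bar] for x in X]
    return K, X_bar


# Synthetic data: 200 points, reduced set of 10.
X = [(i / 10, (i * i % 7) / 7) for i in range(200)]
K, X_bar = reduced_kernel(X, m_bar=10)
print(len(K), len(K[0]))   # 2,000 entries instead of 200 * 200 = 40,000
```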

21


22

Linear Machine in Feature Space

Write it in the dual form: $f(x) = \sum_{i=1}^m \alpha_i y_i \langle \phi(x_i), \phi(x) \rangle + b$

23

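Kernel: Represents the Inner Product in Feature Space

Definition: A kernel is a function $K: X \times X \to \mathbb{R}$

such that $K(x, z) = \langle \phi(x), \phi(z) \rangle$ for all $x, z \in X$,

where $\phi: X \to F$ maps the input space $X$ into a feature space $F$.

The classifier will become $f(x) = \sum_{i=1}^m \alpha_i y_i K(x_i, x) + b$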

24

A Simple Example of Kernel

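Polynomial Kernel of Degree 2: $K(x, z) = \langle x, z \rangle^2$

Let $x = (x_1, x_2),\ z = (z_1, z_2) \in \mathbb{R}^2$ and the nonlinear map $\phi: \mathbb{R}^2 \to \mathbb{R}^3$ be defined by $\phi(x) = (x_1^2, x_2^2, \sqrt{2}\,x_1 x_2)$. Then $\langle \phi(x), \phi(z) \rangle = \langle x, z \rangle^2 = K(x, z)$.

- There are many other nonlinear maps, $\psi(x)$, that satisfy the relation $\langle \psi(x), \psi(z) \rangle = \langle x, z \rangle^2 = K(x, z)$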
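The identity can be checked numerically. The Python sketch below compares $\langle x, z \rangle^2$ with the inner products of two different explicit maps: $\phi(x) = (x_1^2, x_2^2, \sqrt{2}\,x_1 x_2)$ and $\psi(x) = (x_1^2, x_2^2, x_1 x_2, x_2 x_1)$. The test points are arbitrary.

```python
import math


def poly2_kernel(x, z):
    """K(x, z) = <x, z>^2 for x, z in R^2."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2


def phi(x):
    """Explicit feature map R^2 -> R^3."""
    return (x[0] ** 2, x[1] ** 2, math.sqrt(2) * x[0] * x[1])


def psi(x):
    """A different map R^2 -> R^4 that induces the same kernel."""
    return (x[0] ** 2, x[1] ** 2, x[0] * x[1], x[1] * x[0])


def inner(u, v):
    return sum(a * b for a, b in zip(u, v))


x, z = (1.0, 2.0), (3.0, -1.0)
print(poly2_kernel(x, z), inner(phi(x), phi(z)), inner(psi(x), psi(z)))
```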

25

Power of the Kernel Technique

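Consider a nonlinear map $\phi: \mathbb{R}^n \to \mathbb{R}^p$ that consists of distinct features of all the monomials of degree d.

Then $p = \binom{n+d-1}{d}$.

With each monomial scaled by the square root of its multinomial coefficient, $\langle \phi(x), \phi(z) \rangle = \langle x, z \rangle^d$, which costs only $O(n)$ to evaluate!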
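The following Python sketch works through the counting argument. It enumerates all degree-d monomials, scaled by the square roots of their multinomial coefficients as the multinomial theorem requires. It then confirms that there are $\binom{n+d-1}{d}$ of them and that their inner product equals $\langle x, z \rangle^d$. The test vectors and the choice n = 256 (for example, 16x16 pixel images) are only illustrations.

```python
import itertools
import math


def monomial_features(x, d):
    """All distinct degree-d monomials of x, each scaled by sqrt(multinomial coefficient)."""
    n = len(x)
    features = []
    for idx in itertools.combinations_with_replacement(range(n), d):
        counts = [idx.count(j) for j in range(n)]
        coef = math.factorial(d) // math.prod(math.factorial(c) for c in counts)
        features.append(math.sqrt(coef) * math.prod(x[j] for j in idx))
    return features


def feature_dim(n, d):
    """p = C(n + d - 1, d): number of distinct monomials of degree d in n variables."""
    return math.comb(n + d - 1, d)


x, z = [1.0, 2.0, -1.0], [0.5, 1.0, 3.0]
fx, fz = monomial_features(x, 4), monomial_features(z, 4)
print(len(fx), feature_dim(3, 4))   # 15 15
print(sum(a * b for a, b in zip(fx, fz)), sum(a * b for a, b in zip(x, z)) ** 4)
print(feature_dim(256, 5))          # 9525431552, about 9.5 billion features
```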

26

Kernel Technique

Based on Mercer's Condition (1909)

- The value of the kernel function represents the inner product of two training points in feature space

- Kernel functions merge two steps:

  1. map input data from the input space to the feature space (which might be infinite-dimensional)

  2. compute the inner product in the feature space
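Two commonly used kernels show both steps merged into one function evaluation. One is the polynomial kernel $(\langle x, z \rangle + c)^d$. The other is the Gaussian kernel $\exp(-\mu\|x - z\|^2)$, whose feature space is infinite-dimensional. Neither requires forming $\phi$. This minimal Python sketch also builds the kernel (Gram) matrix. The parameter names d, c, mu and the data are illustrative.

```python
import math


def linear_kernel(x, z):
    return sum(a * b for a, b in zip(x, z))


def polynomial_kernel(x, z, d=2, c=1.0):
    """(<x, z> + c)^d: implicitly covers all monomials up to degree d."""
    return (linear_kernel(x, z) + c) ** d


def gaussian_kernel(x, z, mu=1.0):
    """exp(-mu * ||x - z||^2): the corresponding feature space is infinite-dimensional."""
    return math.exp(-mu * sum((a - b) ** 2 for a, b in zip(x, z)))


def gram_matrix(X, kernel):
    """K[i][j] = kernel(x_i, x_j): the dual problem needs the data only through these values."""
    return [[kernel(xi, xj) for xj in X] for xi in X]


X = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]
K = gram_matrix(X, gaussian_kernel)
print([[round(v, 4) for v in row] for row in K])
```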

27

More Examples of Kernel

- The

of data points

and

28

Nonlinear 1-Norm Soft Margin SVM In Dual Form

Linear SVM

Nonlinear SVM

29

1-Norm Support Vector Machines: Good for Feature Selection

Equivalent to solving a linear program as follows
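The LP itself did not survive the transcript. The 1-norm SVM minimizes $\|w\|_1 + C\sum_i \xi_i$ subject to the usual soft margin constraints. One standard way to write it as a linear program introduces auxiliary variables $s_j \ge |w_j|$; this is an assumption about the slide's exact form:

```latex
\min_{w,\, b,\, \xi,\, s} \; \sum_{j=1}^{n} s_j + C \sum_{i=1}^{m} \xi_i
\quad \text{subject to} \quad
y_i(\langle w, x_i \rangle + b) + \xi_i \ge 1, \;\;
\xi_i \ge 0, \;\; i = 1, \dots, m,
\qquad
-s_j \le w_j \le s_j, \;\; j = 1, \dots, n.
```

At an optimum $s_j = |w_j|$, so the objective equals $\|w\|_1 + C\sum_i \xi_i$. The 1-norm penalty tends to drive many $w_j$ exactly to zero, which is why this formulation is useful for feature selection.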

30

SVM as an Unconstrained Minimization Problem

- Change (QP) into an unconstrained MP

- Reduce $(n+1+m)$ variables to $(n+1)$ variables
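Eliminating the slacks is what removes the m extra variables. At the optimum of the 2-norm soft margin problem, $\xi_i = (1 - y_i(\langle w, x_i \rangle + b))_+$. The Python sketch below writes out the resulting unconstrained objective. It assumes the SSVM-style form, with a squared plus function and $b^2$ added to the regularizer. The slide's own notation and sign convention for the offset may differ, and the helper names are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def plus(t):
    """Plus function t_+ = max(t, 0)."""
    return max(t, 0.0)


def unconstrained_svm_objective(w, b, X, y, C):
    """(C/2) * sum_i (1 - y_i(<w, x_i> + b))_+^2 + (1/2)(||w||^2 + b^2).

    The slacks are replaced by xi_i = (1 - y_i(<w, x_i> + b))_+,
    so only the n + 1 variables (w, b) remain.
    """
    loss = sum(plus(1.0 - yi * (dot(w, xi) + b)) ** 2 for xi, yi in zip(X, y))
    return 0.5 * C * loss + 0.5 * (dot(w, w) + b * b)


X, y = [(2.0, 2.0), (0.0, 0.0)], [1, -1]
print(unconstrained_svm_objective((0.5, 0.5), -1.0, X, y, C=10.0))   # 0.75 (no slack)
print(unconstrained_svm_objective((0.0, 0.0), 0.0, X, y, C=10.0))    # 10.0 (all slack)
```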

31

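Smooth the Plus Function: Integrate

Integrating the step function gives the plus function $x_+ = \max\{x, 0\}$.

Integrating the sigmoid function $s(x, \alpha) = 1/(1 + e^{-\alpha x})$ gives the p-function $p(x, \alpha) = x + \frac{1}{\alpha}\log(1 + e^{-\alpha x})$, a smooth approximation of the plus function.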
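The Python sketch below implements the smoothing; the function names are illustrative. It evaluates p in an algebraically equivalent form that cannot overflow. Its derivative is the sigmoid. The gap $p(x, \alpha) - x_+$ is largest at $x = 0$, where it equals $\log 2 / \alpha$, so a larger α gives a tighter approximation.

```python
import math


def plus(x):
    return max(x, 0.0)


def sigmoid(x, alpha):
    """s(x, alpha) = 1 / (1 + exp(-alpha x)), computed without overflow (alpha > 0)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-alpha * x))
    e = math.exp(alpha * x)
    return e / (1.0 + e)


def p(x, alpha):
    """Smooth plus function p(x, alpha) = x + log(1 + exp(-alpha x)) / alpha.

    Evaluated as max(x, 0) + log1p(exp(-alpha |x|)) / alpha, the same function
    rewritten so that exp never overflows.
    """
    return plus(x) + math.log1p(math.exp(-alpha * abs(x))) / alpha


for alpha in (1.0, 5.0, 25.0):
    print(alpha, p(0.0, alpha), math.log(2) / alpha)   # largest gap, shrinking as alpha grows
```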

32

SSVM: Smooth Support Vector Machine

33

Newton-Armijo Method: Quadratic Approximation of SSVM

- Converges in 6 to 8 iterations

- At each iteration we solve a linear system of $n+1$ equations in $n+1$ variables

- Complexity depends on the dimension of the input space

- It might be necessary to select a step size

34

Newton-Armijo Algorithm

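Stop if the gradient of the SSVM objective $\Phi$ vanishes at the current iterate $z^i = (w^i, b^i)$;

else compute:

(i) Newton direction: solve $\nabla^2 \Phi(z^i)\, d^i = -\nabla \Phi(z^i)$

The method converges globally and quadratically to the unique solution in a finite number of steps

(ii) Armijo step size: $z^{i+1} = z^i + \lambda_i d^i$, with $\lambda_i$ chosen such that Armijo's rule is satisfied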
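The two steps can be illustrated on a one-dimensional convex function. This is a minimal Python sketch, not the SSVM solver itself. In SSVM, the scalar division by $f''$ becomes the solution of the $(n+1) \times (n+1)$ Newton system. The halving sequence $\{1, 1/2, 1/4, \dots\}$ and the constant delta = 1e-4 are common choices assumed here, not taken from the slides.

```python
import math


def newton_armijo(f, grad, hess, x0, delta=1e-4, tol=1e-10, max_iter=50):
    """Minimize a smooth, strictly convex one-dimensional f.

    (i)  Newton direction d = -f'(x) / f''(x)
    (ii) Armijo step size: the largest lam in {1, 1/2, 1/4, ...} with
         f(x + lam d) <= f(x) + delta * lam * f'(x) * d
    """
    x = x0
    for _ in range(max_iter):
        g = grad(x)
        if abs(g) < tol:           # stop if the gradient (almost) vanishes
            break
        d = -g / hess(x)           # a descent direction because f''(x) > 0
        lam = 1.0
        while lam > 1e-12 and f(x + lam * d) > f(x) + delta * lam * g * d:
            lam *= 0.5             # halve the step until Armijo's rule holds
        x += lam * d
    return x


# f(x) = sqrt(1 + x^2): pure Newton steps map x to -x^3 and diverge from x0 = 2,
# but the Armijo rule shortens the first steps and the iterates converge to 0.
def f(x):
    return math.sqrt(1.0 + x * x)


def grad(x):
    return x / math.sqrt(1.0 + x * x)


def hess(x):
    return (1.0 + x * x) ** -1.5


x_star = newton_armijo(f, grad, hess, x0=2.0)
print(x_star)
```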

35

Nonlinear Smooth SVM

Nonlinear Classifier

- Use the Newton-Armijo algorithm to solve the problem

- The nonlinear classifier depends only on the data points with nonzero coefficients

36

Conclusion

- An overview of SVMs for classification

- Can be solved by a fast Newton-Armijo algorithm

- No optimization (LP, QP) package is needed

- There are many important issues this lecture did not address, such as:

  - How to solve the conventional SVM?

  - How to deal with massive datasets?

37

Perceptron

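- Linear threshold unit (LTU)

(Figure: inputs $x_0 = 1, x_1, x_2, \dots, x_n$ with weights $w_0, w_1, w_2, \dots, w_n$ feed the sum $\sum_{i=0}^{n} w_i x_i$, which is passed to an activation function g.)

$o(x) = 1$ if $\sum_{i=0}^{n} w_i x_i > 0$, and $o(x) = -1$ otherwise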
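The LTU translates directly into code. This minimal Python sketch prepends the constant input $x_0 = 1$. The AND weights are a hypothetical example.

```python
def ltu_output(w, x):
    """Linear threshold unit: o(x) = 1 if sum_{i=0}^{n} w_i x_i > 0 else -1, with x_0 = 1.

    w = [w_0, w_1, ..., w_n], x = [x_1, ..., x_n].
    """
    s = sum(wi * xi for wi, xi in zip(w, [1.0, *x]))
    return 1 if s > 0 else -1


# Hypothetical weights that make the unit compute logical AND on {0, 1} inputs.
w_and = [-1.5, 1.0, 1.0]
for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, ltu_output(w_and, x))
```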

38

Possibilities for function g

Sign function

Sigmoid (logistic) function

Step function

$\text{step}(x) = 1$ if $x > \text{threshold}$, and $0$ if $x \le \text{threshold}$ (in the picture above, threshold $= 0$)

$\text{sign}(x) = 1$ if $x > 0$, and $-1$ if $x \le 0$

$\text{sigmoid}(x) = 1/(1 + e^{-x})$

Adding an extra input with activation $x_0 = 1$ and weight $w_{i,0} = -T$ (called the bias weight) is equivalent to having a threshold at T. This way we can always assume a 0 threshold.
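The three activation functions and the bias-weight trick can be checked directly. In this Python sketch (illustrative names), a unit that fires when $\sum_i w_i x_i > T$ is compared with a unit that has the extra input $x_0 = 1$, weight $-T$ and threshold 0. The two agree in exact arithmetic. The check uses integer-valued inputs so that floating-point rounding cannot create spurious disagreements at the boundary.

```python
import math


def step(x, threshold=0.0):
    return 1 if x > threshold else 0


def sign(x):
    return 1 if x > 0 else -1


def sigmoid(x):
    """Logistic function 1 / (1 + e^{-x}), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def thresholded(w, x, T):
    """Original unit: fires iff sum_i w_i x_i > T."""
    return sum(wi * xi for wi, xi in zip(w, x)) > T


def with_bias_weight(w, x, T):
    """Same unit with extra input x_0 = 1, bias weight w_0 = -T, and threshold 0."""
    return sum(wi * xi for wi, xi in zip([-T, *w], [1.0, *x])) > 0


print(step(0.0), sign(0.0), sigmoid(0.0))
```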

39

Using a Bias Weight to Standardize the Threshold

(Figure: inputs $1, x_1, x_2$ with weights $-T, w_1, w_2$.)

$w_1 x_1 + w_2 x_2 < T \iff w_1 x_1 + w_2 x_2 - T < 0$

40

Perceptron Learning Rule

(Figure: panels in the $(x_1, x_2)$ plane illustrating the perceptron learning rule.)

41

The Perceptron Algorithm (Rosenblatt, 1956)

Given a linearly separable training set $S$ and learning rate $\eta \in \mathbb{R}^+$

42

The Perceptron Algorithm (Primal Form)

Repeat

until no mistakes are made within the for loop; return $(w_k, b_k)$

What is $k$?
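The loop can be written out as follows. This Python sketch assumes the textbook primal form from Cristianini and Shawe-Taylor, where the bias is updated by $\eta y_i R^2$ with $R = \max_i \|x_i\|$. The slide's exact update is not in the transcript. The returned k counts the updates, that is, the number of mistakes made. The data are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def perceptron_primal(X, y, eta=1.0, max_epochs=1000):
    """Primal perceptron with bias update b += eta * y_i * R^2.

    Returns (w, b, k), where k is the number of updates (mistakes).
    """
    w = [0.0] * len(X[0])
    b, k = 0.0, 0
    R = max(math.sqrt(dot(x, x)) for x in X)
    for _ in range(max_epochs):                  # "Repeat ..."
        mistakes = 0
        for xi, yi in zip(X, y):                 # "... the for loop"
            if yi * (dot(w, xi) + b) <= 0:       # mistake (or on the boundary)
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
                b += eta * yi * R * R
                k += 1
                mistakes += 1
        if mistakes == 0:                        # "... until no mistakes are made"
            return w, b, k
    raise RuntimeError("no convergence: the data may not be linearly separable")


X = [(2.0, 2.0), (3.0, 1.0), (-1.0, -1.0), (-2.0, 0.0)]
y = [1, 1, -1, -1]
w, b, k = perceptron_primal(X, y)
print(w, b, k)
```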

43


44

The Perceptron Algorithm

( STOP in Finite Steps )

Theorem (Novikoff)

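Suppose that there exists a vector $w_{\text{opt}}$ with $\|w_{\text{opt}}\| = 1$

and $y_i(\langle w_{\text{opt}}, x_i \rangle + b_{\text{opt}}) \ge \gamma$ for $1 \le i \le m$, and let $R = \max_{1 \le i \le m} \|x_i\|$. Then the number of mistakes made by the on-line perceptron algorithm on $S$ is at most $(2R/\gamma)^2$.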
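The bound is easy to evaluate for any separating hyperplane. This Python sketch uses hypothetical data and a hypothetical helper name. It rescales $(w_{\text{opt}}, b_{\text{opt}})$ to unit norm, computes γ and R, and returns $(2R/\gamma)^2$. Every separating hyperplane gives a valid bound, and a larger margin gives a tighter one.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def novikoff_bound(X, y, w_opt, b_opt):
    """Mistake bound (2R / gamma)^2 for a separating hyperplane (w_opt, b_opt).

    (w_opt, b_opt) is divided by ||w_opt|| so that ||w_opt|| = 1, as the theorem
    requires; gamma is then the smallest margin and R = max_i ||x_i||.
    """
    norm = math.sqrt(dot(w_opt, w_opt))
    gamma = min(yi * (dot(w_opt, xi) + b_opt) for xi, yi in zip(X, y)) / norm
    if gamma <= 0:
        raise ValueError("(w_opt, b_opt) does not separate the data")
    R = max(math.sqrt(dot(x, x)) for x in X)
    return (2 * R / gamma) ** 2


X = [(2.0, 2.0), (3.0, 1.0), (-1.0, -1.0), (-2.0, 0.0)]
y = [1, 1, -1, -1]
print(novikoff_bound(X, y, w_opt=(1.0, 1.0), b_opt=0.0))   # about 20
```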

45

Proof of Finite Termination

Proof: Let

46

Update Rule of Perceptron

Similarly,

47

Update Rule of Perceptron

48

The Perceptron Algorithm (Dual Form)

49

What Did We Get in the Dual Form Perceptron Algorithm?
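One way to see what the dual form gives us is to write it out. This Python sketch assumes the same $b \leftarrow b + y_i R^2$ update as the textbook primal version, with η = 1, and uses hypothetical data. The sketch shows three properties of the dual form:

- The data enter only through the Gram matrix of inner products, so any kernel could replace the inner product.
- $\alpha_i$ counts the mistakes triggered by $x_i$, so $\sum_i \alpha_i$ is the total number of mistakes.
- The primal weights are recovered as $w = \sum_i \alpha_i y_i x_i$.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def perceptron_dual(X, y, max_epochs=1000):
    """Dual perceptron: alpha_i counts the updates triggered by x_i.

    The data are used only through the Gram matrix G[i][j] = <x_i, x_j>,
    so dot could be replaced by any kernel.
    """
    m = len(X)
    G = [[dot(xi, xj) for xj in X] for xi in X]
    R2 = max(G[i][i] for i in range(m))   # R^2 = max_i ||x_i||^2
    alpha, b = [0] * m, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for i in range(m):
            f_i = sum(alpha[j] * y[j] * G[j][i] for j in range(m)) + b
            if y[i] * f_i <= 0:           # mistake on x_i
                alpha[i] += 1
                b += y[i] * R2
                mistakes += 1
        if mistakes == 0:
            return alpha, b
    raise RuntimeError("no convergence: the data may not be linearly separable")


X = [(2.0, 2.0), (3.0, 1.0), (-1.0, -1.0), (-2.0, 0.0)]
y = [1, 1, -1, -1]
alpha, b = perceptron_dual(X, y)
print(alpha, b, sum(alpha))   # [1, 0, 1, 0] 0.0 2
```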