Transaction PowerPoint PPT Presentation

Title: Transaction

1

Transaction

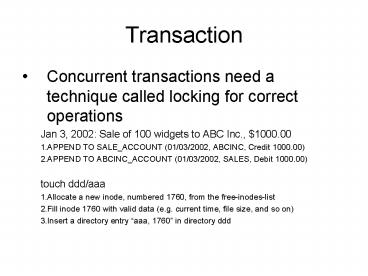

- Concurrent transactions need a technique called locking for correct operations
- Jan 3, 2002: sale of 100 widgets to ABC Inc., 1000.00
  1. APPEND TO SALE_ACCOUNT (01/03/2002, ABCINC, Credit 1000.00)
  2. APPEND TO ABCINC_ACCOUNT (01/03/2002, SALES, Debit 1000.00)
- touch ddd/aaa
  1. Allocate a new inode, numbered 1760, from the free-inodes-list
  2. Fill inode 1760 with valid data (e.g. current time, file size, and so on)
  3. Insert a directory entry (aaa, 1760) in directory ddd
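
The sale example can be sketched as an all-or-nothing update in Python. This is a minimal illustration, not a real accounting system: the function `record_sale`, the dictionary-based ledger, and the `fail_after_first` switch that simulates a crash between the two appends are all assumptions made for the sketch.

```python
def record_sale(ledger, date, customer, amount, fail_after_first=False):
    """Append both ledger entries, or neither (hypothetical helper)."""
    staged = [
        ("SALE_ACCOUNT", (date, customer, "Credit", amount)),
        (f"{customer}_ACCOUNT", (date, "SALES", "Debit", amount)),
    ]
    # Copy the current state so a failure can be undone.
    snapshot = {name: list(entries) for name, entries in ledger.items()}
    try:
        for step, (account, entry) in enumerate(staged):
            ledger.setdefault(account, []).append(entry)
            if fail_after_first and step == 0:
                raise RuntimeError("simulated crash between the two appends")
    except RuntimeError:
        # Roll back: restore the state seen before the transaction began.
        ledger.clear()
        ledger.update(snapshot)
        return False
    return True
```

If the simulated crash fires, the ledger is left exactly as it was before the call, so the credit never appears without its matching debit.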

2

What is a transaction?

- A transaction is a set of interrelated operations that causes changes to the state of a system in a way that is atomic, consistent, isolated, and durable (ACID)
- Atomic
  - The transaction must have an all-or-nothing behavior
- Consistent
  - The transaction preserves the internal consistency of the system
- Isolated
  - The net effect of the transaction is the same as if it ran alone
- Durable
  - Changes to a system by a transaction must endure through execution failures

4

Transaction

- Recovery applies changes to the database from the transaction log using a procedure called log replay
  - Roll forward a committed transaction
  - Or roll back an uncommitted transaction
- Write-ahead logging
  - Data updates must be written to the log before they are written to the database
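
The write-ahead rule can be illustrated with a small in-memory sketch. The class `WriteAheadStore`, its record layout, and the use of plain Python containers as stand-ins for the on-disk log and database are assumptions for illustration only.

```python
class WriteAheadStore:
    """Toy store that always logs an update before applying it."""

    def __init__(self):
        self.log = []        # stand-in for the on-disk transaction log
        self.database = {}   # stand-in for the on-disk database

    def update(self, txid, key, new_value):
        old_value = self.database.get(key)
        # Write-ahead rule: the log record is written first ...
        self.log.append(("update", txid, key, old_value, new_value))
        # ... and only then is the database itself changed.
        self.database[key] = new_value

    def commit(self, txid):
        # A commit record marks the transaction as eligible for roll forward.
        self.log.append(("commit", txid))
```

Because each record carries both the old and the new value, the log alone holds enough information to roll a transaction forward or back.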

5

Checkpoint

- Checkpoints limit recovery time by reducing the effective log size
- A checkpoint is a point in time when the database is updated with respect to all earlier transactions
  1. Stop accepting any new transactions
  2. Wait until all active transactions drain out; each transaction either commits or aborts
  3. Write all log entries and flush the database cache
  4. Write a special checkpoint record to the log
  5. Start accepting new transactions
- The system is unresponsive to queries coming in while steps 1 to 5 are executing

6

Fuzzy checkpoint

1. Stop accepting any new transactions. Stop accepting any new checkpoint requests
2. Collect a list of all dirty buffers in cache and a list of all active transactions. Write down a checkpoint record that contains the list of active transactions
3. Start accepting new transactions
4. Flush the dirty buffers from the list prepared in step 2
5. Start accepting new checkpoint requests
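
The five fuzzy checkpoint steps can be walked through with a single-threaded sketch. The `Database` class and all of its fields are hypothetical; in a real system the pause in steps 1 to 3 would block concurrent requests, which this model only records as flags.

```python
class Database:
    """Toy database used to trace the fuzzy checkpoint steps."""

    def __init__(self):
        self.log = []                       # append-only transaction log
        self.disk = {}                      # stand-in for the on-disk database
        self.cache = {}                     # buffer cache: key -> value
        self.dirty = set()                  # keys changed in cache, not yet on disk
        self.active = set()                 # ids of transactions still in progress
        self.accepting_transactions = True
        self.accepting_checkpoints = True

    def write(self, txid, key, value):
        if not self.accepting_transactions:
            raise RuntimeError("transactions are paused by a checkpoint")
        self.active.add(txid)
        self.log.append(("update", txid, key, value))
        self.cache[key] = value
        self.dirty.add(key)

    def fuzzy_checkpoint(self):
        # Step 1: pause new transactions and new checkpoint requests.
        self.accepting_transactions = False
        self.accepting_checkpoints = False
        # Step 2: snapshot the dirty buffers and log the active transactions.
        to_flush = sorted(self.dirty)
        self.log.append(("checkpoint", sorted(self.active)))
        # Step 3: transactions may resume before any buffer is flushed.
        self.accepting_transactions = True
        # Step 4: flush only the buffers listed in step 2.
        for key in to_flush:
            self.disk[key] = self.cache[key]
            self.dirty.discard(key)
        # Step 5: allow the next checkpoint.
        self.accepting_checkpoints = True
```

The pause covers only steps 1 to 3, which involve no disk flushing, so new transactions are held up far more briefly than with a checkpoint that drains all active work first.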

7

Log replay

- Locate the end of the log
- Traverse the log backward from the end to locate the start of the log
- Scan the log to build a list of transactions that need replay. Committed transactions need to be rolled forward; aborted or uncommitted transactions need to be rolled back
- Perform optimizations on the list of transactions. If the same data item is written several times in the list, only the last write needs to be replayed
- Replay. Perform roll forward and rollbacks of transactions in chronological order
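
A minimal sketch of the replay step follows. The record layout (`("update", txid, key, old, new)` and `("commit", txid)`), the use of `None` for "key did not exist", and the choice to undo uncommitted writes newest-first before redoing committed ones are assumptions of this sketch, not details taken from the slides.

```python
def replay(log, data):
    """Bring `data` to a consistent state from `log` (oldest record first)."""
    committed = {rec[1] for rec in log if rec[0] == "commit"}
    updates = [rec for rec in log if rec[0] == "update"]

    # Roll back writes of transactions that never committed, newest first.
    for _, txid, key, old, _new in reversed(updates):
        if txid not in committed:
            if old is None:
                data.pop(key, None)   # the key did not exist before the write
            else:
                data[key] = old

    # Roll forward committed writes. A later write to the same key replaces
    # an earlier one, so only the last write per key is actually applied.
    last_write = {}
    for _, txid, key, _old, new in updates:
        if txid in committed:
            last_write[key] = new
    data.update(last_write)
    return data
```

For example, if transaction 1 wrote `a` twice and committed while transaction 2 wrote `b` and never committed, replay leaves only the final value of `a` in place.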

8

Volume Manager (VM)

- VM provides storage virtualization
- A VM is system software that runs on host computers to manage storage contained in hard disks or hard disk arrays
- Storage virtualization can be carried out either by a volume manager, or intelligent disk arrays, or both

9

VM Objects

- VM disks
  - a physical hard disk that has been given to the volume manager to use
- Disk groups
  - a set of VM disks with the same family name
- Subdisks
  - has no relation to hard disk partitions or disk slices
  - a contiguous region of storage allocated from a VM disk
  - subdisks do not overlap: a particular block of the hard disk can be allocated to one subdisk at most
- Plexes
  - an aggregation of one or more subdisks
  - plexes do not overlap: a subdisk can only belong to one plex at a time
- Volumes
  - plexes can themselves be combined in various ways to yield a volume
- Summary
  1. VM disks are subdivided into subdisks
  2. Subdisks are combined to form plexes
  3. One or more plexes can be aggregated to form a volume

10

VM Objects

11

Concatenated Storage

- Several subdisks can be concatenated to form a plex
- Logical blocks of the plex are associated with subdisks in sequence
- Concatenated storage may be formed from subdisks on the same VM disk, or more commonly, from several disks. The latter is called spanned storage
- Concatenated storage capacity equals the sum of the subdisk capacities
- Bandwidth is sensitive to access patterns. Realized bandwidth may be less than the maximum value if hot spots develop
- Concatenated storage created from n similar disks has approximately n times poorer net reliability than a single disk
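
The "in sequence" mapping can be sketched as a lookup from a plex block number to a subdisk and an offset inside it. The function name `locate_block` and the representation of a plex as a list of subdisk sizes (in blocks) are assumptions for illustration.

```python
from bisect import bisect_right
from itertools import accumulate


def locate_block(subdisk_sizes, block):
    """Map a logical plex block to (subdisk index, offset within that subdisk)."""
    ends = list(accumulate(subdisk_sizes))   # running end of each subdisk
    if not 0 <= block < ends[-1]:            # ends[-1] is the plex capacity
        raise IndexError("block outside the plex")
    index = bisect_right(ends, block)        # first subdisk ending past `block`
    start = ends[index] - subdisk_sizes[index]
    return index, block - start
```

With subdisks of 100, 50, and 200 blocks the plex holds 350 blocks, and block 100 is the first block of the second subdisk. A workload that touches only blocks 0 to 99 keeps a single subdisk busy, which is the hot-spot effect mentioned above.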

13

Striped Storage

- Striped storage distributes logical blocks of the plex more evenly over all subdisks compared to concatenated storage
- Storage is distributed in small chunks called stripe units
- The plex is laid out in regions called stripes
- Each stripe has one stripe unit on each subdisk. Thus, if there are n subdisks, each stripe contains n stripe units
- Striped storage corresponds to RAID 0

14

Striped Storage

15

Striped Storage

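- Let one stripe unit have a size of $u$ blocks, and let there be $n$ subdisks
- Subdisk number of block $i$: $d = (i \,\mathrm{div}\, u) \bmod n$
- Stripe number of block $i$: $s = i \,\mathrm{div}\, (n u)$
- For example, for block $i = 73$ with $u = 8$ and $n = 7$:
  - $d = (73 \,\mathrm{div}\, 8) \bmod 7 = 9 \bmod 7 = 2$
  - $s = 73 \,\mathrm{div}\, (7 \times 8) = 73 \,\mathrm{div}\, 56 = 1$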
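
The two formulas translate directly into code. The function name `locate_striped_block` is hypothetical, and the third returned value (the block offset inside the subdisk, $s \cdot u + i \bmod u$) is an addition of this sketch that follows from the layout but is not stated on the slide.

```python
def locate_striped_block(i, u, n):
    """Return (subdisk number, stripe number, offset in subdisk) for block i."""
    unit = i // u             # index of the stripe unit that holds block i
    d = unit % n              # subdisk number: (i div u) mod n
    s = i // (n * u)          # stripe number: i div (n * u)
    offset = s * u + i % u    # derived: full units before it, plus position in unit
    return d, s, offset
```

Calling `locate_striped_block(73, 8, 7)` reproduces the worked example: subdisk 2, stripe 1.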

16

Scatter-gather I/O

- A small stripe unit size helps to distribute accesses more evenly over all subdisks
- Small stripe sizes have a possible drawback, however: disk bandwidth decreases for small I/O sizes
- An I/O request that covers several stripes would normally be broken up into multiple requests, one request per stripe unit
- With scatter-gather, all requests to one subdisk can be combined into a single contiguous I/O to the subdisk, though the data is placed in several non-contiguous regions in memory
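
The combining step can be sketched as a planner that splits a logical request into stripe-unit pieces and then merges the pieces that fall on the same subdisk. The function `plan_io` and its return layout are hypothetical; the block mapping assumes the RAID 0 layout with stripe unit size `u` and `n` subdisks.

```python
def plan_io(start, length, u, n):
    """Plan one contiguous I/O per subdisk for logical blocks [start, start+length).

    Returns {subdisk: (disk_offset, total_blocks, [(mem_offset, blocks), ...])},
    where each (mem_offset, blocks) pair is one scattered region of the
    caller's memory buffer.
    """
    plan = {}
    block = start
    end = start + length
    while block < end:
        unit = block // u
        d = unit % n                       # subdisk holding this piece
        s = unit // n                      # stripe holding this piece
        within = block % u                 # position inside the stripe unit
        count = min(u - within, end - block)
        if d not in plan:
            # First piece on this subdisk fixes where its single I/O starts.
            plan[d] = [s * u + within, 0, []]
        plan[d][1] += count                # later pieces extend the same I/O
        plan[d][2].append((block - start, count))
        block += count
    return {d: (off, total, segs) for d, (off, total, segs) in plan.items()}
```

For a 20-block request starting at block 2 with `u = 4` and `n = 3`, six per-unit pieces collapse into three subdisk I/Os, each reading or writing two separate memory regions.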

17

Scatter-gather I/O

18

Kernel synchronization

- Parts of the kernel are not run serially, but in an interleaved way, and thus need a way to synchronize
- A kernel control path is defined as a sequence of instructions executed by the kernel
- Kernel requests may be issued in several possible ways
  - A process executing in User Mode
  - An external device
  - Page Fault exception
  - Interprocessor interrupt

19

Kernel synchronization

- CPU interleaves kernel control paths when one of the following events happens
  - A process switch
  - An interrupt
  - A deferrable function

20

Kernel synchronization

- The Linux kernel is not preemptive
- No process running in Kernel Mode may be replaced by another process
- Interrupt, exception, or softirq handling can interrupt a process running in Kernel Mode; however, when the handler terminates, the kernel control path of the process is resumed
- A kernel control path performing interrupt handling cannot be interrupted by a kernel control path executing a deferrable function or a system call service routine

21

Synchronization primitives

- Atomic operation
  - Atomic read-modify-write instruction to a counter
- Memory barrier
  - Avoid instruction re-ordering
- Spin lock
  - Lock with busy waiting
- Semaphore
  - Lock with blocking wait
- Local interrupt disabling
  - Forbid interrupt handling on a single CPU
- Local softirq disabling
  - Forbid deferrable function handling on a single CPU
- Global interrupt disabling
  - Forbid interrupt and softirq handling on all CPUs

22

Atomic operations

- Several assembly language instructions are of type read-modify-write
- Must be executed in a single instruction without being interrupted in the middle
- One way is to lock the memory bus until the instruction is finished
- Linux kernel provides a special atomic_t type for supporting atomic operations

23

Atomic operations

- atomic_read(v)

- atomic_set(v,i)

- atomic_add(i,v)

- atomic_sub(i,v)

- atomic_sub_and_test(i,v)

- atomic_inc(v)

- atomic_dec(v)

- atomic_dec_and_test(v)

- atomic_inc_and_test(v)

- atomic_add_negative(i,v)

24

Atomic operations

- test_bit(nr, addr)

- set_bit(nr, addr)

- clear_bit(nr,addr)

- change_bit(nr, addr)

- test_and_set_bit(nr, addr)

- test_and_clear_bit(nr, addr)

- test_and_change_bit(nr, addr)

- atomic_clear_mask(mask, addr)

- atomic_set_mask(mask, addr)

25

Example

    void clm_add_global_wait(snq_llm_table_t *lltp)
    {
        DECLARE_WAITQUEUE(wait, current);

        if (!qsi_test_bit(STATE_BLOCKING_CLM, &snq_clm_config.state))
            CLM_ERROR("Global Blocking mode is not set");
        if (qsi_test_bit(LLT_STATE_BLOCKING, &lltp->state)) {
            qsi_atomic_inc(&lltp->count);
            current->state = TASK_UNINTERRUPTIBLE;
            add_wait_queue(&lltp->waitq, &wait);
            schedule();
            remove_wait_queue(&lltp->waitq, &wait);
            qsi_atomic_dec(&lltp->count);
        }
    }

26

Memory barrier

- Ensure that the operations placed before the primitive are finished before starting the operations placed after the primitive
- In the 80x86 processors, the following kinds of assembly language instructions are said to be serializing
  - All instructions that operate on I/O ports
  - All instructions prefixed by the lock byte
  - All instructions that write into control registers, system registers, or debug registers
  - A few special assembly language instructions

27

Memory barrier

- mb()
  - Memory barrier for MP and UP
- rmb()
  - Read memory barrier for MP and UP
- wmb()
  - Write memory barrier for MP and UP
- smp_mb()
  - Memory barrier for MP only
- smp_rmb()
  - Read memory barrier for MP only
- smp_wmb()
  - Write memory barrier for MP only

28

Spin locks

- When a kernel control path must access a shared data structure or enter a critical section, it needs to acquire a lock for it
- If the kernel control path finds the spin lock open, it acquires the lock and continues its execution
- If the kernel control path finds the lock closed, it spins around until the lock is released

29

Spin locks

- Spin locks are usually very convenient, since many kernel resources are locked for a fraction of a millisecond only
- A spin lock is represented by a spinlock_t structure consisting of a single lock field; the value 1 corresponds to the unlocked state, while any negative value and zero denote the locked state

30

Spin locks

- spin_lock_init()
  - Set the spin lock to 1 (unlocked)
- spin_lock()
  - Cycle until the spin lock becomes 1, then set it to 0
- spin_unlock()
  - Set the spin lock to 1
- spin_unlock_wait()
  - Wait until the spin lock becomes 1
- spin_is_locked()
  - Return 0 if the spin lock is set to 1; 1 otherwise
- spin_trylock()
  - Set the spin lock to 0, and return 1 if the lock is obtained; 0 otherwise

31

Spin locks

    1:  lock; decb slp
        jns 3f
    2:  cmpb $0,slp
        pause
        jle 2b
        jmp 1b
    3:
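
The acquire loop above can be traced with a small single-threaded model of the lock field. `SpinLockModel` is a hypothetical teaching aid, not kernel code: it has no real atomicity and ignores the 8-bit wraparound of `decb`. It only shows why 1 means unlocked while zero and negative values mean locked.

```python
class SpinLockModel:
    """Tracks the lock field through acquire attempts and releases."""

    def __init__(self):
        self.lock = 1                # spin_lock_init(): 1 means unlocked

    def acquire_attempt(self):
        # Mirrors "lock; decb slp" followed by "jns 3f":
        # decrement, and succeed only if the result is not negative.
        self.lock -= 1
        return self.lock >= 0

    def is_locked(self):
        # Zero and every negative value denote the locked state.
        return self.lock <= 0

    def unlock(self):
        self.lock = 1                # spin_unlock(): back to unlocked
```

The first attempt moves the field from 1 to 0 and succeeds. A second attempt by another path moves it to -1 and fails, after which that path would spin on the compare at label 2 until the holder stores 1 again.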

32

Read/write spin locks

- Have been introduced to increase the amount of concurrency inside the kernel
- Provide multiple reader/single writer scheme
- Each read/write spin lock is a rwlock_t structure; its lock field is a 32-bit field that encodes two distinct pieces of information
  - A 24-bit counter denoting the number of kernel control paths currently reading the protected data structure
  - An unlock flag that is set when no kernel control path is reading or writing
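
One way to picture the two pieces of information is the sketch below. The bit positions are an assumption based on the 2.4-era i386 layout and do not appear on the slide: the unlock flag is taken to be bit 24, and the counter in bits 0 to 23 is taken to hold the two's complement of the number of readers. The helper functions are hypothetical and operate on plain integers rather than on a real lock.

```python
RW_LOCK_BIAS = 0x01000000   # assumed idle value: bit 24 set, counter zero
COUNTER_MASK = 0x00FFFFFF   # bits 0-23


def decode(lock):
    """Return (unlock flag, number of readers) for a lock field value."""
    unlocked = bool(lock & RW_LOCK_BIAS)
    readers = (-lock) & COUNTER_MASK      # undo the two's complement
    return unlocked, readers


def try_read_lock(lock):
    new = lock - 1                        # a reader subtracts 1
    return (new, True) if new >= 0 else (lock, False)


def try_write_lock(lock):
    # A writer succeeds only when nobody is reading or writing.
    return (0, True) if lock == RW_LOCK_BIAS else (lock, False)


def read_unlock(lock):
    return lock + 1


def write_unlock(lock):
    return lock + RW_LOCK_BIAS
```

Under these assumptions an idle lock is `0x01000000`, a lock held by one reader is `0x00FFFFFF`, and a lock held by a writer is `0x00000000`.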

33

The Big Reader Lock

- Read/write spin locks are useful for data structures that are accessed by many readers and a few writers
- However, any time a CPU acquires a read/write spin lock, the counter in rwlock_t must be updated
- A further access to the rwlock_t data structure performed by another CPU incurs a significant performance penalty because the hardware caches of the two processors must be synchronized

34

The Big Reader Lock

- The idea is to split the reader portion of the lock across all CPUs, so that each per-CPU data structure lies in its own line of the hardware caches
- The writer portion of the lock is common to all CPUs because only one CPU can acquire the lock for writing
- The __brlock_array array stores the reader portions of the big reader read/write spin locks; for every such lock, and for every CPU in the system, the array includes a lock flag, which is set to 1 when the lock is closed for reading
- The __br_write_locks array stores the writer portions of the big reader spin locks

35

The Big Reader Lock

- br_read_lock() function acquires the spin lock for reading. It sets the lock flag in __brlock_array corresponding to the executing CPU and the big reader spin lock to 1
- br_read_unlock() function simply clears the lock flag in __brlock_array
- br_write_lock() function acquires the spin lock for writing. It invokes spin_lock to get the spin lock in __br_write_locks corresponding to the big reader spin lock, and then checks that all lock flags in __brlock_array corresponding to the big reader lock are cleared
- br_write_unlock() function simply invokes spin_unlock to release the spin lock in __br_write_locks
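
The division of labor between the per-CPU reader flags and the shared writer lock can be modeled in a few lines. `BigReaderLockModel` is a hypothetical single-threaded model of one big reader lock: where a real CPU would spin, the model returns a value saying so, and it does not reproduce cache-line placement or real atomicity.

```python
class BigReaderLockModel:
    """One big reader lock: a flag per CPU for readers, one lock for writers."""

    def __init__(self, ncpus):
        self.read_flags = [0] * ncpus   # this lock's flags in __brlock_array
        self.writer_held = False        # this lock's entry in __br_write_locks

    def read_lock(self, cpu):
        if self.writer_held:
            return False                # a real reader would spin and retry
        self.read_flags[cpu] = 1        # touches only this CPU's own flag
        return True

    def read_unlock(self, cpu):
        self.read_flags[cpu] = 0

    def write_lock(self):
        """Take the writer portion; return the CPUs the writer must wait for."""
        if self.writer_held:
            raise RuntimeError("writer portion already held; a real CPU would spin")
        self.writer_held = True
        return [cpu for cpu, flag in enumerate(self.read_flags) if flag]

    def write_unlock(self):
        self.writer_held = False
```

Readers on different CPUs never write to the same flag, which is the point of the design. The cost moves to the writer, which has to inspect every CPU's flag.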

36

Semaphores

- Implement a locking primitive that allows waiters to sleep until the desired resource becomes free
- Linux offers two kinds of semaphores
  - Kernel semaphores, which are used by kernel control paths
  - System V IPC semaphores, which are used by User Mode processes

37

Semaphores

- Similar to a spin lock, in that it doesn't allow a kernel control path to proceed unless the lock is open
- However, whenever a kernel control path tries to acquire a busy resource protected by a kernel semaphore, the corresponding process is suspended
- It becomes runnable again when the resource is released; therefore, kernel semaphores can be acquired only by functions that are allowed to sleep

38

Semaphores

- A kernel semaphore is an object of type struct semaphore, containing the fields
  - count
    - Stores an atomic_t value
    - If it is greater than 0, the resource is free
    - If it is equal to 0, the semaphore is busy but no other process is waiting for the protected resource
    - If count is negative, the resource is unavailable and at least one process is waiting for it
  - wait
    - Stores the address of a wait queue list that includes all sleeping processes that are currently waiting for the resource
  - sleepers
    - Stores a flag that indicates whether some processes are sleeping on the semaphore

39

Semaphores

- init_MUTEX and init_MUTEX_LOCKED
  - Used to initialize a semaphore for exclusive access
  - They set the count field to 1 (free resource with exclusive access) and 0 (busy resource with exclusive access currently granted to the process that initializes the semaphore), respectively
- Could be initialized with an arbitrary positive value n for count

40

Semaphores

- up() function increments the count field of the sem semaphore, and then it checks whether its value is greater than 0
- If the counter is greater than 0, there was no process sleeping in the wait queue, so nothing has to be done

    void __up(struct semaphore *sem)
    {
        wake_up(&sem->wait);
    }

41

Semaphore

- down() function decrements the count field of the sem semaphore, and then checks whether its value is negative
- If count is greater than or equal to 0, the current process acquires the resource and the execution continues
- If count is negative, the current process must be suspended: the contents of some registers are saved on the stack, and then __down() is invoked
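
The meaning of the count values can be checked with a simplified model of down and up. `SemaphoreModel` is hypothetical and deliberately simpler than the kernel code: it is single-threaded, it hands the resource directly to the first waiter on release, and it leaves out the sleepers field entirely.

```python
from collections import deque


class SemaphoreModel:
    """Counts and queues only; no real sleeping or waking takes place."""

    def __init__(self, count=1):
        self.count = count       # > 0 free, 0 busy, < 0 busy with waiters
        self.wait = deque()      # tasks that would be asleep

    def down(self, task):
        self.count -= 1
        if self.count >= 0:
            return True          # resource acquired, execution continues
        self.wait.append(task)   # count is negative: the task must sleep
        return False

    def up(self):
        self.count += 1
        if self.count > 0:
            return None          # nobody was sleeping, nothing to do
        return self.wait.popleft()   # wake the first waiter, which now owns it
```

Starting from a count of 1, a first `down` leaves 0 (busy, no waiters), a second leaves -1 (one waiter), and the following `up` returns the count to 0 while handing the resource to the waiter.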

42

Semaphore

    down:
        movl $sem, %ecx
        lock; decl (%ecx)
        jns 1f
        pushl %eax
        pushl %edx
        pushl %ecx
        call __down
        popl %ecx
        popl %edx
        popl %eax
    1:

43

Semaphore

    void __down(struct semaphore *sem)
    {
        DECLARE_WAITQUEUE(wait, current);

        current->state = TASK_UNINTERRUPTIBLE;
        add_wait_queue_exclusive(&sem->wait, &wait);
        spin_lock_irq(&semaphore_lock);
        sem->sleepers++;
        for (;;) {
            if (!atomic_add_negative(sem->sleepers - 1, &sem->count)) {
                sem->sleepers = 0;
                break;
            }
            sem->sleepers = 1;
            spin_unlock_irq(&semaphore_lock);
            schedule();
            current->state = TASK_UNINTERRUPTIBLE;
            spin_lock_irq(&semaphore_lock);
        }
        spin_unlock_irq(&semaphore_lock);
        /* ... */
    }