The method used for staffing



1

The method used for staffing

Staffing issues

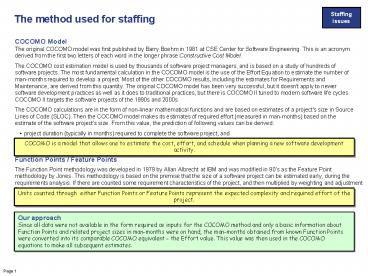

- COCOMO Model

- The original COCOMO model was first published by Barry Boehm in 1981 and was later developed further at the USC Center for Software Engineering (CSE). Its name is an acronym formed from the first two letters of each word in the phrase Constructive Cost Model.

- The COCOMO cost estimation model is used by thousands of software project managers and is based on a study of hundreds of software projects. The most fundamental calculation in the COCOMO model is the Effort Equation, which estimates the number of man-months required to develop a project. Most of the other COCOMO results, including the estimates for Requirements and Maintenance, are derived from this quantity.

The original COCOMO model has been very successful, but it does not fit newer software development practices as well as it fits traditional ones. COCOMO II, tuned to modern software life cycles, addresses this; it targets the software projects of the 1990s and 2000s.

- The COCOMO calculations are non-linear mathematical functions based on an estimate of the project's size in Source Lines of Code (SLOC). From this size estimate, the COCOMO model estimates the required effort (measured in man-months). The following values can then be derived from the effort:

- project duration (typically in months) required to complete the software project, and

- required staffing (typically in FTE).

COCOMO is a model that allows one to estimate the cost, effort, and schedule when planning a new software development activity.
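The chain described above (size in SLOC, then effort, then duration and staffing) can be sketched as follows. This is a minimal illustration, not the presentation's own calculation: the coefficients are the commonly cited Basic COCOMO 81 values for the three development modes, which the slides do not list, so treat them as an assumption. The function name `basic_cocomo` is hypothetical.

```python
# Basic COCOMO 81 sketch. The (a, b, c, d) coefficients are the commonly
# cited Basic COCOMO values; they are an assumption here, since the slides
# do not list them.
COEFFICIENTS = {
    # mode: (a, b, c, d)
    "organic": (2.4, 1.05, 2.5, 0.38),
    "semi-detached": (3.0, 1.12, 2.5, 0.35),
    "embedded": (3.6, 1.20, 2.5, 0.32),
}


def basic_cocomo(ksloc: float, mode: str = "organic") -> tuple[float, float, float]:
    """Return (effort in man-months, duration in months, average staff in FTE)."""
    a, b, c, d = COEFFICIENTS[mode]
    effort = a * ksloc ** b      # Effort Equation: E = a * KSLOC^b
    duration = c * effort ** d   # schedule derived from the effort
    staff = effort / duration    # average full-time-equivalent staff
    return effort, duration, staff


if __name__ == "__main__":
    e, t, s = basic_cocomo(32.0)  # hypothetical 32 KSLOC organic-mode project
    print(f"effort={e:.1f} MM, duration={t:.1f} months, staff={s:.1f} FTE")
```

Because the exponent `b` is greater than 1, effort grows faster than size, which is the non-linearity the slide refers to.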

Function Points / Feature Points

The Function Point methodology was developed in 1979 by Allan Albrecht at IBM and was modified in the 1990s by Jones as the Feature Point methodology. The methodology is based on the premise that the size of a software project can be estimated early, during requirements analysis. Certain characteristics of the requirements are counted, the counts are multiplied by weighting and adjustment factors, and the sum is the total point count.

Units counted through either Function Points or Feature Points represent the expected complexity and required effort of the project.
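The adjustment step mentioned above can be illustrated with the IFPUG convention, which is an assumption here because the slides only speak of "weighting and adjustment factors": 14 general system characteristics are each rated from 0 to 5, and the value adjustment factor is 0.65 plus 0.01 times the sum of the ratings. The function names are hypothetical.

```python
# Function Point adjustment sketch, assuming the IFPUG convention:
# 14 general system characteristics (GSCs), each rated 0-5, give
# VAF = 0.65 + 0.01 * sum(ratings), so VAF ranges from 0.65 to 1.35.


def value_adjustment_factor(gsc_ratings: list[int]) -> float:
    if len(gsc_ratings) != 14:
        raise ValueError("expected 14 general system characteristic ratings")
    if any(r < 0 or r > 5 for r in gsc_ratings):
        raise ValueError("each rating must be between 0 and 5")
    return 0.65 + 0.01 * sum(gsc_ratings)


def adjusted_function_points(unadjusted_fp: float, gsc_ratings: list[int]) -> float:
    # Weighted (unadjusted) count scaled by the adjustment factor.
    return unadjusted_fp * value_adjustment_factor(gsc_ratings)


if __name__ == "__main__":
    # Hypothetical project: 266 unadjusted FP, every GSC rated "average" (3).
    print(adjusted_function_points(266, [3] * 14))
```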

Our approach

Since not all data were available in the form required as inputs for the COCOMO method, and only basic information about Function Points and the related project sizes in man-months was on hand, the man-months obtained from the known Function Points were converted into their COCOMO equivalent, the Effort value. This value was then used in the COCOMO equations to make all subsequent estimates.
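A minimal sketch of this approach, under stated assumptions: effort is taken as the Function Point count divided by a productivity rate in FP per man-month, and the schedule uses the Basic COCOMO organic-mode coefficients c = 2.5 and d = 0.38. Neither the productivity value nor the coefficients come from the slides; both are illustrative, and the function names are hypothetical.

```python
# Sketch: derive the COCOMO Effort value from a known Function Point count,
# then feed it into the COCOMO schedule equation. The productivity rate and
# the coefficients c = 2.5, d = 0.38 (Basic COCOMO, organic mode) are
# illustrative assumptions.


def effort_from_function_points(fp: float, fp_per_man_month: float) -> float:
    """Effort in man-months = size in FP / productivity in FP per man-month."""
    if fp_per_man_month <= 0:
        raise ValueError("productivity must be positive")
    return fp / fp_per_man_month


def schedule_from_effort(effort: float, c: float = 2.5, d: float = 0.38) -> tuple[float, float]:
    """Return (duration in months, average staff in FTE) derived from effort."""
    duration = c * effort ** d
    return duration, effort / duration


if __name__ == "__main__":
    # Hypothetical 1000 FP project at 10 FP per man-month.
    effort = effort_from_function_points(1000, 10.0)
    duration, staff = schedule_from_effort(effort)
    print(f"effort={effort:.0f} MM, duration={duration:.1f} months, staff={staff:.1f} FTE")
```

The design keeps the two steps separate, so an effort figure obtained some other way (for example, from historical project data) can be passed straight to `schedule_from_effort`.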

2

Complexity of applications in function points

Metrics and motivation

Function Points (Albrecht, 1979) is a metric used for estimating the size of an application. The basic idea of Function Points is that five items are counted: 1) the inputs to the application, 2) the outputs from it, 3) inquiries by users, 4) the data files that would be updated by the application, and 5) the interfaces to the application. Once these figures are known, they are multiplied by the coefficients 4, 5, 4, 10, and 7, respectively (these values can be adjusted based on one's own experience, if required). The sum of the weighted counts indicates the estimated size of the application.
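The counting rule above amounts to a weighted sum. The sketch below applies the slide's coefficients; the item keys and the example counts are hypothetical.

```python
# Unadjusted Function Point count: each of the five counted items is
# multiplied by the coefficient given on the slide (4, 5, 4, 10, 7).
# The item keys and the example counts are hypothetical.
FP_WEIGHTS = {
    "inputs": 4,
    "outputs": 5,
    "inquiries": 4,
    "data_files": 10,
    "interfaces": 7,
}


def function_points(counts: dict[str, int], weights: dict[str, int] = FP_WEIGHTS) -> int:
    # Items not present in `counts` count as zero; unknown items are rejected.
    unknown = set(counts) - set(weights)
    if unknown:
        raise KeyError(f"unknown items: {sorted(unknown)}")
    return sum(weights[item] * counts.get(item, 0) for item in weights)


if __name__ == "__main__":
    example = {"inputs": 20, "outputs": 15, "inquiries": 10, "data_files": 5, "interfaces": 3}
    print(function_points(example))  # 20*4 + 15*5 + 10*4 + 5*10 + 3*7
```

Passing a different `weights` dictionary covers the case where the coefficients are tuned from one's own experience.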

Function Points worked reasonably well for simple transaction-processing and management information systems in the 1980s, but unfortunately they often proved inadequate for estimating complex applications such as information systems for high-tech industries. Feature Points (Jones, 1995), a superset of classical FP, were introduced to correct this problem. Feature Points add a new measurement, algorithms, with a weighting of 3. Additionally, the data file weighting is reduced from 10 to 7.
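The Feature Point variant changes only the weights: the data-file weight drops from 10 to 7 and algorithms are counted with a weight of 3. A sketch with hypothetical item keys and counts:

```python
# Feature Point count sketch: the Function Point weights from the slide,
# with the data-file weight lowered from 10 to 7 and a new item,
# algorithms, weighted 3. Item keys and example counts are hypothetical.
FEATURE_POINT_WEIGHTS = {
    "inputs": 4,
    "outputs": 5,
    "inquiries": 4,
    "data_files": 7,
    "interfaces": 7,
    "algorithms": 3,
}


def feature_points(counts: dict[str, int]) -> int:
    # Items not present in `counts` count as zero; unknown items are rejected.
    unknown = set(counts) - set(FEATURE_POINT_WEIGHTS)
    if unknown:
        raise KeyError(f"unknown items: {sorted(unknown)}")
    return sum(w * counts.get(item, 0) for item, w in FEATURE_POINT_WEIGHTS.items())


if __name__ == "__main__":
    example = {"inputs": 20, "outputs": 15, "inquiries": 10,
               "data_files": 5, "interfaces": 3, "algorithms": 8}
    print(feature_points(example))
```

For an application with few algorithms, the lower data-file weight can make the Feature Point total smaller than the classical FP total; algorithm-heavy systems gain points instead.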

Productivity rates in FP per man-month by software project size

| Productivity rate (FP per man-month) | 100 FP | 1000 FP | 10000 FP |
|---|---|---|---|
| > 100 | 1.0 | 0.01 | 0.0 |
| 75 - 100 | 3.0 | 0.1 | 0.0 |
| 50 - 75 | 7.0 | 1.0 | 0.0 |
| 25 - 50 | 15.0 | 5.0 | 0.1 |
| 15 - 25 | 40.0 | 10.0 | 1.4 |
| 5 - 15 | 25.0 | 50.0 | 13.5 |
| 1 - 5 | 10.0 | 30.0 | 70.0 |
| < 1 | 4.0 | 4.0 | 15.0 |

(Jones)

Source code statements required for one FP

| Programming language | Source statements per FP |
|---|---|
| Assembler | 320 |
| Low level: C | 150 |
| Classical: Fortran, Cobol | 106 |
| Structured: Pascal, RPG, PL1 | 80 |
| Hybrid object oriented: Modula-2, C | 71 |
| Non imperative: Lisp, Prolog | 64 |
| 4GL languages | 40 |
| Object oriented: VB, Java, C | 32 |
| Pure object oriented: Smalltalk, CLOS | 21 |
| Query languages: SQL, QBE, OQL | 16 |

(Jones)
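The statements-per-FP ratios in the Jones table can turn a Function Point count into the SLOC size that COCOMO expects. The sketch below uses the table's values; the shortened dictionary keys and the function name are hypothetical labels chosen for this example.

```python
# Sketch: convert a Function Point count into thousands of source lines
# (KSLOC) using the "source code statements per FP" ratios from the Jones
# table. The dictionary keys are hypothetical shortened labels.
SLOC_PER_FP = {
    "assembler": 320,
    "low_level": 150,
    "classical": 106,
    "structured": 80,
    "hybrid_oo": 71,
    "non_imperative": 64,
    "4gl": 40,
    "object_oriented": 32,
    "pure_oo": 21,
    "query": 16,
}


def fp_to_ksloc(fp: float, language_class: str) -> float:
    """Estimated size in KSLOC = FP * statements per FP / 1000."""
    return fp * SLOC_PER_FP[language_class] / 1000.0


if __name__ == "__main__":
    # Hypothetical 266 FP application written in an object-oriented language.
    print(fp_to_ksloc(266, "object_oriented"))
```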

3

Metrics management is the process of collecting, summarizing and acting on measurements

Metrics and motivation

Metrics management can help improve both the quality of the software that is developed or maintained and the software processes of the organization itself. Metrics management provides information critical to understanding the quality of the work that the project team has performed and how effectively that work was performed.

Software companies routinely collect metrics because this is the only proven tool for obtaining managerial information for software development and quality assurance. The Capability Maturity Model (CMM) recommends a number of metrics to be collected and analyzed. The optimal frequency for collecting the recommended metrics is weekly (or more often, if a collection tool is available), and the optimal frequency for analyzing them is between weekly and monthly.

The need for Metrics in CMM

Why Collect Metrics?

- To support greater predictability and accuracy of schedules and estimates.

- To support quality assurance efforts by identifying the techniques that work best and the areas that need more work.

- To support productivity and process improvement efforts by measuring how efficient the IT staff is and by indicating where the IT staff needs to improve.

- To improve management control by tracking both projects and people.

- To improve the motivation of developers by making them aware of what works and what does not.

- To improve communication by describing accurately what is happening and by identifying trends early on.

4

Family of potential SW metrics

Metrics and motivation

Stage

Potential Metrics

Define Requirements and Justify

- Number of use cases

- Function/feature points

- Level of risk

- Project size

- Cost/benefit breakpoint

- Number of reused infrastructure artifacts

- Number of introduced infrastructure artifacts

- Requirements instability

- Procedure/Operation count of a module

- Number of variables per data structure

- Size of procedures/operations

- Number of data types per database

- Number of data relationships per database

- Number of interfaces per module

- Requirements instability

- Procedures/Operations size

Define Management Documents

Model

Program, Generalize and Test

Recommended metrics are printed in bold face.

5

Family of potential SW metrics

Metrics and motivation

Stage

Potential Metrics

- Time to fix defects

- Defect recurrence

- Defect type recurrence

- Number of defects

- Defect source count

- Work effort to fix a defect

- Percentage of items reworked

- Enhancements implemented per release

- Amount of documentation

- Percentage of customers trained

- Average training time per user trainer

- Number of lessons learned

- Percentage of staff members assessed

- Average response time

- Average resolution time

- Support request volume

- Support backlog

- Support request aging

- Support engineer efficiency

- Reopened support requests

- Mean time between failures

- Software change requests opened and closed

Deliver and Test

Assess

Support

Recommended metrics are printed in bold face.

6

Metrics Applicable to All of the Phases and Stages

Possible metrics to collect

Metrics

Description

Calendar time expended

This schedule metric is a measure of the calendar time needed to complete a task, where a task can be as small as the creation of a deliverable or as large as a project phase or an entire project.

Overtime

This cost metric is a measure of the amount of overtime for a project and can be collected either as a number of workdays or as a percentage of overall effort. This metric is often subdivided into overtime for work directly related to development, such as programming or testing, and overtime for indirect effort, such as training or team coordination.

Staff turnover

This productivity metric is a measure of the number of people gained and lost over a defined period of time, typically a calendar month. This metric is often collected by position/role and can be used to help define human resources requirements and the growth rate of your information technology (IT) department.

Work effort expended

This cost metric is a measure of the amount of work expended to complete a task, typically measured in work days or work months. This metric is often collected by task (see above).
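Two of the metrics in this table have a simple arithmetic form: overtime as a percentage of overall effort, and staff turnover as the people gained and lost over a period. The sketch below computes both; the `PeriodStaffing` record, its field names, and the example figures are hypothetical.

```python
# Sketch of two metrics from the table: overtime as a percentage of overall
# effort, and staff turnover (people gained and lost) over one period.
# The PeriodStaffing record, its fields and the figures are hypothetical.
from dataclasses import dataclass


@dataclass
class PeriodStaffing:
    headcount_start: int  # people on staff at the start of the period
    joined: int           # people gained during the period
    left: int             # people lost during the period


def overtime_percentage(overtime_days: float, total_effort_days: float) -> float:
    if total_effort_days <= 0:
        raise ValueError("total effort must be positive")
    return 100.0 * overtime_days / total_effort_days


def staff_turnover(period: PeriodStaffing) -> dict[str, int]:
    """People gained and lost over the period, plus the resulting headcount."""
    return {
        "gained": period.joined,
        "lost": period.left,
        "net_change": period.joined - period.left,
        "headcount_end": period.headcount_start + period.joined - period.left,
    }


if __name__ == "__main__":
    print(overtime_percentage(12, 240))              # 12 overtime days out of 240 work days
    print(staff_turnover(PeriodStaffing(25, 2, 3)))  # one calendar month
```

Collecting one `PeriodStaffing` record per role and month would support the per-position breakdown the table mentions.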

Release Stage

7

Metric categories

Possible metrics to collect

Category

Description

Cost

Cost metrics measure the amount of money invested in a project. Examples of cost metrics include effort expended and investment in training.

Productivity

Productivity metrics measure the effectiveness of the organization's infrastructure. Examples of productivity metrics include the number of reused infrastructure items and trend analysis of effort expended over given periods of time.

Quality

Quality metrics measure the effectiveness of the quality assurance efforts. Examples of quality assurance metrics include the percentage of deliverables reviewed and defect type recurrence.

Requirements

Requirements metrics measure the effectiveness of the requirements definition and validation efforts. Examples of requirements metrics include requirements instability and number of use cases.

Schedule

Schedule metrics measure the accuracy of your proposed schedule compared with the actual schedule. Examples of schedule metrics include the calendar time expended to perform a task or project phase.

Size

Size metrics measure, as the name suggests, the size of the development effort. Examples of size metrics include the number of methods of a class and the function/feature point count.

Testing

Testing metrics measure the effectiveness of the testing efforts. Examples of testing metrics include the defect severity count and the defect source count.