Class PowerPoint PPT Presentation

1

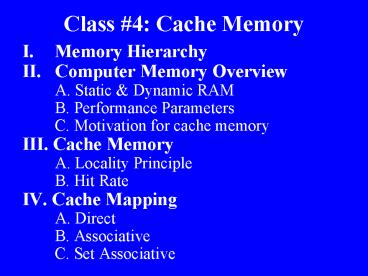

Class 4: Cache Memory

- I. Memory Hierarchy
- II. Computer Memory Overview
  - A. Static & Dynamic RAM
  - B. Performance Parameters
  - C. Motivation for cache memory
- III. Cache Memory
  - A. Locality Principle
  - B. Hit Rate
- IV. Cache Mapping
  - A. Direct
  - B. Associative
  - C. Set Associative

2

Processor vs. Memory

3

The Memory Hierarchy

4

Everything is Cache?

- Small, fast storage used to improve average access time to slow memory
- Exploits spatial and temporal locality
- In computer architecture, almost everything is a cache!
  - First-level cache is a cache of the second-level cache
  - Second-level cache is a cache of memory
  - Memory is a cache of disk

5

Caching Timing

- Time scales are HUGE (10 ms is 10,000,000 cycles)

[Figure: access-time scale of 2 ns, 10 ns, 100 ns, 10 ms, and 100 s, with gaps of 10x and 100,000x marked]

6

Computer Memory

- Computer memory is a collection of cells capable of storing binary information.
- Two states in each cell: 0 and 1
- Two operations: Write and Read

[Figure labels: (write), (read); ferromagnetic core; semiconductors: a cell with 3 terminals (look familiar?)]

7

How Data is Represented

- Storing Electrical Signals
  - Data is represented as electrical signals.
  - Digital signals are used to transmit data to and from devices attached to the system bus.
  - Storage devices must accept electrical signals as input and output.

[Figure: digital electrical signal alternating between 10101010 and 10101011 every second (by voltage)]

8

Static RAM (SRAM)

- Implemented with multiple transistors, usually 6.
- One state represents 1, the other state represents 0.
- Pros
  - Simplicity: holds its data without external refresh, for as long as power is supplied to the circuit.
  - Speed: SRAM is faster than DRAM.
- Cons
  - Cost: SRAM is, byte for byte, two orders of magnitude more expensive than DRAM.
  - Size: SRAMs take up much more space than DRAMs (which is part of why the cost is higher).
- Used for cache memory

9

Dynamic RAM (DRAM)

- Uses a transistor and a capacitor.
- Loses charge quickly.
- Requires a fresh infusion of power thousands of times per second.
- Each refresh operation is called a refresh cycle.
- Pros
  - Size: ¼ the size of SRAM (uses only one transistor).
  - Cost: much less expensive (100x) than SRAM.
- Cons
  - Cell refreshing: constant refreshing (reading) of each cell. This refreshing action is why the memory is called dynamic.
  - Speed: SRAM is 10 times faster.
- Used for main memory (RAM) in computers.

10

Synchronous DRAM (SDRAM)

- SDRAM: not the same as SRAM.
- Faster than asynchronous DRAM
- Read-ahead RAM that uses the same clock pulse as the system bus.
- Read and write operations are broken into a series of simple steps, and each step can be completed in one bus clock cycle.
- Pipelining is possible with SDRAM.
- SDRAM is the most commonly used technology for PC memory today.

11

So you want fast?

- It is possible to build a computer which uses only SRAM. Why not??
  - This would be very fast
  - This would need no cache
    - How can you cache cache?
  - BUT it would cost a very large amount of money.

12

Motivation for Caches

- Large main memories (DRAM) are slow
- Small cache memories (SRAM) are fast
- Make average access time small by servicing most accesses from small, fast memory

By combining a small fast memory with a large slow memory, we can get the speed (almost) of the fast memory for the price of the slow memory. The fast memory is called a cache.

13

Cache Memory Data Transfer

- Small amount of fast memory (SRAM)
- Sits between normal main memory and CPU
- May be located on the CPU chip (i.e. Level 1 cache)
- Goal: high speed, high capacity

[Figure labels: (256 words); 32 bits (4 bytes); cache checked first, then main memory]

14

Cache Memory Principle

- Basic idea: the most heavily used memory words are kept in the cache. When a memory word is required, the CPU first looks in the cache. If the word is not there, it looks to main memory.
- In order to be successful, a large percentage of the words searched must be in the cache. We can ensure this by exploiting the locality principle. When a word is referenced, it and some of its neighbors are brought into the cache, so next time it can be accessed quickly.

15

Cache Memory Locality

- The Principle of Locality states that programs access a small portion of the address space at any instant of time (e.g. 90% of time in 10% of code)
- Temporal Locality (Locality in Time): if an item is referenced, it will tend to be referenced again soon (loops)
  - Keep more recently accessed data items closer to the processor
- Spatial Locality (Locality in Space): if an item is referenced, items whose addresses are close to it will tend to be referenced soon (arrays)
  - Move blocks consisting of contiguous words to the upper levels

16

Cache Examples

- Library: if they know that a class is doing a unit on Russian literature and they know the first book is Dostoevsky's The Brothers Karamazov, they could keep a cache at the front desk to save retrieval time (temporal locality). They could also keep a cache of related books such as Tolstoy's War and Peace (spatial locality).
- Music downloads (temporal and spatial)

17

How the Locality Principle Works with Cache Memory

- Using the locality principle, main memory and cache are divided up into fixed-size blocks. When referring to the cache, these blocks are called cache lines. When a cache miss occurs, the entire cache block is loaded.
- Instructions and data can either be kept in the same cache (unified cache) or in separate caches (split caches). Today split caches are most used.
  - There can also be multiple caches (on chip, off chip but in the same package as the CPU, and farther away).

18

Cache Terminology

- Hit: data appears in some block in the upper level (example: Block X)
  - Hit Rate: the fraction of memory accesses found in the upper level
  - Hit Time: time to access the upper level, which consists of time to determine hit/miss + SRAM access time
- Miss: data needs to be retrieved from a block in the lower level (Block Y)
  - Miss Rate = 1 − (Hit Rate)
  - Miss Penalty: time to replace a block in the upper level from the lower level + time to deliver the block to the processor
- Hit Time << Miss Penalty

19

Cache Memory Access Time

- Let $T_1$ be the cache access time, $T_2$ the main memory access time, and $h$ the hit ratio (fraction of references satisfied out of the cache). Then the mean access time is
  - $T_1 + (1 - h)\,T_2$
- As $h$ approaches 1, all references can be satisfied out of cache and the access time approaches $T_1$. On the other hand, as $h$ approaches 0, the access time approaches $T_1 + T_2$.

20

Example: Hit Rate

- Suppose that the processor has access to 2 levels of memory. Level 1 contains 1000 words and has an access time of 0.01 µs; level 2 contains 100,000 words and has an access time of 0.1 µs. Assume that if a word is in level 1, then the processor accesses it directly. If it is in level 2, then the word is first transferred to level 1 and then accessed by the processor. Assume 95% of memory accesses are found in the cache. What is the average time to access a word?
  - $T_1$ = 0.01 µs, $T_2$ = 0.10 µs
  - Mean Access Time = (0.01 µs) + (1 − 0.95)(0.10 µs)
  - = 0.015 µs
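
The hit-rate arithmetic can be checked with a few lines of Python. This is a minimal sketch, assuming the mean access time is T1 + (1 − h) × T2 (every access pays the cache time, and misses additionally pay the main memory time); the function name `mean_access_time` is made up for illustration.

```python
def mean_access_time(t1: float, t2: float, hit_ratio: float) -> float:
    """Mean access time, assuming a miss costs the cache lookup plus a memory access."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return t1 + (1.0 - hit_ratio) * t2


# Values from the example, in microseconds.
example = mean_access_time(t1=0.01, t2=0.10, hit_ratio=0.95)
print(f"{example:.3f} us")

# Limiting cases: h = 1 gives T1, h = 0 gives T1 + T2.
all_hits = mean_access_time(t1=0.01, t2=0.10, hit_ratio=1.0)
all_misses = mean_access_time(t1=0.01, t2=0.10, hit_ratio=0.0)
```

Trying other hit ratios shows how quickly the average degrades as the hit ratio drops, since each miss adds the full main memory time.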

21

Pentium 4 Block Diagram

22

Typical Values

- Hit time: 1 cycle
- Miss penalty: 10 cycles (access + transfer)
- Miss rate: usually well under 10%

23

Cache Operation - Overview

24

Some Laptop Specs

- Recognize any terms here?

25

Cache Evolution

26

Intel and Cache Memory

27

Cache Mapping

- How is cache mapped to main memory? How does one determine whether there is a cache hit?
- Since the number of memory blocks is larger than the number of cache lines, special mapping policies are needed to map memory blocks into cache lines.
- Mapping Policies
  - Direct Mapping
  - Associative Mapping
  - Set Associative Mapping

28

Main Memory/Cache Structure

- Main memory space is divided into blocks
- Suppose
  - 1) Number of addressable words = $2^n$
    - With a 32-bit address, there are $2^{32}$ addressable words
  - 2) Block size = $K$ words/block
    - Example: 4 words/block
  - 3) Number of memory blocks $B = 2^n / K$
    - $B = 2^{32} / 4 = 2^{30}$ blocks
- Cache space is divided into $m$ cache lines
  - Say $m = 2^{14}$ lines
  - With $K = 4$ words/line, the cache size is 4 words/line × $2^{14}$ lines = $2^{16}$ words = 64K words

[Figure: Main Memory and Cache]
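
The sizing arithmetic on this slide can be reproduced directly. The sketch below uses the slide's numbers (32-bit word addresses, 4 words per block, 2^14 cache lines); the constant names are chosen here for illustration only.

```python
ADDRESS_BITS = 32        # n: width of a word address
WORDS_PER_BLOCK = 4      # K
CACHE_LINES = 2 ** 14    # m

addressable_words = 2 ** ADDRESS_BITS
memory_blocks = addressable_words // WORDS_PER_BLOCK   # B = 2^n / K
cache_words = WORDS_PER_BLOCK * CACHE_LINES            # cache capacity in words

# How many memory blocks compete for each cache line on average.
blocks_per_line = memory_blocks // CACHE_LINES

print(f"memory blocks   : 2^{memory_blocks.bit_length() - 1}")
print(f"cache size      : 2^{cache_words.bit_length() - 1} words")
print(f"blocks per line : 2^{blocks_per_line.bit_length() - 1}")
```

The last figure is why a mapping policy is needed: far more memory blocks exist than cache lines to hold them.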

29

Cache Organization

- (1) How do you know if something is in the cache?
- (2) If it is in the cache, how do you find it?
- The answer to (1) and (2) depends on the type or organization of the cache
- In a direct-mapped cache, each memory address is associated with one possible block within the cache
  - Therefore, we only need to look in a single location in the cache for the data if it exists in the cache. This makes cache access fast!

30

Simplest Cache: Direct Mapped

[Figure: 4-block direct-mapped cache. Cache indices 0 to 3 (binary 00 to 11) alongside main memory addresses 0 to 15 (binary 0000 to 1111). The memory block address is divided into a tag field and an index field.]

- The index determines the block in the cache
- If the number of cache blocks is a power of 2 ($2^n$), then the cache index is just the lower $n$ bits of the memory address
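
The mapping for the 4-block cache and 16 memory addresses can be tabulated with a short script. This is a sketch assuming the index is the low 2 bits of the block address and the tag is the remaining high bits; `split_block_address` is a hypothetical helper name.

```python
NUM_CACHE_BLOCKS = 4                             # must be a power of 2
INDEX_BITS = NUM_CACHE_BLOCKS.bit_length() - 1   # 2 bits for 4 blocks


def split_block_address(block_address: int) -> tuple[int, int]:
    """Return (tag, index) for a memory block address."""
    index = block_address & (NUM_CACHE_BLOCKS - 1)   # lower n bits
    tag = block_address >> INDEX_BITS                # remaining upper bits
    return tag, index


for address in range(16):
    tag, index = split_block_address(address)
    print(f"{address:2d}  {address:04b}  tag={tag:02b}  index={index:02b}")
```

Addresses 1, 5, 9, and 13 all print index 01, so they compete for the same cache block and are told apart only by their tags.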

31

Elements of Direct-Mapped Cache

- If block size > 1 word, the rightmost bits of the index are really the offset of a word (possibly byte number) within the indexed block

32

Direct-mapped Cache Issues

- The direct-mapped cache is simple to design and its access time is fast (Why?)
- Good for L1 (on-chip cache)
- Problem: conflict misses, so lower hit ratio
  - Conflict misses are misses caused by accessing different memory locations that are mapped to the same cache index
  - In a direct-mapped cache, there is no flexibility in where a memory block can be placed in the cache, contributing to conflict misses

33

Direct Mapping Example

With $m$ ($2^{14}$) cache lines and $s$ ($2^{22}$) blocks in main memory

[Figure labels: 0000000000000100 (line 1); 0011001110011100 (line 3303)]

- $2^{14}$ = 16,384 lines
- $2^{22}$ = 4,194,304 blocks

34

Direct Mapping Exercise

- (Stallings 4.8) Consider a machine with a byte-addressable main memory of $2^{16}$ bytes and a block size of 8 bytes. Assume that a direct-mapped cache consisting of 32 lines is used with this machine.
- a) How is a 16-bit memory address divided into tag, line number, and byte number?
  - There are 8 bytes per block, so the byte number needs 3 bits. There are 32 cache lines, so the line number needs 5 bits. This leaves 8 bits for the tag.
- b) Into what line would bytes with each of the following addresses be stored?
  - 0001 0001 0001 1011: the 5 bits for the cache line are 00011 = 3.
  - 1100 0011 0011 0100: the 5 bits for the cache line are 00110 = 6.
  - 1101 0000 0001 1101: the 5 bits for the cache line are 00011 = 3.
  - 1010 1010 1010 1010: the 5 bits for the cache line are 10101 = 21.
- c) Suppose the byte with address 0001 1010 0001 1010 is stored in the cache. What are the addresses of the other bytes stored along with it?
  - The addresses stored in the same block range from 0001 1010 0001 1000 to 0001 1010 0001 1111.
- d) How many total bytes of memory can be stored in the cache?
  - The cache has 32 lines with 8 bytes in each line = 256 bytes.
- e) Why is the tag also stored in the cache?
  - Many memory blocks map to the same cache line. The stored tag identifies which of them currently occupies the line, and so determines whether an access is a cache hit.
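
The answers to this exercise can be checked mechanically. The sketch below assumes the 16-bit address is laid out as 8 tag bits, 5 line bits, and 3 byte bits (high to low); `split_address` and `block_range` are illustrative names, not part of the exercise.

```python
TAG_BITS, LINE_BITS, BYTE_BITS = 8, 5, 3


def split_address(address: int) -> tuple[int, int, int]:
    """Return (tag, line, byte) for a 16-bit byte address."""
    byte = address & ((1 << BYTE_BITS) - 1)
    line = (address >> BYTE_BITS) & ((1 << LINE_BITS) - 1)
    tag = address >> (BYTE_BITS + LINE_BITS)
    return tag, line, byte


def block_range(address: int) -> tuple[int, int]:
    """First and last byte address of the block containing `address`."""
    first = address & ~((1 << BYTE_BITS) - 1)   # clear the byte-number bits
    return first, first + (1 << BYTE_BITS) - 1


for address in (0b0001000100011011, 0b1100001100110100,
                0b1101000000011101, 0b1010101010101010):
    tag, line, byte = split_address(address)
    print(f"{address:016b} -> line {line}")

first, last = block_range(0b0001101000011010)
print(f"block spans {first:016b} to {last:016b}")
```

The first and third addresses land in the same line with different tags, which is exactly the situation the stored tag has to resolve.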

35

Direct Mapping: Pros & Cons

- Advantage
  - Simple
  - Inexpensive
- Disadvantage
  - A fixed cache location for a given block leads to a high miss rate and low performance.
  - For example, if the processor repeatedly accesses 2 memory blocks that map to the same cache line, the miss rate will be very high, even though other cache lines may be idle.
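
The disadvantage can be seen in a tiny simulation. This is a sketch of a direct-mapped cache that tracks only which block sits in each line, assuming the line is chosen as block number modulo the number of lines; the traces are invented for illustration.

```python
def direct_mapped_hits(block_trace, num_lines):
    """Count hits for a sequence of block numbers in a direct-mapped cache."""
    lines = [None] * num_lines          # block currently held by each line
    hits = 0
    for block in block_trace:
        index = block % num_lines       # the only line this block may use
        if lines[index] == block:
            hits += 1
        else:
            lines[index] = block        # miss: replace whatever was there
    return hits


conflicting = [0, 4] * 8   # blocks 0 and 4 both map to line 0 of a 4-line cache
friendly = [0, 1] * 8      # blocks 0 and 1 map to different lines

conflict_hits = direct_mapped_hits(conflicting, num_lines=4)
friendly_hits = direct_mapped_hits(friendly, num_lines=4)
print(conflict_hits, friendly_hits)
```

Both traces touch only two blocks, yet the conflicting trace never hits because each access evicts the block the next access needs, while three of the four lines stay empty.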

36

Associative Mapping

- A main memory block can be mapped into any cache line. The mapping relationship is not fixed.
- The memory address contains two fields: tag and word.
- The tag uniquely identifies the memory block.
- Cache searching needs to check every line's tag.

37

Associative Mapping Example

Address format: Tag (22 bits) | Word (2 bits)

- Cache size is 4 × (word size in bytes) × $m$ cache lines. If a word is 1 byte, then a block is 4 bytes and the cache size is $4m$ bytes.
- The tag has 22 bits ($2^{22}$ = 4M). 2 bits identify the byte (or word) in a memory block or a cache line, so there are $2^2 = 4$ bytes per block (or line).
- Memory size is $2^{22} \times 2^2 = 2^{24}$ = 16 MBytes. Each block has 4 bytes, so there are a total of 4M blocks of memory.
- Compare the address tag field with the tag entry in each cache line to determine a cache hit or miss.
- e.g.
  - Address (24 bits): 0001 0110 0011 0011 1001 1100 (binary) = 16339C (hex)
  - Tag (22 bits): 00 0101 1000 1100 1110 0111 (binary) = 058CE7 (hex)
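
The tag extraction in this example is a 2-bit right shift of the 24-bit address. The sketch below checks that and adds a naive lookup that compares the tag against every line, assuming the cache is modeled as a plain list of stored tags; both function names are hypothetical.

```python
WORD_BITS = 2   # low bits select the byte (or word) within a block


def split_associative(address: int) -> tuple[int, int]:
    """Return (tag, word) for a 24-bit address with a 22-bit tag."""
    return address >> WORD_BITS, address & ((1 << WORD_BITS) - 1)


def find_line(stored_tags, address):
    """Return the index of the line holding the address's block, or None on a miss."""
    tag, _ = split_associative(address)
    for line_number, stored_tag in enumerate(stored_tags):
        if stored_tag == tag:           # hardware does these compares in parallel
            return line_number
    return None


tag, word = split_associative(0x16339C)
print(f"tag = {tag:06X}, word = {word}")

stored_tags = [0x000001, 0x058CE7, 0x3FFFFF]   # made-up cache contents
hit_line = find_line(stored_tags, 0x16339C)
miss_line = find_line(stored_tags, 0x000000)
```

The loop over every stored tag is the software analogue of the per-line comparators that make a fully associative cache expensive to build.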

38

Associative Mapping Example

[Figure labels: 22-bit tag; 24-bit memory address]

Features: high hit rate, but very complex hardware design.

39

Set Associative Mapping

- Combines features of direct mapping and associative mapping
- The cache is divided into a number of sets
- Each set contains a number of lines
- A given memory block can only be mapped into a specific set
- Within the set, the memory block can be mapped into any cache line
- Example: 2-way set associative mapping
  - 2 cache lines in each set
  - A given memory block can be mapped into only one cache set. Inside each set, the memory block can be mapped into any one of the cache lines.

40

Address Structure

- The address contains three fields: tag, set, and word
  - $w$ bits identify the word or byte in a memory block or a cache line
  - There are $2^s$ blocks in main memory
- Cache space is divided into $2^d$ sets
  - Each set contains up to $2^{s-d}$ cache lines
- Searching takes two steps
  - Use the set field to determine which cache set to look in
  - Compare the tag field to check for a cache hit or miss

41

Set Associative Exercise

- (Stallings 4.1) A set associative cache consists of 64 lines, or slots, divided into 4-line sets. Main memory contains 4K blocks of 128 words each. Show the format of main memory addresses.
  - The cache is divided into 16 sets of 4 lines each (16 × 4 = 64). Therefore, 4 bits are needed to identify the set number.
  - There are 128 words per block. That is $2^7$ words, so the word field must be 7 bits long.
  - Main memory consists of 4K = $2^{12}$ blocks. Therefore, the set plus tag lengths must be 12 bits, and therefore the tag length is 8 bits.
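
The field widths in this exercise follow from three base-2 logarithms. Here is a minimal sketch, assuming all sizes are powers of 2; `address_format` is an illustrative helper, not something from the textbook.

```python
def address_format(total_lines, lines_per_set, memory_blocks, words_per_block):
    """Return the tag/set/word field widths in bits (all sizes powers of 2)."""
    num_sets = total_lines // lines_per_set
    set_bits = (num_sets - 1).bit_length()            # log2(number of sets)
    word_bits = (words_per_block - 1).bit_length()    # log2(words per block)
    block_bits = (memory_blocks - 1).bit_length()     # log2(memory blocks)
    return {
        "tag": block_bits - set_bits,   # block number bits not used by the set
        "set": set_bits,
        "word": word_bits,
    }


fields = address_format(total_lines=64, lines_per_set=4,
                        memory_blocks=4 * 1024, words_per_block=128)
print(fields, "total bits:", sum(fields.values()))
```

Changing `lines_per_set` to 1 gives the direct-mapped split (the set field becomes the line field), and setting it to 64 gives the fully associative case with no set bits.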

42

Set Associative Example

43

Replacement Algorithms

- When there is no available cache line in which to place a memory block, a replacement algorithm is used. The replacement algorithm determines which line is to be freed up for the new block.
  - For direct mapping, because each block only maps to one fixed cache line, no replacement algorithm is needed.
  - We will focus on replacement algorithms for fully associative mapping and set associative mapping.

44

Replacement Algorithms: Direct Mapping

- No choice

- Each block only maps to one line

- Replace that line

45

Replacement Algorithms for Associative & Set Associative

- Least Recently Used (LRU)
  - e.g. in 2-way set associative, determine which of the 2 blocks is the least recently used.
- First In First Out (FIFO)
  - Replace the block that has been in the cache longest
- Least Frequently Used (LFU)
  - Replace the block which has had the fewest hits
- Random Replacement
  - Randomly replace one block in the cache (or in the set)
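
As an illustration of LRU, the sketch below models a single cache set in software with `collections.OrderedDict`, keeping tags ordered from least to most recently used. The class name `LRUSet` and the tag values are made up, and real hardware tracks recency with a few status bits per line rather than a dictionary.

```python
from collections import OrderedDict


class LRUSet:
    """One cache set with LRU replacement; stores tags only."""

    def __init__(self, num_lines: int):
        self.num_lines = num_lines
        self.lines = OrderedDict()      # tag -> None, least recently used first

    def access(self, tag) -> bool:
        """Touch a tag and return True on a hit, False on a miss."""
        if tag in self.lines:
            self.lines.move_to_end(tag)         # now the most recently used
            return True
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)      # evict the least recently used
        self.lines[tag] = None
        return False


two_way = LRUSet(num_lines=2)
results = [two_way.access(tag) for tag in ["A", "B", "A", "C", "B"]]
print(results, list(two_way.lines))
```

In this trace the access to C evicts B (A was touched more recently), so the final access to B misses. Swapping `move_to_end` out would turn the same structure into FIFO, since order would then reflect insertion time only.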

46

Write Policy

- Some unpleasant situations
  - If a word in the cache has been changed, then the corresponding word in memory is invalid.
  - If a word in memory is changed, then the corresponding cache word is invalid.
  - If multiple processor-cache modules exist and a word in one cache is changed, then the corresponding word in the other caches will be invalid.
- A write policy is used to update memory or cache, and thus keep the data consistent between memory and cache.
  - Write through
  - Write back

47

Write through

- All writes go to main memory as well as cache
- Multiple CPUs can monitor main memory traffic to keep the local (to CPU) cache up to date
- Pro
  - Cache and memory kept coherent
- Cons
  - Lots of traffic
  - Slows down writes

48

Write back

- Updates are initially made in the cache only
- The update bit for the cache slot is set when an update occurs
- If a block is to be replaced, write it to main memory only if the update bit is set
- Pro
  - More efficient than write through, less traffic
- Cons
  - Caches get out of sync with memory
  - I/O must access main memory through the cache
- This has been done on all Intel processors since the 486.
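
The traffic difference between the two write policies can be sketched by counting main-memory writes. This is a deliberately simplified model: it assumes every written block stays in the cache until a single flush at the end, and the trace of block numbers is invented for illustration.

```python
def memory_writes(write_trace, policy: str) -> int:
    """Count main-memory writes for a sequence of writes to cached blocks."""
    if policy == "write-through":
        # Every write goes to main memory as well as the cache.
        return len(write_trace)
    if policy == "write-back":
        # The update bit is set on the first write to a block; each updated
        # block is written to memory once, when it is finally replaced.
        updated_blocks = set(write_trace)
        return len(updated_blocks)
    raise ValueError(f"unknown policy: {policy}")


trace = [0, 0, 0, 1, 1, 0]   # block numbers being written
through_writes = memory_writes(trace, "write-through")
back_writes = memory_writes(trace, "write-back")
print(through_writes, back_writes)
```

A more realistic model would also evict blocks mid-trace, which increases write-back traffic somewhat but keeps it at or below the write-through count.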