Linear Separability - PowerPoint PPT Presentation

1

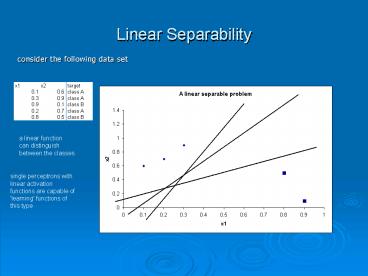

Linear Separability

- Consider the following data set.

A linear function can distinguish between the classes.

Single perceptrons with linear activation functions are capable of 'learning' functions of this type.

2

Linear Separability

- How can a perceptron with a linear activation function learn a linearly separable function?

[Figure: perceptron with inputs x1 and x2, weights w1 and w2, and a single output]

IF net_input < 0 => output = Class A (0) ELSE output = Class B (1)

where, for a trained perceptron, the weights are constant

net_input is a linear function, i.e. $\text{net\_input} = x_1 w_1 + x_2 w_2$
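
The decision rule above can be sketched in Python. This is a minimal illustration rather than code from the slides: the function names `net_input` and `classify` and the example weights are assumptions chosen for the example.

```python
def net_input(x1, x2, w1, w2):
    """Weighted sum of the two inputs (a linear function of x1 and x2)."""
    return x1 * w1 + x2 * w2


def classify(x1, x2, w1, w2):
    """Step rule: Class A (0) if net_input < 0, otherwise Class B (1)."""
    return 0 if net_input(x1, x2, w1, w2) < 0 else 1


# Illustrative weights (assumed for this example only).
w1, w2 = 1.0, -1.0
print(classify(0.2, 0.9, w1, w2))  # net_input is negative, so Class A (0)
print(classify(0.9, 0.2, w1, w2))  # net_input is positive, so Class B (1)
```

Because the weights are constant once training has finished, the class of any point depends only on which side of the line net_input = 0 it falls.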

3

Linear Separability

- How can a perceptron with a linear activation function learn a linearly separable function?

[Figure: perceptron with inputs x1 and x2, weights w1 and w2, and a single output]

e.g. assume initial values are $w_1 = 0.1$ and $w_2 = -0.4$

then $\text{net\_input} = x_1 \cdot 0.1 + x_2 \cdot (-0.4)$

the linear function $\text{net\_input} = 0$ is $x_1 \cdot 0.1 + x_2 \cdot (-0.4) = 0$

$-0.4 x_2 = -0.1 x_1 \Rightarrow x_2 = 0.1 x_1 / 0.4$
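
As a quick check of the rearrangement, the sketch below computes the boundary line from the weights and confirms that points on it give a net input of zero. The helper name `boundary_x2` is hypothetical and it assumes w2 is non-zero.

```python
import math

w1, w2 = 0.1, -0.4  # initial weights from the slide


def boundary_x2(x1, w1, w2):
    """x2 on the line net_input = 0, i.e. x2 = -(w1 / w2) * x1 (requires w2 != 0)."""
    return -(w1 / w2) * x1


slope = -(w1 / w2)  # equals 0.1 / 0.4

# Any point on the line should give a net input of (numerically) zero.
for x1 in (0.0, 0.4, 1.0):
    x2 = boundary_x2(x1, w1, w2)
    net = x1 * w1 + x2 * w2
    assert math.isclose(net, 0.0, abs_tol=1e-12)

print(slope)
```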

4

Linear Separability

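- How can a perceptron with a linear activation function learn a linearly separable function?

[Figure: perceptron with inputs x1 and x2, weights w1 = 0.1 and w2 = -0.4, and a single output; line labels: $-0.4 x_2 = -0.1 x_1 \Rightarrow x_2 = 0.1 x_1 / 0.4$ and $x_2 = 0.18 x_1 / 0.35$]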

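present (0.8, 0.5) to the perceptron

$\text{net\_input} = 0.8 \cdot 0.1 + 0.5 \cdot (-0.4) = 0.08 - 0.20 = -0.12 \Rightarrow$ Class A

error = 1 (the output is Class A (0), so the target must be Class B (1))

adjust weights using a learning rate of 0.1

$w_1^{\text{new}} = w_1^{\text{old}} + (0.1 \cdot 0.8 \cdot 1) = 0.1 + 0.08 = 0.18$

$w_2^{\text{new}} = w_2^{\text{old}} + (0.1 \cdot 0.5 \cdot 1) = -0.4 + 0.05 = -0.35$

function becomes $x_2 = 0.18 x_1 / 0.35$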
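
The single training step above can be reproduced with a short Python sketch. The variable names are illustrative, and `target = 1` is an assumption inferred from the stated error of 1 (output 0, target 1).

```python
import math

learning_rate = 0.1
w1, w2 = 0.1, -0.4   # weights before the update
x1, x2 = 0.8, 0.5    # pattern presented to the perceptron
target = 1           # assumed: the pattern belongs to Class B (1)

net = x1 * w1 + x2 * w2        # 0.08 - 0.20, approximately -0.12
output = 0 if net < 0 else 1   # net is negative, so Class A (0)
error = target - output        # 1 - 0 = 1

# Update rule: new weight = old weight + learning_rate * input * error
w1 = w1 + learning_rate * x1 * error   # approximately 0.18
w2 = w2 + learning_rate * x2 * error   # approximately -0.35

slope = -(w1 / w2)   # new boundary: x2 = (0.18 / 0.35) * x1
print(round(net, 2), output, error, round(w1, 2), round(w2, 2), round(slope, 3))
```

The update raises the slope of the boundary from 0.1/0.4 to 0.18/0.35, moving the line towards the misclassified point.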

5

Linear Separability

- How can a perceptron with a linear activation function learn a linearly separable function?

[Figure: perceptron with inputs x1 and x2, weights w1 = 0.1 and w2 = -0.4, and a single output; line label: $x_2 = 0.1 x_1 / 0.4 + 0.5$]

an offset could be used in the step function

IF net_input < offset => output = Class A (0) ELSE output = Class B (1)

using an offset of -0.2 the function becomes $x_1 w_1 + x_2 w_2 = -0.2$

or .. $x_2 = (-0.2 / w_2) - (x_1 w_1 / w_2)$

this offset value will later become a trainable bias
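
A minimal Python sketch of the offset version of the rule, using the weights w1 = 0.1 and w2 = -0.4 from the earlier slides. The function names are hypothetical, and `boundary_x2` assumes w2 is non-zero.

```python
def classify_with_offset(x1, x2, w1, w2, offset):
    """Class A (0) if net_input < offset, otherwise Class B (1)."""
    net = x1 * w1 + x2 * w2
    return 0 if net < offset else 1


def boundary_x2(x1, w1, w2, offset):
    """x2 on the line x1*w1 + x2*w2 = offset (requires w2 != 0)."""
    return offset / w2 - (x1 * w1) / w2


w1, w2, offset = 0.1, -0.4, -0.2
print(boundary_x2(0.0, w1, w2, offset))                # intercept: -0.2 / -0.4
print(classify_with_offset(0.8, 0.5, w1, w2, offset))  # net_input is about -0.12
```

With these values the offset shifts the line up by 0.5 without changing its slope, so the point (0.8, 0.5) now falls on the Class B side even though the weights are unchanged.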

6

Linear Separability

- However, consider the following data set.

A single linear function cannot distinguish between the two classes.

Instead, two linear functions or a single non-linear function are required.
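
The data set on this slide is only available as an image, so the sketch below uses the XOR pattern as an assumed stand-in for a data set that is not linearly separable. It searches a coarse grid of weights and offsets (the grid itself is an arbitrary choice) and reports the fewest misclassifications any single linear function achieves.

```python
# XOR-style data set (an assumed stand-in for the data set pictured on the slide).
patterns = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def count_errors(w1, w2, offset):
    """Number of patterns misclassified by: 0 if net_input < offset else 1."""
    errors = 0
    for (x1, x2), target in patterns:
        output = 0 if x1 * w1 + x2 * w2 < offset else 1
        if output != target:
            errors += 1
    return errors


# Brute-force search over a coarse grid: -2.0, -1.75, ..., 2.0
grid = [i / 4 for i in range(-8, 9)]
best = min(count_errors(w1, w2, b) for w1 in grid for w2 in grid for b in grid)
print(best)  # never 0: at least one pattern is always misclassified
```

A grid search is not a proof, but it agrees with the known result that no single line separates the XOR pattern.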

7

Linear Separability

- What about this one?

This forms the basis of tutorial 9 (p. 42 of the course notes).

Multi-layer networks using non-linear activation functions can solve non-linearly separable problems.
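
As an illustration of the last point, here is a small two-layer network with step activations that classifies the XOR pattern. The data set on the slide is not available, so XOR is an assumed example; the weights and offsets are chosen by hand rather than learned, and the function names are hypothetical.

```python
def step(net, offset):
    """Step activation: 0 if net < offset, otherwise 1."""
    return 0 if net < offset else 1


def two_layer(x1, x2):
    """Two hidden step units (two linear boundaries) combined by an output unit."""
    h1 = step(x1 + x2, 0.5)    # on if at least one input is 1 (OR)
    h2 = step(x1 + x2, 1.5)    # on only if both inputs are 1 (AND)
    return step(h1 - h2, 0.5)  # on if h1 is on and h2 is off (XOR)


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print((x1, x2), two_layer(x1, x2))
```

Each hidden unit draws one line, and the output unit combines the two half-planes, which matches the "two linear functions" option mentioned on the previous slide.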