Language Descriptions and Compiler Architecture - PowerPoint PPT Presentation


1

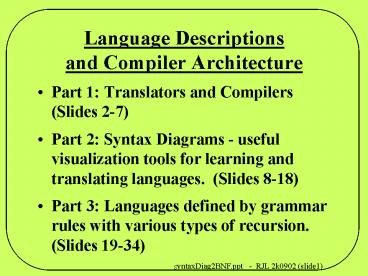

Language Descriptions and Compiler Architecture

- Part 1: Translators and Compilers (Slides 2-7)
- Part 2: Syntax Diagrams, useful visualization tools for learning and translating languages (Slides 8-18)
- Part 3: Languages defined by grammar rules with various types of recursion (Slides 19-34)

2

Part 1 Compiler Architecture

- Modern compilers have four major parts:
  - Preprocessors, which split the input sentence in the source language into tokens.
  - The parser, which derives (reverse-engineers) the derivation tree structure, and a dictionary of names with their properties, from these tokens.
  - A code generator, which produces strings in the desired output or target language.
  - A main program, or controller, which invokes the other modules and manages I/O files.
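To make the four parts concrete, here is a minimal sketch in Python, assuming a toy source language of integer sums and products and taking postfix (reverse Polish) text as the target language. The names `tokenize`, `Parser`, `generate` and `compile_source` are hypothetical, and the symbol table is left out because the toy language has no names.

```python
import re

TOKEN_RE = re.compile(r"\d+|[+*()]")


def tokenize(text):
    """Preprocessor: split the source sentence into tokens."""
    tokens = TOKEN_RE.findall(text)
    # Every non-blank character must belong to some token.
    if "".join(tokens) != "".join(text.split()):
        raise SyntaxError("unexpected character in input")
    return tokens


class Parser:
    """Parser: rebuilds the derivation tree as nested tuples."""

    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def expect(self, tok):
        if self.peek() != tok:
            raise SyntaxError(f"expected {tok!r}, found {self.peek()!r}")
        self.pos += 1

    def expr(self):                      # expr -> term { + term }
        node = self.term()
        while self.peek() == "+":
            self.pos += 1
            node = ("+", node, self.term())
        return node

    def term(self):                      # term -> factor { * factor }
        node = self.factor()
        while self.peek() == "*":
            self.pos += 1
            node = ("*", node, self.factor())
        return node

    def factor(self):                    # factor -> number | ( expr )
        tok = self.peek()
        if tok is not None and tok.isdigit():
            self.pos += 1
            return ("num", int(tok))
        self.expect("(")
        node = self.expr()
        self.expect(")")
        return node


def generate(node):
    """Code generator: emit the tree in postfix order."""
    if node[0] == "num":
        return str(node[1])
    op, left, right = node
    return f"{generate(left)} {generate(right)} {op}"


def compile_source(text):
    """Controller: run the modules in order (file I/O left out)."""
    parser = Parser(tokenize(text))
    tree = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError(f"unexpected token {parser.peek()!r}")
    return generate(tree)


print(compile_source("2 * (3 + 4)"))  # 2 3 4 + *
```

A real controller would also read source files and write the target file; keeping each part as a separate function mirrors the module boundaries listed above.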

3

Preprocessors

- There are two kinds of preprocessors:
  - A lexical analyzer, which finds the meaningful atomic parts, or tokens, and passes them to the parser.
  - A macro-processor, which performs string substitution by defining and/or expanding some tokens before parsing can proceed.
- Lexical analysis is needed before the macro preprocessor and again before the parser.
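The order "lex, expand macros, lex again" can be sketched as follows. This is a hypothetical, much-simplified macro processor (object-like `#define NAME body` lines only, no arguments, no re-expansion of macro bodies), not the C preprocessor.

```python
import re

TOKEN_RE = re.compile(r"\s*([A-Za-z_]\w*|\d+|\S)")
DEFINE_RE = re.compile(r"\s*#define\s+([A-Za-z_]\w*)\s*(.*)$")


def lex(text):
    """Lexical analyzer: identifiers, integers and single-character symbols."""
    return TOKEN_RE.findall(text)


def macro_expand(source):
    """Macro-processor: record #define lines, then substitute macro names.

    Lexing each line first means only whole identifier tokens are replaced;
    a plain text replace of SIZE would also corrupt SIZES.
    """
    macros = {}
    out = []
    for line in source.splitlines():
        m = DEFINE_RE.match(line)
        if m:
            macros[m.group(1)] = m.group(2)
            continue
        out.append(" ".join(macros.get(tok, tok) for tok in lex(line)))
    return "\n".join(out)


source = "#define SIZE (4 * 8)\ny = SIZE + SIZES"
expanded = macro_expand(source)
print(expanded)       # y = (4 * 8) + SIZES
# The substituted body is still raw text, so it is lexed again for the parser.
print(lex(expanded))  # ['y', '=', '(', '4', '*', '8', ')', '+', 'SIZES']
```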

4

Parsers

- Modern parsers act as front ends to code generators and optimizers, which require several intermediate products:
  - A memory-resident database that represents the program's parse, derivation, or abstract syntax tree.
  - A symbol table, or dictionary of the names used in the program together with their semantic properties (datatype, etc.).
  - Error messages for the programmer.
- The tree and the symbol table are accessed during code generation.
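A minimal sketch of these intermediate products, assuming a Python `dataclass` tree and a dictionary-backed symbol table; the class names, fields and error-message format are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One node of an abstract syntax tree kept in memory."""
    kind: str                                   # e.g. "assign", "var", "num"
    value: object = None                        # a name or literal, if any
    children: list = field(default_factory=list)


@dataclass
class SymbolInfo:
    datatype: str                               # semantic property
    line: int                                   # where the name was declared


class SymbolTable:
    """Dictionary of program names and their semantic properties."""

    def __init__(self):
        self.entries = {}
        self.errors = []                        # messages for the programmer

    def declare(self, name, datatype, line):
        if name in self.entries:
            first = self.entries[name].line
            self.errors.append(f"line {line}: '{name}' already declared on line {first}")
        else:
            self.entries[name] = SymbolInfo(datatype, line)

    def lookup(self, name, line):
        info = self.entries.get(name)
        if info is None:
            self.errors.append(f"line {line}: '{name}' is not declared")
        return info


table = SymbolTable()
table.declare("count", "int", line=1)
table.declare("count", "float", line=2)         # duplicate declaration
tree = Node("assign", children=[Node("var", "total"), Node("var", "count")])
for child in tree.children:                     # as a code generator might
    if child.kind == "var":
        table.lookup(child.value, line=3)
print(table.errors)
```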

5

Code Generators

- Modern code generators have two or more stages:
  - Intermediate language (IL) code generation
    - Traverse the parse tree and generate an initial sequence of instructions that could execute (or interpret) each basic block of code correctly, if the IL is executable (or interpretable).
  - Code optimization
    - Rearrange the execution sequence to expedite necessary operations and avoid wasted ones.
    - Map IL instructions into the most efficient ones for the target language, OS and platform.
    - Different types of optimization may be done in separate IL code transformation steps.
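One IL transformation step can be sketched as a peephole pass over a hypothetical stack-machine IL. The instruction names (`PUSH`, `LOAD`, `ADD`, `MUL`) are assumptions, and constant folding is only one example of an optimization step; the small interpreter, standing in for a software VM, checks that the optimized code computes the same value.

```python
import operator

BINOPS = {"ADD": operator.add, "MUL": operator.mul}


def fold_constants(code):
    """Peephole pass: replace PUSH a; PUSH b; <op> by PUSH (a op b)."""
    out = []
    for instr in code:
        out.append(instr)
        # The emitted code acts like a stack, so folds can cascade.
        if (len(out) >= 3 and out[-1][0] in BINOPS
                and out[-2][0] == "PUSH" and out[-3][0] == "PUSH"):
            op, b, a = out.pop(), out.pop(), out.pop()
            out.append(("PUSH", BINOPS[op[0]](a[1], b[1])))
    return out


def run(code, env):
    """Interpret the IL on a software stack machine."""
    stack = []
    for instr in code:
        if instr[0] == "PUSH":
            stack.append(instr[1])
        elif instr[0] == "LOAD":
            stack.append(env[instr[1]])
        else:
            b, a = stack.pop(), stack.pop()
            stack.append(BINOPS[instr[0]](a, b))
    return stack.pop()


# IL for x * (2 + 3), as a naive tree traversal would emit it
il = [("LOAD", "x"), ("PUSH", 2), ("PUSH", 3), ("ADD",), ("MUL",)]
optimized = fold_constants(il)
print(optimized)                                    # [('LOAD', 'x'), ('PUSH', 5), ('MUL',)]
print(run(il, {"x": 4}), run(optimized, {"x": 4}))  # 20 20
```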

6

Target Languages

- There can be many target language types:
  - Object code to be linked.
  - Assembly language to be assembled and linked.
  - IL output for a virtual machine (VM).
    - The VM may be emulated by software on a real hardware platform (e.g. byte code IL for the JVM).
  - A different source language for which a compiler already exists (e.g. the cfront compiler translated C++ programs into C).
- Writing a compiler in (a subset of) its own source language, so that it can compile itself, is called bootstrapping the compiler.

7

What makes a good translator or compiler?

- The secret of language translation is to know what to do for all legal derivations and to explain what is wrong with all illegal ones.
- Good compilers report all syntax violations and warn about as many semantic problems as possible.
- Exercise: Try recompiling your last program with all warnings turned on.
  - The GNU (gcc or g++) option for this is -Wall. Despite its name, -Wall does not enable every warning; -Wextra adds more.
- In practice, some violations are justifiable, but the reasons for them should be documented.

8

Part 2 Syntax Diagrams

- Syntax diagrams are a tool to visualize language structure and to implement translators.
- Syntax diagrams are directed graphs with two kinds of nodes:
  - An oval node, labeled by a terminal symbol in T, represents (scanning of) that terminal symbol (token).
  - A rectangular node, labeled by a nonterminal symbol or grammar variable in NT, represents (recognition or translation of) that nonterminal symbol.
- The nodes are connected by directed edges.
  - Paths along the edges spell out the alternate sequences of symbols that are syntactically valid sentences in the language.
- Paths through the syntax diagrams determine the flow of control through language parsers or translators.

9

BNF Definitions for Language Syntax

- A BNF grammar for a language L is a finite set of syntax rules that describes how to compose, or derive, any string in L.
- A BNF grammar cannot describe the meaning of sentences in a language; other, more complex rules are needed to specify meaning, or semantics.
- These BNF rules are used to analyze (parse) a string in L.
  - A program is a legal string, or sentence, in a programming language.
  - A parser analyzes this string by progressively decomposing it into a tree of constituent parts.
  - A successful parse is a constructive proof that the string is a member of L.

10

BNF Rules For a Grammar

- BNF rules require additional variables, called nonterminal symbols, from another finite set called NT that depends on L.
  - A unique symbol S in NT is called the Start Symbol.
- Let $V = NT \cup T$.
  - NT is disjoint from T: $NT \cap T = \emptyset$.
- BNF rules are formed using 4 other meta-symbols not in V:
  - Parentheses ( and ) to represent nested tree structures.
  - The connective | (or) to represent alternate choices.
  - The assignment → for deriving any RHS from the LHS.

11

Recursion vs. Iteration in Syntax Diagrams

- A node with a cyclic or looping path around it implies iteration (lists) or recursion (nesting), depending on its context.
- A diagram whose name also appears (directly or indirectly) inside one of its rectangles implies recursive BNF rules.
- The two properties above may be combined.
- Recursion can be of three types:
  - Embedded or essential: E → ( E )
  - Left-recursive: E → E Id
  - Right-recursive: E → Id E

12

BNF for $A^+$ instead of $A^*$

- In regular expressions, the superscript + is a unary operator shorthand: $A^+ = AA^* = A^*A$.
- If the variable Alist represents $A^*$ as in the previous example, then $A^+$ is represented by either
  - APlus → A Alist,  Alist → A Alist | <empty>
  - or
  - APlus → Alist A,  Alist → Alist A | <empty>
- The first pair of rules is right-recursive,
  - so it has a (deterministic) syntax diagram representation.

[Figure: syntax diagram for S with nodes B, A, Alist and D]

13

BNF with right recursion vs. Syntax Diagrams with cycles

- Regular expressions use the star (*) operator to represent unconstrained iteration: $S = BA^*D$.
- BNF grammars represent $A^*$ by a new variable Alist, which is recursively defined either as
  - S → B Alist D
  - Alist → A Alist | <empty>   <-- (right-recursive)
- or as
  - S → B Alist D
  - Alist → Alist A | <empty>   <-- (left-recursive)
- Syntax diagrams show iteration as cyclic paths.

[Figure: syntax diagram for S with nodes B, A and D, where A lies on a cycle]
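Both BNF forms and the cyclic diagram accept the same strings. A minimal sketch, assuming single-character tokens 'B', 'A' and 'D' and hypothetical function names: the first recognizer follows the right-recursive rule Alist → A Alist | <empty> with a recursive call, the second follows the cycle in the syntax diagram with a loop.

```python
def accepts_recursive(s):
    """S -> B Alist D,  Alist -> A Alist | <empty>."""
    def alist(pos):
        # Take the A-alternative only if the next symbol is A; else <empty>.
        if pos < len(s) and s[pos] == "A":
            return alist(pos + 1)
        return pos

    if not s.startswith("B"):
        return False
    return s[alist(1):] == "D"


def accepts_iterative(s):
    """S = B A* D, walking the cycle around the A node."""
    if not s.startswith("B"):
        return False
    pos = 1
    while pos < len(s) and s[pos] == "A":
        pos += 1
    return s[pos:] == "D"


for w in ["BD", "BAAAD", "BA", "BDA"]:
    print(w, accepts_recursive(w), accepts_iterative(w))
```

The recursive version uses one stack frame per A (Python's default recursion limit is about 1000), while the loop runs in constant space; this is the practical sense in which right recursion amounts to iteration.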

14

Nested Grammar Rules

- $A^+$ is an A-based grammar pattern that is nested within the right-hand side of S's grammar rules:
  - S → B A Alist D   <-- at least one A
  - Alist → Alist A | <empty>   <-- left-recursive
- Syntax diagram:
  - A Alist represents $AA^* = A^+$, because A is in the forward path and cannot be bypassed with <empty>.
  - $A^+$ is defined by its own syntax diagram (next slide).

[Figure: syntax diagram for S with nodes B, A, Alist and D, ending at a Pass Exit]

15

Alternate Diagrams for $A^+$

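- Both of these syntax diagrams define $A^+$.

[Figure: two syntax diagrams for $A^+$; diagram 1 has one A call-site (state S1), diagram 2 has A call-sites at states S1 and S2]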

- The second diagram above permits different processing to be done on the first occurrence of A, because it separates the first A from $A^*$.
  - In diagram 2, each A-parser call-site (state S1 or S2) can customize its own response.
  - In diagram 1, implementers must maintain a separate first-time flag to do this.
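The difference between the two diagrams shows up as soon as a translator must treat the first A specially. A minimal sketch, assuming each A is already a token string and that the (hypothetical) translation is a comma-separated list, so only later items get a leading separator; both functions translate $A^+$ and reject an empty list.

```python
def translate_with_flag(items):
    """Diagram 1: one A call-site inside the loop, plus a first-time flag."""
    out = []
    first = True
    for item in items:
        if not first:
            out.append(", ")
        out.append(item)
        first = False
    if first:                       # loop never ran: no A at all
        raise SyntaxError("expected at least one A")
    return "".join(out)


def translate_two_sites(items):
    """Diagram 2: the first A (state S1) is handled before the loop (state S2)."""
    rest = iter(items)
    head = next(rest, None)
    if head is None:
        raise SyntaxError("expected at least one A")
    out = [head]                    # call-site S1: first occurrence only
    for item in rest:               # call-site S2: every later occurrence
        out.append(", " + item)
    return "".join(out)


print(translate_two_sites(["x", "y", "z"]))  # x, y, z
```

The second version needs no flag: which call-site is running already tells it whether the current A is the first one.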

16

Nested Syntax Diagrams

- The first syntax diagram below represents the grammar rule S → B A Alist D.
- In diagram 2, the dashed rectangle encloses a sub-diagram for $A^*$, which is the in-line expansion of the variable Alist.

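[Figure: diagram 1 shows S as B, A, Alist, D, then Pass; diagram 2 replaces Alist by a dashed box, labeled "Alist or $A^*$", that encloses a loop through A]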

17

What about Fail Exits?

[Figure: syntax diagram for S with nodes B, A, Alist and D, ending at a Pass Exit]

- This syntax diagram only shows a Pass Exit.
  - It ignores the possibility of failure.
  - Syntax errors need specific diagnostics.
- Syntax diagrams and their state transition tables also need Fail Exits that report errors.
- Specifying these checks in additional state diagram nodes further enhances the power of syntax diagrams as a visual design tool.
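A state transition table for this diagram can carry its Fail Exits alongside its transitions. A minimal sketch: the state names, the `PASS` marker and the diagnostic texts are hypothetical, and the table encodes S → B A Alist D with single-character tokens.

```python
# Transition table: state -> {expected token: next state}.
TABLE = {
    "start":   {"B": "after_B"},
    "after_B": {"A": "in_list"},
    "in_list": {"A": "in_list", "D": "PASS"},   # cycle around A, then D
}
# One Fail Exit diagnostic per state.
FAIL_MESSAGES = {
    "start":   "S must begin with B",
    "after_B": "at least one A must follow B",
    "in_list": "expected another A or the closing D",
}


def run_diagram(tokens):
    """Generic table-driven recognizer; returns (passed, message)."""
    state = "start"
    for pos, tok in enumerate(tokens):
        next_state = TABLE[state].get(tok)
        if next_state is None:                        # Fail Exit
            return False, f"position {pos}: {FAIL_MESSAGES[state]}, found {tok!r}"
        if next_state == "PASS":                      # Pass Exit
            if pos + 1 != len(tokens):
                return False, f"position {pos + 1}: unexpected input after D"
            return True, "ok"
        state = next_state
    return False, f"end of input: {FAIL_MESSAGES[state]}"


for text in ["BAAD", "BD", "BAA", "BADX"]:
    print(text, run_diagram(text))
```

Because the table is plain data, the same `run_diagram` loop can serve any diagram; only `TABLE` and `FAIL_MESSAGES` change.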

18

What about Semantic Errors?

- Semantic errors are errors that cannot be detected by the syntactic rules specified by the grammar. Some of these errors can be caught by context-sensitive checks in the compiler.
- Syntax diagrams can also be expanded by adding non-grammar-based state nodes where semantic error checks are performed (and their Pass/Fail status remembered).
  - For example, lexical analyzers can check size limits and word lengths, and parsers can check that variables are declared and that their types are appropriate for their use.
- Adding extra state nodes to specify these checks further enhances the visualization capability of syntax diagrams.

19

Languages as Infinite Subsets of Strings

- A language L is a (usually infinite) set of strings (sequences) of constant symbols, or characters, from a finite set or alphabet T.
  - T is called L's vocabulary of terminal symbols.
- The language $T^*$ is called the universal, or all-inclusive, language over vocabulary T.
  - $T^*$ is the set of all strings of symbols from T.
  - $T^*$ includes strings of any length, including 0.
- Subsets of $T^*$ can be sorted by string length:
  - $T^k$ includes all strings of length exactly k.
  - $T^0 = \{\varepsilon\}$ is the set containing the one string of length 0, the null string $\varepsilon$.
- Using $\cup$ to denote set union, $T^*$ is the infinite "polynomial" $T^* = T^0 \cup T^1 \cup T^2 \cup \cdots$
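For a small alphabet the layers $T^k$ can be enumerated directly, which also shows that $|T^k| = |T|^k$. A minimal sketch, assuming terminal symbols are single characters so that joining them yields unambiguous strings; since $T^*$ is infinite, the hypothetical `universal_up_to` builds only a finite prefix of the union.

```python
from itertools import product


def strings_of_length(T, k):
    """T^k: all strings of exactly k symbols drawn from the alphabet T."""
    return {"".join(p) for p in product(sorted(T), repeat=k)}


def universal_up_to(T, n):
    """The finite prefix T^0 | T^1 | ... | T^n of the infinite union T*."""
    result = set()
    for k in range(n + 1):
        result |= strings_of_length(T, k)
    return result


T = {"a", "b"}
print(strings_of_length(T, 0))           # {''}: T^0 holds only the null string
print(sorted(strings_of_length(T, 2)))   # ['aa', 'ab', 'ba', 'bb']
print(len(universal_up_to(T, 3)))        # 1 + 2 + 4 + 8 = 15
```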

20

Languages as Semigroups

- $T^*$ is an example of a (non-commutative) semigroup, because it is closed under string concatenation:
  - If $s_1$ and $s_2$ are members of $T^*$, so are $s_1 s_2$ and $s_2 s_1$.
- Languages (subsets of $T^*$) are closed under the direct product of sets:
  - If $L_1$ and $L_2$ are subsets of $T^*$, so are $L_1 L_2$ and $L_2 L_1$, where $L_1 L_2 = \{ s_1 s_2 \mid s_1 \in L_1, s_2 \in L_2 \}$.
- The identity element for concatenation of strings is the null string $\varepsilon$; for direct products of languages it is the singleton set $T^0 = \{\varepsilon\}$.
  - If $L \subseteq T^*$, then $T^0 L = L$ and $L T^0 = L$.
- Caution: the identity for set union ($L_1 \cup L_2$) is the empty set $\emptyset$, which is not the same as $T^0$!
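The two identities can be checked on small finite languages. A minimal sketch, with languages represented as Python sets of strings (an assumption that only works for finite languages) and hypothetical names `concat`, `T0` and `EMPTY`.

```python
def concat(L1, L2):
    """Direct product L1 L2 = { s1 s2 | s1 in L1, s2 in L2 }."""
    return {s1 + s2 for s1 in L1 for s2 in L2}


T0 = {""}          # identity for concatenation of languages
EMPTY = set()      # identity for union, but it annihilates concatenation

L1 = {"a", "ab"}
L2 = {"b", ""}
print(sorted(concat(L1, L2)))   # ['a', 'ab', 'abb']
print(sorted(concat(L2, L1)))   # ['a', 'ab', 'ba', 'bab']: not commutative
print(concat(T0, L1) == L1, concat(EMPTY, L1))   # True set()
```

The last line shows why the caution matters: concatenating with $T^0$ leaves a language unchanged, while concatenating with $\emptyset$ wipes it out.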

21

BNF Rules are also parsed

- Grammar rules are just strings.
  - Their vocabulary includes $V = T \cup NT$ plus the meta-symbols (, ), |, →.
  - T is a set of terminal symbols (chars or tokens).
  - NT is the set of variables or nonterminals.
- BNF grammar rule syntax: LHS → RHS
  - LHS means the left-hand side of a rule.
  - RHS means the right-hand side of a rule.
  - The substitution operator → says that the RHS of any rule may replace its LHS during any step of the derivation of a string in L from S in NT.
  - A well-formed RHS may include the choice operator | and balanced parentheses for sub-expression grouping.
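Because rules are strings, a grammar tool begins by parsing them. A minimal sketch, assuming whitespace-separated symbols, the ASCII stand-ins `->` and `|` for the meta-symbols, and `<empty>` for an empty RHS; grouping parentheses are not handled, so here `(` and `)` are treated as terminals. The hypothetical `parse_rule` also enforces the context-free restriction (a single nonterminal on the LHS).

```python
def parse_rule(rule, nonterminals):
    """Split 'LHS -> RHS1 | RHS2 ...' into (lhs, [alternative, ...])."""
    if rule.count("->") != 1:
        raise ValueError(f"expected exactly one '->' in {rule!r}")
    lhs_text, rhs_text = rule.split("->")
    lhs = lhs_text.split()
    # Context-free restriction: the LHS is one nonterminal.
    if len(lhs) != 1 or lhs[0] not in nonterminals:
        raise ValueError(f"not context-free: LHS {lhs_text.strip()!r}")
    alternatives = []
    for alt in rhs_text.split("|"):
        symbols = alt.split()
        alternatives.append([] if symbols == ["<empty>"] else symbols)
    return lhs[0], alternatives


print(parse_rule("Alist -> A Alist | <empty>", {"S", "Alist"}))
# ('Alist', [['A', 'Alist'], []])
try:
    parse_rule("B A -> A B", {"A", "B"})
except ValueError as err:
    print(err)  # not context-free: LHS 'B A'
```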

22

Context-Free Grammar (CFG) Restrictions

- LHS for context-free grammars (CFGs):
  - We will only be interested in context-free grammars (CFGs).
  - In the rules of a CFG, the LHS is restricted to be a single nonterminal or variable symbol in NT.
  - Each symbol in NT can derive a set of strings over V.
- Rules for combining symbols are called productions.
  - Sequences of productions produce exactly the strings of $T^*$ that belong to L, and no others.

23

Deriving a Sentence

- Derivation starts with the start symbol S in NT, which gradually evolves into a string d in $V^*$.
  - Each derivation step replaces any variable in d by the RHS of any of its production rules.
  - This step is repeated for another variable in d until all variables have been eliminated.
  - It terminates when the derived string d is in $T^*$ (contains no more elements from NT).
  - The result d is in $T^*$ and is a legal sentence in the language L.
- Parsing is the reverse process: analyze the sentence to find its derivation history in reverse (productions applied in reverse are called reductions).
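A derivation can be replayed mechanically once the rule chosen at each step is known. A minimal sketch, assuming the grammar is a dictionary from each variable to its list of alternatives and that the leftmost variable is always replaced; `GRAMMAR` and `leftmost_derivation` are hypothetical names.

```python
GRAMMAR = {"A": [["b", "A", "e"], ["b", "e"]]}      # A -> b A e | b e


def leftmost_derivation(grammar, start, choices):
    """Apply one production per step to the leftmost variable.

    choices[i] is the index of the alternative used at step i. Returns every
    sentential form, from the start symbol to the finished sentence.
    """
    form = [start]
    history = ["".join(form)]
    for choice in choices:
        j = next((k for k, sym in enumerate(form) if sym in grammar), None)
        if j is None:
            raise ValueError("no variable left to replace")
        form[j:j + 1] = grammar[form[j]][choice]
        history.append("".join(form))
    if any(sym in grammar for sym in form):
        raise ValueError("derivation stopped before all variables were eliminated")
    return history


steps = leftmost_derivation(GRAMMAR, "A", [0, 0, 1])
print(" => ".join(steps))             # A => bAe => bbAee => bbbeee
```

Read backwards, the same list is the parser's view: bbbeee is reduced to bbAee, then to bAe, then to A.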

24

Extended BNF Rules

- Grammar vocabulary is still $V = NT \cup T$.
- Merge alternate rules for the same LHS by introducing OR-combinations of alternative RHSs, separated by |.
- Create expression trees containing sub-expressions surrounded by parentheses.
- Allow brackets [ ] for optional sub-expressions, and an ellipsis (...) or braces { } for repeatable sub-expressions.

25

Recursive BNF Grammars

- Recursion may be direct (visible in one rule):
  - A → b A | c
  - This rule generates a regular language, the set of strings $b^*c$.
- Recursion may be indirect (visible after variable substitution):
  - A → ( C | ( ),  C → A )
  - The rules above generate the same language as these (directly recursive) rules: A → ( A ) | ( )
  - To see this, replace C in the A-rules by the RHS of all C-rules.
- Both grammars generate this set of nested parenthesis pairs, where b denotes ( and e denotes ): $\{ b^k e^k \mid k \ge 1 \}$
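A recursive-descent recognizer for A → ( A ) | ( ) makes the need for unbounded memory concrete: each nested ( costs one stack frame, which is exactly the counter a finite automaton lacks. A minimal sketch with hypothetical function names; in practice Python's recursion limit caps k.

```python
def parse_A(s, pos=0):
    """A -> ( A ) | ( ): return the index just past one A, or raise."""
    if pos >= len(s) or s[pos] != "(":
        raise SyntaxError(f"expected '(' at {pos}")
    pos += 1
    if pos < len(s) and s[pos] == "(":       # choose A -> ( A )
        pos = parse_A(s, pos)                # nested call = one more frame
    if pos >= len(s) or s[pos] != ")":       # both alternatives end with ')'
        raise SyntaxError(f"expected ')' at {pos}")
    return pos + 1


def in_language(s):
    """True exactly when s is (^k )^k for some k >= 1."""
    try:
        return parse_A(s) == len(s)
    except SyntaxError:
        return False


print([w for w in ["()", "(())", "(()", "()()"] if in_language(w)])  # ['()', '(())']
```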

26

Recursive BNF Example

- Example: the BNF grammar
  - A → b C | b e,  C → A e
  - represents the same language as A → b A e | b e.
  - Eliminate C by substituting the RHS of its C-rules.
- Both grammars generate the set of nested parentheses $\{ b^k e^k \mid k \ge 1 \}$.
- This language is context-free but not regular:
  - indirect embedded recursion requires balanced b..e pairs.
  - Counting matched occurrences requires unbounded memory.
- Left-most or right-most recursion is equivalent to iteration, so such grammar rules are regular.
- We can replace left- or right-recursive BNF rules by extended (EBNF) rules. Extended rules can denote regular expressions (lists of repeated items with separator symbols).

27

Context-free Grammars

- The grammar A → b A e | b e, which generates the set of strings $\{ b^k e^k \mid k \ge 1 \}$, is the simplest example of a language that is context-free but not regular.
  - An unbounded k is assumed to avoid impractical compromises: if k had a fixed upper bound, the language would be finite and therefore regular.
  - Embedded recursion requires balanced b..e pairs.
  - Counting matched occurrences requires unbounded memory.
- Left-most and right-most recursion are equivalent to iteration, so such grammar rules are regular.
- We can replace left- or right-recursive BNF rules by extended (EBNF) rules. Extended rules can denote regular expressions (lists of repeated items with separator symbols).

28

Examples of Recursion

- Embedded (essential) recursion:
  - E → ( E ) | Id | Number   (direct)
  - Stmt → simpleStmt | Block
  - Block → { StmtList }   (indirect)
  - StmtList → Stmt | StmtList Stmt
- Left recursion vs. right recursion (mutually exclusive):
  - StmtList (above)   (left-recursive)
  - Blist → B Blist | <empty>   (right-recursive)
- Embedded recursion is not a problem for either stack-based parsing method.

29

Recursive-Descent Parsing

- Recursive-descent, or top-down, parsers make a (possibly nested) procedure call for each occurrence of a variable on a syntax diagram, including those representing embedded recursion.
- Alternate right-hand sides become OR-connected, or branching, paths on syntax diagrams.
- Right-recursive rules can be replaced by iterative loops.
- Syntax diagrams can guide the manual design of recursive-descent parsers.
  - See Wirth Ch. 5 (99f204 handout).
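Following the syntax diagram for E → ( E ) | Id | Number, each branch becomes an `if` arm and the embedded recursion becomes a nested call. A minimal sketch: the list rule Elist → E Elist | <empty> is a hypothetical addition that shows a right-recursive rule turned into a `while` loop, and the tuple tree format and pre-split token list are assumptions.

```python
class ExprParser:
    """Recursive-descent parser for
         E     -> ( E ) | Id | Number
         Elist -> E Elist | <empty>
    """

    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def starts_E(self):
        """True when the next token can begin an E: '(', a number or a name."""
        tok = self.peek()
        return tok is not None and (tok == "(" or tok.isdigit() or tok.isidentifier())

    def parse_E(self):
        tok = self.peek()
        if tok == "(":                              # E -> ( E ): embedded recursion
            self.pos += 1
            inner = self.parse_E()                  # nested procedure call
            if self.peek() != ")":
                raise SyntaxError(f"expected ')' at token {self.pos}")
            self.pos += 1
            return ("paren", inner)
        if tok is not None and tok.isdigit():       # E -> Number
            self.pos += 1
            return ("number", int(tok))
        if tok is not None and tok.isidentifier():  # E -> Id
            self.pos += 1
            return ("id", tok)
        raise SyntaxError(f"expected '(', Id or Number at token {self.pos}")

    def parse_Elist(self):
        """Right recursion Elist -> E Elist | <empty> replaced by a loop."""
        items = []
        while self.starts_E():                      # another E, else <empty>
            items.append(self.parse_E())
        return items


print(ExprParser(["(", "(", "x", ")", ")", "42"]).parse_Elist())
# [('paren', ('paren', ('id', 'x'))), ('number', 42)]
```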

30

Automating Recursive-Descent

- Yacc-based LR parsers (Yacc builds LALR(1) parsers, a practical subclass of LR(1)) are generated automatically from EBNF grammar rules.
- Recursive-descent parsers can also be generated automatically:
  - Syntax diagrams can be translated into state transition tables (manually or automatically).
  - State transition tables can drive a generic table-driven interpreter.
  - Alternatively, these tables can be translated directly into parser or translator source code.
- In either case, the translator actions must be hand-tailored by the programmer to the specified source language and target output.

31

What about left-recursion?

- Left recursion would cause stack overflow in a recursive-descent parser, because the procedure for a left-recursive variable calls itself again before consuming any input.
- Left recursion would require look-ahead beyond the end of the string generated by the recursive variable in order to terminate the recursion.
- Fortunately, left- and right-recursive grammars are interchangeable, at least for parsing (syntax error detection).
- Left-recursive rules are prohibited for recursive-descent parsing, but they can be converted into right-recursive ones (by translating $A^*A$ into $AA^*$ and $A^*$ into (A $A^*$ | <empty>), plus other conversions).
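A common general form of this conversion is the textbook removal of immediate left recursion, which rewrites A → A α | β as A → β A' and A' → α A' | <empty>. The sketch below implements that standard transformation (not necessarily the exact conversions intended on this slide), with alternatives as symbol lists and `[]` for <empty>; the function name and the primed-name convention are hypothetical.

```python
def eliminate_left_recursion(lhs, alternatives):
    """Rewrite  A -> A a1 | ... | b1 | ...  as
       A -> b1 A' | ... ;  A' -> a1 A' | ... | <empty>.

    Only immediate (single-rule) left recursion is handled.
    """
    recursive = [alt[1:] for alt in alternatives if alt and alt[0] == lhs]
    others = [alt for alt in alternatives if not alt or alt[0] != lhs]
    if not recursive:
        return {lhs: alternatives}          # nothing to do
    tail = lhs + "'"
    return {
        lhs: [beta + [tail] for beta in others],
        tail: [alpha + [tail] for alpha in recursive] + [[]],
    }


print(eliminate_left_recursion("E", [["E", "+", "T"], ["T"]]))
# {'E': [['T', "E'"]], "E'": [['+', 'T', "E'"], []]}
```

The new rule for E' is right-recursive, so a recursive-descent parser can choose its alternative by looking at the next token alone.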

32

Parsers vs. Translators

- Unfortunately, left and right recursion are not equivalent when implementing translators.
- The situation is more involved because:
  - Translators do more than return syntax errors.
  - Different processing may be needed after the first, or before the last, occurrence of the repeated nonterminal.
- Recursive-descent parsers for right-recursive grammar rules have limited look-into capability: they can recognize first instances, but do not know whether the next occurrence is the last one.
- Left recursion requires look-beyond-current-instance capability to recognize the final occurrence (because something different follows it) and to terminate the recursion (to avoid an infinite loop and stack overflow).

33

Left-recursive Grammars

- Grammars with left-recursive rules are normally handled by LR(k) parsers (a Left-to-right scan that builds a Rightmost derivation in reverse); a grammar that such a parser can handle is called an LR(k) grammar. (The number k is the number of look-ahead symbols needed beyond the end of the symbol being parsed.)
- LR(k) parsing requires look-beyond-current-instance capability in order to recognize the final occurrence (assuming something different follows it) and so disambiguate the alternate right-hand sides of left-recursive rules.
  - When parsing Alist → Alist A | A, which alternate RHS should be tried first?
  - Both alternatives begin with A, so the correct one depends on what follows A (assumed not confusable with A).
  - We must parse A in order to discover what follows it.
- The corresponding right-recursive rule, Alist → A Alist | <empty>, can choose between A and something else before parsing A.

34

Left-recursive Parsers

- Left-recursive grammars are handled easily by more sophisticated LR(k) parsers. These can be generated automatically from EBNF specifications by Yacc.
  - Yacc generates a table-driven, stack-based parser.
- The flip side for Yacc is that right-recursive grammar rules are expensive to process (they require heavy use of the stack).

Yacc stands for Yet Another Compiler-Compiler. See our Lex & Yacc text, p. 197.
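The stack cost can be seen in a hand-written shift-reduce sketch (not Yacc output). For the left-recursive Alist → Alist A | A the parser can reduce after every A, so its stack never holds more than two symbols; for the right-recursive Alist → A Alist | A it must shift every A before the first reduction. The function names and the peak-depth measure are hypothetical.

```python
def max_stack_left(tokens):
    """Shift-reduce for Alist -> Alist A | A; returns the peak stack depth."""
    stack, peak = [], 0
    for tok in tokens:
        if tok != "A":
            raise SyntaxError(f"unexpected token {tok!r}")
        stack.append(tok)                       # shift
        peak = max(peak, len(stack))
        if stack == ["A"]:
            stack[:] = ["Alist"]                # reduce Alist -> A
        elif stack[-2:] == ["Alist", "A"]:
            stack[-2:] = ["Alist"]              # reduce Alist -> Alist A
    if stack != ["Alist"]:
        raise SyntaxError("expected at least one A")
    return peak


def max_stack_right(tokens):
    """Shift-reduce for Alist -> A Alist | A; returns the peak stack depth."""
    stack = []
    for tok in tokens:
        if tok != "A":
            raise SyntaxError(f"unexpected token {tok!r}")
        stack.append(tok)                       # shift: no reduction possible yet
    if not stack:
        raise SyntaxError("expected at least one A")
    peak = len(stack)
    stack[-1] = "Alist"                         # at end of input: Alist -> A
    while len(stack) > 1:
        stack[-2:] = ["Alist"]                  # reduce Alist -> A Alist
    return peak


print(max_stack_left(["A"] * 5), max_stack_right(["A"] * 5))  # 2 5
```

The left-recursive stack stays bounded however long the list is, while the right-recursive stack grows with the number of items.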