TeraGrid Components - PowerPoint PPT Presentation


1

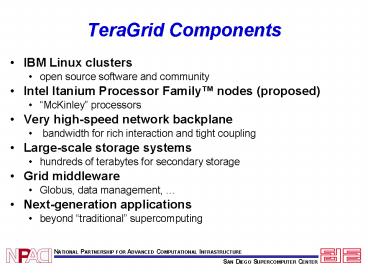

TeraGrid Components

- IBM Linux clusters
  - open source software and community
- Intel Itanium Processor Family nodes (proposed)
  - McKinley processors
- Very high-speed network backplane
  - bandwidth for rich interaction and tight coupling
- Large-scale storage systems
  - hundreds of terabytes for secondary storage
- Grid middleware
  - Globus, data management, …
- Next-generation applications
  - beyond traditional supercomputing

2

TeraGrid: 13.6 TF, 6.8 TB memory, 79 TB internal disk, 576 TB network disk

Diagram labels (sites, systems, and interconnects):
- ANL: 1 TF, 0.25 TB memory, 25 TB disk
- Caltech: 0.5 TF, 0.4 TB memory, 86 TB disk
- NCSA: 62 TF, 4 TB memory, 240 TB disk
- SDSC: 4.1 TF, 2 TB memory, 225 TB SAN
- Systems: 574p IA-32 Chiba City; 256p HP X-Class; 128p Origin; 128p HP V2500; 92p IA-32; HR Display and VR Facilities; 1024p IA-32 and 320p IA-64; 1176p IBM SP Blue Horizon (1.7 TFLOPs); 15xxp Origin; Sun F15K
- Storage: HPSS; HPSS 350 TB; UniTree
- Switching: Chicago and LA DTF core switch/routers (Cisco 65xx Catalyst switch with 256 Gb/s crossbar; Juniper M160); Extreme BlackDiamond; Myrinet
- Links: OC-48, OC-12, OC-12 ATM, OC-3, GbE
- External networks: NTON, Calren, ESnet, HSCC, MREN/Abilene, Starlight, vBNS, Abilene

3

SDSC node configured to be the best site in the world for data-oriented computing

Diagram labels (TeraGrid Backbone, 40 Gbps):
- Argonne: 1 TF, 0.25 TB memory, 25 TB disk
- Caltech: 0.5 TF, 0.4 TB memory, 86 TB disk
- NCSA: 8 TF, 4 TB memory, 240 TB disk
- SDSC: 4.1 TF, 2 TB memory, 25 TB internal disk, 225 TB network disk; Blue Horizon IBM SP (1.7 TFLOPs); Sun F15K
- Other labels: HPSS 350 TB; Myrinet Clos spine
- External networks: vBNS, Abilene, Calren, ESnet
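As a quick consistency check, the per-site figures can be summed against the headline totals. The Python sketch below is illustrative and not part of the original material. It takes NCSA as 8 TF (the backbone-diagram value) and assumes that the per-site disk figures, in TB, are what the 576 TB network-disk total aggregates.

```python
# Per-site figures as labeled on the backbone diagram (NCSA taken as 8 TF).
# The "disk_tb" values are assumed to be what the 576 TB headline aggregates.
SITES = {
    "ANL": {"tf": 1.0, "disk_tb": 25},
    "Caltech": {"tf": 0.5, "disk_tb": 86},
    "NCSA": {"tf": 8.0, "disk_tb": 240},
    "SDSC": {"tf": 4.1, "disk_tb": 225},
}


def totals(sites: dict) -> tuple:
    """Sum peak teraflops (rounded to one decimal) and disk terabytes across sites."""
    tf = sum(site["tf"] for site in sites.values())
    disk = sum(site["disk_tb"] for site in sites.values())
    return round(tf, 1), disk


if __name__ == "__main__":
    tf, disk = totals(SITES)
    print(f"{tf} TF, {disk} TB")
```

Run as a script, this prints `13.6 TF, 576 TB`, matching the headline compute and network-disk totals.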

4

TeraGrid Wide Area Network

Diagram labels:
- Locations: ANL, Urbana, Chicago, Los Angeles, San Diego
- Networks: Abilene; DTF Backbone (proposed)
- Link types:
  - OC-48 (2.5 Gb/s, Abilene)
  - Multiple 10 GbE (Qwest)
  - Multiple 10 GbE (I-WIRE dark fiber)
- Solid lines in place and/or available by October 2001
- Dashed I-WIRE lines planned for Summer 2002
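To put these link rates in perspective, ideal transfer time is data volume divided by line rate. The minimal Python sketch below assumes decimal units and full line rate with no protocol overhead, so real throughput would be lower. The 40 Gb/s value is the TeraGrid backbone figure from the backbone diagram; the dictionary keys are illustrative labels.

```python
def transfer_seconds(terabytes: float, gbps: float) -> float:
    """Ideal transfer time at full line rate, ignoring protocol overhead.

    Decimal units: 1 TB = 8e12 bits, 1 Gb/s = 1e9 bits per second.
    """
    bits = terabytes * 8e12
    return bits / (gbps * 1e9)


# Illustrative labels; rates taken from the slides.
LINKS_GBPS = {
    "OC-48 (Abilene)": 2.5,
    "TeraGrid backbone": 40.0,
}

if __name__ == "__main__":
    for name, rate in LINKS_GBPS.items():
        secs = transfer_seconds(1.0, rate)
        print(f"{name}: {secs:.0f} s per TB ({secs / 60:.1f} min)")
```

Under these assumptions, moving 1 TB takes 3,200 s over OC-48 versus 200 s over the 40 Gb/s backbone, a 16x difference.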

5

SDSC local data architecture: a new approach for supercomputing, with dual connectivity to all systems

Diagram labels:
- Networks: LAN (multiple GbE, TCP/IP); SAN (2 Gb/s, SCSI); WAN (30 Gb/s); SCSI/IP or FC/IP
- Compute: Blue Horizon; Linux Cluster, 4 TF; Sun F15K
- Disk: Local Disk (50 TB); FC Disk Cache (150 TB); FC GPFS Disk (50 TB)
- Archive: HPSS; Silos and Tape, 6 PB, 52 tape drives
- Throughput: 30 MB/s per drive; 200 MB/s per controller
- Services: Database Engine; Data Miner; Vis Engine

SDSC design leveraged at other TG sites
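The archive figures support a rough streaming estimate. The sketch below assumes that the 30 MB/s per-drive rate applies to each of the 52 tape drives and that all drives stream concurrently; both are assumptions, since the diagram does not tie the rate to a specific device. Decimal units are used (1 PB = 10^9 MB).

```python
TAPE_DRIVES = 52          # from the slide
MB_PER_S_PER_DRIVE = 30   # assumption: the 30 MB/s label is the tape-drive rate
ARCHIVE_PB = 6            # silo capacity from the slide


def aggregate_mb_per_s(drives: int, per_drive: float) -> float:
    """Peak rate if every drive streams at its rated speed at the same time."""
    return drives * per_drive


def days_to_stream(petabytes: float, mb_per_s: float) -> float:
    """Days to read or write the given capacity at a sustained rate (1 PB = 1e9 MB)."""
    seconds = petabytes * 1e9 / mb_per_s
    return seconds / 86_400


if __name__ == "__main__":
    rate = aggregate_mb_per_s(TAPE_DRIVES, MB_PER_S_PER_DRIVE)
    print(f"aggregate: {rate:.0f} MB/s")
    print(f"full archive pass: {days_to_stream(ARCHIVE_PB, rate):.1f} days")
```

With these inputs the aggregate is 1,560 MB/s, and a single full pass over 6 PB takes roughly 44.5 days, which illustrates why a large disk cache sits in front of tape.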

6

Draft TeraGrid Data Architecture

Diagram labels:
- Sites: Caltech (0.5 TF), Argonne (1 TF), NCSA (6 TF), SDSC (4 TF), joined by a 40 Gb/s WAN; each site has its own LAN and SAN
- Storage: Cache 150 TB; GPFS 50 TB; GPFS 200 TB; Local 50 TB (shown twice); Tape Backups
- Services: Cache Manager; Data/Vis Engines
- Every node can access every disk
- Potential for Grid-wide backups

TG data architecture is a work in progress

7

TeraGrid Data Management (Sun F15K)

- 72 processors, 288 GB shared memory, 16 Fibre Channel SAN HBAs (>200 TB disk), 10 GbE
- Many GB/s I/O capability
- Data Cop for SDSC DTF effort
- Owns shared datasets and manages shared file systems
- Serves data to non-backbone sites
- Receives incoming data
- Production DTF database server
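The "many GB/s" claim can be sanity-checked from the HBA count. This sketch assumes each of the 16 HBAs runs 2 Gb/s Fibre Channel, matching the 2 Gb/s SAN rate on the SDSC architecture slide, and uses the commonly quoted payload rate of about 200 MB/s per direction for 2 Gb/s FC (its 8b/10b line coding keeps usable bandwidth below the raw bit rate).

```python
HBA_COUNT = 16            # Fibre Channel SAN HBAs listed for the Sun F15K
LINK_GBPS = 2.0           # assumption: 2 Gb/s FC per HBA
PAYLOAD_MB_PER_S = 200    # assumption: usual payload rate for 2 Gb/s FC, per direction


def raw_gbps(hbas: int, link_gbps: float) -> float:
    """Sum of raw link rates across all HBAs, one direction."""
    return hbas * link_gbps


def payload_gb_per_s(hbas: int, payload_mb_per_s: float) -> float:
    """Aggregate payload bandwidth in GB/s (1 GB = 1000 MB), one direction."""
    return hbas * payload_mb_per_s / 1000


if __name__ == "__main__":
    print(f"raw: {raw_gbps(HBA_COUNT, LINK_GBPS):.0f} Gb/s")
    print(f"payload: {payload_gb_per_s(HBA_COUNT, PAYLOAD_MB_PER_S):.1f} GB/s")
```

That gives 32 Gb/s raw, or about 3.2 GB/s of payload per direction across all HBAs, consistent with a multi-GB/s I/O claim.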

8

Brocade SilkWorm 12000 FC Switch

- 2 × 64 ports (2 Gb/s)
- Heart of the Storage Area Network; first 3 shipped to SDSC
- Hope to use for direct data sharing across the WAN; investigating with NCSA and Brocade
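A small model of the switch's port budget follows. The `FCSwitch` class and its field names are hypothetical; "2 X 64 ports" is read as two groups of 64 ports at 2 Gb/s each, and aggregate bandwidth is taken as the simple sum of per-port line rates in one direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FCSwitch:
    """Hypothetical port-level model of a Fibre Channel switch."""
    groups: int           # "2 X 64 ports" read as 2 groups of 64
    ports_per_group: int
    port_gbps: float      # line rate per port

    @property
    def ports(self) -> int:
        return self.groups * self.ports_per_group

    @property
    def aggregate_gbps(self) -> float:
        # Sum of per-port line rates, one direction, ignoring encoding overhead.
        return self.ports * self.port_gbps

    def free_ports(self, attached: int) -> int:
        """Ports left after attaching `attached` single-port devices."""
        if not 0 <= attached <= self.ports:
            raise ValueError(f"attached must be between 0 and {self.ports}")
        return self.ports - attached


if __name__ == "__main__":
    silkworm = FCSwitch(groups=2, ports_per_group=64, port_gbps=2.0)
    print(silkworm.ports, silkworm.aggregate_gbps)
    # Hypothetical cabling: each of the F15K's 16 SAN HBAs takes one port.
    print(silkworm.free_ports(16))
```

Under this reading the switch offers 128 ports and 256 Gb/s of aggregate port bandwidth; attaching the F15K's 16 SAN HBAs (a hypothetical cabling, not stated on the slide) would leave 112 ports free.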

9

TeraGrid Organization

- NSF MRE Projects; NSF ACIR; NSF Review Panels
- Internet-2: McRobbie
- External Advisory Committee: Robert Conn, UCSD; Richard Herman, UIUC; Dan Meiron, CIT (Chair); Robert Zimmer, UC/ANL
- User Advisory Committee: Alliance UAC (Sugar, Chair); NPACI UAC (Kupperman, Chair)
- Project Director: Rick Stevens (UC/ANL)
- Chief Architect: Dan Reed (NCSA)
- Executive Committee: Fran Berman, SDSC (Chair); Ian Foster, UC/ANL; Paul Messina, CIT; Dan Reed, NCSA; Rick Stevens, UC/ANL; Charlie Catlett, ANL
- Technical Executive Director: Charlie Catlett (ANL)
- Technical Working Group; Site Coordination Committee; Technical Coordination Committee
- Sites: ANL (Evard); CIT (Bartelt); NCSA (Pennington); SDSC (Andrews)
- Working groups:
  - Clusters: Pennington (NCSA)
  - Grid Software: Kesselman (ISI), Butler (NCSA)
  - Operations: Sherwin (SDSC)
  - Networking: Winkler (ANL)
  - Data: Baru (SDSC)
  - User Services: Wilkins-Diehr (SDSC), Towns (NCSA)
  - Applications: Williams (Caltech)
  - Visualization: Papka (ANL)
  - Performance Evaluation: Brunett (Caltech)
- Also shown: PSC; NCAR

10

So How is it Going?

- Near-Term Milestones
  - 2Q2002: Initial Network, Node Selection
    - Final stages of fiber transport deployment
    - Expect first lambda next month
    - Evaluation team focused on node evaluation
    - Initial testing of early test systems; finalizing test suites for node comparisons
- Planning and Working Groups
  - Site leads finalizing Blueprint to bridge gaps between the vision of the proposal and implementation details
  - Goal: provide principles by which WGs can make implementation and design trade-off decisions
  - Weekly site-leads teleconference; bi-weekly EC teleconference
  - Monthly reports from WGs; monthly teleconference of site leads and WG leads

11

Milestones

12

We will be ready!

- TeraGrid team working successfully together to prototype and deploy HW and SW infrastructure
- SDSC activities include
  - TeraGrid lite systems integration testbed
  - Apps lite application testbed
  - Purchased and installed Sun F15K Data Cop
  - Purchased and deployed SAN switches and initial disks
  - Purchased and deployed Itanium IA-64 and McKinley nodes
  - Prototyping account management system
  - Developing apps (Encyclopedia of Life, Enzo, Montage, etc.)
  - Developing TeraGrid data framework and services