Tomasulo's Approach


1

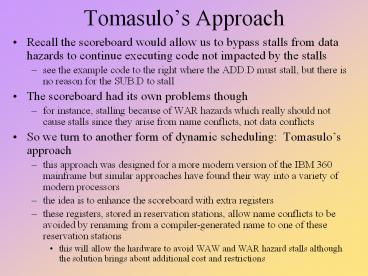

Tomasulo's Approach

- Recall that the scoreboard allows us to bypass stalls from data hazards and continue executing code not affected by those stalls
  - see the example code to the right, where the ADD.D must stall but there is no reason for the SUB.D to stall
- The scoreboard had its own problems, though
  - for instance, it stalls on WAR hazards, which really should not cause stalls since they arise from name conflicts, not data conflicts
- So we turn to another form of dynamic scheduling: Tomasulo's approach
  - this approach was designed for a more modern version of the IBM 360 mainframe, but similar approaches have found their way into a variety of modern processors
  - the idea is to enhance the scoreboard with extra registers
  - these registers, stored in reservation stations, allow name conflicts to be avoided by renaming from a compiler-generated name to one of these reservation stations
  - this allows the hardware to avoid WAW and WAR hazard stalls, although the solution brings additional cost and restrictions

2

Register Renaming

- The concept is to rename registers
  - to avoid WAR and WAW hazards
  - this approach can also support overlapped execution of multiple iterations of a loop
- Reservation stations are buffers for operands of instructions waiting to be issued
  - there are reservation stations for each operand of each functional unit
  - used as buffers for each operand, grabbing the operand as it becomes available from memory, the register file or a functional unit
  - a pending instruction designates the reservation station that will hold its operand(s)
  - as instructions are issued, register specifiers for pending operands are renamed to the names of the reservation stations holding them
  - when successive writes appear to a register, only the last one is actually accepted and written; this eliminates WAW hazards
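The renaming idea on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the hardware mechanism itself: the function name `rename`, the tag names `RS1`, `RS2`, ... (standing in for reservation station names) and the four-instruction sequence are all made up for the example. Each destination gets a fresh tag and each source reads the most recent tag, so WAR and WAW name conflicts disappear while true (RAW) dependences remain visible as tags.

```python
def rename(instructions):
    """Rename destinations to fresh tags; sources read the latest tag.

    `instructions` is a list of (op, dest, src1, src2) tuples using
    architectural register names. Tags RS1, RS2, ... are hypothetical
    stand-ins for reservation station names.
    """
    latest = {}      # architectural register -> tag of its newest pending writer
    renamed = []
    for n, (op, dest, src1, src2) in enumerate(instructions, start=1):
        # Sources: use the producing tag if one is pending, else the register.
        s1 = latest.get(src1, src1)
        s2 = latest.get(src2, src2)
        tag = f"RS{n}"
        latest[dest] = tag   # later readers of `dest` now wait on this tag
        renamed.append((op, tag, s1, s2))
    return renamed


code = [
    ("DIV.D", "F0", "F2", "F4"),
    ("ADD.D", "F6", "F0", "F8"),    # RAW on F0
    ("SUB.D", "F8", "F10", "F14"),  # WAR on F8 with the ADD.D
    ("MUL.D", "F6", "F10", "F8"),   # WAW on F6 with the ADD.D, RAW on F8
]
renamed = rename(code)
for ins in renamed:
    print(ins)
```

After renaming, the SUB.D writes `RS3` rather than F8, so it no longer conflicts with the ADD.D that reads F8, and the two writers of F6 become `RS2` and `RS4`.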

3

Differences from the Scoreboard

- Issue logic combines reservation station selection and register renaming
  - in this way, all WAW and WAR hazards are eliminated without causing stalls, unlike the scoreboard
  - WAW hazards are eliminated because only the most recent writer of a register is allowed to update it
  - WAR hazards are eliminated because of register renaming
- Hazard detection and execution control are distributed among the reservation stations
  - rather than centralized as in the scoreboard or the ID stage of the MIPS pipeline
- Results from one functional unit are passed directly to the reservation stations waiting at other functional units
  - so RAW hazards do not have to wait for register writes
  - this difference requires a mechanism to connect functional units and reservation stations together: the Common Data Bus (CDB)
- This approach is more expensive because
  - there are more reservation stations than real registers, to accommodate all WAW and WAR hazards
  - there are also multiple connections to the CDB

4

Reservation Station Architecture

- Reservation stations hold
  - issued instructions that have not started executing
  - operand values for the instruction, or the source that will provide them
  - control information
- Load/store buffers hold
  - data or addresses coming from or going to memory
- Register file
  - will be used as before, but forwarding via the CDB is also used
- The CDB connects everything together

5

3 Stage Approach

- Instructions go through the hardware as in the scoreboard or the 5-stage MIPS pipeline
  - but here we separate out memory operations, leaving a 3-step execution sequence
- Issue
  - get instruction from instruction queue
  - if floating-point operation and there is an available functional unit, then issue the instruction and send operands to the reservation station if available (in registers)
  - if load/store instruction and a buffer is available, then issue the load/store to the buffer
  - while issuing, detect WAR hazards and rename registers as needed
  - if the reservation station for the needed unit is busy, then stall until a station is freed
- Execute (for floating point instructions)
  - if one or more operands are not yet available, monitor the CDB for them; this is RAW hazard detection
  - when an operand becomes available, place it in the reservation station
  - when both operands are in the reservation station, execute the operation
- Write result
  - when the functional unit has produced the result, place it on the CDB; from there, registers and reservation stations will grab it and store it

6

Reservation Station Structure

- The reservation station stores 6 items
  - the operation to be performed (Op)
  - the reservation stations that will produce the operands, or 0 if the operand is already available (Qj, Qk)
    - this will include the register if it is coming from the register file, or the load/store buffer if it is coming from memory
  - the values of the operands (Vj, Vk)
  - whether the reservation station and its functional unit are occupied (Busy)
- When an instruction is issued, the reservation station information is supplied, and the register file and load/store buffer are modified to store Qi (the reservation station that will produce the result to be moved to the register or buffer)
  - if this field is blank, then no current instruction will produce the value for this register or buffer
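The fields listed on this slide map naturally onto a small record type. The sketch below is a toy model, assuming names that are not in the slides (`ReservationStation`, `issue`, `broadcast`, and the station names `Mult1` and `Add1`); it uses `None` where the slide uses 0 or a blank field, and it ignores timing entirely. It shows how issue fills either a Q tag or a V value for each operand, and how a CDB broadcast turns waiting tags into values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReservationStation:
    name: str
    busy: bool = False          # Busy
    op: Optional[str] = None    # Op
    vj: Optional[float] = None  # Vj: value of the first operand, once known
    vk: Optional[float] = None  # Vk: value of the second operand, once known
    qj: Optional[str] = None    # Qj: station producing operand j (None = ready)
    qk: Optional[str] = None    # Qk: station producing operand k (None = ready)

    def ready(self):
        return self.busy and self.qj is None and self.qk is None


def issue(rs, op, dest, src1, src2, regs, reg_status):
    """Fill a free station and record it as the producer (Qi) of `dest`."""
    rs.busy, rs.op = True, op
    # For each source: copy the tag if a station will produce it, else the value.
    rs.qj = reg_status.get(src1)
    rs.vj = None if rs.qj else regs[src1]
    rs.qk = reg_status.get(src2)
    rs.vk = None if rs.qk else regs[src2]
    reg_status[dest] = rs.name


def broadcast(tag, value, stations, regs, reg_status):
    """Model a CDB write: waiting stations and registers grab the value."""
    for rs in stations:
        if rs.qj == tag:
            rs.vj, rs.qj = value, None
        if rs.qk == tag:
            rs.vk, rs.qk = value, None
        if rs.name == tag:
            rs.busy = False                 # the producing station is freed
    for reg, producer in list(reg_status.items()):
        if producer == tag:                 # still the latest writer of reg
            regs[reg] = value
            del reg_status[reg]


regs = {"F0": 0.0, "F2": 2.0, "F4": 4.0, "F6": 6.0}
reg_status = {}                 # register -> Qi (absent = no pending writer)
mult1, add1 = ReservationStation("Mult1"), ReservationStation("Add1")

issue(mult1, "MUL.D", "F0", "F2", "F4", regs, reg_status)
issue(add1, "ADD.D", "F6", "F0", "F2", regs, reg_status)   # waits on Mult1
print(add1.ready())                                         # False
broadcast("Mult1", regs["F2"] * regs["F4"], [mult1, add1], regs, reg_status)
print(add1.ready(), add1.vj)                                # True 8.0
```

Because the register file is updated only when the broadcasting station is still recorded in `reg_status`, a later instruction that overwrote that entry would keep an older result out of the register.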

7

How It Works

- Instruction fetch unit fetches next instruction
  - unless instruction queue becomes full
- Instruction at front of queue is issued if
  - the needed reservation station is available
- Once at a reservation station
  - Qj/Qk ← reservation station that will produce the value (as determined by comparing the register number to the register status information), OR
  - Vj/Vk ← value from register for source operand (if the register value is correct)
  - this step takes care of RAW hazards
  - once both source operands are in Vj/Vk (forwarded from the CDB), the instruction can execute
  - update register status to record that this reservation station will produce a result for the destination register
  - a later instruction that writes to the same register will override this reservation station in the register status, and so WAW hazards are avoided
- Once computed, if the value is needed at another reservation station, forward it via the CDB, and forward it to the register file only if this reservation station is still listed in the register status list, so that an out-of-date result does not overwrite a newer one
  - the control logic is shown in figure 2.12 on page 101

8

Summary

- Differences from the Scoreboard
  - WAW and WAR hazards eliminated entirely rather than stalling
  - CDB broadcasts results rather than waiting for a register to be available
  - load and store treated as basic functional units
  - reservation stations contain logic to detect and eliminate hazards
  - the data structures used in reservation stations are tags (virtual names for registers generated through register renaming)
- Advantages
  - distribution of hazard detection allows instructions to move past the Issue stage and improve ILP
  - elimination of stalls for WAW and WAR hazards due to register renaming
  - can provide high performance as long as branch penalties can be kept small
- Disadvantages
  - complexity of the hardware
  - restrictions because of the single CDB
  - expense of the associative memory used as tags in each reservation station
- See http://www.nku.edu/foxr/CSC462/NOTES/tomasulo-example1.xls and http://www.nku.edu/foxr/CSC462/NOTES/tomasulo-example2.xls for examples

9

Hardware Based Speculation

- Using dynamic scheduling, we might have multiple instructions being executed in the same cycle
- To obtain better control, we need to improve on our branch mechanism
  - for instance, as it is now, a mispredicted branch may not be able to prevent an imprecise exception
- So we turn to hardware-based speculation
  - predict the next instruction and issue it before determining the branch result
  - if the prediction is wrong, the instruction must be killed off before it can change the machine's state (it cannot update registers or memory or cause an exception)
- We add a new buffer called the reorder buffer
  - the buffer stores the results of completed instructions that were speculated, until the speculation is proven true or false
  - if true, allow the instruction's results to be written to registers/memory
  - if false, remove the instruction and all instructions that followed it
- We add a new state to instruction execution, called commit, to our Tomasulo-based superscalar architecture
  - should the result be stored in the destination register?
  - this becomes the final step for all instructions

10

New Architecture

- Add the reorder buffer to
  - store the output from each functional unit and load buffer
- Enhance control hardware
  - an instruction cannot issue if the reorder buffer is full
  - upon issue, update register status to include the reorder buffer entry number, and enter the reorder buffer entry number into the destination field of the reservation station
  - execution remains the same
  - write result remains the same, except that values are not written to registers here, but they are forwarded via the CDB
  - in each cycle, commit the instruction at the front of the reorder buffer if it has reached the write result stage and the speculation for the instruction was correct
  - otherwise, if the speculation for the instruction was wrong, flush the instruction and all others in the reorder buffer until you reach the first instruction fetched after the branch condition was determined
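The commit rule on this slide can be illustrated with a toy reorder buffer. This is a minimal sketch under simplifying assumptions, not the control logic from the text: the class `ReorderBuffer` and its method names are hypothetical, only one instruction commits per call, and every entry behind a mispredicted branch is treated as speculative and discarded.

```python
from collections import deque


class ReorderBuffer:
    """Toy ROB: entries commit in program order from the head."""

    def __init__(self, size):
        self.size = size
        self.entries = deque()   # oldest instruction at the left

    def full(self):
        return len(self.entries) >= self.size

    def issue(self, dest, is_branch=False):
        if self.full():
            return None          # issue must stall
        entry = {"dest": dest, "value": None, "done": False,
                 "is_branch": is_branch, "mispredicted": False}
        self.entries.append(entry)
        return entry

    def write_result(self, entry, value, mispredicted=False):
        # The result is held in the ROB (hardware also puts it on the CDB);
        # the register file is not touched here.
        entry["value"], entry["done"] = value, True
        entry["mispredicted"] = mispredicted

    def commit(self, regs):
        """Try to commit the head entry; return what happened."""
        if not self.entries or not self.entries[0]["done"]:
            return "wait"
        head = self.entries.popleft()
        if head["is_branch"] and head["mispredicted"]:
            self.entries.clear()     # flush everything issued after the branch
            return "flush"
        if head["dest"] is not None:
            regs[head["dest"]] = head["value"]
        return "commit"


regs = {"R1": 0, "R2": 0}
rob = ReorderBuffer(size=4)
a = rob.issue("R1")
br = rob.issue(None, is_branch=True)
c = rob.issue("R2")                 # speculative: issued past the branch

rob.write_result(c, 99)             # finishes early, out of order
first = rob.commit(regs)            # head (a) has not finished yet
rob.write_result(a, 7)
rob.write_result(br, None, mispredicted=True)
results = [first, rob.commit(regs), rob.commit(regs), rob.commit(regs)]
print(results, regs)
```

The speculative write of 99 to R2 never reaches the register file: it sits in the buffer until the branch ahead of it reaches the head, and it is discarded when that branch turns out to be mispredicted.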

11

Examples

- Note this architecture's new control logic, shown in figure 2.17 on page 113
- Two examples are provided in the text
  - assume FP add takes 2 cycles, FP multiply takes 10 cycles, FP divide takes 40 cycles
  - example 1: first code listing below
    - solution at http://www.nku.edu/foxr/CSC462/NOTES/reorder1.xls
  - example 2: second code listing below
    - this example uses a loop to clearly illustrate the use of speculation and the reorder buffer
    - solution at http://www.nku.edu/foxr/CSC462/NOTES/reorder2.xls

Example 1:

    L.D   F6, 34(R2)
    L.D   F2, 45(R3)
    MUL.D F0, F2, F4
    SUB.D F8, F6, F2
    DIV.D F10, F0, F6
    ADD.D F6, F8, F2

Example 2:

    Loop: L.D   F0, 0(R1)
          MUL.D F4, F0, F2
          S.D   F4, 0(R1)
          DSUBI R1, R1, 8
          BNE   R1, R2, Loop

12

Multiple Instruction Issue

- We have attempted to limit stalls from hazards to lower the average CPI to the ideal CPI of 1
  - can we decrease CPI to under 1? How?
  - issue and execute more than 1 instruction at a time
- Multiple-issue processors come in two kinds
  - superscalars
    - use static and/or dynamic scheduling mechanisms and multiple functional units to issue more than 1 instruction at a time
    - static scheduling uses the compiler to piece together instructions that might be able to execute simultaneously (for instance, the compiler might pair an integer operation with an FP operation, since they should not interfere with each other)
    - dynamic scheduling will use a Tomasulo-like architecture
  - VLIW (very long instruction word)
    - use instructions which are themselves multiple instructions, scheduled by a compiler
    - all instructions in the long word are executed in parallel

13

Superscalar

- Hardware issues from 1 to 8 instructions per clock cycle
  - these instructions must be independent and satisfy other constraints
  - avoid structural hazards: use different functional units, and make at most 1 memory reference among the instructions issued together
- Scheduling of instructions can be done statically by a compiler or dynamically by hardware
  - while a superscalar can issue any combination of instructions, for simplicity we will concentrate on a 2-instruction superscalar for MIPS where
    - one instruction will be an integer operation
    - and the other, if available, will be a floating point operation
  - this simplification reduces the complexity of the hardware, but also reduces the usefulness of the superscalar

14

How it works

- For the 1 int/1 FP superscalar
  - instruction fetch gets two consecutive instructions simultaneously (64 bits' worth of instruction)
  - if the first instruction is an int instruction and the int unit is available (it should be, since the int EX takes 1 cycle to execute), then issue the instruction
  - if the second instruction is an FP instruction and the proper FP unit is available, issue it
  - with 2 instructions fetched and issued in 1 cycle, we could ultimately have a CPI of 0.5
- To make this worthwhile
  - we will want to either increase the number of FP functional units or pipeline these units, as every clock cycle might involve issuing an FP instruction
  - the compiler can schedule instructions to be paired as int/FP, or we can use hardware to detect whether two instructions are an int/FP pair and issue both
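The hardware pairing check described on this slide can be sketched as a simple scan over the instruction stream. This is a rough illustration only: the function name `pair_for_issue` and the `FP_OPS` set are assumptions, loads and stores are counted as integer operations, and the sketch ignores data dependences and functional unit availability, both of which real issue logic must check.

```python
# Opcodes treated as floating point operations (an assumed, partial list).
FP_OPS = {"ADD.D", "SUB.D", "MUL.D", "DIV.D"}


def pair_for_issue(opcodes):
    """Group opcodes into issue packets of one int op plus an optional FP op."""
    packets = []
    i = 0
    while i < len(opcodes):
        first = opcodes[i]
        has_next = i + 1 < len(opcodes)
        if first not in FP_OPS and has_next and opcodes[i + 1] in FP_OPS:
            packets.append((first, opcodes[i + 1]))   # int/FP pair: dual issue
            i += 2
        else:
            packets.append((first,))                  # issues alone
            i += 1
    return packets


program = ["L.D", "ADD.D", "L.D", "MUL.D", "DADDI", "BNE"]
packets = pair_for_issue(program)
print(packets)
print(len(packets) / len(program))   # issue cycles per instruction
```

Only a stream that alternates integer and FP operations reaches the ideal of 0.5 issue cycles per instruction; here the two trailing integer operations issue alone, so six instructions need four issue cycles.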

15

Superscalar Example

- Now we combine speculation, dynamic scheduling and multiple issue with a superscalar processor
  - the code is given below
  - assume there are separate integer units for effective address calculation, ALU operations, and branch condition evaluation
  - notice that there are no FP operations here, so all instructions should execute in 1 cycle
  - we will look at the cycles at which each instruction issues, executes, and writes to the CDB without speculation, and issues, executes, writes and commits with speculation

Code:

    Loop: LD     R2, 0(R1)
          DADDIU R2, R2, 1
          SD     R2, 0(R1)
          DADDIU R1, R1, 4
          BNE    R2, R3, Loop

16

Without Speculation

17

With Speculation

18

Compiler Scheduling a Superscalar

- Here, the compiler's goal is to look beyond just two instructions (as we saw with the hardware-based approach) to select compatible instructions
  - if we assume, as in our previous example, that we want to execute 1 integer and 1 FP operation, then the compiler will try to pair such operations in the executable code
  - a poor pairing gains nothing: if we group an L.D with an ADD.D that uses the loaded value, the ADD.D stalls and we get no benefit from the superscalar
  - we can then combine superscalar scheduling with loop unrolling and scheduling to optimize the performance of the superscalar and the available functional units
  - assume floating point functional units are pipelined so that we can schedule multiple FP operations in a row

19

Example

- The original code (first listing below) can be scheduled for the MIPS superscalar as shown in the table that follows it
- Both versions have RAW hazard stalls not shown in the code, but the superscalar achieves a speedup of approximately 17 / 14 = 1.214, or about 21%

Original code:

    L.D   F0, 0(R1)
    L.D   F1, 0(R2)
    ADD.D F2, F0, F1
    L.D   F3, 0(R3)
    L.D   F4, 0(R4)
    ADD.D F5, F3, F4
    SUB.D F6, F3, F2
    DADDI R1, R1, 8
    DADDI R2, R2, 8
    DADDI R3, R3, 8
    DADDI R4, R4, 8
    DADDI R5, R5, 8
    S.D   F6, 0(R5)

Superscalar schedule:

| Integer | FP |
|---|---|
| L.D F0, 0(R1) | |
| L.D F1, 0(R2) | |
| L.D F3, 0(R3) | ADD.D F2, F0, F1 |
| L.D F4, 0(R4) | |
| DADDI R1, R1, 8 | ADD.D F5, F3, F4 |
| DADDI R2, R2, 8 | SUB.D F6, F3, F2 |
| DADDI R3, R3, 8 | |
| DADDI R4, R4, 8 | |
| DADDI R5, R5, 8 | |
| S.D F6, -8(R5) | |

20

Another Example

- Now we improve by also performing loop unrolling with superscalar scheduling
  - this example uses the same loop that we previously used in loop unrolling and scheduling
- Our loop executes 5 iterations in 12 cycles; for 200 total iterations that is 200 × 12 = 2400 cycles, for a speedup of 3500 / 2400 = 1.46, or 46%
- We could also compute the speedup as (14 / 4) / (12 / 5) = 1.46
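The two ways of computing this speedup can be checked with a few lines of Python. The cycle and iteration counts come from the slide; the figure of 1000 original loop iterations is an inference (200 passes of 5 iterations each, and 3500 cycles at 14 cycles per 4 iterations), not something the slide states.

```python
# Cycles per original loop iteration for each version (figures from the slide).
unrolled = 14 / 4        # unrolled and scheduled: 4 iterations in 14 cycles
superscalar = 12 / 5     # superscalar: 5 iterations in 12 cycles

per_iteration_speedup = unrolled / superscalar
print(round(per_iteration_speedup, 2))             # 1.46

# Same result from total cycle counts, assuming 1000 original iterations
# (inferred from the slide's totals, not stated there).
iterations = 1000
total_unrolled = iterations // 4 * 14              # 3500 cycles
total_superscalar = iterations // 5 * 12           # 2400 cycles
total_speedup = total_unrolled / total_superscalar
print(round(total_speedup, 2))                     # 1.46
```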

21

VLIW

- Very Long Instruction Word approach
  - statically scheduled, attempting to issue more than 2 instructions per cycle
  - the compiler must select and group instructions that can be executed together
  - to fill out an entire VLIW instruction, other compiler techniques are needed, like loop unrolling, scheduling across blocks and global techniques
  - a VLIW might be between 5 and 7 instructions long (160 to 224 bits long)
- Instruction fetch gets one VLIW
  - fetch 1 VLIW (up to 7 instructions!)
  - issue it all at once on up to 7 functional units
  - the compiler must know how many functional units are available
- As an example, the hardware might have
  - 2 integer functional units, 2 floating point functional units, 2 load/store units, 1 branch unit

22

VLIW Example

- Consider a VLIW compiler for MIPS
  - assume two load/store units, 1 integer unit (which also performs branch calculations), and two floating point functional units
  - here is an example of our previous loop, unrolled, scheduled and grouped together for our MIPS VLIW
  - 7 loop iterations in 9 cycles takes 143 total iterations, or 143 × 9 = 1287 cycles, giving us a speedup of 2400 / 1287 = 1.86 over our superscalar and 3500 / 1287 = 2.72 over loop unrolling/scheduling
  - we could also compute this as (12 cycles / 5 iterations) / (9 cycles / 7 iterations) = 1.87 and (14 / 4) / (9 / 7) = 2.72
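The VLIW figures can be reproduced as follows. The 9-cycle, 7-iteration schedule and the 2400 and 3500 cycle totals come from the slides; the count of 1000 original loop iterations is an inferred assumption, chosen because it yields the slide's 143 passes when rounded up.

```python
import math

iterations = 1000                        # assumed; inferred from the slide totals
passes = math.ceil(iterations / 7)       # 7 original iterations per VLIW pass
vliw_cycles = passes * 9                 # 9 cycles per pass
print(passes, vliw_cycles)               # 143 1287

# Speedups from total cycle counts quoted on the slides.
over_superscalar = 2400 / vliw_cycles
over_unrolled = 3500 / vliw_cycles
print(round(over_superscalar, 2), round(over_unrolled, 2))   # 1.86 2.72

# Speedups from cycles per iteration; the first differs slightly (1.87)
# because 1000 is not an exact multiple of 7.
rate_over_superscalar = (12 / 5) / (9 / 7)
rate_over_unrolled = (14 / 4) / (9 / 7)
print(round(rate_over_superscalar, 2), round(rate_over_unrolled, 2))   # 1.87 2.72
```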

23

Design Issues

- Reorder buffer vs. more registers
  - we could forgo the reorder buffer by providing additional temporary storage; in essence, the two are the same solution, just a slightly different implementation
  - both require a good deal more memory than we needed with an ordinary pipeline, but both improve performance greatly
- How much should we speculate?
  - other factors cause our multiple-issue superscalar to slow down (cache issues or exceptions, for instance), so a large amount of speculation is defeated by other hardware limitations; we might try to speculate over a couple of branches, but not more
- Speculating over multiple branches
  - imagine our loop has a selection statement; now we speculate over two branches
  - speculation over more than one branch greatly complicates matters and may not be worthwhile

24

Renaming Table

- One implementation for register renaming is to use a queue of available registers and a renaming table
- This allows for on-the-fly renaming or substituting in a superscalar
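A renaming table backed by a queue of free registers might look like the sketch below. It is a minimal illustration with assumed names (`RenameTable`, `rename`, `release`, and physical registers `P0`, `P1`, ...); it models only the mapping, not when a real design returns registers to the queue.

```python
from collections import deque


class RenameTable:
    """Map architectural registers to physical ones drawn from a free queue."""

    def __init__(self, arch_regs, num_physical):
        # Initially architectural register number i lives in physical register Pi.
        self.table = {r: f"P{i}" for i, r in enumerate(arch_regs)}
        self.free = deque(f"P{i}" for i in range(len(arch_regs), num_physical))

    def rename(self, dest, src1, src2):
        # Read sources through the current mapping first, then remap dest.
        p1, p2 = self.table[src1], self.table[src2]
        if not self.free:
            raise RuntimeError("no free physical register: issue must stall")
        old = self.table[dest]
        new = self.free.popleft()
        self.table[dest] = new
        return new, p1, p2, old   # `old` can be released once it is dead

    def release(self, phys):
        self.free.append(phys)


rt = RenameTable(["F0", "F2", "F4"], num_physical=6)
i1 = rt.rename("F0", "F2", "F4")   # F0 = F2 op F4
i2 = rt.rename("F2", "F0", "F4")   # F2 = F0 op F4 (WAR on F2, RAW on F0)
i3 = rt.rename("F0", "F2", "F4")   # F0 = F2 op F4 (WAW on F0)
print(i1, i2, i3)
```

Each write lands in a different physical register, so the second write to F0 cannot clobber the value that the second instruction still needs to read.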

25

Superscalar Loop Example

- We now examine dynamic scheduling on our superscalar
- In this case, we will schedule instructions in a loop, allowing multiple iterations to be executing at the same time, similar to what we did with Tomasulo's approach
- For this example, assume that
  - both a floating-point and an integer operation can be issued in each cycle, even if they are dependent
  - one integer functional unit is available, used for ALU operations as well as loads/stores/branch calculations
  - a separate floating point pipeline is available for the execution of floating point operations
  - issue/write stages each take 1 cycle
  - latencies: loads 2 cycles, FP add 3 cycles, ALU ops 1 cycle
  - 2 CDBs available, one for integer operations, one for floating point
  - assume no branch delay (perfect prediction)

Code:

    Loop: L.D   F0, 0(R1)
          ADD.D F4, F0, F2
          S.D   F4, 0(R1)
          DSUBI R1, R1, 8
          BNE   R1, R2, Loop

26

Execution of the Loop

27

Resource Usage for Loop

28

Example 2

Same loop, but now 2 integer ALUs, one for

load/store address calculations, one for int ALU

operations

29

Execution of the Loop

30

Resource Usage for Loop

31

Superscalar Problems

- We must now expand the potential problems that arise with a superscalar pipeline over an ordinary pipeline
  - RAW hazards could exist between the two instructions issued at the same time
  - there are new potential WAW and WAR hazards
  - we need twice as many register reads and writes as before, so our register file must be expanded to accommodate this
  - loads and stores are integer operations even if they are dealing with floating point registers
  - we might be reading floating point registers for an FP operation and also reading/writing floating point registers for an FP load or store
  - maintaining precise exceptions is difficult because an integer operation may have already completed
  - hardware must detect these problems (and quickly)

32

Cost of a Superscalar

- We already had the multiple functional units, so there is no added cost in terms of having an int and an FP instruction issue and execute in parallel
- There are added costs, though, for
  - hazard detection
    - the complexity here is increased because now instructions must be compared not only to instructions further down the pipeline, but also to the instruction at the same stage; plus, there is a potential for twice as many instructions being active at one time!
  - maintaining precise exceptions
  - two sets of buses
    - integer operations: from integer registers to integer ALU and data cache
    - FP operations: from FP registers to FP functional unit and data cache
  - ability to access the floating point register file by up to 3 instructions during the same cycle (an FP load or store in the ID or WB stage, an FP instruction in ID and an FP instruction in WB)

33

Sample Problem 1

- We are going to implement Tomasulo's approach
  - assume floating point +/- take 4 cycles to execute, × takes 7 cycles to execute and / takes 10 cycles to execute
  - assume loads and stores take 3 cycles to execute
  - assume all int operations take 2 cycles to execute
- Using the average FP SPEC benchmark statistics in figure 2.33
  - how many of each type of functional unit do you suppose we should provide, assuming the distribution of operations across a program matches their frequency?
  - e.g., 25% means that we expect this operation to arise 1 in every 4 instructions

34

Solution

- The FP averages are 39% (load/store), 38% (ALU), 4% (branch), 10% FP +/-, 8% FP ×, 0% FP /
  - with 39% load/store operations, a load or store occurs roughly every 2.5 instructions; since loads and stores take 3 cycles, we should have 2 load/store units
  - with 38% ALU + 4% branch operations, an ALU/branch operation occurs roughly every 2.5 instructions; since ALU operations take 2 cycles, we only need 1 ALU
  - with 10% FP +/-, an FP +/- occurs roughly every 10 instructions, so we only need 1 FP adder
  - with 8% FP ×, an FP × occurs roughly every 12 instructions, so we only need 1 FP multiplier
  - with about 0% FP /, we only need 1 FP divider
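The reasoning in this solution amounts to comparing how long a unit stays busy with how often it is needed. The sketch below restates it as a calculation, under assumptions the slides do not spell out: one instruction issues per cycle, functional units are not pipelined, and at least one unit of each type is always provided. The function name `units_needed` is made up for the example.

```python
def units_needed(percent, latency):
    """Units required so a non-pipelined unit type keeps up with demand.

    Assumes one instruction issues per cycle, so `percent`% of cycles start
    an operation that occupies a unit for `latency` cycles.
    """
    busy_percent = percent * latency      # average units busy, times 100
    needed = -(-busy_percent // 100)      # integer ceiling division
    return max(1, needed)                 # always provide at least one unit


# Frequencies (percent) and latencies (cycles) as given in the problem.
workload = {
    "load/store":  (39, 3),
    "ALU/branch":  (38 + 4, 2),
    "FP add/sub":  (10, 4),
    "FP multiply": (8, 7),
    "FP divide":   (0, 10),
}
counts = {name: units_needed(p, lat) for name, (p, lat) in workload.items()}
print(counts)
```

Only the load/store case exceeds one unit: 39% of instructions times 3 cycles keeps 1.17 units busy on average, which rounds up to 2.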

35

Sample Problem 2

- Given the following latencies
  - find a sequence of no more than 10 instructions that causes contention on the CDB using Tomasulo's approach
  - FP multiply → FP ALU: 6 cycles
  - FP add → FP ALU: 4 cycles
  - FP multiply → FP store: 5 cycles
  - FP add → FP store: 3 cycles
  - Int (including LD) → any: 0 cycles
  - if we have an FP multiply followed 2 cycles later by an FP add that uses different source operands, we will have bus contention; in the situation below, the MUL.S is postponed 1 cycle because it waits for the L.S to write its result, so the ADD.S that causes the contention follows 3 cycles later

| Operation | Issues at | Executes at | Writes at |
|---|---|---|---|
| L.S F2, 0(R1) | 1 | 2 | 3 |
| MUL.S F3, F2, F1 | 2 | 4 | 11 |
| int op | 3 | 4 | 5 |
| int op | 4 | 5 | 6 |
| ADD.S F4, F2, F5 | 5 | 6 | 11 |
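CDB contention in a schedule like the one above can be detected by grouping instructions by the cycle in which they write. The sketch below is an illustration only: the function name `cdb_conflicts` and the tuple layout are assumptions, and the cycle numbers are copied from the table rather than derived from the latencies.

```python
from collections import defaultdict

# (instruction, issue cycle, execute cycle, write cycle), copied from the table.
schedule = [
    ("L.S F2, 0(R1)",    1, 2, 3),
    ("MUL.S F3, F2, F1", 2, 4, 11),
    ("int op (first)",   3, 4, 5),
    ("int op (second)",  4, 5, 6),
    ("ADD.S F4, F2, F5", 5, 6, 11),
]


def cdb_conflicts(schedule, num_cdbs=1):
    """Return {cycle: [instructions]} for cycles with more writers than buses."""
    writers = defaultdict(list)
    for name, _issue, _execute, write in schedule:
        writers[write].append(name)
    return {cycle: names for cycle, names in writers.items()
            if len(names) > num_cdbs}


conflicts = cdb_conflicts(schedule)
print(conflicts)   # {11: ['MUL.S F3, F2, F1', 'ADD.S F4, F2, F5']}
```

Calling `cdb_conflicts(schedule, num_cdbs=2)` returns an empty dictionary, since two buses can carry both results in cycle 11.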