
Problems with the Borderline Method and OSCEs

Philip R Belcher, Faculty of Medicine Quality Assurance Officer

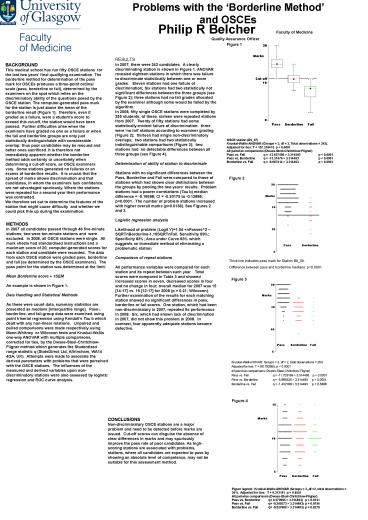

Figure 1. OSCE station D9_07. Kruskal-Wallis ANOVAR (groups = 3, df = 2, total observations = 243). Adjusted for ties: T = 157.216415, p < 0.0001. All pairwise comparisons (Dwass-Steel-Critchlow-Fligner; critical value |q| > 3.314493): Pass vs. Fail q = -13.627489, p < 0.0001; Pass vs. Borderline q = -13.31476, p < 0.0001; Borderline vs. Fail q = -9.667414, p < 0.0001.

RESULTS

In 2007, there were 243 candidates. A clearly discriminating station is shown in Figure 1. ANOVAR revealed eighteen stations in which there was failure to discriminate statistically between one or more grades. Eleven stations had one failure of discrimination; six stations had two statistically non-significant differences between the three groups (see Figure 2); three stations had no fail grades allocated by the examiner, although some candidates would have been failed by the algorithm.

In 2008, fifty single OSCE stations were completed by 255 students; of these, sixteen were repeated stations from 2007. Twenty of the fifty stations had some statistically evident failure of discrimination: three were no-fail stations according to examiner grading (Figure 2); thirteen had single non-discriminatory overlaps; two stations had two statistically indistinguishable comparisons (Figure 3); two stations had no detectable differences between all three groups (see Figure 4).

Determination of ability of station to discriminate

Stations with no significant differences between the Pass, Borderline and Fail groups were compared with those which had shown clear distinctions between the groups, by pooling the two years' results. Problem stations had poorer correlations (Tau b): median difference -0.16598, CI -0.20175 to -0.12899, p < 0.0001.
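The Tau b correlation between examiner grade and station score can be illustrated with a short sketch. This is a minimal pure-Python version written for illustration, not the StatsDirect routine used for the analysis; the function name `kendall_tau_b`, the grade coding (fail = 0, borderline = 1, pass = 2) and the example scores are all assumptions.

```python
from math import sqrt

def kendall_tau_b(x, y):
    """Kendall's tau-b for two equal-length sequences, allowing tied values."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)
    concordant = discordant = ties_x = ties_y = 0
    # Examine every pair of candidates once.
    for i in range(n):
        for j in range(i + 1, n):
            dx = x[i] - x[j]
            dy = y[i] - y[j]
            if dx == 0:
                ties_x += 1          # same examiner grade
            if dy == 0:
                ties_y += 1          # same station score
            if dx != 0 and dy != 0:
                if dx * dy > 0:
                    concordant += 1
                else:
                    discordant += 1
    n_pairs = n * (n - 1) // 2
    denominator = sqrt((n_pairs - ties_x) * (n_pairs - ties_y))
    if denominator == 0:
        raise ValueError("tau-b is undefined when one variable is constant")
    return (concordant - discordant) / denominator

# Hypothetical station: grades coded fail = 0, borderline = 1, pass = 2.
grades = [0, 0, 1, 1, 2, 2]
clear_scores = [8, 10, 11, 12, 15, 17]      # scores rise cleanly with grade
muddled_scores = [14, 9, 12, 15, 11, 13]    # scores overlap across grades

tau_clear = kendall_tau_b(grades, clear_scores)
tau_muddled = kendall_tau_b(grades, muddled_scores)
```

A station whose scores separate cleanly by grade gives a Tau b close to 1; overlapping score distributions pull the value towards 0, which is the pattern described for the problem stations.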

The number of problem stations increased with higher overall marks (p = 0.0138). See Figures 2 and 3.

Logistic regression analysis

Likelihood of problem (Logit Y) = 1.04 nPasses2 SQRTnBorderline - 1.16 SQRTnFail. Sensitivity 69%, specificity 83%, area under curve 83%, which suggests an immediate method of eliminating a problematic station.

Comparison of repeat stations

All performance variables were compared for each station and its repeat between the two years. Total scores were compared in Table 3 and showed increased scores in seven, decreased scores in four and no change in four; the overall median for 2007 was 15 (14-17) vs. 16 (12-17) for 2008 (p = 0.21, Wilcoxon). Further examination of the results for each matching station showed no significant differences in pass, borderline or fail scores. One station, which had been non-discriminatory in 2007, repeated its performance in 2008. Six, which had shown lack of discrimination in 2007, did not show this problem in 2008. In contrast, four apparently adequate stations became defective.

BACKGROUND

This medical school has run fifty OSCE stations for the last two years' final qualifying examination. The borderline method for determination of the pass mark for OSCEs produces a three-point ordinal scale (pass, borderline or fail), determined by the examiners on the spot, which relies on the discriminatory ability of the questions posed by the OSCE station. The computer-generated pass mark for the station is just above the mean of the borderline result (Figure 1); therefore, even if a student were graded as a failure, the station would have been passed if the student's score exceeded this cut-off.
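A small sketch makes the rescue effect concrete. It assumes the cut-off is the mean of the borderline-graded scores plus one standard error of the mean; the function names `borderline_pass_mark` and `rescued_candidates` and all scores are hypothetical and chosen only for illustration.

```python
from math import sqrt
from statistics import mean, stdev

def borderline_pass_mark(borderline_scores):
    """Assumed cut-off: mean of the borderline-graded scores + 1 SEM."""
    n = len(borderline_scores)
    if n < 2:
        raise ValueError("need at least two borderline scores to estimate the SEM")
    sem = stdev(borderline_scores) / sqrt(n)   # sample SD divided by sqrt(n)
    return mean(borderline_scores) + sem

def rescued_candidates(fail_scores, pass_mark):
    """Scores graded 'fail' by the examiner that still exceed the cut-off."""
    return [score for score in fail_scores if score > pass_mark]

# Hypothetical station marked out of 20.
borderline_scores = [10, 12, 11, 13]
fail_scores = [7, 9, 13, 12]

pass_mark = borderline_pass_mark(borderline_scores)
rescued = rescued_candidates(fail_scores, pass_mark)
```

In this invented example the cut-off falls a little above 12, so the candidate graded as a failure with a score of 13 would pass the station on marks alone.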

Further difficulties arise when the examiners have graded no one as a failure, or when the fail and borderline groups are only just statistically distinguishable with considerable overlap; thus poor candidates may be rescued and better ones sacrificed. It is therefore not immediately apparent whether the borderline method adds certainty or uncertainty when determining a cut-off score, as OSCE examiners vary. Some stations generated no failures or an excess of borderline results. It is crucial that the spread of marks allows discrimination and that candidates in whom the examiners lack confidence are not advantaged spuriously. Where the stations were repeated for a second year, their performance was contrasted. We therefore set out to determine the features of the station that might cause difficulty and whether we could pick this up during the examination.

Figure 2. Thick line indicates pass mark for Station B9_08. Difference between pass and borderline medians: p < 0.0001.

METHODS

In 2007 all candidates passed through 46 five-minute stations; two were ten-minute stations and were excluded. In 2008, all OSCE stations were single. All mark sheets had standardised instructions and a maximum score of 20; computer-generated scores for each station and candidate were recorded. The data from each OSCE station were graded pass, borderline and fail (as determined by the OSCE examiners). The pass point for the station was determined at the limit: mean borderline score + 1 SEM. An example is shown in Figure 1.

Data Handling and Statistical Methods

As these were count data, summary statistics are presented as medians (interquartile range). Pass-, borderline- and fail-group data were examined using point triserial regression using Kendall's Tau b, which dealt with any non-linear relations. Unpaired and paired comparisons were made using Mann-Whitney and Wilcoxon tests respectively; comparisons across the three groups used Kruskal-Wallis one-way ANOVAR with multiple comparisons, corrected for ties, by the Dwass-Steel-Critchlow-Fligner method, which generates the Studentized range statistic q (StatsDirect Ltd, Altrincham, WA14 4QA, UK).
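The three-group comparison can be sketched in pure Python. This is an illustrative implementation of the tie-corrected Kruskal-Wallis statistic, assumed to correspond to the value reported as T in the figure legends; it is not the StatsDirect code, it does not perform the Dwass-Steel-Critchlow-Fligner pairwise step, and the names `average_ranks` and `kruskal_wallis_three_groups` are hypothetical. With three groups (df = 2) the chi-square tail probability reduces to exp(-H/2), which is why no statistics library is needed.

```python
from math import exp

def average_ranks(values):
    """Rank values 1..N, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_sizes = []
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1          # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes

def kruskal_wallis_three_groups(fail, borderline, passed):
    """Return (tie-adjusted H, p) for exactly three groups (df = 2)."""
    groups = [list(fail), list(borderline), list(passed)]
    if any(len(g) == 0 for g in groups):
        raise ValueError("every group needs at least one score")
    pooled = [score for g in groups for score in g]
    n_total = len(pooled)
    ranks, tie_sizes = average_ranks(pooled)

    # Sum of (rank sum squared / group size) over the three groups.
    start = 0
    weighted = 0.0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)

    h = 12 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)
    correction = 1 - sum(t ** 3 - t for t in tie_sizes) / (n_total ** 3 - n_total)
    if correction == 0:
        raise ValueError("all scores are identical; the statistic is undefined")
    h_adjusted = h / correction
    p_value = exp(-h_adjusted / 2)           # chi-square survival function, df = 2
    return h_adjusted, p_value

# Hypothetical station scores by examiner grade.
h_stat, p_val = kruskal_wallis_three_groups([1, 2, 3], [4, 5, 6], [7, 8, 9])
```

A large statistic with a small p-value indicates that at least one grade group differs; the pairwise comparisons are still needed to see which grades the station fails to separate.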

Attempts were made to associate the derived parameters with problems that were perceived with the OSCE stations. The influences of the measured and derived variables upon non-discriminatory stations were also assessed by logistic regression and ROC curve analysis.
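The ROC summary measures can be sketched as follows, assuming a fitted logistic model has already produced a predicted probability of being a problem station. The function names, the 0.5 threshold, and the probabilities and labels are hypothetical illustrations, not the study's data or fitted model.

```python
def sensitivity_specificity(predicted_problem, is_problem):
    """Sensitivity and specificity from paired boolean predictions and truths."""
    pairs = list(zip(predicted_problem, is_problem))
    true_pos = sum(1 for pred, truth in pairs if pred and truth)
    false_neg = sum(1 for pred, truth in pairs if not pred and truth)
    true_neg = sum(1 for pred, truth in pairs if not pred and not truth)
    false_pos = sum(1 for pred, truth in pairs if pred and not truth)
    if true_pos + false_neg == 0 or true_neg + false_pos == 0:
        raise ValueError("need at least one problem and one sound station")
    return (true_pos / (true_pos + false_neg),
            true_neg / (true_neg + false_pos))

def roc_auc(probabilities, is_problem):
    """Area under the ROC curve as the chance that a problem station
    receives a higher predicted probability than a sound one (ties count half)."""
    problem = [p for p, truth in zip(probabilities, is_problem) if truth]
    sound = [p for p, truth in zip(probabilities, is_problem) if not truth]
    if not problem or not sound:
        raise ValueError("need at least one problem and one sound station")
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0
               for a in problem for b in sound)
    return wins / (len(problem) * len(sound))

# Hypothetical predicted probabilities for eight stations.
probabilities = [0.9, 0.8, 0.35, 0.6, 0.3, 0.2, 0.1, 0.4]
is_problem = [True, True, True, False, False, False, False, False]

flagged = [p >= 0.5 for p in probabilities]   # assumed decision threshold
sens, spec = sensitivity_specificity(flagged, is_problem)
auc = roc_auc(probabilities, is_problem)
```

Moving the threshold trades sensitivity against specificity, whereas the area under the curve summarises discrimination across all thresholds.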

Figure 3. Kruskal-Wallis ANOVAR (groups = 3, df = 2, total observations = 253). Adjusted for ties: T = 80.793955, p < 0.0001. All pairwise comparisons (Dwass-Steel-Critchlow-Fligner; critical value |q| > 3.314493): Pass vs. Fail q = -11.703169, p < 0.0001; Pass vs. Borderline q = -5.985335, p < 0.0001; Borderline vs. Fail q = -1.432188, p = 0.5688.

Figure 4. Kruskal-Wallis ANOVAR (groups = 3, df = 2, total observations = 241). Adjusted for ties: T = 0.313181, p = 0.8551. All pairwise comparisons (Dwass-Steel-Critchlow-Fligner; critical value |q| > 3.314493): Pass vs. Borderline q = 0.570995, p = 0.9141; Pass vs. Fail q = -0.300373, p = 0.9754; Borderline vs. Fail q = -0.827908, p = 0.8279.

CONCLUSIONS

Non-discriminatory OSCE stations are a major problem and need to be detected before marks are issued. Cut-off scores can disguise the absence of clear differences in marks and may spuriously improve the pass rate of poor candidates. As high-scoring stations are associated with problems, stations where all candidates are expected to pass by showing an absolute level of competence may not be suitable for this assessment method.