Sample Searches PowerPoint PPT Presentation

1 / 59

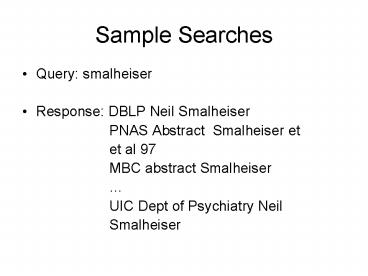

Title: Sample Searches

1

Sample Searches

- Query: smalheiser

- Response:

- DBLP: Neil Smalheiser

- PNAS abstract: Smalheiser et al. 97

- MBC abstract: Smalheiser

- UIC Dept. of Psychiatry: Neil Smalheiser

2

Query: computer science genetics

- Campus program: university, campus, college and employment resources

- SpringerLink (on-line journals and books in science, technology and medicine)

- Course (Advanced topics in computer science and computational genetics)

- Annual Review of Computer Science

3

Comments for Slides 1-2

- The first two searches are intended to show that Google is reasonably good at retrieving Web pages when given a few keywords.

- In the first query, the name Smalheiser is submitted. Ideally, the home page of Smalheiser should be retrieved first. Instead, his publications in computer science are retrieved in the first document. This is followed by some of his publications in the medical area. Finally, his home page in Psychiatry is retrieved.

- The second query asks for important documents in the intersection of the two areas, computer science and genetics. The first retrieved document seems to be unrelated to the query. The second retrieved document seems OK. The third document is a course in both areas.

- The examples show that Google is still far from perfect.

4

Information Retrieval

- Document representation

- Remove stop words. E.g., in "Automatically Identifying Gene Terms in MEDLINE Abstracts", remove "in"

- Stemming: "Automatically" becomes "automatic"; "Identifying" becomes "identify"; "Abstracts" becomes "abstract"

5

Comments on last slide

The first two steps in constructing a document

representation consist of eliminating non-content

words and mapping variations of the same word to

the same stem via a process called stemming.
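The two steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the stop-word list and the three suffix rules are hypothetical and were chosen just to cover the example title; a real system would use a full stop list and a proper stemmer such as Porter's.

```python
import re

# Assumed, illustrative stop-word list; real lists are much longer.
STOP_WORDS = {"in", "the", "of", "a", "an", "and", "to"}

# Toy (suffix, replacement) rules standing in for a real stemming algorithm.
SUFFIX_RULES = [("ally", ""), ("ying", "y"), ("s", "")]

def stem(word):
    """Apply the first matching suffix rule, keeping at least 3 characters."""
    for suffix, replacement in SUFFIX_RULES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: len(word) - len(suffix)] + replacement
    return word

def content_terms(text):
    """Lowercase, tokenize, drop stop words, then stem what is left."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [stem(t) for t in tokens if t not in STOP_WORDS]

terms = content_terms("Automatically Identifying Gene Terms in MEDLINE Abstracts")
print(terms)
```

With these toy rules the title reduces to the terms automatic, identify, gene, term, medline and abstract.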

6

Document representation

- Document is a set of content words or terms: automatic, identify, gene, term, medline, abstract

- Sometimes, keep locations of terms. E.g., "automatic" is the first word in the title

7

Comments on last slide

- Location information can be important in differentiating the ordering of content words in a query from other orderings of the same words. It is also useful in determining phrases.

8

Assign weights to terms

- Term frequency: no. of times the term occurs in the document

- Document frequency: no. of documents having the term

- The weight of a term in a document is proportional to term frequency and inversely proportional to document frequency

- E.g., $\text{term frequency} \times \log(N / \text{document frequency})$, where $N$ = no. of documents in the collection

9

Comments on the last slide

- The well-known tf-idf weighting scheme to assign

a weight to a term is given. The weight is

proportional to the term frequency and inversely

proportional to its document frequency. There are

numerous variations of this formula, but all of

them have the property that higher weights are

given to terms with higher term frequencies and

lower document frequencies.
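As a rough sketch of one such variation, the weight can be computed as term frequency times log(N / document frequency). The function name `tf_idf` and the three tiny documents are made up for illustration.

```python
import math
from collections import Counter

def tf_idf(docs):
    """docs: list of term lists. Returns one {term: weight} dict per document."""
    n = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(doc))  # count each term once per document
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({t: tf[t] * math.log(n / df[t]) for t in tf})
    return weights

docs = [
    ["gene", "gene", "abstract"],
    ["gene", "medline"],
    ["gene", "term", "term"],
]
w = tf_idf(docs)
print(w[0])
print(w[2])
```

Here "gene" occurs in every document, so its weight is 0 everywhere, while "term" (two occurrences, one document) gets the highest weight.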

10

Other factors in assigning weights

- Terms in title

- Terms in abstract

- Terms in big fonts etc.

- These terms get heavier weights

11

Comments to last slide

- If a term occurs in the title, it usually gets a higher weight than the same term occurring in the main text. This may also apply to a term appearing in the abstract. If the term occurs in big fonts or in a way that attracts readers' attention, it should also get a higher weight.

- All these situations can be implemented by assuming that each occurrence of such a term is equivalent to k occurrences of the same term in the main text, with k > 1.
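A minimal sketch of this idea: each title occurrence is counted as k main-text occurrences. The value k = 3 and the function name are assumptions for illustration only.

```python
from collections import Counter

def weighted_term_frequency(title_terms, body_terms, k=3):
    """Count body occurrences once and title occurrences k times (k > 1)."""
    tf = Counter(body_terms)
    for term in title_terms:
        tf[term] += k
    return tf

tf = weighted_term_frequency(["gene"], ["gene", "term"], k=3)
print(tf)
```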

12

Query representation

- Two common models

- Vector space model: query as a set of terms, possibly ordered

- Boolean model: terms connected by AND, OR and NOT

13

Comments to the last slide

- In the information retrieval literature, it has been shown that the vector space model is usually better than the Boolean model. If a query contains quite a few terms connected by ANDs, there may not be any document satisfying the query. If the terms are connected by ORs, there may be too many unordered documents satisfying the query, and the user has no efficient way to tell the useful documents from the irrelevant ones.

- In practice, it is likely that a hybrid model having features of both models is used for effective retrieval.

14

Vector space model

- Each dimension of a vector represents a distinct term

- Dimensions: all terms in the collection, including proper names

- E.g., "automatic identify gene" = (1, 1, 1, ...)

15

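Compute the similarity between a query and a document

- $Q = (q_1, \ldots, q_n)$, $D = (d_1, \ldots, d_n)$

- $\text{Dot}(Q, D) = \sum_{i=1}^{n} q_i d_i$: terms in common, favors long documents

- $\text{Norm}(D) = \sqrt{\sum_{i=1}^{n} d_i^2}$

- $\text{Cosine}(Q, D) = \text{Dot}(Q, D) / (\text{Norm}(D)\,\text{Norm}(Q))$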

16

Comments on last slide

- When the documents are binary vectors, the Dot product similarity function obtains the number of terms in common between the two vectors. When the terms are weighted, the weights are incorporated into the similarity function. Clearly, this favors a long document such as an encyclopedia.

- To compensate for this, the norm (length) of a document is included in the denominator of the similarity function so that a longer document gets a larger denominator. The query norm is used to ensure that the Cosine function returns a value between 0 and 1, if all terms have non-negative values. When the two vectors differ from each other by a positive multiplicative constant, their angular distance is 0 and the Cosine value is 1.
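The difference between the two similarity functions can be seen in a small sketch. The vectors are invented for illustration; `long_doc` stands for a long document that mentions the query terms along with much else.

```python
import math

def dot(q, d):
    """Dot product: with binary vectors, the number of terms in common."""
    return sum(qi * di for qi, di in zip(q, d))

def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(dot(v, v))

def cosine(q, d):
    """Dot product divided by both norms; 0.0 if either vector is all zeros."""
    denom = norm(q) * norm(d)
    return dot(q, d) / denom if denom else 0.0

query = [1, 1, 1, 0]
short_doc = [1, 1, 1, 0]
long_doc = [2, 2, 2, 5]

print(dot(query, short_doc), dot(query, long_doc))
print(cosine(query, short_doc), cosine(query, long_doc))
```

The Dot product prefers `long_doc`, while Cosine prefers `short_doc`, whose direction matches the query exactly.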

17

Boolean model

- gene AND abstract (sometimes uses + to ensure the term needs to be present)

- gene OR abstract

- gene AND NOT abstract (uses - to indicate undesired terms, e.g., gene -abstract)
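One common way to evaluate such queries, sketched here as an assumption rather than as any specific engine's method, is to keep for each term the set of documents containing it and to map AND, OR and AND NOT to set operations. The document ids are hypothetical.

```python
# Hypothetical inverted index: term -> ids of the documents containing it.
index = {
    "gene": {1, 2, 3},
    "abstract": {2, 3, 4},
}

both = index["gene"] & index["abstract"]       # gene AND abstract
either = index["gene"] | index["abstract"]     # gene OR abstract
gene_only = index["gene"] - index["abstract"]  # gene AND NOT abstract

print(both, either, gene_only)
```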

18

Other features

- Phrase search: "information retrieval"

- Proximity search: information NEAR retrieval

- Date search: 2003

- Field search: e.g., in the field Author, look for Neal

- Wildcard search: smal*er

19

Comments on last slide

- Some systems require a query phrase such as "information retrieval" to be placed in quotes. This may require a retrieved document to have exactly such a phrase.

- If a document containing the words "retrieval of information" is desired, the query can be reformulated as information NEAR retrieval.

- Filtering operations can be specified by filling in additional information in specific fields such as the author field. Wildcard entries such as smal*er, where * denotes zero or more characters, are allowed, provided that * does not occur in the first few characters (say 3); otherwise the space for searching matching strings will be too large.
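The wildcard restriction can be sketched as follows. The `*` symbol, the pattern `smal*er`, the vocabulary and the function name are assumptions for illustration; the fixed prefix before the wildcard narrows the candidate words before the full pattern is tested.

```python
import re

def wildcard_search(pattern, vocabulary, min_prefix=3):
    """Match a pattern containing '*' (zero or more characters) against a vocabulary."""
    star = pattern.find("*")
    if 0 <= star < min_prefix:
        raise ValueError("wildcard must not occur in the first %d characters" % min_prefix)
    regex = re.compile(".*".join(re.escape(part) for part in pattern.split("*")))
    prefix = pattern[:star] if star >= 0 else pattern
    # The literal prefix limits how many index entries have to be examined.
    candidates = [w for w in vocabulary if w.startswith(prefix)]
    return [w for w in candidates if regex.fullmatch(w)]

vocabulary = ["retrieval", "small", "smaller", "smalheiser"]
matches = wildcard_search("smal*er", vocabulary)
print(matches)
```

A pattern such as `sm*er` is rejected because the wildcard falls within the first three characters.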

20

Additional features

- Case sensitive: "java" gets java, Java, JAVA; "Java" gets Java and possibly JAVA (first capital letter implies a proper name)

- Ordered query terms: e.g., stray dog

- Spelling error: if no such word, some search engines suggest similar words

21

Comments on last slide

- Location information in documents and the query can be used to differentiate "stray dog" from "dog stray".

- If a word does not exist in the index of all words in the documents, then some search engines may suggest some neighboring words which differ from the misspelled word by 1 or 2 characters. Note that proper names are included in the index.
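One plausible way to find such neighboring words, offered as an assumption since the slides do not name a method, is edit distance: suggest index words within 1 or 2 single-character edits of the unknown word. The vocabulary below is hypothetical.

```python
def edit_distance(a, b):
    """Levenshtein distance: insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute or match
        prev = cur
    return prev[-1]

def suggest(word, vocabulary, max_distance=2):
    """Return index words within max_distance edits, closest first."""
    if word in vocabulary:
        return []
    scored = sorted((edit_distance(word, v), v) for v in vocabulary)
    return [v for dist, v in scored if dist <= max_distance]

vocabulary = {"retrieval", "retrieve", "gene", "smalheiser"}
suggestions = suggest("retreival", vocabulary)
print(suggestions)
```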

22

Directory search

- Specify subtree, e.g., in a directory with top-level nodes computer, finance, medicine and lower-level nodes hardware, software, ...

- Query "memory" under "computer" means computer memory, vs. human memory in medicine

23

Comments in last slide

- Directory search may reduce ambiguities.

- In the given directory, documents or pages are

classified under each node. For example, there is

a set of documents which are classified under

computer and another class under medicine. The

former class contains documents about computer

memory while the latter class contains documents

about human memory. If the query is restricted to

the class computer, then only documents in the

former class relating to computer memory will be

retrieved.

24

Feedback

- Identify relevant documents and possibly irrelevant documents

- Re-formulate the query using terms from relevant documents and from irrelevant documents

- Query: apple; relevant doc: computer; irrelevant doc: fruit

- Modified query: apple, computer, -fruit

25

Comments to last slide

- The user needs to identify relevant documents and

possibly irrelevant documents. Terms from the

relevant documents may be added to the query,

while terms from the irrelevant documents may be

used to exclude documents having such terms from

being retrieved in the next round. In the example, the

term computer is found in the relevant

documents and is added to the query, while the

term fruit is found in the irrelevant documents

and it is used to exclude documents having such a

word.
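The apple example can be sketched as below. This is a simplified illustration, not a standard feedback formula: terms seen only in relevant documents are added, terms seen only in irrelevant documents become exclusions, and all names and documents are hypothetical.

```python
def reformulate(query, relevant_docs, irrelevant_docs):
    """Return (terms to include, terms to exclude) for the next round."""
    rel = set().union(*relevant_docs)
    irrel = set().union(*irrelevant_docs)
    include = set(query) | (rel - irrel)
    exclude = irrel - rel - set(query)
    return include, exclude

def matches(doc_terms, include, exclude):
    """A document matches if it has an included term and no excluded term."""
    return bool(doc_terms & include) and not (doc_terms & exclude)

include, exclude = reformulate(
    ["apple"],
    relevant_docs=[{"apple", "computer"}],
    irrelevant_docs=[{"apple", "fruit"}],
)
print(include, exclude)
print(matches({"apple", "laptop"}, include, exclude))
print(matches({"apple", "fruit", "pie"}, include, exclude))
```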

26

Web

- Surface Web: linked together

- Deep Web: not linked; documents can be generated dynamically by programs

- Quite a few medical databases and bio-medical databases are in the Deep Web

27

Comments on the last slide

- The Web is roughly classified into the Surface Web and the Deep Web. The pages in the former are hyperlinked, while pages in the latter are accessible only by submitting queries to query interfaces.

- Web crawlers, which extract content information from Surface Web pages, are unable to get into Deep Web pages for lack of hyperlinks.

28

Retrieval from the surface Web

- Anchor text belongs to the document pointed to

- `<a href="http://tigger.uic.edu/htbin/cgiwrap/bin/newsbureau/cgi-bin/index.cgi">More News</a>`

- Page rank: importance of a Web page

- Rank(P) is computed from Rank(Qi) for every Qi pointing to P

- Iterative

- Web surfing interpretation

29

Comments on last slide

- There are some differences between retrieval from

the Web and from non-Web sources. In the former

case, words known as anchor texts which appear

together with the link from a page A to another

page B should be utilized for retrieval.

Specifically, the anchor words should be used as

content words for page B, as they describe the

contents of B as observed by the user who creates

A.

30

Example to illustrate page rank

- Rank(P) = ½ Rank(A) + 1/3 Rank(B)

- A lot of pages point to the IBM home page, implying that it has a very high page rank.

[Figure: pages A and B each link to page P]

31

Comments on last slide

- The example illustrates how the page rank of a

page can be computed. In practice, all pages are

initialized with the same rank and the page rank

formula is applied to compute the page ranks of

all pages. This process is repeated until

convergence is reached. Under some reasonable

assumptions, convergence is guaranteed. The page

rank information is utilized to rank pages for

any user query.
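The iteration can be sketched as follows, under two assumptions not stated in the slides: each page divides its rank equally among its outgoing links (consistent with ½ Rank(A) + 1/3 Rank(B) if A has two outgoing links and B has three), and no damping factor is used. The four-page graph is hypothetical and has no page without outgoing links.

```python
def page_rank(links, iterations=100):
    """links: {page: [pages it links to]}. Simplified formula, no damping."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    rank = {p: 1.0 / len(pages) for p in pages}  # same initial rank for all pages
    for _ in range(iterations):
        new_rank = {p: 0.0 for p in pages}
        for source, targets in links.items():
            for target in targets:
                # Each page passes an equal share of its rank along each link.
                new_rank[target] += rank[source] / len(targets)
        rank = new_rank
    return rank

links = {
    "A": ["P", "B"],
    "B": ["P", "A", "C"],
    "C": ["B"],
    "P": ["A"],
}
ranks = page_rank(links)
print(ranks)
```

After convergence, the rank of P equals half the rank of A plus one third of the rank of B, as in the example.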

32

Query IBM

- Thousands of pages have that word, but among those pages, the IBM home page has the largest rank.

- Google utilizes page rank

33

Comments on last slide

- There are a number of ways to utilize page ranks

to rank pages for a given query. One way is to

first retrieve pages which have reasonable

similarities with the query. Then the retrieved

pages are re-ranked in descending order of page

rank. Another way is to compute the relevance of

a page based on a function of the similarity of

the page with the query and its page rank. Then

pages are re-ranked in descending order of

relevance.
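Both strategies can be sketched briefly. The similarity threshold, the linear combination with weight `alpha`, and all scores below are assumptions made for illustration; the slides do not specify the function actually used.

```python
def rerank_by_page_rank(candidates, min_similarity=0.5):
    """Strategy 1: keep reasonably similar pages, then order by page rank."""
    kept = [c for c in candidates if c[1] >= min_similarity]
    return sorted(kept, key=lambda c: c[2], reverse=True)

def rerank_combined(candidates, alpha=0.7):
    """Strategy 2: order by a combined relevance of similarity and page rank."""
    return sorted(candidates,
                  key=lambda c: alpha * c[1] + (1 - alpha) * c[2],
                  reverse=True)

# (page, similarity to the query, page rank scaled to 0..1); hypothetical values.
candidates = [
    ("fan page", 0.9, 0.1),
    ("IBM home page", 0.8, 0.9),
    ("unrelated page", 0.1, 0.95),
]
first = [c[0] for c in rerank_by_page_rank(candidates)]
second = [c[0] for c in rerank_combined(candidates)]
print(first)
print(second)
```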

34

Authority and Hub

- Query retrieves documents based on similarities

- Expand this set by adding their parents and their children

- Compute $A(p) = \sum H(q)$ for each edge $(q, p)$

- Compute $H(p) = \sum A(q)$ for each edge $(p, q)$

35

Authority and Hub continued

- Normalize A(p) and H(p)

- Repeat until A() and H() converge

- Output pages with top authority scores

- (It has been shown that convergence is guaranteed.)

- www.teoma.com (This company claims to have an advanced search capability which is more accurate than the standard authority and hub technique.)
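The authority and hub computation can be sketched as below. The sketch assumes the edge list already covers the expanded set of pages, uses Euclidean normalization, and runs a fixed number of iterations instead of testing for convergence; the graph is hypothetical.

```python
import math

def normalize(scores):
    """Scale scores so that their squares sum to 1."""
    length = math.sqrt(sum(v * v for v in scores.values())) or 1.0
    return {p: v / length for p, v in scores.items()}

def hits(edges, iterations=50):
    """edges: list of (q, p) pairs meaning page q links to page p."""
    pages = {p for edge in edges for p in edge}
    auth = dict.fromkeys(pages, 1.0)
    hub = dict.fromkeys(pages, 1.0)
    for _ in range(iterations):
        # A(p) = sum of H(q) over edges (q, p)
        auth = normalize({p: sum(hub[q] for q, t in edges if t == p) for p in pages})
        # H(p) = sum of A(q) over edges (p, q)
        hub = normalize({p: sum(auth[t] for q, t in edges if q == p) for p in pages})
    return auth, hub

edges = [("h1", "a1"), ("h1", "a2"), ("h2", "a1"), ("h3", "a1")]
auth, hub = hits(edges)
print(max(auth, key=auth.get), max(hub, key=hub.get))
```

Page a1, pointed to by all three hubs, gets the top authority score; h1, which points to both authorities, is the top hub.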

36

- Various features of different search engines, including Google, AltaVista, HotBot etc.:

- Search Engines for the World Wide Web, by Alfred and Emily Glossbrenner, 3rd edition, Peachpit Press, 2001.

37

Metasearch engine

- Connects to numerous search engines.

- Given query Q, finds suitable search engines to process the query, invokes the selected search engines to search and merges their results.

38

Comments on last slide

- Instead of using a search engine such as Google,

a metasearch engine which connects to numerous

search engines can be utilized. Upon receiving a

user query, a metasearch engine sends the query

(possibly with some modifications) to appropriate

search engines and merges and re-ranks the

retrieved documents returned from the invoked

search engines.
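The merge step can be sketched as follows. The two engines are stand-in functions returning fixed hypothetical results, and the merging rule (summing reciprocal rank positions) is just one simple choice, not necessarily what any real metasearch engine does. Engine selection is omitted.

```python
from collections import defaultdict

def metasearch(query, engines):
    """Send the query to each engine and merge the ranked result lists."""
    scores = defaultdict(float)
    for engine in engines:
        for position, page in enumerate(engine(query), start=1):
            scores[page] += 1.0 / position  # higher positions contribute more
    # Sort by descending score; break ties alphabetically for a stable order.
    return sorted(scores, key=lambda page: (-scores[page], page))

# Hypothetical underlying search engines.
def engine_a(query):
    return ["page1", "page2", "page3"]

def engine_b(query):
    return ["page2", "page4"]

merged = metasearch("gene abstract", [engine_a, engine_b])
print(merged)
```

Here page2 comes first because both engines return it.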

39

Advantages of Metasearch Engines over Search

Engines

- Do not need substantial hardware relative to large search engines

- Large coverage

- Up-to-date information

40

Comments to last slide

- There is no need for substantial hardware,

because the searches are done by the underlying

search engines. The coverage of a metasearch

engine is the union of the coverages of the

individual search engines. That it may have more

up-to-date information than a large search engine

will be explained by the next few slides.

41

Up-to-date information

- Search engine crawler gets data

- Builds large index database

- Time consuming to update large index database

- Metasearch engine connects to numerous small

search engines

42

Comments to last slide

- A search engine utilizes a crawler to extract

contents from Surface Web pages and then builds

an index database. Upon receiving a query, the

search engine searches the index database to

determine the pages to return to the user. Since

the contents of Web pages keep on changing, the

index database needs to be updated. However, the

index database is large and refreshing it may

take a long time, say weeks. In contrast, if a

metasearch engine is connected to numerous small

search engines and each of these search engines

keeps its database up-to-date, the metasearch

engine may be able to provide current information.

43

Utilizes dictionary/ontology

- WordNet: ordinary dictionary terms

- MeSH hierarchy: medical terms

- May want to include synonyms and hyponyms of query terms in the query

- E.g., person: synonyms human, people; hyponyms man, woman

44

Comments to last slide

- Dictionaries or ontologies may be utilized to

achieve high retrieval effectiveness. A common

dictionary in a general domain is WordNet, which

provides synonyms, hyponyms and other

relationships for each ordinary word. As an

example, if a query contains the word person,

its synonyms and hyponyms may be added to the

query. Note that a word may have multiple senses

(meanings) and selections of suitable synonyms

and hyponyms are essential. It is worthwhile to

explore the use of the MeSH hierarchy for

effective retrieval in the medical domain.

45

Difficulty

- A word sometimes has many senses

- E.g., query: drugs for mental patients

- Senses for "drugs": prescription drugs, illegal drugs

- Useful to include "antidepressant"

- Will retrieve a lot of irrelevant documents if "heroin" is included

46

Comments to the last slide

- The example shows that a correct addition of a

hyponym (antidepressant is a hyponym of drug)

will lead to high retrieval effectiveness while

an incorrect addition (heroin is also a hyponym

of drug) leads to poor retrieval results.

47

Natural Language Processing for Information Retrieval

- Finds part-of-speech of each word

- Identifies noun phrases

- Identifies proper names

- Recognizes acronyms, e.g., CHF: congestive heart failure

- Word sense disambiguation, e.g., Apple CPU

48

Comments to last slide

- Natural language processing plays a role in information retrieval. However, so far, it is used only to identify parts of speech of words, named entities and phrases.

- Recognition of acronyms is also useful for information retrieval.

49

Word Sense Disambiguation

- Pine: (1) kinds of evergreen tree with needle-shaped leaves; (2) waste away through sorrow or illness

- Cone: (1) solid body which narrows to a point; (2) fruit of certain evergreen trees

- Find the combination of descriptions which have the largest number of words in common.

50

Comments to last slide

- If the query is pine cone and each of the two

words has multiple senses, the correct sense may

be identified by finding the combination of

senses whose descriptions have the largest number

of words in common. In this example, sense 1 of

pine and sense 2 of cone have the words

evergreen tree in common in their

descriptions. These common words may be added to

the query to improve retrieval effectiveness.
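The pine cone example can be sketched as follows. The stop-word list and the crude plural handling (stripping trailing "s" so that "tree" and "trees" match) are assumptions made for this illustration only.

```python
from itertools import product

# Sense descriptions taken from the example.
SENSES = {
    "pine": ["kinds of evergreen tree with needle-shaped leaves",
             "waste away through sorrow or illness"],
    "cone": ["solid body which narrows to a point",
             "fruit of certain evergreen trees"],
}

# Assumed stop words, so that function words do not count as overlap.
STOP_WORDS = {"of", "a", "to", "or", "with", "which", "through"}

def content_words(description):
    """Lowercase, drop stop words, strip trailing 's' as a crude plural rule."""
    words = description.lower().split()
    return {w.rstrip("s") for w in words if w not in STOP_WORDS}

def disambiguate(first, second):
    """Return (sense no. of first, sense no. of second, words in common)."""
    best = None
    for i, j in product(range(len(SENSES[first])), range(len(SENSES[second]))):
        common = content_words(SENSES[first][i]) & content_words(SENSES[second][j])
        if best is None or len(common) > len(best[2]):
            best = (i + 1, j + 1, common)
    return best

pine_sense, cone_sense, common = disambiguate("pine", "cone")
print(pine_sense, cone_sense, common)
```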

51

Information extraction

- Information retrieval obtains whole documents; often users want small parts of retrieved documents.

- Examples: from certain papers on heart disease, extract names of authors; from experimental sections of papers, extract tables of interest.

52

Techniques

- (1) Construct rules involving patterns or keywords to identify parts of interest

- Utilizes a grammar to extract required information

- E.g., to identify terrorist events: useful keywords are kill, bomb etc.; use a grammar to identify the subjects (terrorists) and the objects (victims)

53

Comments

- Traditionally, information extraction is achieved

by manually constructed rules for the extraction,

after examining numerous instances of what are

desired. In order to save labor cost, machine

learning techniques are introduced. Rules are

automatically constructed and based on positive

and negative examples, promising rules are kept

for future extraction activities.

54

- (2) Use machine learning techniques to construct rules

- Positive and negative examples can be given to guide the construction

- Aim: reduce manual construction of rules

55

Machine learning

- Example: Pavilion a230 Minitower

- AMD Athlon XP .. GHz

- .

- Pavilion a210n Minitower

- Intel Celeron GHz

- Rule: (var1) (var2) GHz

56

Comments

- In this example, the user supplies a few positive instances to be extracted. Then, the system automatically constructs the rule with R and GHz as landmarks. The words before the landmarks are captured by variables.

- In the Web environment, HTML or XML documents have tags and they may be used to construct rules. However, rules involving tags may be site-dependent, implying that new rules may need to be generated when there is a site change.

57

Rules involving tags

- `<b> Martino Motor Sales </b>`

- `<b> Currie Motors Lincoln Memory </b>`

- Rule: `<b> Var </b>`

- Extracted data may not be that structured

- Layout of document can be site-dependent, implying that new correct rules need to be constructed for new sites
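A tag-based rule of this kind can be sketched with a regular expression, with the bold tags as landmarks and the text between them as the captured variable. The page fragment is hypothetical, and the rule would have to be rewritten for a site that marks names up differently.

```python
import re

# Rule: <b> Var </b>, with the tags as landmarks and Var captured.
RULE = re.compile(r"<b>\s*(.*?)\s*</b>")

# Hypothetical page fragment containing the two example instances.
html = (
    "<li><b> Martino Motor Sales </b> Chicago</li>"
    "<li><b> Currie Motors Lincoln Memory </b> Forest Park</li>"
)

names = RULE.findall(html)
print(names)
```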

58

Summary

- Information retrieval

- user's point of view

- eg. phrase, case sensitive

- system point of view

- eg. Feedback query construction

- Web retrieval vs non-web retrieval

- search engine vs metasearch engine

59

Summary continued

- Natural language processing

- Eg. acronym recognition

- Information extraction

- rules manual, machine learning

- Can be site dependent