Personalized web search engine for mobile devices

1 / 27

Title:

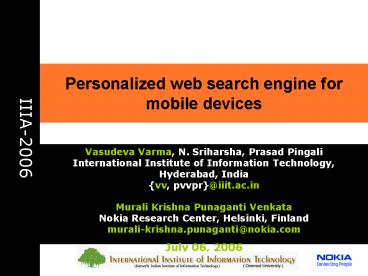

Personalized web search engine for mobile devices

Description:

Murali Krishna Punaganti Venkata. Nokia Research Center, Helsinki, Finland. murali-krishna.punaganti_at_nokia.com. July 06, 2006. IIIA-2006, Helsinki.

1

Personalized web search engine for mobile devices

- IIIA-2006

- Vasudeva Varma, N. Sriharsha, Prasad Pingali, International Institute of Information Technology, Hyderabad, India (vv, pvvpr_at_iiit.ac.in)

- Murali Krishna Punaganti Venkata, Nokia Research Center, Helsinki, Finland (murali-krishna.punaganti_at_nokia.com)

- July 06, 2006

2

Overview

- Search Engines: State of the Art

- Personalization and User Profiles

- Personalizing Search Results

- Experiments

3

Search Engines Today

- Problems

  - Search engines are impersonal

  - Do not consider user expertise level

  - Ambiguity (e.g., jaguar, apple)

  - Not tuned to the mobile environment

- Solution

  - Improving search accuracy by

    - retrieving by category (e.g., animal, fruit)

    - observing mobile user behaviour

    - matching user interests

    - taking into account user expertise level

4

Personalization

- Adaptation of a search engine to specific user needs

- Typically maintains user profiles

- Typically makes use of the context

- A user profile represents the user's interests.

5

Aspects of User Profile

- User Modeling

- User Profile Modeling

- User Profile Representation

- User Profile Creation

- Updating User Profile

6

User Modeling

- Data About Users

  - Demographic: who the user is

  - Transactional: what the user does

- Profile Model

  - Factual: stated or derived facts

  - Behavioral: conjunctive, associative, or classification rules

7

User Profile Modeling

- Explicit and Implicit

- Static and Dynamic

- Long Term and Short Term

- Expertise level

8

Sources of User Information

- User explicit information

- User Context

- User browsing histories

- User desktops/mobile applications

- Device profiles

- User search histories

9

Our Mobile Search Personalization Architecture

10

Process Distribution

- Client Side (Mobile Device)

  - The client contains the Observer Module

  - Keeps track of the user's search history (logger) and the applications opened.

  - The statistics are sent to the server

- Server Side

  - Maintains user profiles and device profiles

  - Major modules include crawler, indexer, and personalization filter.

  - An indirect proxy server is used to track the user from the server side.

  - Creates a query log for future analysis.

11

User Profile Representation

- As a probabilistic distribution of weights over terms

- As a probabilistic distribution of weights over categories.

12

User Profile Creation

- Collect information about the user's interests from logs

- Categorize each relevant document into the category hierarchy

- The resulting category weights are used to capture the user's interests
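
As a rough illustration of the profile-creation steps above, the sketch below turns categorized relevant documents into a normalized weight distribution over categories. The function name `build_profile`, the input layout (one dict of category weights per relevant document), and the sum-then-normalize rule are all assumptions made for illustration; the slides do not specify how the category weights are computed.

```python
from collections import defaultdict


def build_profile(doc_category_weights):
    """Sum per-document category weights and normalize them to sum to 1.

    `doc_category_weights` is a hypothetical input: a list with one
    {category: weight} dict per relevant document taken from the logs.
    """
    totals = defaultdict(float)
    for weights in doc_category_weights:
        for category, weight in weights.items():
            totals[category] += weight
    norm = sum(totals.values())
    if norm == 0:
        return {}  # no evidence yet, so the profile is empty
    return {category: weight / norm for category, weight in totals.items()}


# Two relevant documents, already categorized by some external categorizer.
docs = [
    {"Science": 0.8, "Computers": 0.2},
    {"Computers": 1.0},
]
profile = build_profile(docs)
print(profile)
```

Normalizing keeps the profile comparable across users with different amounts of logged activity, which matches the slide's description of a profile as a distribution of weights over categories.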

13

Updating User Profile

- The update formula (an image on the original slide) is used to update the weights in the user profile using a relevant document $d_{N+1}$ from the relevance feedback, where:

  - $W_{u,i}$ is the weight of category $C_i$ in a user profile $u$.

  - $N$ is the total number of past relevant documents.

  - $d_{N+1}$ is the current relevant document.

  - The learning rate ranges from 0 to 1.

  - $w_{i,d_{N+1}}$ is the weight of document $d_{N+1}$ in $C_i$.

  - $w_{i,d_k}$ is the weight of past relevant document $d_k$ in $C_i$.

14

Features of the Formula

- It is efficient in terms of space and complexity.

- It uses not only the weight of the current document but also the weights of past documents.

- It has a factor that controls the learning rate.
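
The formula itself is not part of this transcript, so the following is only a sketch of one update rule that has the three listed properties. It is not the authors' formula. The assumed rule blends the running mean of past document weights with the current document's weight, and stores only that mean and the document count per profile. The class name `CategoryProfile` and its attributes are hypothetical.

```python
class CategoryProfile:
    """Hypothetical profile with an assumed learning-rate-weighted update."""

    def __init__(self, learning_rate=0.5):
        if not 0.0 <= learning_rate <= 1.0:
            raise ValueError("learning_rate must be in [0, 1]")
        self.learning_rate = learning_rate
        self.n_docs = 0      # N: number of past relevant documents
        self.past_mean = {}  # running mean of past document weights per category
        self.weights = {}    # current profile weight per category

    def update(self, doc_weights):
        """Fold the category weights of one new relevant document into the profile."""
        lr = self.learning_rate
        for category in set(self.past_mean) | set(doc_weights):
            current = doc_weights.get(category, 0.0)
            mean = self.past_mean.get(category, 0.0)
            if self.n_docs == 0:
                # No history yet: the profile is just the first document.
                self.weights[category] = current
            else:
                # Assumed rule: convex blend of past mean and current weight.
                self.weights[category] = (1 - lr) * mean + lr * current
            # Update the running mean so past documents need not be stored.
            self.past_mean[category] = (mean * self.n_docs + current) / (self.n_docs + 1)
        self.n_docs += 1


profile = CategoryProfile(learning_rate=0.5)
profile.update({"Science": 1.0})
profile.update({"Science": 0.0, "Sports": 1.0})
print(profile.weights)
```

Under this assumption, a learning rate of 0 ignores the current document and a learning rate of 1 ignores the history.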

15

Personalizing Search Results

- Process Framework

- Re-ranking

- Ranking Algorithm

16

Personalization Process

17

Personalization Process

- Submit query to search engine(s).

- Categorize each result or the snippet/small summary of each result into a hierarchy

- The snippet is used to decide on the relevance of the result.

18

Re-Ranking

- Personalization Score of a result is based on similarity between each result profile and user profile.

- Re-rank results based on

  - Personalization score, and

  - Rank given by the search engine
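
The slides do not say which similarity measure is used between a result profile and the user profile. As a minimal sketch, assuming both profiles are sparse category-weight vectors and assuming cosine similarity as the measure, the personalization score could be computed like this (the function name `personalization_score` is hypothetical):

```python
import math


def personalization_score(result_profile, user_profile):
    """Cosine similarity between two sparse {category: weight} vectors.

    Cosine similarity is an assumption here; the slides only state that
    the score is based on similarity between the two profiles.
    """
    shared = set(result_profile) & set(user_profile)
    dot = sum(result_profile[c] * user_profile[c] for c in shared)
    norm_r = math.sqrt(sum(w * w for w in result_profile.values()))
    norm_u = math.sqrt(sum(w * w for w in user_profile.values()))
    if norm_r == 0 or norm_u == 0:
        return 0.0  # an empty profile carries no evidence
    return dot / (norm_r * norm_u)


user = {"Science": 0.7, "Computers": 0.3}
snippet = {"Science": 0.9, "Recreation": 0.1}
print(personalization_score(snippet, user))
```

With non-negative weights the score stays between 0 and 1, which makes it easy to combine with a normalized search-engine score.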

19

Ranking Algorithm

- $\mathrm{Rank}(R) = \alpha \cdot PS(R) + (1 - \alpha) \cdot S(R)$

- $R$ is the result

- $S(R)$ is the original ranking given by the search engine

- $PS(R)$ is the personalization score of the result $R$.

- $\alpha$ is the personalization factor, which ranges from 0 to 1.
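
The weighted combination on this slide can be sketched as follows. The sketch assumes that both the personalization score and the search-engine value have been scaled to the range 0 to 1 with higher meaning better. How the original system converts the engine's ranking into such a value is not stated, and the function name `rerank` and the tuple layout are hypothetical.

```python
def rerank(results, alpha=0.5):
    """Re-rank results by alpha * PS(R) + (1 - alpha) * S(R).

    `results` is a list of (result_id, ps, s) tuples, where `ps` is the
    personalization score and `s` is the search-engine score, both
    assumed to lie in [0, 1] with higher values being better.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    scored = [(alpha * ps + (1 - alpha) * s, result_id) for result_id, ps, s in results]
    # Python's sort is stable, so ties keep the search engine's original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [result_id for _, result_id in scored]


results = [
    ("a", 0.1, 1.0),  # top engine result, weak match to the user profile
    ("b", 0.9, 0.8),
    ("c", 0.4, 0.6),
]
print(rerank(results, alpha=0.5))
```

Setting `alpha` to 0 reproduces the search engine's order, and setting it to 1 orders results by personalization score alone.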

20

Experimental Setup

- 7 users

- Each search record contains a query and the set of relevant documents.

- 10-fold cross validation technique

- Divided each user's query set into 10 approximately equal subsets.
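
A minimal sketch of the split described above, assuming each user's search records are held in a list. The helper names `split_into_folds` and `train_test_rounds` are hypothetical, and the sketch splits the records in their given order; any shuffling used in the original experiments is not specified in the slides.

```python
def split_into_folds(items, k=10):
    """Split `items` into k contiguous folds whose sizes differ by at most one."""
    base, extra = divmod(len(items), k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)  # the first `extra` folds get one more item
        folds.append(items[start:start + size])
        start += size
    return folds


def train_test_rounds(folds):
    """Yield (train, test) pairs, holding out each fold once."""
    for i, test in enumerate(folds):
        train = [item for j, fold in enumerate(folds) if j != i for item in fold]
        yield train, test


records = [f"query-{n}" for n in range(23)]  # hypothetical search records for one user
folds = split_into_folds(records, k=10)
for train, test in train_test_rounds(folds):
    print(len(train), len(test))
```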

21

Tools Used

- An open source categorizer and the ODP structure

- Only the first level of ODP

- A total of 11 categories are used for training.

- Lucene to index all the relevant documents.

22

Process

- Training on 9 subsets and using the 10th one for testing the user profile.

- Tested in two ways

  - Personalization

    - Trained the user profile with 9 subsets and tested with the testing subset.

  - Adaptiveness

    - While training on each subset, tested with the testing subset.

23

Calculating Measures

- For each query, precision at 11 recall values is calculated.

- The average precision of all queries is calculated for each user,

  - over all the possible consecutive sets of training, and

  - over all the possible sets of testing.
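
"Precision at 11 recall values" most likely refers to the standard 11-point interpolated precision (recall levels 0.0, 0.1, ..., 1.0), although the slides do not say so explicitly. Under that assumption, a per-query computation could look like the sketch below. The function names are hypothetical.

```python
def eleven_point_precision(ranked_ids, relevant_ids):
    """Interpolated precision at recall levels 0.0, 0.1, ..., 1.0 for one query."""
    relevant = set(relevant_ids)
    if not relevant:
        return [0.0] * 11
    points = []  # (recall, precision) measured at each relevant hit
    hits = 0
    for rank, doc_id in enumerate(ranked_ids, start=1):
        if doc_id in relevant:
            hits += 1
            points.append((hits / len(relevant), hits / rank))
    levels = [i / 10 for i in range(11)]
    # Interpolation: best precision at any recall at or above the level.
    return [
        max((p for r, p in points if r >= level - 1e-12), default=0.0)
        for level in levels
    ]


def average_precision_11pt(ranked_ids, relevant_ids):
    """Mean of the 11 interpolated precision values for one query."""
    values = eleven_point_precision(ranked_ids, relevant_ids)
    return sum(values) / len(values)


ranked = ["d1", "d2", "d3", "d4"]
relevant = {"d1", "d3"}
print(eleven_point_precision(ranked, relevant))
print(average_precision_11pt(ranked, relevant))
```

Averaging this per-query value over all of a user's test queries gives one figure per user, which is the shape of the per-user averages reported in the results slides.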

24

Adaptive Results for learning rate = 0.5 and personalization factor α = 0.5

- Average precision values with increase in training size

| Size | user1 | user2 | user3 | user4 | user5 | user6 | user7 |
|------|--------|--------|--------|--------|--------|--------|--------|
| 1 | 0.6168 | 0.4318 | 0.4763 | 0.7578 | 0.7634 | 0.7471 | 0.8845 |
| 2 | 0.6597 | 0.5536 | 0.5308 | 0.7361 | 0.7931 | 0.7820 | 0.7719 |
| 3 | 0.6302 | 0.5770 | 0.5362 | 0.7890 | 0.7250 | 0.8248 | 0.7694 |
| 4 | 0.6340 | 0.5444 | 0.5143 | 0.7493 | 0.7072 | 0.8184 | 0.7502 |
| 5 | 0.6507 | 0.6307 | 0.6213 | 0.6701 | 0.6526 | 0.8321 | 0.7399 |
| 6 | 0.6309 | 0.6259 | 0.5592 | 0.7636 | 0.6812 | 0.8287 | 0.7138 |
| 7 | 0.6490 | 0.6244 | 0.5985 | 0.7922 | 0.7168 | 0.8203 | 0.7364 |
| 8 | 0.5984 | 0.6084 | 0.5536 | 0.8122 | 0.7335 | 0.8278 | 0.7482 |
| 9 | 0.6754 | 0.6327 | 0.5764 | 0.7798 | 0.8122 | 0.7978 | 0.8393 |
| 10 | 0.7064 | 0.6962 | 0.7171 | 0.8380 | 0.8397 | 0.8410 | 0.8974 |

- Lucene results (average precision for all queries)

| user1 | user2 | user3 | user4 | user5 | user6 | user7 |
|--------|--------|--------|--------|--------|--------|--------|
| 0.5332 | 0.5292 | 0.5839 | 0.8369 | 0.9211 | 0.7646 | 0.8584 |

25

Inference

- The increase in precision with increasing training size is not very consistent.

- This may be because there is randomness in selecting the training and testing subsets.

- When all the subsets are used for testing, the final precision values with personalization are greater than those without personalization (in almost all cases).

26

Results of (Single user) Experiment II

| Relevant documents (rows) / Personalization (columns) | YES | NO |
|---|---|---|
| YES | 0.877489177489 | 0.80759018759 |
| NO | 0.852943722944 | 0.891474667308 |

Inference: Precision increased when tested with the relevant set of queries and decreased when tested with the non-relevant set of queries.

27

Thank you

- Questions?