Grid-Based HPC Computing on University Campuses

1

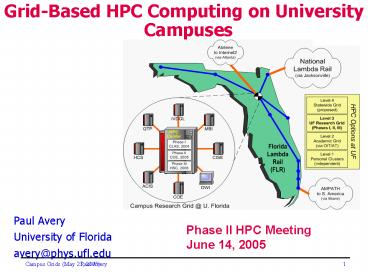

- Grid-Based HPC Computing on University Campuses

Paul Avery, University of Florida, avery@phys.ufl.edu

Phase II HPC Meeting, June 14, 2005

2

Examples Discussed Here

- Four campuses, in different states of readiness

- University of Wisconsin: GLOW

- University of Michigan: MGRID

- University of Florida: UF Research Grid

- University of Texas: TACC Grid (not discussed here)

- Not complete, by any means

- Goal: illustrate factors that go into creating campus Grid facilities

3

Grid Laboratory of Wisconsin (GLOW)

- 2003 initiative funded by NSF/UW

- Six GLOW sites

- Computational Genomics, Chemistry

- AMANDA, IceCube, Physics/Space Science

- High Energy Physics/CMS, Physics

- Materials by Design, Chemical Engineering

- Radiation Therapy, Medical Physics

- Computer Science

- Deployed in two Phases

- University funding plus $1.1M from MRI grant

- Managed locally at 6 sites

- Shared globally across all sites

- Higher priority for local jobs

http://www.cs.wisc.edu/condor/glow/
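The sharing policy on this slide (resources shared across all sites, with higher priority for local jobs) can be illustrated with a toy selection rule. This is only a sketch under stated assumptions: the `Job` type and `pick_next_job` function are hypothetical names invented for illustration, and the rule is a simplification, not the mechanism Condor or GLOW actually uses.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Job:
    job_id: str
    owner_site: str  # site whose group submitted the job (hypothetical field)


def pick_next_job(machine_site: str, queue: List[Job]) -> Optional[Job]:
    """Prefer the oldest job owned by the machine's own site.

    If no local job is waiting, fall back to the oldest job from any site,
    so idle machines are still shared globally.
    """
    for job in queue:
        if job.owner_site == machine_site:
            return job
    return queue[0] if queue else None


# Queue ordered oldest first; site names are taken from the GLOW site list.
queue = [Job("a", "Physics"), Job("b", "ChemE"), Job("c", "CS")]
local_choice = pick_next_job("ChemE", queue)     # a ChemE job is waiting
shared_choice = pick_next_job("MedPhys", queue)  # no MedPhys job is waiting
print(local_choice.job_id, shared_choice.job_id)
```

A machine owned by ChemE picks the ChemE job even though an older Physics job is queued, while a MedPhys machine with no local work runs the oldest job from another site.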

4

GLOW Deployment

- GLOW Phase-I and II are commissioned

- CPU

- 66 nodes each at ChemE, CS, LMCG, MedPhys

- 60 nodes at Physics

- 30 nodes at IceCube

- 50 extra nodes at CS (ATLAS)

- Total CPU: 800

- Storage

- Head nodes at all sites

- 45 TB each at CS and Physics

- Total storage: 100 TB

- GLOW resources used at the 100% level

- Key is having multiple user groups
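The node counts listed on this slide can be tallied as a quick consistency check. The sketch below assumes two CPUs per node, which the slide does not state; under that assumption the tally lands close to the quoted total of 800 CPUs.

```python
# Node counts per site, as listed on the slide.
nodes = {
    "ChemE": 66,
    "CS": 66,
    "LMCG": 66,
    "MedPhys": 66,
    "Physics": 60,
    "IceCube": 30,
    "CS (ATLAS)": 50,
}

CPUS_PER_NODE = 2  # assumption: dual-CPU nodes (not stated on the slide)

total_nodes = sum(nodes.values())
total_cpus = total_nodes * CPUS_PER_NODE

# The two large storage pools named on the slide (CS and Physics, 45 TB each).
large_pool_tb = 2 * 45

print(f"nodes={total_nodes}, cpus={total_cpus}, large storage pools={large_pool_tb} TB")
```

The two 45 TB pools account for 90 TB of the quoted 100 TB; the slide does not say where the remainder sits.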

5

Resource Sharing in GLOW

- Six GLOW sites

- Equal priority → 17% average

- Chemical Engineering took 33%

- Others scavenge idle resources

- Yet, they got 39%

Efficient users can realize more than they put in
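Reading the figures on this slide as percentages of total usage, the equal-priority share follows directly from the number of sites. The short calculation below is only an illustration of that arithmetic; the variable names are made up for this sketch.

```python
NUM_SITES = 6            # six GLOW sites, from the slide
CHEME_USAGE_PCT = 33.0   # Chemical Engineering's usage figure, from the slide

# With equal priority, each site's nominal share is 100% divided by six.
equal_share_pct = 100.0 / NUM_SITES

# How many times its nominal share Chemical Engineering actually used.
cheme_ratio = CHEME_USAGE_PCT / equal_share_pct

print(f"equal share = {equal_share_pct:.1f}%, ChemE used {cheme_ratio:.2f}x its share")
```

An equal split gives each site about 17%, so a group using 33% is running at roughly twice its nominal share, which is the slide's point about efficient users realizing more than they put in.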

6

Adding New GLOW Members

- GLOW team membership

- System support person joins GLOW-tech

- PI joins GLOW-exec

- Proposed minimum involvement: 50 CPUs?

- Sponsored by existing GLOW members

- ATLAS physics group proposed by CMS physics team

- Condensed matter group proposed by CS

- Expressions of interest from other groups

- Agreements to adhere to GLOW policies

7

Simulations on Condor/GLOW (1)

All of INFN

8

Simulations on Condor/GLOW (2)

9.5M events generated in 2004

9

MGRID at Michigan

- MGRID

- Michigan Grid Research and Infrastructure Development

- Develop, deploy, and sustain an institutional grid at Michigan

- Group started in 2002 with initial U Michigan funding

- Many groups across the University participate

- Compute/data/network-intensive research grants

- ATLAS, NPACI, NEESGrid, Visible Human Project, NFSv4, NMI

- 1300 CPUs in main clusters

http://www.mgrid.umich.edu

10

MGRID Center

- Central core of technical staff (3 FTEs, new hires)

- Faculty and staff from participating units

- Exec. committee from participating units and provost office

- Collaborative grid research and development with technical staff from participating units

11

MGrid Research Project Partners

- College of LSA

- www.lsa.umich.edu

- Center for Information Technology Integration

- www.citi.umich.edu

- Michigan Center for BioInformatics

- www.ctaalliance.org

- Visible Human Project

- vhp.med.umich.edu

- Center for Advanced Computing

- cac.engin.umich.edu

- Mental Health Research Institute

- www.med.umich.edu/mhri

- ITCom

- www.itcom.itd.umich.edu

- School of Information

- si.umich.edu

12

MGRID Goals

- For participating units

- Knowledge, support and framework for deploying Grid technologies

- Exploitation of Grid resources both on campus and beyond

- A context for the University to invest in computing resources

- Provide test bench for existing, emerging Grid technologies

- Make significant contributions to general grid problems

- Sharing resources among multiple VOs

- Network monitoring and QoS issues for grids

- Integration of middleware with domain specific applications

- Grid filesystems

13

MGRID Authorization Groups

14

MGRID Job Portal

15

MGRID Job Status

1300 CPUs

16

Major MGRID Users (Snapshot)

17

Grid3: A National Grid Infrastructure

- 32 sites, 4000 CPUs: universities + 4 national labs

- UF is a founding member

- Sites in US, Korea, Brazil, Taiwan

- Running since October 2003

www.ivdgl.org/grid3

18

Grid3 Applications

www.ivdgl.org/grid3/applications

19

Grid3 Shared Use Over 6 months

Usage CPUs

Sep 10, 2004

20

Grid3 → Open Science Grid

- Iteratively extend Grid3 to Open Science Grid

- Grid3 → OSG-0 → OSG-1 → OSG-2 →

- Shared resources, benefiting broad set of disciplines

- Grid middleware based on Virtual Data Toolkit (VDT)

- Further develop OSG

- Partnerships with other sciences, universities

- Incorporation of advanced networking

- Focus on general services, operations, end-to-end performance

- Aim for July 20, 2005 deployment

- Major press announcement

21

http://www.opensciencegrid.org
