Testing and Management of Distributed Systems PowerPoint PPT Presentation

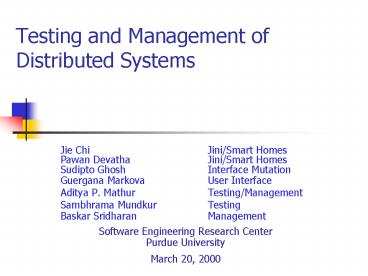

Title: Testing and Management of Distributed Systems

1

Testing and Management of Distributed Systems

- Jie Chi: Jini/Smart Homes
- Pawan Devatha: Jini/Smart Homes
- Sudipto Ghosh: Interface Mutation
- Guergana Markova: User Interface
- Aditya P. Mathur: Testing/Management
- Sambhrama Mundkur: Testing
- Baskar Sridharan: Management

Software Engineering Research Center
Purdue University
March 20, 2000

Structure of a Brokered Distributed Application

Figure: Components exchange requests and data through ORBs; communication takes place over a network or within a process.
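The figure can be made concrete with a minimal in-process sketch. It assumes a toy `Broker` class (hypothetical; it is not a CORBA ORB and uses none of its API): components register under a name, and a client reaches a component only through the broker, passing an operation name and request data.

```python
from typing import Any, Callable, Dict

Handler = Callable[[str, Any], Any]  # (operation, request data) -> response

class Broker:
    """Toy request broker (hypothetical): routes requests to registered components."""

    def __init__(self) -> None:
        self._components: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        self._components[name] = handler

    def request(self, target: str, operation: str, data: Any) -> Any:
        # The client knows only the target's name; the broker locates the component.
        if target not in self._components:
            raise LookupError(f"no component registered as {target!r}")
        return self._components[target](operation, data)

def account_component(operation: str, data: Any) -> Any:
    # A hypothetical component supporting a single operation.
    if operation == "deposit":
        return data["balance"] + data["amount"]
    raise ValueError(f"unsupported operation {operation!r}")

broker = Broker()
broker.register("bank", account_component)
print(broker.request("bank", "deposit", {"balance": 100, "amount": 25}))  # 125
```

Keeping the client unaware of where the component lives is what lets the same request travel over a network or stay within a process.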


Objectives

- Testing
  - Assess the adequacy of a test suite prior to deployment.
  - Test and debug distributed objects while they are deployed.
  - Perform fault injection to assess how well a collection of objects tolerates failures in components.
- Monitoring and control
  - Monitor distributed objects.
  - Provide event-based control.
  - Gather system performance data.

Adequacy Assessment

- Problem
  - Given a test suite T and a collection of components, assess how adequate T is.
- Proposed solution
  - Use interface mutation.
- Existing solutions
  - Measure code coverage.

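What is Interface Mutation?

Figure: Test suite T contains Request-A. In the original program, the client sends Request-A to the server through the interface and receives Response-A. In the mutant, the client sends the same Request-A through a mutated interface and receives Response-B.

If Response-A is identical to Response-B for every request in T, then T cannot distinguish the mutant from the original program; T is therefore inadequate and needs to be improved.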
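The adequacy check above can be sketched as follows, assuming each program is modelled as a Python function from request arguments to a response. The names `killed`, `mutation_score`, `server`, and `swap_mutant` are hypothetical; the score (the fraction of mutants that some test distinguishes) is the usual way mutation adequacy is summarized.

```python
from typing import Callable, Sequence, Tuple

Program = Callable[..., object]
Request = Tuple[object, ...]

def killed(original: Program, mutant: Program, tests: Sequence[Request]) -> bool:
    """A mutant is distinguished ("killed") if some request gets a different response."""
    return any(original(*req) != mutant(*req) for req in tests)

def mutation_score(original: Program, mutants: Sequence[Program],
                   tests: Sequence[Request]) -> float:
    """Fraction of mutants distinguished by the test suite."""
    if not mutants:
        return 1.0
    return sum(killed(original, m, tests) for m in mutants) / len(mutants)

def server(a: int, b: int) -> int:
    return a - b

def swap_mutant(a: int, b: int) -> int:
    # Interface mutant: the two parameters are swapped at the interface.
    return server(b, a)

T = [(3, 3)]
print(mutation_score(server, [swap_mutant], T))             # 0.0: T is inadequate
print(mutation_score(server, [swap_mutant], T + [(5, 2)]))  # 1.0
```

The request (3, 3) gives the same response to both programs, so only the added request (5, 2) forces the swapped parameters to show.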


Mutant Creation

- Mutate the interface
  - One change at a time.
- Use simple changes
  - Swap parameters, increment or decrement parameters, nullify a string, zero a parameter, nullify a pointer, etc.
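A sketch of generating one-change mutants from the arguments that cross an interface. The operator labels, the `interface_mutants` function, and the Python stand-ins for C-level changes (the empty string for a nullified string, `None` for a nullified pointer) are assumptions for illustration.

```python
from typing import Any, List, Tuple

Args = Tuple[Any, ...]

def _replace(args: Args, i: int, new: Any) -> Args:
    """Copy of args with position i replaced by new."""
    return args[:i] + (new,) + args[i + 1:]

def interface_mutants(args: Args) -> List[Tuple[str, Args]]:
    """Return (operator, mutated_args) pairs; each applies exactly one change."""
    mutants: List[Tuple[str, Args]] = []
    for i, value in enumerate(args):
        if isinstance(value, bool):
            continue  # bool is a subclass of int in Python; not mutated here
        if isinstance(value, int):
            mutants.append((f"increment p{i}", _replace(args, i, value + 1)))
            mutants.append((f"decrement p{i}", _replace(args, i, value - 1)))
            if value != 0:
                mutants.append((f"zero p{i}", _replace(args, i, 0)))
        elif isinstance(value, str):
            # "Nullify a string", modelled here as the empty string.
            mutants.append((f"nullify string p{i}", _replace(args, i, "")))
        elif value is not None:
            # "Nullify a pointer", modelled here as replacing a reference with None.
            mutants.append((f"nullify p{i}", _replace(args, i, None)))
    # Parameter swap: only between distinct values of the same type.
    for i in range(len(args)):
        for j in range(i + 1, len(args)):
            if type(args[i]) is type(args[j]) and args[i] != args[j]:
                swapped = list(args)
                swapped[i], swapped[j] = swapped[j], swapped[i]
                mutants.append((f"swap p{i},p{j}", tuple(swapped)))
    return mutants

for op, mutated in interface_mutants((5, 2, "acct")):
    print(op, mutated)
```

Each mutant differs from the original call in exactly one place, so a test that distinguishes it points at one specific use of the interface.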


Why Mutate?

- Why mutate?
  - Doing so forces a tester to develop a test t that distinguishes a mutant from the original program.
- So what?
  - The new test t is likely to exercise the original program in new ways, thereby increasing the chance of revealing hidden errors.
- Any experimental evidence? Yes!

Experimental Methodology

- Select components
  - Number of components: 3
  - Number of interfaces: 6
  - Number of methods: 82
  - Number of mutants: 220
- Seed errors into the components one at a time
  - Errors related to portability, data, algorithms, and language-specific features

Errors Seeded

| # | Error seeded | Knuth category |
|---|---|---|
| 1 | Wrong configuration variable used | Portability |
| 2 | Insufficient malloc (not enough memory allocated) | Data |
| 3 | No malloc; null pointer used | Data |
| 4 | Forgetting to free memory | Data |
| 5 | Wrong offset in fseek(), fgets() | Data |
| 6 | Forgetting null string termination | Data |
| 7 | Forgetting strdup | Data |
| 8 | Wrong variable used in search | Blunder |
| 9 | Counts not incremented | Forgotten |
| 10 | Wrong exception thrown | Blunder |
| 11 | Wrong activation mode set | Blunder |
| 12 | Object created earlier in the code not removed during exception handling | Algorithm |
| 13 | Similar to 12: counter not decremented during exception handling even though the object was removed | Algorithm |
| 14 | Object counter not incremented even though the object is created | Algorithm |
| 15 | Error in loop termination condition | Algorithm |

Error Revealing Capability

Figure: Bar chart comparing the error-revealing capability (y-axis, 0 to 100) of mutation-adequate tests and of statement/decision-coverage-adequate tests for Comp 1, Comp 2, Comp 3, and in total.

Code Coverage

Figure: Bar chart of the block coverage and decision coverage (y-axis, 0 to 100) achieved by mutation-adequate tests for Comp 1, Comp 2, Comp 3, and in total.

Tests Required

Figure: Bar chart of the number of tests required (y-axis, 0 to 35) for mutation adequacy and for statement/decision coverage, for Comp 1, Comp 2, and Comp 3.

Results

- Interface mutation leads to fewer tests that reveal almost as many errors as statement and decision coverage.
- Interface mutation is a scalable alternative to code coverage.

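The Power of Interface Mutation

- Reveals
  - programming errors in components;
  - errors in the use of component interfaces;
  - certain types of deadlocks.

Figure: A client sends a request to a server, and the server issues a callback to the client before replying. If the client cannot service the callback while it waits for the reply (for example, because it has only one thread), client and server wait on each other and deadlock.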
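The callback scenario can be simulated in a single process. The sketch assumes a hypothetical `Client` whose one request-processing thread is modelled by a non-reentrant lock, and a hypothetical `Server` that calls back before replying. The lock timeout plays the role of a test-harness watchdog that turns a hang into an observable "deadlock" result. It is a simulation, not CORBA code.

```python
import threading

class Client:
    """Toy client; single_threaded models a client ORB with one thread."""

    def __init__(self, single_threaded: bool) -> None:
        self.single_threaded = single_threaded
        # Models the client's only request-processing thread.
        self._thread = threading.Lock()

    def call(self, server: "Server", timeout: float = 0.2) -> str:
        if self.single_threaded:
            # The only thread stays busy until the server replies.
            with self._thread:
                return server.handle(self, timeout)
        return server.handle(self, timeout)

    def on_callback(self, timeout: float) -> str:
        # Servicing the callback needs the client's thread.
        if not self._thread.acquire(timeout=timeout):
            return "deadlock"  # without the timeout this would block forever
        try:
            return "ok"
        finally:
            self._thread.release()

class Server:
    def handle(self, client: Client, timeout: float) -> str:
        # The server calls back into the client before it replies.
        return client.on_callback(timeout)

print(Client(single_threaded=True).call(Server()))   # deadlock
print(Client(single_threaded=False).call(Server()))  # ok
```

A test that drives the request through the callback path is what exposes the hang; with a multi-threaded client the same test passes.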


What Remains to Be Done?

- Testing for certain deadlocks and race conditions, specifically those that occur
  - in components that use different threading models in CORBA;
  - inside a component.
- Testing for system scalability and performance.

Architecture of TDS 2.0


Design Goals

- Non-intrusive, with low overhead
- Ability to turn monitoring on and off
- Selective monitoring (filtering)
- Runtime performance measures at low cost
- Exploit the network topology to improve performance
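The on/off and filtering goals can be illustrated with a small sketch, assuming a hypothetical `Monitor` that identifies entities by hierarchical name tuples and filters by name prefix. The example names are illustrative.

```python
from typing import Dict, Set, Tuple

Name = Tuple[str, ...]  # e.g. (zone, server, object, interface, method)

class Monitor:
    """Hypothetical monitor that can be switched on/off and filtered by name prefix."""

    def __init__(self) -> None:
        self.enabled = False
        self.filters: Set[Name] = set()  # empty set: monitor everything
        self.hits: Dict[Name, int] = {}

    def _selected(self, name: Name) -> bool:
        return not self.filters or any(name[:len(f)] == f for f in self.filters)

    def record(self, name: Name) -> None:
        # Early exit keeps the per-request cost small when monitoring is off
        # or when the request falls outside the selected names.
        if not self.enabled or not self._selected(name):
            return
        self.hits[name] = self.hits.get(name, 0) + 1

m = Monitor()
deposit = ("ZoneA", "bank_server", "Green_Bank", "Bank", "deposit")
m.record(deposit)                                          # ignored: monitoring is off
m.enabled = True
m.filters.add(("ZoneA", "bank_server"))
m.record(deposit)                                          # counted
m.record(("ZoneB", "other_server", "Obj", "Iface", "op"))  # filtered out
print(m.hits)
```

Filtering by prefix lets a single entry select a whole server, or narrow down to one method.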


Service Region

Figure: A service region made up of hosts on which servers (S) run.

Zone-based Partitioning

Figure: Zone A, containing servers (S), under a zonal manager; the diagram also includes an element labelled FT.

Naming Hierarchy

- Project
  - Zone level: Zone A, Zone B, Zone C
    - Server level: Server1, Server2
      - Object level: Object1, Object2
        - Interface level: Interface1, Interface2
          - Method level: Method1, Method2
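Because each method is identified by a path through the hierarchy, per-method hit counts can be rolled up to every level above it. A sketch, assuming names are modelled as tuples from zone to method; `roll_up` is a hypothetical helper, the example names follow the hierarchy above, and the counts are made up for illustration.

```python
from collections import Counter
from typing import Dict, Tuple

# Path from the zone down to the method, e.g. (zone, server, object, interface, method).
Path = Tuple[str, ...]

def roll_up(method_hits: Dict[Path, int]) -> Counter:
    """Aggregate method-level hit counts to every ancestor level of the hierarchy."""
    totals: Counter = Counter()
    for path, hits in method_hits.items():
        for depth in range(1, len(path) + 1):
            totals[path[:depth]] += hits
    return totals

hits = {
    ("ZoneA", "Server1", "Object1", "Interface1", "Method1"): 4,
    ("ZoneA", "Server1", "Object1", "Interface1", "Method2"): 1,
    ("ZoneA", "Server2", "Object2", "Interface2", "Method1"): 3,
}
totals = roll_up(hits)
print(totals[("ZoneA",)])            # 8
print(totals[("ZoneA", "Server1")])  # 5
```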


Architecture of TDS 2.0 (continued)

Legend: MC = Master Controller; LLI = Local Listener Interface; Ar = Architecture Extractor.

Server - Performance Statistics

- Up-time

- Processing Time

- Total number of hits

- Number of transient objects

- Number of persistent objects

- State


Object - Performance Statistics

- Name

- Up-time

- Processing time

- Total number of hits

- Number of interfaces

- State


Interface - Performance Statistics

- Name

- Up-time

- Processing Time

- Total number of hits

- Number of methods

- State


Method - Performance Statistics

- Name

- Total number of hits

- Average, Maximum and Minimum Latency

- State
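The method-level statistics can be kept at constant cost per request by storing running totals instead of every latency. A sketch, assuming a hypothetical `MethodStats` record with latencies in milliseconds.

```python
import math
from dataclasses import dataclass

@dataclass
class MethodStats:
    """Per-method statistics (hypothetical field names)."""
    name: str
    hits: int = 0
    total_latency: float = 0.0
    min_latency: float = math.inf
    max_latency: float = 0.0  # latencies are assumed non-negative

    def record(self, latency: float) -> None:
        # Constant-time update per request: no latency history is stored.
        self.hits += 1
        self.total_latency += latency
        self.min_latency = min(self.min_latency, latency)
        self.max_latency = max(self.max_latency, latency)

    @property
    def average_latency(self) -> float:
        return self.total_latency / self.hits if self.hits else 0.0

s = MethodStats("closeAccount")
for ms in (12.0, 8.0, 10.0):
    s.record(ms)
print(s.hits, s.average_latency, s.min_latency, s.max_latency)  # 3 10.0 8.0 12.0
```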


Coverage Details

- Graphical view of the ratio of the number of requests received by each entity to the total number of requests (at each level).
- Graphical view of utilization (at each level).
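One plausible way to define the two plotted quantities is shown below. It assumes h(e) is the hit count of entity e, L(e) is the set of entities at the same level as e, P(e) is the processing time of e, and T_up(e) is its up-time; these definitions are assumptions, since the slide gives no formulas.

$$
r(e) = \frac{h(e)}{\sum_{e' \in L(e)} h(e')}, \qquad
U(e) = \frac{P(e)}{T_{\mathrm{up}}(e)}
$$

For example, a server that received 5 of the 8 requests seen at the server level has r = 5/8 = 0.625.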


Event-based Control

- Events
  - Timed
  - Request arrival
  - Any
- Actions
  - ALLOW: allow all incoming requests
  - DENY: deny all incoming requests
  - ALARM: raise an alarm
  - MAIL: send mail
  - REDIRECT: redirect requests

Event-based Control (continued)

- Action object
  - Server
  - Object
  - Interface
  - Method
- Control command
  - Command(event, action, action_object)
  - Control commands can be applied at all levels.
- Note: for request-arrival events, the control action is executed after the request arrives but before it is processed.
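The ordering in the note can be sketched as a dispatcher that evaluates control commands when a request arrives and only then processes it. `Dispatcher`, the rule representation (an event predicate, an action, and an action object given as a hierarchical name), and the example names are assumptions for illustration; only ALLOW and DENY change behaviour here.

```python
from enum import Enum
from typing import Callable, List, Tuple

class Action(Enum):
    ALLOW = "ALLOW"
    DENY = "DENY"
    ALARM = "ALARM"
    MAIL = "MAIL"
    REDIRECT = "REDIRECT"

Name = Tuple[str, ...]             # (zone, host, server, object, interface, method)
Event = Callable[[Name], bool]     # predicate on the arriving request's target
Rule = Tuple[Event, Action, Name]  # Command(event, action, action_object)

class Dispatcher:
    """Hypothetical dispatcher: control runs after arrival, before processing."""

    def __init__(self, rules: List[Rule], process: Callable[[Name], str]) -> None:
        self.rules = rules
        self.process = process
        self.denied: List[Name] = []
        self.trace: List[str] = []

    def _apply(self, action: Action, action_object: Name) -> None:
        if action is Action.DENY:
            self.denied.append(action_object)
        elif action is Action.ALLOW:
            self.denied = [d for d in self.denied if d != action_object]
        else:
            # ALARM, MAIL and REDIRECT are only recorded in this sketch.
            self.trace.append(f"{action.value} on {action_object}")

    def on_arrival(self, target: Name) -> str:
        self.trace.append(f"arrived {target[-1]}")
        for event, action, action_object in self.rules:  # 1. control actions
            if event(target):
                self._apply(action, action_object)
        # A DENY on a server, object or interface covers everything beneath it.
        if any(target[:len(d)] == d for d in self.denied):
            return "DENIED"
        self.trace.append(f"processing {target[-1]}")    # 2. processing
        return self.process(target)

server = ("ZoneA", "ector", "bank_server")
rules: List[Rule] = [(lambda t: t[-1] == "closeAccount", Action.DENY, server)]
disp = Dispatcher(rules, lambda t: "ok")
print(disp.on_arrival(server + ("Green_Bank", "Bank", "deposit")))       # ok
print(disp.on_arrival(server + ("Green_Bank", "Bank", "closeAccount")))  # DENIED
print(disp.on_arrival(server + ("Green_Bank", "Bank", "deposit")))       # DENIED
```

Once DENY is applied to the server, later requests to any method beneath it are refused, matching "deny all incoming requests".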


Sample Commands

- Timed commands
  - (time 1545, DENY, (ZoneA, ector, bank_server))
  - (time 1800, ALLOW, (ZoneA, ector, bank_server))
- Semantics
  - The DENY control action starts at 1545 (local time at ector).
  - Any request that arrives after the control action completes successfully is denied.
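A sketch of how a timed schedule determines which action is in force at a given local time. It assumes times are integers in HHMM form within a single day, that ALLOW holds before any command, that the 1800 ALLOW lifts the earlier DENY, and that a command takes effect exactly at its time (the slide's "after successful completion" is not modelled). `state_at` is a hypothetical helper.

```python
from typing import List, Tuple

Name = Tuple[str, ...]
TimedCommand = Tuple[int, str, Name]  # (local time as HHMM, action, action_object)

def state_at(commands: List[TimedCommand], obj: Name, now: int) -> str:
    """Action in force for obj at local time `now` (HHMM); ALLOW before any command."""
    state = "ALLOW"
    for at, action, action_object in sorted(commands, key=lambda c: c[0]):
        # A command on an ancestor (e.g. the server) also covers obj.
        if at <= now and obj[:len(action_object)] == action_object:
            state = action
    return state

bank = ("ZoneA", "ector", "bank_server")
cmds = [(1545, "DENY", bank), (1800, "ALLOW", bank)]
print(state_at(cmds, bank, 1200))  # ALLOW
print(state_at(cmds, bank, 1600))  # DENY
print(state_at(cmds, bank, 1830))  # ALLOW
```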


Sample Event-Action Pairs

- Control command
  - client_id: (ZoneA, lisa, TxManager_Server)
  - server_id: (ZoneA, ector, bank_server, Green_Bank)
  - event: (client_id, server_id, IDL/Bank/1.0, closeAccount())
  - action: DENY
  - action_object: server_id
  - Command(event, action, action_object)
- Semantics: when a request for IDL/Bank/closeAccount()/1.0 arrives from client_id, the DENY control action is applied to server_id before the request is sent to server_id.
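The event-action pair can be written down as data. A sketch, assuming an event matches a request only when all four fields are equal; `Event`, `Command`, `intercept`, and the `deposit` operation used in the non-matching case are hypothetical.

```python
from typing import NamedTuple, Optional, Tuple

Name = Tuple[str, ...]

class Event(NamedTuple):
    client_id: Name
    server_id: Name
    interface: str  # interface identifier as written on the slide
    operation: str

class Command(NamedTuple):
    event: Event
    action: str
    action_object: Name

def intercept(request: Event, command: Command) -> Optional[str]:
    """Return the action to apply to action_object before forwarding, if the event matches."""
    if request == command.event:
        return f"{command.action} -> {'/'.join(command.action_object)}"
    return None

client_id = ("ZoneA", "lisa", "TxManager_Server")
server_id = ("ZoneA", "ector", "bank_server", "Green_Bank")
cmd = Command(Event(client_id, server_id, "IDL/Bank/1.0", "closeAccount"), "DENY", server_id)

print(intercept(Event(client_id, server_id, "IDL/Bank/1.0", "closeAccount"), cmd))
print(intercept(Event(client_id, server_id, "IDL/Bank/1.0", "deposit"), cmd))  # None
```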


Dynamic Testing/Debugging

Figure: A client, Server-1, Server-2, and the SP.

1. The client sends a request R.
2. The server responds.
3. The client discovers that the response is incorrect.
4. The client reports the problem to the SP.
5. The SP becomes a client.
6. The SP issues R with a red token and monitors the flow of R.
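Step 6 can be sketched by letting a request carry a "red" flag that every server propagates downstream and that switches on tracing, so the SP can follow the message chain. `Node`, its `compute` functions, and the trace format are hypothetical; a real system would carry the token in request metadata rather than as an explicit argument.

```python
from typing import Callable, List, Optional

class Node:
    """Hypothetical server that may forward a request to a downstream server."""

    def __init__(self, name: str, downstream: Optional["Node"] = None,
                 compute: Callable[[int], int] = lambda x: x) -> None:
        self.name = name
        self.downstream = downstream
        self.compute = compute

    def handle(self, value: int, red: bool, trace: List[str]) -> int:
        if red:
            trace.append(f"{self.name} received {value}")  # only red requests are traced
        result = self.compute(value)
        if self.downstream is not None:
            # The red token travels with the request to the next server.
            result = self.downstream.handle(result, red, trace)
        if red:
            trace.append(f"{self.name} returned {result}")
        return result

server2 = Node("Server-2", compute=lambda x: x * 2)
server1 = Node("Server-1", downstream=server2, compute=lambda x: x + 1)

trace: List[str] = []
print(server1.handle(3, red=True, trace=trace))  # 8
print(trace)
```

Ordinary requests (red=False) leave the trace empty, so only the SP's replayed request pays the tracing cost.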


Issues with Dynamic Testing

- Following the message chain.

- Isolating the set of faulty components.

- Selectively deflecting messages to instrumented components under debugging.

Current Work

- Addition of dynamic testing features.

- Addition of monitoring and control features.

- Management of Smart Homes via specialized TDS.

- HomeOwner version of TDS for palmtop computers.