HMMER PFAM algorithm and parallel schemes

1 / 6

Title: HIMMER PFAM algorithm and parallel schemes

1

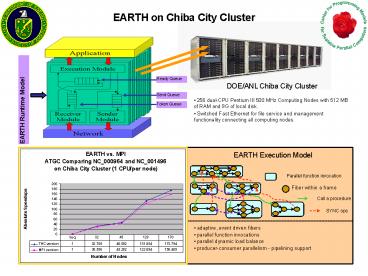

EARTH on Chiba City Cluster

- DOE/ANL Chiba City Cluster

- 256 dual-CPU Pentium III 500 MHz computing nodes, each with 512 MB of RAM and 9 GB of local disk

- Switched Fast Ethernet for file service and management functionality, connecting all computing nodes

[Figure: EARTH Runtime Model, showing the Ready Queue, Token Queue, and Send Queue]

EARTH Execution Model

- adaptive, event-driven fibers

- parallel function invocations

- parallel dynamic load balancing

- producer-consumer parallelism (pipelining) support
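The event-driven fiber model listed above can be pictured with a minimal single-threaded sketch: a fiber is guarded by a synchronization counter, each arriving event decrements it, and when the counter reaches zero the fiber is placed on a ready queue for the scheduler. The counter-based activation rule and all names (`sync_slot`, `sync_signal`, `run_ready`, `count_fiber`) are assumptions made for illustration, not the EARTH runtime's actual interface.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical fiber: a function pointer plus its argument. */
typedef void (*fiber_fn)(void *arg);

typedef struct {
    fiber_fn fn;
    void *arg;
} fiber_t;

/* Hypothetical sync slot: its fiber is enabled once `count` events have arrived. */
typedef struct {
    int count;     /* events still outstanding */
    int reset;     /* value restored after firing, so the slot can be reused */
    fiber_t fiber; /* fiber enabled when count reaches zero */
} sync_slot;

#define READY_CAP 64

/* Fixed-size circular ready queue of enabled fibers. */
typedef struct {
    fiber_t items[READY_CAP];
    size_t head, tail, len;
} ready_queue;

static void rq_push(ready_queue *q, fiber_t f) {
    assert(q->len < READY_CAP);   /* sketch: no overflow handling */
    q->items[q->tail] = f;
    q->tail = (q->tail + 1) % READY_CAP;
    q->len++;
}

static int rq_pop(ready_queue *q, fiber_t *out) {
    if (q->len == 0) return 0;
    *out = q->items[q->head];
    q->head = (q->head + 1) % READY_CAP;
    q->len--;
    return 1;
}

/* Deliver one event (e.g. an arriving datum) to a slot; the last one enables the fiber. */
void sync_signal(sync_slot *s, ready_queue *q) {
    assert(s->count > 0);
    if (--s->count == 0) {
        rq_push(q, s->fiber);
        s->count = s->reset;
    }
}

/* Scheduler loop: run enabled fibers until the ready queue is empty; returns how many ran. */
int run_ready(ready_queue *q) {
    fiber_t f;
    int ran = 0;
    while (rq_pop(q, &f)) {
        f.fn(f.arg);
        ran++;
    }
    return ran;
}

/* Example fiber: increments the int it is given. */
void count_fiber(void *arg) { (*(int *)arg)++; }
```

For example, a slot created with a count of 2 enables its fiber only after the second `sync_signal` call; the counter is then reset so the same slot can fire again.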

Comparison between RTS 2.0 and RTS 2.5

Experimental Results (on Chiba City)

Different Layouts of RTS (on a Single-CPU or Dual-CPU Node)

RTS 2.5:

- Lock free: only the last token in the token queue (TQ) needs a lock.

- Stable.

- Concise message formats.

- Fewer system calls.

RTS 2.0:

- Locks on shared resources.

- Unstable on certain benchmarks.

- Redundancies in network message formats.

- Excessive system calls.

Benchmarks:

- Fibonacci: fine-grain, dynamic load balancing.

- Tomcatv: data parallel, computation intensive, global reduction and barrier.
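Tomcatv's "global reduction and barrier" pattern can be sketched with POSIX threads: each worker reduces its own slice of an array, publishes a partial result, waits at a barrier, and then every worker combines the same partials into the same global value. This is a shared-memory illustration under our own assumptions (the names `reduce_and_barrier`, `worker_args`, and `NWORKERS` are hypothetical), not the EARTH runtime's distributed reduction. It relies on `pthread_barrier_t`, an optional POSIX feature that Linux provides but macOS does not.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define NWORKERS 4

typedef struct {
    const double *data;         /* whole input array (shared, read-only) */
    size_t n;                   /* total number of elements */
    int id;                     /* worker index in [0, NWORKERS) */
    double *partials;           /* one slot per worker; each writes only its own */
    pthread_barrier_t *barrier;
    double result;              /* global sum as seen by this worker */
} worker_args;

static void *worker(void *p) {
    worker_args *a = p;
    /* Block decomposition: worker id owns the index range [lo, hi). */
    size_t lo = a->n * (size_t)a->id / NWORKERS;
    size_t hi = a->n * (size_t)(a->id + 1) / NWORKERS;
    double local = 0.0;
    for (size_t i = lo; i < hi; i++) local += a->data[i];
    a->partials[a->id] = local;

    /* Barrier: no worker reads the partials until all of them have been written. */
    pthread_barrier_wait(a->barrier);

    /* Every worker combines the partials in the same order, so all agree exactly. */
    double sum = 0.0;
    for (int w = 0; w < NWORKERS; w++) sum += a->partials[w];
    a->result = sum;
    return NULL;
}

/* Returns the global sum of data[0..n); error checks omitted for brevity. */
double reduce_and_barrier(const double *data, size_t n) {
    pthread_t tids[NWORKERS];
    worker_args args[NWORKERS];
    double partials[NWORKERS];
    pthread_barrier_t barrier;
    pthread_barrier_init(&barrier, NULL, NWORKERS);

    for (int w = 0; w < NWORKERS; w++) {
        args[w] = (worker_args){data, n, w, partials, &barrier, 0.0};
        pthread_create(&tids[w], NULL, worker, &args[w]);
    }
    for (int w = 0; w < NWORKERS; w++) pthread_join(tids[w], NULL);
    pthread_barrier_destroy(&barrier);

    /* Sanity check: every worker saw the same reduced value after the barrier. */
    for (int w = 1; w < NWORKERS; w++) assert(args[w].result == args[0].result);
    return args[0].result;
}
```

Having every worker hold the reduced value after the barrier mirrors an all-reduce, which is what an iterative solver needs when each worker tests the same convergence criterion.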

Performance of RTS 2.5 and RTS 2.0 on various platforms

[Figure: Relative speedup of RTS 2.0 and RTS 2.5 on Earthquake, Comet, and Chiba City]
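The charts report relative speedup. Assuming the usual definition, relative speedup compares a program against itself on one node, S(p) = T(1)/T(p), and parallel efficiency is E(p) = S(p)/p. The helper names below are hypothetical, and any timings used with them are illustrative rather than measurements from these slides.

```c
#include <assert.h>

/* Relative speedup S(p) = T(1) / T(p): run time of the same parallel program
   on one node divided by its run time on p nodes (assumed definition). */
double relative_speedup(double t1, double tp) {
    assert(t1 > 0.0 && tp > 0.0);
    return t1 / tp;
}

/* Parallel efficiency E(p) = S(p) / p; 1.0 means perfectly linear scaling. */
double parallel_efficiency(double t1, double tp, int p) {
    assert(p > 0);
    return relative_speedup(t1, tp) / p;
}
```

For instance, hypothetical timings of 120 s on one node and 10 s on 16 nodes give a relative speedup of 12 and an efficiency of 0.75.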

- Earthquake: 16 nodes, 500 MHz PIII, 128 MB RAM, Fast Ethernet

- Comet: 16 nodes, dual-CPU Athlon 1.4 GHz, 512 MB RAM, Fast Ethernet

- Chiba City: up to 256 nodes, dual-CPU PIII 500 MHz, 512 MB RAM, Fast Ethernet

- Fibonacci and N-Queen: fine-grain, dynamic load balancing problems. Both broke on RTS 2.0 at various problem sizes.

- Tomcatv: data parallel, computation-intensive application that performs global reduction and barrier.

- ATGC: sequence comparison through dynamic programming.
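The ATGC benchmark is described only as sequence comparison through dynamic programming. As an assumed stand-in, the sketch below computes a Needleman-Wunsch global alignment score with a hypothetical scoring scheme (+1 match, -1 mismatch, -1 gap); the benchmark's actual recurrence and scores may differ, and `align_score` is an illustrative name.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical scoring scheme, not taken from the ATGC benchmark. */
#define MATCH     1
#define MISMATCH -1
#define GAP      -1

static int max3(int a, int b, int c) {
    int m = a > b ? a : b;
    return m > c ? m : c;
}

/* Global alignment score of a and b (Needleman-Wunsch, linear gap penalty).
   Keeps only two rows of the DP table, so memory is O(strlen(b)). */
int align_score(const char *a, const char *b) {
    size_t n = strlen(a), m = strlen(b);
    int *prev = malloc((m + 1) * sizeof *prev);
    int *cur = malloc((m + 1) * sizeof *cur);
    assert(prev && cur);
    for (size_t j = 0; j <= m; j++) prev[j] = (int)j * GAP;   /* empty prefix of a */
    for (size_t i = 1; i <= n; i++) {
        cur[0] = (int)i * GAP;                                 /* empty prefix of b */
        for (size_t j = 1; j <= m; j++) {
            int diag = prev[j - 1] + (a[i - 1] == b[j - 1] ? MATCH : MISMATCH);
            cur[j] = max3(diag, prev[j] + GAP, cur[j - 1] + GAP);
        }
        int *tmp = prev; prev = cur; cur = tmp;   /* row i becomes the previous row */
    }
    int score = prev[m];   /* prev now holds row n */
    free(prev);
    free(cur);
    return score;
}
```

Each cell depends only on its left, upper, and upper-left neighbors, which is what allows DP kernels of this kind to be parallelized along anti-diagonals or pipelined across row blocks.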

New Parallel Schemes for HMMER PFAM

EARTH 2.6 SMP LAYOUT

- POSIX threads and standard TCP/IP sockets give portability.

- Pthreads modules overlap computation and communication.

- SMP support without any locks: all operations that access shared resources are serialized by central managers, such as the token manager in the SM and the SU in the EM.

- Remote data and token requests are satisfied immediately.

- An internal token balancer balances tokens among local EMs, and an outside load balancer tries to balance the workload among all physical nodes.
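One way to obtain SMP support without user-level locks is to give each shared resource a single owner thread and have other threads send it requests, so accesses are serialized by the owner rather than by mutexes. The sketch below assumes a POSIX pipe as the request channel (writes of at most `PIPE_BUF` bytes are atomic, so concurrent senders never interleave) and a hypothetical token pool represented by a counter; `token_manager`, `token_req`, and `run_token_manager` are illustrative names, not the EARTH 2.6 token manager.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <unistd.h>

/* Hypothetical request: add `delta` tokens to the pool. */
typedef struct { int delta; } token_req;

/* Shared resource: only the manager thread ever touches `tokens`. */
typedef struct {
    int read_fd;
    long tokens;
} token_manager;

typedef struct {
    int write_fd;
    int count;   /* number of +1 requests each producer sends */
} producer_args;

/* Manager loop: apply requests one at a time until every writer has closed the pipe.
   All writes are atomic and the same size, so each read returns exactly one request. */
static void *manager_main(void *p) {
    token_manager *m = p;
    token_req r;
    while (read(m->read_fd, &r, sizeof r) == (ssize_t)sizeof r)
        m->tokens += r.delta;
    return NULL;
}

static void *producer_main(void *p) {
    const producer_args *a = p;
    token_req r = {1};
    for (int i = 0; i < a->count; i++) {
        ssize_t w = write(a->write_fd, &r, sizeof r);   /* atomic: sizeof r <= PIPE_BUF */
        assert(w == (ssize_t)sizeof r);
    }
    return NULL;
}

/* Runs nprod producers that each send `each` requests; returns the final pool size.
   Error checks on thread creation are omitted for brevity. */
long run_token_manager(int nprod, int each) {
    int fds[2];
    pthread_t mgr, prods[16];
    assert(nprod >= 0 && nprod <= 16);
    if (pipe(fds) != 0) return -1;
    token_manager m = {fds[0], 0};
    producer_args args = {fds[1], each};   /* read-only, safely shared by all producers */

    pthread_create(&mgr, NULL, manager_main, &m);
    for (int i = 0; i < nprod; i++)
        pthread_create(&prods[i], NULL, producer_main, &args);
    for (int i = 0; i < nprod; i++)
        pthread_join(prods[i], NULL);
    close(fds[1]);            /* EOF tells the manager no more requests will arrive */
    pthread_join(mgr, NULL);  /* after the join, m.tokens is safe to read */
    close(fds[0]);
    return m.tokens;
}
```

The design choice is that correctness comes from ownership rather than locking: producers never read or write `tokens`, so the manager's loop is the only serialization point.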

Plan for benchmarking to test EARTH 2.6

Preliminary Results

- Existing benchmarks: N-Queen, Fibonacci, Tomcatv, Cannon, Ping-Pong, Paraffins, ATGC

- New benchmark: HMMER PFAM

- Porting Threaded-C 1.0 benchmarks: shortpath, wave2d, Gauss elimination, pivot, parallel A*, etc.