batch online learning

1 / 13

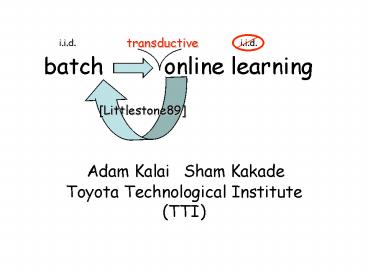

Title: batch online learning

1

batch online learning

Sham Kakade, Adam Kalai

Toyota Technological Institute (TTI)


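Batch learning vs. transductive online learning [Ben-David, Kushilevitz, Mansour 95]

- Family of functions F (e.g., halfspaces) over X
- Batch: examples come from an arbitrary distribution over labeled examples (x, y) with x ∈ X; ERM outputs the h that is best on the data
- Transductive online: the unlabeled points are known in advance and are labeled one at a time; proper learning outputs $h_i \in F$ at each step
- Goal: $\mathrm{err}(\mathrm{alg})$ close to $\min_{f \in F} \mathrm{err}(f)$
- The batch and transductive online settings are equivalent

[Figure: data points in X with the batch hypothesis h and an online hypothesis h_1]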

Our results

HERM = Hallucination ERM

- Theorem 1. In the online transductive setting, HERM requires one ERM computation per sample.
- Theorem 2. For proper learning, the following are equivalent:
  - F is agnostically learnable
  - ERM agnostically learns F (ERM can be done efficiently and VC(F) is finite)
  - F is online transductively learnable
  - HERM online transductively learns F

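Online ERM algorithm (sucks)

Choose $h_i \in F$ with minimal errors on $(x_1,y_1),\dots,(x_{i-1},y_{i-1})$:

$h_i = \arg\min_{f \in F} \sum_{j<i} \mathbb{1}[f(x_j) \neq y_j]$

Example: every example is the same point $x_i = (0,0)$ with label $y_i$ (figure: $x_1,\dots,x_4$ with labels $y_1,\dots,y_4$ and hypotheses $h_1(x),\dots,h_4(x)$). If F labels (0,0) both ways and each $y_i$ disagrees with $h_i(x_i)$, ERM errs on every example, while the best $f \in F$ errs on only about half of them.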
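A toy Python sketch of this failure, assuming a hypothetical F of two constant classifiers (so F labels (0,0) both ways), ties broken in favor of the first function in F, and an adversary that always picks the label opposite to ERM's prediction. None of these choices come from the slide; they only instantiate the example.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[int, int]
Hyp = Callable[[Point], int]

def online_erm(F: Sequence[Hyp], xs: Sequence[Point],
               adversary: Callable[[int], int]) -> Tuple[int, List[int]]:
    """Online ERM: h_i minimizes errors on (x_1, y_1), ..., (x_{i-1}, y_{i-1})."""
    seen: List[Tuple[Point, int]] = []
    mistakes = 0
    for x in xs:
        # first f in F with the fewest errors on the examples seen so far
        h = min(F, key=lambda f: sum(f(xj) != yj for xj, yj in seen))
        pred = h(x)
        y = adversary(pred)  # label chosen after seeing ERM's prediction
        mistakes += pred != y
        seen.append((x, y))
    return mistakes, [y for _, y in seen]

F = [lambda x: 0, lambda x: 1]  # hypothetical: constant classifiers
xs = [(0, 0)] * 10
erm_mistakes, ys = online_erm(F, xs, adversary=lambda pred: 1 - pred)
best = min(sum(f(x) != y for x, y in zip(xs, ys)) for f in F)
print(erm_mistakes, best)  # 10 5
```

The labels come out as 1, 0, 1, 0, ..., so the ERM leader flips every round and is always wrong, while either constant function errs on exactly half.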


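Online stability lemma [Kalai, Vempala 01]

- $\mathrm{err}(\mathrm{ERM}) \le \min_{f \in F} \mathrm{err}(f) + \sum_{i=1}^{n} \mathbb{1}[h_i \neq h_{i+1}]$, where err counts mistakes on the n examples

Proof by induction on the number of examples n: easy!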
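The induction can be spelled out as below. This is a sketch in our own notation: $m_i(f) = \mathbb{1}[f(x_i) \neq y_i]$ is a name introduced here for illustration, so $\mathrm{err}(f) = \sum_i m_i(f)$ and $h_{i+1}$ is ERM on the first i examples.

```latex
\begin{align*}
&\textbf{Step 1 (be the leader).}\quad
  \sum_{i=1}^{n} m_i(h_{i+1}) \le \min_{f \in F} \sum_{i=1}^{n} m_i(f).\\
&\text{Base } n=1:\ h_2 \text{ minimizes } m_1.\quad \text{Step } n-1 \to n:\\
&\quad \sum_{i=1}^{n} m_i(h_{i+1})
  \le \min_{f \in F}\sum_{i=1}^{n-1} m_i(f) + m_n(h_{n+1})
  \le \sum_{i=1}^{n} m_i(h_{n+1})
  = \min_{f \in F}\sum_{i=1}^{n} m_i(f).\\
&\textbf{Step 2.}\quad m_i(h_i) \le m_i(h_{i+1}) + \mathbb{1}[h_i \neq h_{i+1}]
  \quad (\text{equal if } h_i = h_{i+1}\text{; otherwise } m_i(h_i) \le 1).\\
&\text{Summing Step 2 over } i = 1,\dots,n \text{ and applying Step 1 gives the lemma.}
\end{align*}
```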

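Online HERM algorithm

- Inputs: $\Sigma = \{x_1, x_2, \dots, x_n\}$, integer R
- For each $x \in \Sigma$, hallucinate $r_x^+$ copies of $(x,+)$ and $r_x^-$ copies of $(x,-)$
- Choose $h_i \in F$ that minimizes errors on the hallucinated data together with $(x_1,y_1),\dots,(x_{i-1},y_{i-1})$, and use it to label $x_i$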
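A minimal Python sketch of HERM, assuming a finite F given as a list of functions and a brute-force `erm` helper (both hypothetical stand-ins for an efficient ERM black box). The slide does not say how the counts $r_x^+, r_x^-$ are chosen; here each is drawn independently and uniformly from {1, ..., R}, which is an assumption. Labels are encoded as 1 for + and 0 for -, and the label sequence is fixed in advance but each label is used only after the corresponding prediction.

```python
import random
from typing import Callable, Hashable, List, Sequence, Tuple

Hyp = Callable[[Hashable], int]        # 1 stands for "+", 0 for "-"
Weighted = Tuple[Hashable, int, int]   # (x, label, number of copies)

def erm(F: Sequence[Hyp], data: Sequence[Weighted]) -> Hyp:
    """Hypothetical brute-force ERM oracle: first f in F with fewest weighted errors."""
    return min(F, key=lambda f: sum(c for x, y, c in data if f(x) != y))

def herm(F: Sequence[Hyp], sigma: Sequence[Hashable], ys: Sequence[int],
         R: int, rng: random.Random) -> Tuple[List[int], int]:
    # ASSUMPTION: r_x^+ and r_x^- drawn independently, uniformly from {1, ..., R}.
    hallucinated: List[Weighted] = []
    for x in dict.fromkeys(sigma):  # distinct points of Sigma, order preserved
        hallucinated.append((x, 1, rng.randint(1, R)))  # r_x^+ copies of (x, +)
        hallucinated.append((x, 0, rng.randint(1, R)))  # r_x^- copies of (x, -)
    seen: List[Weighted] = []
    preds: List[int] = []
    erm_calls = 0
    for x, y in zip(sigma, ys):
        h = erm(F, hallucinated + seen)  # exactly one ERM call per sample
        erm_calls += 1
        preds.append(h(x))               # predict before the label is used
        seen.append((x, y, 1))           # then add the true example
    return preds, erm_calls

F = [lambda x, t=t: int(x >= t) for t in range(6)]  # hypothetical thresholds
sigma = [0.5, 1.5, 2.5, 3.5, 4.5]
ys = [0, 0, 1, 1, 1]
preds, calls = herm(F, sigma, ys, R=3, rng=random.Random(0))
print(preds, calls)
```

Drawing the two counts separately matters: if $r_x^+ = r_x^-$ for every x, every f makes exactly the same number of hallucinated mistakes, so the hallucinated data would never change which f ERM picks.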


- Analysis: online stability lemma + hallucination cost
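One way to see how the two terms combine, as a sketch in our own notation rather than the exact argument or constants behind the theorems: write $m_i(f) = \mathbb{1}[f(x_i) \neq y_i]$, let H be the hallucinated multiset and $\mathrm{err}_H(f)$ the number of hallucinated examples f gets wrong (names introduced here for illustration). HERM is online ERM with H treated as an extra round 0, so the be-the-leader step of the stability lemma applies with that round, and $\mathrm{err}_H(h_1) = \min_f \mathrm{err}_H(f)$.

```latex
\begin{align*}
\mathrm{err}_H(h_1) + \sum_{i=1}^{n} m_i(h_{i+1})
  &\le \min_{f \in F}\Big[\mathrm{err}_H(f) + \sum_{i=1}^{n} m_i(f)\Big]
   \le \max_{f \in F}\mathrm{err}_H(f) + \min_{f \in F}\sum_{i=1}^{n} m_i(f),\\
\mathrm{err}(\mathrm{HERM}) = \sum_{i=1}^{n} m_i(h_i)
  &\le \min_{f \in F}\mathrm{err}(f)
   + \underbrace{\sum_{i=1}^{n}\mathbb{1}[h_i \neq h_{i+1}]}_{\text{stability}}
   + \underbrace{\max_{f \in F}\mathrm{err}_H(f) - \min_{f \in F}\mathrm{err}_H(f)}_{\text{hallucination cost}},\\
\max_{f \in F}\mathrm{err}_H(f) - \min_{f \in F}\mathrm{err}_H(f)
  &\le \sum_{x \in \Sigma} \big|r_x^+ - r_x^-\big|
  \quad (f \text{ pays } r_x^- \text{ if } f(x) = +\text{, else } r_x^+).
\end{align*}
```

The sketch does not bound the stability term; that is where the choice of the hallucinated counts has to do its work.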

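Being more adaptive (shifting bounds)

- Within $(x_1,y_1),\dots,(x_n,y_n)$, consider each window $(x_i,y_i),\dots,(x_{i+W},y_{i+W})$
- Goal: do well on every window, not only on the whole sequence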
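To make the window goal concrete, a small hypothetical Python helper (not from the slides) that measures the worst regret over all windows of W+1 consecutive examples, matching the window $(x_i,y_i),\dots,(x_{i+W},y_{i+W})$. It only evaluates a given prediction sequence against a finite F; it is not the adaptive algorithm itself.

```python
from typing import Callable, Hashable, Sequence

Hyp = Callable[[Hashable], int]

def window_regret(preds: Sequence[int], xs: Sequence[Hashable], ys: Sequence[int],
                  F: Sequence[Hyp], W: int) -> int:
    """Worst (alg mistakes - best f mistakes) over windows (x_i..x_{i+W})."""
    if not 0 <= W < len(xs):
        raise ValueError("need 0 <= W < number of examples")
    regrets = []
    for i in range(len(xs) - W):
        sl = slice(i, i + W + 1)  # W + 1 consecutive examples
        alg = sum(p != y for p, y in zip(preds[sl], ys[sl]))
        best = min(sum(f(x) != y for x, y in zip(xs[sl], ys[sl])) for f in F)
        regrets.append(alg - best)
    return max(regrets)

F = [lambda x: 0, lambda x: 1]  # hypothetical constant classifiers
xs = list(range(10))
ys = [0] * 5 + [1] * 5
preds = [0] * 10                # a learner that never adapts
print(window_regret(preds, xs, ys, F, W=9))  # whole sequence: 0
print(window_regret(preds, xs, ys, F, W=4))  # worst 5-example window: 5
```

The non-adaptive learner has zero regret on the whole sequence, yet on the last five examples it errs every time while the constant-1 function errs never.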

Related work

- Inequivalence of batch and online learning in the noiseless setting [Blum 90], [Balcan 06]
  - ERM black box is noiseless
  - For computational reasons!
- Inefficient alg. for online transductive learning [Ben-David, Kushilevitz, Mansour 95]
  - List all $(n+1)^{\mathrm{VC}(F)}$ labelings (Sauer's lemma)
  - Run weighted majority [Littlestone, Warmuth 92]
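A toy Python sketch of the inefficient route, under stated assumptions: F is a hypothetical finite set of thresholds on the line (VC dimension 1), the experts are the distinct labelings of the unlabeled points that F realizes, and the learner is deterministic Weighted Majority with beta = 1/2 (the slide does not name a variant). The expense is the enumeration, which in general can produce up to $(n+1)^{\mathrm{VC}(F)}$ experts.

```python
from typing import Callable, List, Sequence, Tuple

Hyp = Callable[[float], int]

def labelings(F: Sequence[Hyp], sigma: Sequence[float]) -> List[Tuple[int, ...]]:
    """Distinct labelings of the unlabeled points sigma realized by F."""
    return sorted({tuple(f(x) for x in sigma) for f in F})

def weighted_majority(experts: Sequence[Tuple[int, ...]], ys: Sequence[int],
                      beta: float = 0.5) -> int:
    """Deterministic Weighted Majority over labelings; returns the mistake count."""
    w = [1.0] * len(experts)
    mistakes = 0
    for i, y in enumerate(ys):
        vote1 = sum(wk for wk, e in zip(w, experts) if e[i] == 1)
        pred = 1 if vote1 >= sum(w) - vote1 else 0  # weighted vote, ties -> 1
        mistakes += pred != y
        # shrink the weight of every labeling that got example i wrong
        w = [wk * beta if e[i] != y else wk for wk, e in zip(w, experts)]
    return mistakes

F = [lambda x, t=t: int(x >= t) for t in range(6)]  # hypothetical thresholds
sigma = [0.5, 1.5, 2.5, 3.5]
experts = labelings(F, sigma)
print(len(experts), (len(sigma) + 1) ** 1)        # 5 5 (Sauer bound, VC = 1)
print(weighted_majority(experts, [0, 0, 1, 1]))   # 1
```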

Conclusions

- Algorithm for removing the iid assumption, efficiently, using unlabeled data
- Interesting way to use unlabeled data online, reminiscent of bootstrap/bagging
- Adaptive version can do well on every window
- Find the right algorithm/analysis