NeuroFuzzy and Soft Computing - PowerPoint PPT Presentation


1

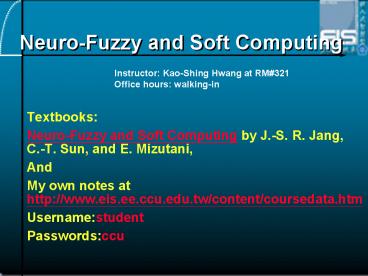

Neuro-Fuzzy and Soft Computing

Instructor: Kao-Shing Hwang, office RM321

Office hours: walk-in

- Textbooks

- Neuro-Fuzzy and Soft Computing by J.-S. R. Jang, C.-T. Sun, and E. Mizutani

- My own notes at http://www.eis.ee.ccu.edu.tw/content/coursedata.htm

- Username: student

- Password: sccu

2

Neuro-Fuzzy and Soft Computing

- Prerequisites: Calculus, Linear Algebra, and basic knowledge of Probability

- Required computer skills: DOS/UNIX/MS-Windows, MATLAB

- Grading policy

- 4-5 homeworks: 20%

- Mid-term and final exams: 20% each

- Mid-term and final projects: 20% each

3

Neuro-Fuzzy and Soft Computing

- An Overview

4

Outline

- Soft computing

- Fuzzy logic and fuzzy inference systems

- Neural networks

- Neuro-fuzzy integration: ANFIS

- Derivative-free optimization

- Genetic algorithms

- Simulated annealing

- Random search

- Examples and demos

5

- Neural networks (NN) that recognize patterns and adapt themselves to cope with changing environments

- Fuzzy inference systems that incorporate human knowledge and perform inferencing and decision making

Adaptivity + Expertise = NF and SC

6

SC Constituents and Conventional AI

- "SC is an emerging approach to computing which parallels the remarkable ability of the human mind to reason and learn in an environment of uncertainty and imprecision" (Lotfi A. Zadeh, 1992)

- SC consists of several computing paradigms, including:

- NN

- Fuzzy set theory

- Approximate reasoning

- Derivative-free optimization methods such as genetic algorithms (GA) and simulated annealing (SA)

7

SC constituents and conventional AI

8

The core of SC

- In general, SC does not perform much symbolic manipulation

- In this sense, SC complements conventional AI approaches

9

Computational Intelligence

- Conventional AI manipulates symbols on the assumption that human intelligent behavior can be stored in symbolically structured knowledge bases; this is known as the physical symbol system hypothesis

- The knowledge-based system (or expert system) is an example of the most successful conventional AI product

10

- An expert system

11

Neural Network (NN)

- Imitation of the natural intelligence of the brain

- Parallel processing with incomplete information

- Nerve cells function about $10^6$ times slower than electronic circuit gates, but human brains process visual and auditory information much faster than modern computers

- The brain is modeled as a continuous-time nonlinear dynamic system in connectionist architectures

- Connectionism replaced symbolically structured representations

- Distributed representation in the form of weights between a massive set of interconnected neurons

12

- Fuzzy set theory

- Human brains interpret imprecise and incomplete sensory information provided by perceptive organs

- Fuzzy set theory provides a systematic calculus to deal with such information linguistically

- It performs numerical computation by using linguistic labels stipulated by membership functions

- It lacks the adaptability to deal with changing external environments => incorporate NN learning concepts into fuzzy inference systems: NF modeling

13

Evolutionary computation

- Natural intelligence is the product of millions of years of biological evolution

- Simulation of complex biological evolutionary processes

- GA is one computing technique that uses evolution based on natural selection

- Immune modeling and artificial life are similar disciplines based on chemical and physical laws

- GA and SA: population-based systematic random search (RA) techniques

14

NF and SC characteristics

- With NF modeling as a backbone, SC can be characterized as:

- Human expertise (fuzzy if-then rules)

- Biologically inspired computing models (NN)

- New optimization techniques (GA, SA, RA)

- Numerical computation (no symbolic AI so far, only numerical)

15

Neuro-Fuzzy and Soft Computing

Soft Computing

16

Fuzzy Sets

- Sets with fuzzy boundaries

A Set of tall people

17

Membership Functions (MFs)

- About MFs

- Subjective measures

- Not probability functions

[Figure: MFs plotted against Heights (cm), with the height 180 and membership grades .8, .5, and .1 marked]
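A membership function simply maps a measurement to a grade between 0 and 1. The sketch below is one possible MF for "tall"; the sigmoidal shape and the `center` and `slope` values are illustrative assumptions, not values taken from the slides.

```python
import math

def tall_membership(height_cm, center=180.0, slope=0.2):
    """Sigmoidal MF: grade of membership in the fuzzy set 'tall'.

    center and slope are hypothetical, subjective choices.
    """
    return 1.0 / (1.0 + math.exp(-slope * (height_cm - center)))

# Grades are subjective degrees of membership, not probabilities.
for h in (165, 180, 195):
    print(h, round(tall_membership(h), 3))
```

A different observer could reasonably pick another center or shape, which is the sense in which MFs are subjective measures.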

18

Fuzzy If-Then Rules

- Mamdani style

- If pressure is high then volume is small

- Sugeno style

- If speed is medium then resistance = 5 * speed

19

Fuzzy Inference System (FIS)

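- If speed is low then resistance = 2

- If speed is medium then resistance = 4 * speed

- If speed is high then resistance = 8 * speed

[Figure: MFs low, medium, and high plotted against Speed; at speed = 2 the marked membership grades are .3, .8, and .1]

- Rule 1: w1 = .3, r1 = 2

- Rule 2: w2 = .8, r2 = 4 * 2

- Rule 3: w3 = .1, r3 = 8 * 2

Resistance = $\sum_i w_i r_i / \sum_i w_i$ = 8.6 / 1.2 ≈ 7.17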
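The weighted-average step can be checked numerically. This is a minimal sketch that uses the firing strengths and rule outputs listed on this slide for speed = 2; the function name `sugeno_output` is made up for illustration. With these values the ratio is 8.6 / 1.2, about 7.17.

```python
def sugeno_output(firing_strengths, rule_outputs):
    """Weighted average of rule outputs: sum(w_i * r_i) / sum(w_i)."""
    numerator = sum(w * r for w, r in zip(firing_strengths, rule_outputs))
    return numerator / sum(firing_strengths)

speed = 2.0
w = [0.3, 0.8, 0.1]                   # firing strengths of rules 1 to 3
r = [2.0, 4.0 * speed, 8.0 * speed]   # rule outputs evaluated at speed = 2
resistance = sugeno_output(w, r)
print(round(resistance, 2))
```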

20

First-Order Sugeno FIS

- Rule base

- If X is A1 and Y is B1 then Z = p1*x + q1*y + r1

- If X is A2 and Y is B2 then Z = p2*x + q2*y + r2

21

Fuzzy Inference Systems (FIS)

- Also known as

- Fuzzy models

- Fuzzy associative memories (FAM)

- Fuzzy controllers

[Figure: FIS block diagram with input, fuzzy reasoning, and output, supported by a rule base (fuzzy rules) and a data base (MFs)]

22

Neural Networks

- Supervised Learning

- Multilayer perceptrons

- Radial basis function networks

- Modular neural networks

- LVQ (learning vector quantization)

- Unsupervised Learning

- Competitive learning networks

- Kohonen self-organizing networks

- ART (adaptive resonance theory)

- Others

- Hopfield networks

23

The Basics of Neural Networks

- Neural networks are typically organized in layers.

- Layers are made up of a number of interconnected 'nodes' which contain an 'activation function'.

- Patterns are presented to the network

- input layer

- hidden layers

- weighted connections

- output layer

24

Structure of ANN

25

Learning

- Most ANNs contain some form of 'learning rule' which modifies the weights of the connections according to the input patterns that it is presented with.

- In a sense, ANNs learn by example as do their biological counterparts: a child learns to recognize dogs from examples of dogs.

26

Learning Rules

- The delta rule is often utilized by the most common class of ANNs, called 'backpropagational neural networks' (BPNNs).

- Backpropagation is an abbreviation for the backwards propagation of error.
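For a single linear unit, the delta rule reduces to "change each weight in proportion to the output error times its input". The sketch below assumes that simplest case; the toy data, learning rate, and function name are hypothetical and chosen only for illustration.

```python
def delta_rule_step(weights, inputs, target, learning_rate=0.1):
    """One delta-rule update for a single linear unit."""
    output = sum(w * x for w, x in zip(weights, inputs))
    error = target - output
    return [w + learning_rate * error * x for w, x in zip(weights, inputs)]

# Hypothetical toy data generated by y = 2*x1 - x2
samples = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]
weights = [0.0, 0.0]
for _ in range(200):                 # 200 epochs over the three samples
    for x, t in samples:
        weights = delta_rule_step(weights, x, t)
print([round(w, 3) for w in weights])
```

Backpropagation applies the same idea layer by layer, passing the error backwards through the hidden nodes.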

27

Supervised Learning

- Learning is a supervised process that occurs with each cycle or 'epoch' through a forward activation flow of outputs and the backward error propagation of weight adjustments.

- Biologically, when a neural network is initially presented with a pattern, it makes a random 'guess' as to what it might be. It then sees how far its answer was from the actual one and makes an appropriate adjustment to its connection weights.

28

Supervised Learning

29

ANN vs. Optimal Search

- Note that within each hidden layer node is a sigmoidal activation function which polarizes network activity and helps stabilize it.

- Backpropagation performs a gradient descent within the solution's vector space towards a 'global minimum' along the steepest vector of the error surface.

- The global minimum is that theoretical solution with the lowest possible error.

30

Search in Error Space

31

Convergence

- Neural network analysis often requires a large number of individual runs to determine the best solution.

- Most learning rules have built-in mathematical terms to assist in this process which control the 'speed' (Beta-coefficient) and the 'momentum' of the learning.

32

Convergence

- The speed of learning is actually the rate of convergence between the current solution and the global minimum.

- Momentum helps the network to overcome obstacles (local minima) in the error surface and settle down at or near the global minimum.
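The roles of the speed (learning rate) and momentum terms can be seen in a plain gradient-descent update. This is a minimal sketch on a one-dimensional quadratic error; the error function and the parameter values are assumptions made for illustration, and a quadratic has no local minima, so it shows only the update rule, not an escape from one.

```python
def minimize_with_momentum(grad, w, learning_rate=0.1, momentum=0.9, steps=500):
    """Gradient descent where each step reuses a fraction of the previous step."""
    velocity = 0.0
    for _ in range(steps):
        # The momentum term keeps part of the last move; the learning rate
        # scales the new move along the negative gradient.
        velocity = momentum * velocity - learning_rate * grad(w)
        w += velocity
    return w

# Hypothetical error surface E(w) = (w - 3)^2, so dE/dw = 2 * (w - 3)
w_final = minimize_with_momentum(lambda w: 2.0 * (w - 3.0), w=0.0)
print(round(w_final, 6))
```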

33

Single-Layer Perceptrons

- Network architecture

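[Figure: inputs x1, x2, x3 with weights w1, w2, w3 and bias weight w0 feeding a single output node]

$y = \mathrm{signum}\left(\sum_i w_i x_i + w_0\right)$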
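The single-layer perceptron output is a thresholded weighted sum. The sketch below assumes three inputs as in the architecture on this slide; the weight values are hypothetical, and treating a sum of exactly zero as +1 is a convention chosen here.

```python
def signum(v):
    """Sign function; returning +1 at exactly zero is an assumed convention."""
    return 1 if v >= 0 else -1

def perceptron(x, w, w0):
    """y = signum(sum_i w_i * x_i + w0)."""
    return signum(sum(wi * xi for wi, xi in zip(w, x)) + w0)

weights = [0.5, -0.2, 0.1]   # hypothetical w1, w2, w3
bias = -0.3                  # hypothetical w0
print(perceptron([1, 0, 1], weights, bias))
print(perceptron([0, 1, 0], weights, bias))
```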

34

Single-Layer Perceptrons

- Example: Gender classification

35

Multilayer Perceptrons (MLPs)

- Learning rule

- Steepest descent (Backprop)

- Conjugate gradient method

- All optimization methods using first derivative

- Derivative-free optimization

36

Multilayer Perceptrons (MLPs)

Example: XOR problem

Training data:

| x1 | x2 | y |
|----|----|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |
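XOR is not linearly separable, so one hidden layer is needed. As a sketch, the network below uses hand-picked weights and step activations rather than learned weights and sigmoids; the specific weights are an assumption chosen so that the two hidden nodes act as OR and AND.

```python
def step(v):
    return 1 if v > 0 else 0

def xor_mlp(x1, x2):
    """2-2-1 multilayer perceptron with fixed, hand-chosen weights."""
    h_or = step(x1 + x2 - 0.5)        # hidden node 1 fires if either input is 1
    h_and = step(x1 + x2 - 1.5)       # hidden node 2 fires only if both are 1
    return step(h_or - h_and - 0.5)   # output: OR but not AND

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, xor_mlp(x1, x2))
```

A trained MLP would reach a comparable decision boundary by adjusting these weights with one of the learning rules listed above.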

37

MLP Decision Boundaries

38

Adaptive Networks

[Figure: adaptive network diagram with node labels x, y, and z]

- Architecture

- Feedforward networks with different node functions

- Square nodes: with parameters

- Circle nodes: without parameters

- Goal

- To achieve an I/O mapping specified by training data

- Basic training method

- Backpropagation or steepest descent

39

Derivative-Based Optimization

- Based on first derivatives

- Steepest descent

- Conjugate gradient method

- Gauss-Newton method

- Levenberg-Marquardt method

- And many others

- Based on second derivatives

- Newton method

- And many others

40

Fuzzy Modeling

[Figure: an unknown target system with output y, alongside a fuzzy inference system with its own output y]

- Given desired I/O pairs (training data set) of the form (x1, ..., xn; y), construct a FIS to match the I/O pairs

- Two steps in fuzzy modeling

- Structure identification: input selection, MF numbers

- Parameter identification: optimal parameters

41

Neuro-Fuzzy Modeling

- Basic approach of ANFIS

[Figure: diagram relating adaptive networks, neural networks, fuzzy inference systems, and ANFIS through generalization and specialization arrows]

42

Parameter ID

- Hybrid training method

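[Figure: ANFIS network with inputs x and y; MF nodes A1, A2, B1, B2 (nonlinear parameters); product nodes Π giving firing strengths w1 to w4; rule outputs w1z1 to w4z4 (linear parameters); summation nodes Σ giving Σ wi zi and Σ wi; and a division node producing the output z]

| | forward pass | backward pass |
|---|---|---|
| MF param. (nonlinear) | fixed | steepest descent |
| Coef. param. (linear) | least-squares | fixed |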
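The forward pass of the hybrid method works because, once the MF parameters are held fixed, the output is linear in the coefficient parameters and can be fitted by least squares. The sketch below is a deliberately reduced case, assuming one input, two rules with Gaussian MFs, and constant (zero-order) consequents; all names and numbers are hypothetical.

```python
import math

def gauss_mf(x, center, sigma):
    """Gaussian MF; (center, sigma) are the nonlinear parameters."""
    return math.exp(-0.5 * ((x - center) / sigma) ** 2)

def normalized_strengths(x, mf_params):
    w = [gauss_mf(x, c, s) for c, s in mf_params]
    total = sum(w)
    return [wi / total for wi in w]

def lse_consequents(xs, ys, mf_params):
    """Forward pass: MF parameters fixed, solve the 2x2 normal equations."""
    rows = [normalized_strengths(x, mf_params) for x in xs]
    a11 = sum(r[0] * r[0] for r in rows)
    a12 = sum(r[0] * r[1] for r in rows)
    a22 = sum(r[1] * r[1] for r in rows)
    b1 = sum(r[0] * y for r, y in zip(rows, ys))
    b2 = sum(r[1] * y for r, y in zip(rows, ys))
    det = a11 * a22 - a12 * a12
    return [(a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det]

mf_params = [(0.0, 1.0), (4.0, 1.0)]   # hypothetical fixed MF parameters
true_c = [1.0, 5.0]                     # hypothetical consequents used to make data
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [sum(n * c for n, c in zip(normalized_strengths(x, mf_params), true_c))
      for x in xs]
estimated = lse_consequents(xs, ys, mf_params)
print([round(c, 6) for c in estimated])
```

In the backward pass the roles swap: the consequents stay fixed and the MF parameters are adjusted by steepest descent.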

43

Parameter ID: Gauss-Newton Method

- Synonyms

- linearization method

- extended Kalman filter method

- Concept

- general nonlinear model: $y = f(x, \theta)$

- linearization at $\theta = \theta_{now}$: $y = f(x, \theta_{now}) + a_1(\theta_1 - \theta_{1,now}) + a_2(\theta_2 - \theta_{2,now}) + \dots$

- LSE solution: $\theta_{next} = \theta_{now} + \eta (A^T A)^{-1} A^T B$

44

Param. ID: Levenberg-Marquardt

- Formula

- $\theta_{next} = \theta_{now} + \eta (A^T A + \lambda I)^{-1} A^T B$

- Effects of $\lambda$

- $\lambda$ small: Gauss-Newton method

- $\lambda$ big: steepest descent

- How to update $\lambda$

- greedy policy: make $\lambda$ small

- cautious policy: make $\lambda$ large
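The Levenberg-Marquardt update can be tried on a small curve-fitting problem. The sketch below assumes a two-parameter model y = θ1 exp(θ2 x), so the 2x2 system is solved by hand; the model, the data, the starting point, and the factor-of-ten λ policy are all illustrative assumptions. Setting `lam` to 0 in `lm_step` gives the Gauss-Newton step.

```python
import math

def model(x, theta):
    return theta[0] * math.exp(theta[1] * x)

def sse(xs, ys, theta):
    return sum((y - model(x, theta)) ** 2 for x, y in zip(xs, ys))

def lm_step(xs, ys, theta, lam, eta=1.0):
    """theta_next = theta_now + eta * (A^T A + lam*I)^-1 A^T B."""
    a11 = a12 = a22 = g1 = g2 = 0.0
    for x, y in zip(xs, ys):
        e = math.exp(theta[1] * x)
        j1 = e                    # df/d(theta1): first column of A
        j2 = theta[0] * x * e     # df/d(theta2): second column of A
        b = y - theta[0] * e      # residual: entry of B
        a11 += j1 * j1
        a12 += j1 * j2
        a22 += j2 * j2
        g1 += j1 * b
        g2 += j2 * b
    a11 += lam
    a22 += lam
    det = a11 * a22 - a12 * a12
    d1 = (a22 * g1 - a12 * g2) / det
    d2 = (a11 * g2 - a12 * g1) / det
    return [theta[0] + eta * d1, theta[1] + eta * d2]

def fit(xs, ys, theta, lam=1.0, iterations=50):
    err = sse(xs, ys, theta)
    for _ in range(iterations):
        candidate = lm_step(xs, ys, theta, lam)
        new_err = sse(xs, ys, candidate)
        if new_err < err:
            theta, err = candidate, new_err
            lam *= 0.1    # greedy: shrink lambda, closer to Gauss-Newton
        else:
            lam *= 10.0   # cautious: grow lambda, closer to steepest descent
    return theta, err

xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [model(x, [2.0, -1.0]) for x in xs]   # hypothetical noise-free data
start = [1.0, -0.5]
initial_err = sse(xs, ys, start)
theta_hat, final_err = fit(xs, ys, start)
print([round(t, 4) for t in theta_hat])
```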

45

Param. ID: Comparisons

- Steepest descent (SD)

- treats all parameters as nonlinear

- Hybrid learning (SD+LSE)

- distinguishes between linear and nonlinear parameters

- Gauss-Newton (GN)

- linearizes and treats all parameters as linear

- Levenberg-Marquardt (LM)

- switches smoothly between SD and GN

46

Structure ID

- Input selection: to select relevant inputs for efficient modeling

- Input space partitioning

- Grid partitioning

- Tree partitioning: CART method

- Scatter partitioning: C-means clustering, mountain method, hyperplane clustering

47

Derivative-Free Optimization

- Genetic algorithms (GAs)

- Simulated annealing (SA)

- Random search

- Downhill simplex search

- Tabu search

48

Genetic Algorithms

- Motivation

- Look at what evolution has brought us:

- Vision

- Hearing

- Smelling

- Taste

- Touch

- Learning and reasoning

- Can we emulate the evolutionary process with

today's fast computers?

49

Genetic Algorithms

- Terminology

- Fitness function

- Population

- Encoding schemes

- Selection

- Crossover

- Mutation

- Elitism

50

Genetic Algorithms

- Binary encoding

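Chromosome: (11, 6, 9) -> 1011 0110 1001, where each 4-bit group is a gene

Crossover (the children exchange the parents' bits after the crossover point):

Parents: 1 0 0 1 1 1 1 0 and 1 0 1 1 0 0 1 0

Children: 1 0 0 1 0 0 1 0 and 1 0 1 1 1 1 1 0

Mutation (the mutation bit, here the sixth, is flipped):

1 0 0 1 1 1 1 0 -> 1 0 0 1 1 0 1 0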
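The encoding, crossover, and mutation examples on this slide can be reproduced with a few string operations. This is a sketch with made-up function names; the crossover point is assumed to be after the fourth bit, which is one position consistent with the bit strings shown.

```python
def encode(values, bits=4):
    """Binary-encode each integer as a fixed-width gene."""
    return " ".join(format(v, "0{}b".format(bits)) for v in values)

def crossover(parent_a, parent_b, point):
    """One-point crossover: swap the tails after the crossover point."""
    return (parent_a[:point] + parent_b[point:],
            parent_b[:point] + parent_a[point:])

def mutate(chromosome, bit_index):
    """Flip the bit at the given zero-based index."""
    flipped = "1" if chromosome[bit_index] == "0" else "0"
    return chromosome[:bit_index] + flipped + chromosome[bit_index + 1:]

print(encode((11, 6, 9)))
print(crossover("10011110", "10110010", 4))
print(mutate("10011110", 5))
```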

51

Genetic Algorithms

- Flowchart

Current generation: 10010110 01100010 10100100 10011001 01111101 ...

Operators: elitism, selection, crossover, mutation

Next generation: 10010110 01100010 10100100 10011101 01111001 ...

52

Genetic Algorithms

- Example: Find the max. of the peaks function

- $z = f(x, y) = 3(1-x)^2 e^{-x^2 - (y+1)^2} - 10\left(\frac{x}{5} - x^3 - y^5\right) e^{-x^2 - y^2} - \frac{1}{3} e^{-(x+1)^2 - y^2}$
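A GA needs only function values, not derivatives, to search for the maximum of the peaks function. The sketch below is one possible arrangement of elitism, selection, crossover, and mutation; the 8-bit encoding per variable, the search range [-3, 3], tournament selection, the population size, and the mutation rate are all assumptions made for illustration.

```python
import math
import random

def peaks(x, y):
    return (3 * (1 - x) ** 2 * math.exp(-x ** 2 - (y + 1) ** 2)
            - 10 * (x / 5 - x ** 3 - y ** 5) * math.exp(-x ** 2 - y ** 2)
            - math.exp(-(x + 1) ** 2 - y ** 2) / 3)

BITS = 8  # bits per variable (assumption)

def decode(chrom, lo=-3.0, hi=3.0):
    """Map a 16-bit string to a point (x, y) in [lo, hi] x [lo, hi]."""
    scale = (hi - lo) / (2 ** BITS - 1)
    return lo + int(chrom[:BITS], 2) * scale, lo + int(chrom[BITS:], 2) * scale

def fitness(chrom):
    return peaks(*decode(chrom))

def select(population, rng):
    """Binary tournament selection (one of several possible schemes)."""
    a, b = rng.sample(population, 2)
    return a if fitness(a) >= fitness(b) else b

def next_generation(population, rng, mutation_rate=0.01):
    children = [max(population, key=fitness)]   # elitism: keep the best as is
    while len(children) < len(population):
        p1, p2 = select(population, rng), select(population, rng)
        point = rng.randrange(1, 2 * BITS)
        child = p1[:point] + p2[point:]          # one-point crossover
        child = "".join(
            ("1" if bit == "0" else "0") if rng.random() < mutation_rate else bit
            for bit in child)                    # bitwise mutation
        children.append(child)
    return children

rng = random.Random(0)
population = ["".join(rng.choice("01") for _ in range(2 * BITS))
              for _ in range(20)]
history = []
for _ in range(30):
    history.append(max(fitness(c) for c in population))
    population = next_generation(population, rng)
print(round(history[0], 3), round(history[-1], 3))
```

Because of elitism, the best fitness recorded in `history` never decreases from one generation to the next.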

53

Genetic Algorithms

- Derivatives of the peaks function

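- First derivative with respect to x:

$$\frac{\partial z}{\partial x} = -6(1-x)e^{-x^2-(y+1)^2} - 6(1-x)^2 x e^{-x^2-(y+1)^2} - 10\left(\tfrac{1}{5} - 3x^2\right)e^{-x^2-y^2} + 20\left(\tfrac{1}{5}x - x^3 - y^5\right) x e^{-x^2-y^2} - \tfrac{1}{3}(-2x-2)e^{-(x+1)^2-y^2}$$

- First derivative with respect to y:

$$\frac{\partial z}{\partial y} = 3(1-x)^2(-2y-2)e^{-x^2-(y+1)^2} + 50y^4 e^{-x^2-y^2} + 20\left(\tfrac{1}{5}x - x^3 - y^5\right) y e^{-x^2-y^2} + \tfrac{2}{3} y e^{-(x+1)^2-y^2}$$

- Second derivative with respect to x:

$$\frac{\partial^2 z}{\partial x^2} = 36x e^{-x^2-(y+1)^2} - 18x^2 e^{-x^2-(y+1)^2} - 24x^3 e^{-x^2-(y+1)^2} + 12x^4 e^{-x^2-(y+1)^2} + 72x e^{-x^2-y^2} - 148x^3 e^{-x^2-y^2} - 20y^5 e^{-x^2-y^2} + 40x^5 e^{-x^2-y^2} + 40x^2 y^5 e^{-x^2-y^2} - \tfrac{2}{3} e^{-(x+1)^2-y^2} - \tfrac{4}{3} x^2 e^{-(x+1)^2-y^2} - \tfrac{8}{3} x e^{-(x+1)^2-y^2}$$

- Second derivative with respect to y:

$$\frac{\partial^2 z}{\partial y^2} = -6(1-x)^2 e^{-x^2-(y+1)^2} + 3(1-x)^2(-2y-2)^2 e^{-x^2-(y+1)^2} + 200y^3 e^{-x^2-y^2} - 200y^5 e^{-x^2-y^2} + 20\left(\tfrac{1}{5}x - x^3 - y^5\right) e^{-x^2-y^2} - 40\left(\tfrac{1}{5}x - x^3 - y^5\right) y^2 e^{-x^2-y^2} + \tfrac{2}{3} e^{-(x+1)^2-y^2} - \tfrac{4}{3} y^2 e^{-(x+1)^2-y^2}$$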

54

Genetic Algorithms

- GA process

[Figure: three panels showing the initial population, the 5th generation, and the 10th generation]

55

Genetic Algorithms

- Performance profile

56

Simulated Annealing

- Analogy

57

Simulated Annealing

- Terminology

- Objective function E(x): function to be optimized

- Move set: set of next points to explore

- Generating function: to select the next point

- Acceptance function h(ΔE, T): to determine if the selected point should be accepted or not. Usually h(ΔE, T) = 1/(1 + exp(ΔE/(cT))).

- Annealing (cooling) schedule: schedule for reducing the temperature T
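The acceptance function can be written down directly. This sketch uses the logistic form quoted on this slide; the default `c = 1.0` and the overflow guard are additions made here for illustration.

```python
import math

def acceptance_probability(delta_e, temperature, c=1.0):
    """h(dE, T) = 1 / (1 + exp(dE / (c * T)))."""
    z = delta_e / (c * temperature)
    if z > 700.0:          # guard: math.exp would overflow; h is effectively 0
        return 0.0
    return 1.0 / (1.0 + math.exp(z))

# Uphill moves (dE > 0) are accepted less often as T falls.
for t in (10.0, 1.0, 0.1):
    print(t, round(acceptance_probability(1.0, t), 4))
```

At high temperature h is close to 0.5 for any move; at low temperature it approaches 1 for downhill moves and 0 for uphill moves.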

58

Simulated Annealing

- Flowchart

- Select a new point xnew in the move set via the generating function

- Compute the objective function E(xnew)

- Set x to xnew with probability determined by h(ΔE, T)

- Reduce temperature T
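The four flowchart steps map onto a short loop. The sketch below assumes a one-dimensional problem, a Gaussian generating function, and a geometric cooling schedule; none of these choices come from the slides, and the objective in the usage line is a hypothetical test function.

```python
import math
import random

def anneal(energy, x0, t0=1.0, cooling=0.95, steps=500, c=1.0, seed=0):
    rng = random.Random(seed)
    x, e = x0, energy(x0)
    best_x, best_e = x, e
    t = t0
    for _ in range(steps):
        x_new = x + rng.gauss(0.0, 1.0)    # generating function (assumed Gaussian)
        e_new = energy(x_new)              # compute the objective E(xnew)
        z = (e_new - e) / (c * t)
        h = 0.0 if z > 700.0 else 1.0 / (1.0 + math.exp(z))
        if rng.random() < h:               # accept xnew with probability h(dE, T)
            x, e = x_new, e_new
        if e < best_e:                     # remember the best point seen so far
            best_x, best_e = x, e
        t *= cooling                       # reduce temperature T
    return best_x, best_e

best_x, best_e = anneal(lambda x: (x - 2.0) ** 2, x0=10.0)
print(round(best_x, 3), round(best_e, 6))
```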

59

Simulated Annealing

- Example: Traveling Salesperson Problem (TSP)

How to traverse n cities once and only once with a minimal total distance?

60

Simulated Annealing

- Move sets for TSP

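[Figure: four 12-city tours linked by the three moves below]

- Inversion: 1-2-3-4-5-6-7-8-9-10-11-12 -> 1-2-3-4-5-9-8-7-6-10-11-12 (segment 6-7-8-9 is reversed)

- Translation: 1-2-3-4-5-9-8-7-6-10-11-12 -> 1-2-3-4-8-7-5-9-6-10-11-12 (segment 8-7 is moved between cities 4 and 5)

- Switching: 1-2-3-4-8-7-5-9-6-10-11-12 -> 1-2-11-4-8-7-5-9-6-10-3-12 (cities 3 and 11 are swapped)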
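The three moves are simple list operations on a tour. This sketch uses hypothetical function names and zero-based indices, and reproduces the four 12-city tours printed on this slide as a chain of inversion, translation, and switching.

```python
def inversion(tour, i, j):
    """Reverse the segment tour[i:j]."""
    return tour[:i] + tour[i:j][::-1] + tour[j:]

def translation(tour, i, j, k):
    """Remove the segment tour[i:j] and reinsert it at index k of the rest."""
    segment = tour[i:j]
    rest = tour[:i] + tour[j:]
    return rest[:k] + segment + rest[k:]

def switching(tour, i, j):
    """Swap the cities at positions i and j."""
    result = list(tour)
    result[i], result[j] = result[j], result[i]
    return result

start = list(range(1, 13))
after_inversion = inversion(start, 5, 9)
after_translation = translation(after_inversion, 6, 8, 4)
after_switching = switching(after_translation, 2, 10)
for tour in (start, after_inversion, after_translation, after_switching):
    print("-".join(str(city) for city in tour))
```

In an SA run, the generating function would pick one of these moves and its indices at random to propose the next tour.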

61

Simulated Annealing

- A 100-city TSP using SA

[Figure: three panels showing the initial random path, the path during the SA process, and the final path]

62

Simulated Annealing

- 100-city TSP with penalties when crossing the circle

[Figure: three panels with penalty = 0, penalty = 0.5, and penalty = -0.3]

63

Conclusions

- Contributing factors to successful applications of neuro-fuzzy and soft computing:

- Sensor technologies

- Cheap fast microprocessors

- Modern fast computers

64

References and WWW Resources

- References

- Neuro-Fuzzy and Soft Computing, J.-S. R. Jang, C.-T. Sun and E. Mizutani, Prentice Hall, 1996.

- Neuro-Fuzzy Modeling and Control, J.-S. R. Jang and C.-T. Sun, Proceedings of the IEEE, March 1995.

- ANFIS: Adaptive-Network-based Fuzzy Inference Systems, J.-S. R. Jang, IEEE Trans. on Systems, Man, and Cybernetics, May 1993.

- Internet resources

- This set of slides is available at http://www.cs.nthu.edu.tw/jang/publication.htm

- WWW resources about neuro-fuzzy and soft computing: http://www.cs.nthu.edu.tw/jang/nfsc.htm