Grid File Replication using SRM - PowerPoint PPT Presentation

Title:

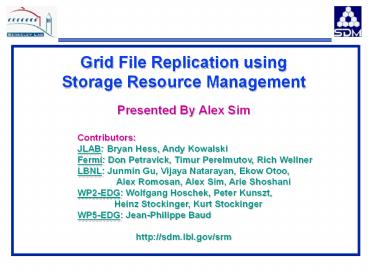

Grid File Replication using SRM

Description:

Grid File Replication using SRM – PowerPoint PPT presentation

Number of Views:81

Avg rating:3.0/5.0

Title: Grid File Replication using SRM

1

Grid File Replication using Storage Resource Management

Presented By: Alex Sim

Contributors:

- JLAB: Bryan Hess, Andy Kowalski

- Fermi: Don Petravick, Timur Perelmutov, Rich Wellner

- LBNL: Junmin Gu, Vijaya Natarayan, Ekow Otoo, Alex Romosan, Alex Sim, Arie Shoshani

- WP2-EDG: Wolfgang Hoschek, Peter Kunszt, Heinz Stockinger, Kurt Stockinger

- WP5-EDG: Jean-Philippe Baud

http://sdm.lbl.gov/srm

2

How does file replication use SRMs (high level view)

3

Main advantages of using SRMs for file replication

- Can work in front of MSS

- To provide pre-staging

- To provide queued archiving

- Monitors transfers in/out of MSS

- Recovers in case of transient failures

- Reorders pre-staging requests to minimize tape mounts

- Monitors GridFTP transfers

- Re-issues requests in case of failure

- Can control number of concurrent GridFTP transfers to optimize network use (future)

- SRMs' role in data replication

- Storage resource coordination and scheduling

- SRMs do not perform file transfer

- SRMs do invoke file transfer service if needed (GridFTP)
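The point about reordering pre-staging requests to minimize tape mounts can be illustrated with a minimal Python sketch. It assumes each request carries a tape identifier and that a single drive is used; the `(file_name, tape_id)` request format and both function names are hypothetical, since the slides do not describe how an SRM learns which tape holds a file.

```python
from collections import defaultdict


def reorder_by_tape(requests):
    """Group staging requests so files on the same tape are read together.

    `requests` is a list of (file_name, tape_id) pairs (hypothetical format).
    Tapes are visited in order of first appearance in the request list.
    """
    by_tape = defaultdict(list)
    for file_name, tape_id in requests:
        by_tape[tape_id].append(file_name)
    return [(tape_id, files) for tape_id, files in by_tape.items()]


def count_mounts(tape_sequence):
    """Count mounts for a sequence of tape ids, assuming a single drive."""
    mounts, current = 0, None
    for tape_id in tape_sequence:
        if tape_id != current:
            mounts += 1
            current = tape_id
    return mounts


# Requests arrive interleaved across two tapes.
requests = [("f1", "T1"), ("f2", "T2"), ("f3", "T1"), ("f4", "T2")]
plan = reorder_by_tape(requests)

mounts_as_submitted = count_mounts([tape for _, tape in requests])
mounts_reordered = count_mounts([tape for tape, _ in plan])
```

In this toy case the submitted order needs four mounts and the grouped order needs two. A real scheduler would also have to weigh request age and position on tape, which this sketch ignores.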

4

Brief history of SRM since GGF4

- Agreed on single API for multiple storage systems

- JLab has an SRM implementation based on SRM v1.1 spec on top of JASMine

- Fermilab has an SRM implementation based on SRM v1.1 spec on top of Enstore

- WP5-EDG is proceeding with SRM implementation on top of Castor

- LBNL deployed HRM-HPSS (which accesses files in/out of HPSS) at BNL, ORNL, and NERSC (PDSF)

- HRM-NCAR that accesses MSS at NCAR is in progress

5

SRM version 2.0

- Joint design and specification of SRM v2.0

- LBNL organized meeting to coordinate design, and summarized design conclusions

- SRM v2.0 spec draft version exists

- SRM v2.0 finalization to be done in Dec.

- Design uses OGSA service concept

- Define interface behavior

- Select protocol binding (WSDL/SOAP)

- Permit multiple implementations

- Disk Resource Managers (DRMs)

- On top of multiple MSSs (HRMs)

6

Brief Summary: SRM main methods

- srmGet, srmPut, srmCopy

- Multiple files

- srmGet from remote location to disk/tape

- srmPut from client to SRM disk/tape

- srmCopy from remote location to SRM disk/tape

- srmRelease

- Pinning automatic

- If not provided, apply pinning lifetime

- srmStatus

- Per file, per request

- Time estimate

- srmAbort
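The methods listed above can be pictured with a small in-memory toy, written in Python. This is only a sketch of the call pattern, not the SRM v2.0 interface: the class `ToySRM`, the snake_case method names, the state strings, and the default lifetime are all assumptions made for illustration. It also skips queuing and staging and pins files immediately, and the caller passes the current time explicitly to keep the example deterministic.

```python
import itertools

DEFAULT_PIN_LIFETIME = 600  # seconds; hypothetical default, not from the spec


class ToySRM:
    """In-memory stand-in for an SRM; no real storage or transfer happens."""

    def __init__(self):
        self._ids = itertools.count(1)
        self._requests = {}  # request id -> {file name: state record}

    def srm_get(self, files, now, pin_lifetime=DEFAULT_PIN_LIFETIME):
        """Accept a multi-file request and pin each file automatically."""
        request_id = next(self._ids)
        self._requests[request_id] = {
            name: {"state": "PINNED", "pin_expires": now + pin_lifetime}
            for name in files
        }
        return request_id

    def srm_release(self, request_id, name):
        """Client signals it is done with one file, ending the pin early."""
        self._requests[request_id][name]["state"] = "RELEASED"

    def srm_abort(self, request_id):
        """Abort whatever is still pinned in the request."""
        for record in self._requests[request_id].values():
            if record["state"] == "PINNED":
                record["state"] = "ABORTED"

    def srm_status(self, request_id, now):
        """Report per-file status; unreleased pins lapse after the lifetime."""
        status = {}
        for name, record in self._requests[request_id].items():
            state = record["state"]
            if state == "PINNED" and now >= record["pin_expires"]:
                state = "EXPIRED"
            status[name] = state
        return status


srm = ToySRM()
rid = srm.srm_get(["a.dat", "b.dat"], now=0, pin_lifetime=10)
srm.srm_release(rid, "a.dat")
early = srm.srm_status(rid, now=5)
late = srm.srm_status(rid, now=10)
```

The released file stays released, while the file that was never released falls back to the pinning lifetime and is reported as expired once that lifetime has passed.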

7

Main Design Points

- Interfaces to all types of SRMs to be uniform

- Any Clients, Middleware modules, other SRMs

- Will communicate with SRMs

- Support multi-file requests

- set of files, not ordered, no bundles

- Implies queuing, status, time estimates, abort

- SRMs support asynchronous requests

- Non-blocking, unlike FTP and other services

- Support long delays, multi-file requests

- Support call-backs

- Plan to use event notification service

- Automatic Garbage Collection

- In file replication, all files are volatile

- As soon as they are moved to target, SRMs perform garbage collection automatically.
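The asynchronous, multi-file, call-back style described above can be sketched with Python's standard thread pool. This is an illustration under stated assumptions, not how any SRM is implemented: `fake_transfer` stands in for staging plus transfer, and `submit_request`, `on_file_done`, and `max_concurrent` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor, wait


def fake_transfer(name):
    """Stand-in for staging and transferring one file (hypothetical)."""
    return f"{name}: done"


def submit_request(files, on_file_done, max_concurrent=3):
    """Queue every file and return at once; results arrive via call-back.

    At most `max_concurrent` files are processed at the same time.
    """
    pool = ThreadPoolExecutor(max_workers=max_concurrent)

    def run(name):
        # The call-back runs as part of the task, so a finished future
        # implies its call-back has already been delivered.
        on_file_done(name, fake_transfer(name))

    futures = [pool.submit(run, name) for name in files]
    pool.shutdown(wait=False)  # queued work still runs; the caller is not blocked
    return futures


completed = {}


def record(name, result):
    completed[name] = result


futures = submit_request(["a.dat", "b.dat", "c.dat"], record)
# The caller is free to do other work here, then optionally wait.
wait(futures)
```

The set of files is unordered, so call-backs may arrive in any order; the sketch therefore records results by file name and makes no claim about sequence.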

8

Current efforts on SRMs at LBNL

- Deployed HRM at BNL, LBNL (NERSC/PDSF), ORNL for HPSS access

- Developing HRM at NCAR for MSS access

- Deployed GridFTP-HRM-HPSS connection daemon

- Supports multiple transport protocols

- gsiftp, ftp, http, bbftp and hrm

- Deployed web-based File Monitoring Tool for HRM

- especially useful for large file replica requests

- Deployed HRM client command programs

- User convenience

- Currently developing web-services gateway

- Currently developing GSI-enabled requests

9

Web-Based File Monitoring Tool

10

HRMs in PPDG (high level view)

[Diagram labels: Replica Coordinator, HRM-COPY, HRM-GET, HRM (performs writes), HRM (performs reads), GridFTP GET (pull mode), LBNL, BNL]

11

HRMs in ESG (high level view)

12

File replication from NCAR to ORNL/LBNL HPSS, controlled at NCAR

13

File Replication from ORNL HPSS to NERSC HPSS, controlled at NCAR

Non-Blocking Calls

14

Recent Measurements of large multi-file replication

[Plot: axes labeled Event and time]

15

Recent Measurements of large multi-file replication (GridFTP transfer time)

16

Recent Measurements of large multi-file replication (Archiving time)

17

Recent Measurements of large multi-file replication

[Plot: axes labeled Event and time]

18

Recent Measurements of large multi-file replication (GridFTP transfer time)

19

Recent Measurements of large multi-file replication (Archiving time)

20

File Replication from ORNL HPSS to NERSC HPSS, controlled at NCAR

Events:

- 0 Request_Arrive_at_LBNL

- 1 Request_Arrived_at_ORNL

- 2 Staging_requested_at_ORNL

- 3 Staging_started_ORNL

- 4 Staging_finished_ORNL

- 5 Callback_from_ORNL_to_LBNL

- 6 GridFTP_Start_by_LBNL

- 7 Transfered_from_ORNL_to_LBNL

- 8 Migration_Requested

- 9 Migration_Started

- 10 Migration_Finished

- 11 Notified_Client

Setup:

- HRM Client on NCAR

- HRM on LBNL and ORNL

- Max Concurrent PFTPs: 5

- Cache Size at LBNL: 30G

- Cache Size at ORNL: 25G

- Max Concurrent Pinned File: 5

- Max Concurrent GridFTP: 3

- GridFTP p: 4

- GridFTP bs: 1000000
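One way to read the event list is as a per-file timeline, where the time spent in each phase is the difference between two event timestamps. The Python sketch below uses the event names from the slide, but the timestamps are invented purely for illustration and are not measurements from the talk; the function name `interval` is likewise hypothetical.

```python
# Event names as listed on the slide, indexed 0 through 11.
EVENTS = [
    "Request_Arrive_at_LBNL",
    "Request_Arrived_at_ORNL",
    "Staging_requested_at_ORNL",
    "Staging_started_ORNL",
    "Staging_finished_ORNL",
    "Callback_from_ORNL_to_LBNL",
    "GridFTP_Start_by_LBNL",
    "Transfered_from_ORNL_to_LBNL",
    "Migration_Requested",
    "Migration_Started",
    "Migration_Finished",
    "Notified_Client",
]


def interval(timestamps, start_event, end_event):
    """Seconds elapsed between two named events for one file."""
    start = timestamps[EVENTS.index(start_event)]
    end = timestamps[EVENTS.index(end_event)]
    return end - start


# Made-up timestamps in seconds, one per event, for a single file.
example = [0, 1, 2, 10, 40, 41, 42, 100, 101, 105, 160, 161]

staging = interval(example, "Staging_started_ORNL", "Staging_finished_ORNL")
transfer = interval(example, "GridFTP_Start_by_LBNL", "Transfered_from_ORNL_to_LBNL")
archiving = interval(example, "Migration_Started", "Migration_Finished")
total = interval(example, "Request_Arrive_at_LBNL", "Notified_Client")
```

Mapping the GridFTP transfer time and archiving time plots onto events 6 to 7 and 9 to 10 respectively is an assumption based on the event names; the slides do not state which event pairs the plots use.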