Using Sketches to Estimate Associations - PowerPoint PPT Presentation


1

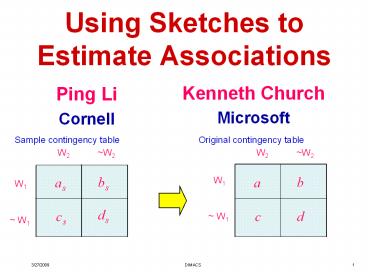

Using Sketches to Estimate Associations

Kenneth Church (Microsoft)

Ping Li (Cornell)

[Figure: original contingency table (cells a, b, c, d) and sample contingency table (cells as, bs, cs, ds); rows W1 / not W1, columns W2 / not W2.]

2

On Delivering Embarrassingly Distributed Cloud Services

Hotnets-2008

Board

- Ken Church

- Albert Greenberg

- James Hamilton

- {church, albert, jamesrh}@microsoft.com

Affordable

1B

2M


3

Containers: Disruptive Technology

- Implications for Shipping

- New Ships, Ports, Unions

- Implications for Hotnets

- New Data Center Designs

- Power/Networking Trade-offs

- Cost Models: Expense vs. Capital

- Apps: Embarrassingly Distributed

- Restriction on Embarrassingly Parallel

- Machine Models

- Distributed Parallel Cluster ≠ Parallel Cluster

4

Mega vs. Micro Data Centers

| POPs | Cores/POP | Hardware/POP | Co-located With/Near |
|---|---|---|---|
| 1 | 1,000,000 | 1000 containers | Mega Data Center |
| 10 | 100,000 | 100 containers | Mega Data Center |
| 100 | 10,000 | 10 containers | Fiber Hotel/Power Substation |
| 1,000 | 1,000 | 1 container | Fiber Hotel/Power Substation |
| 10,000 | 100 | 1 rack | Central Office |
| 100,000 | 10 | 1 mini-tower | P2P |
| 1,000,000 | 1 | embedded | P2P |

5

Related Work

- http://en.wikipedia.org/wiki/Data_center

- A data center can occupy one room of a building

- Servers differ greatly in size from 1U servers to large silos

- Very large data centers may use shipping containers... [2]

220 containers in one PoP ≠ 220 in 220 PoPs

6

Embarrassingly Distributed Probes

- W1 & W2 are Shipping Containers

- Lots of bandwidth within a container

- But less across containers

- Limited Bandwidth → Sampling

[Figure: original contingency table (cells a, b, c, d) and sample contingency table (cells as, bs, cs, ds); rows W1 / not W1, columns W2 / not W2.]

7

1990

Strong 427M (Google)

Powerful 353M (Google)

Page Hits 1000x BNC freqs

8

Turney (and Know It All)

PMI + The Web: Better together

9

"It never pays to think until you've run out of data" (Eric Brill)

Moore's Law Constant: Data Collection Rates → Improvement Rates

Banko & Brill: Mitigating the Paucity-of-Data Problem (HLT 2001)

No consistently best learner

More data is better data!

Quoted out of context: "Fire everybody and spend the money on data"

10

Page Hits Estimates by MSN and Google (August 2005)

Larger corpora → Larger counts → More signal

| Query | Hits (MSN) | Hits (Google) |
|---|---|---|
| A | 2,452,759,266 | 3,160,000,000 |
| The | 2,304,929,841 | 3,360,000,000 |
| Kalevala | 159,937 | 214,000 |
| Griseofulvin | 105,326 | 149,000 |
| Saccade | 38,202 | 147,000 |

Rows run from more frequent (top) to less frequent (bottom).

11

Caution: Estimates ≠ Actuals

| Query | Hits (MSN) | Hits (Google) |
|---|---|---|
| America | 150,731,182 | 393,000,000 |
| America China | 15,240,116 | 66,000,000 |
| America China Britain | 235,111 | 6,090,000 |
| America China Britain Japan | 154,444 | 23,300,000 |

These are just (quick-and-dirty) estimates (not actuals)
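One way to see why these figures must be estimates: if the queries are conjunctive (an assumption here, not stated on the slide), adding a word can only shrink the set of matching pages, so true counts cannot grow as terms are added. The sketch below checks the Google column for such violations; the function name is hypothetical.

```python
def monotonicity_violations(hits):
    """Return (shorter_query, longer_query) pairs where the longer query
    reports MORE hits than a query whose words it strictly contains."""
    violations = []
    for q1, h1 in hits.items():
        for q2, h2 in hits.items():
            # q1's words are a strict subset of q2's words, yet q2 has more hits
            if set(q1.split()) < set(q2.split()) and h2 > h1:
                violations.append((q1, q2))
    return violations

# Google column from the slide (August 2005 estimates)
google_hits = {
    "America": 393_000_000,
    "America China": 66_000_000,
    "America China Britain": 6_090_000,
    "America China Britain Japan": 23_300_000,
}

violations = monotonicity_violations(google_hits)
print(violations)
```

The four-word query reports more hits than the three-word query it extends, which true counts for conjunctive queries could not do.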

12

Rule of Thumb breaks down when there are strong interactions (Common for cases of most interest)

Query Planning (Governator)

| Order | Query | Hits (Google) |
|---|---|---|
| One-way | Austria | 88,200,000 |
| One-way | Governor | 37,300,000 |
| One-way | Schwarzenegger | 4,030,000 |
| One-way | Terminator | 3,480,000 |
| Two-way | Governor Schwarzenegger | 1,220,000 |
| Two-way | Governor Austria | 708,000 |
| Two-way | Schwarzenegger Terminator | 504,000 |
| Two-way | Terminator Austria | 171,000 |
| Two-way | Governor Terminator | 132,000 |
| Two-way | Schwarzenegger Austria | 120,000 |
| Three-way | Governor Schwarzenegger Terminator | 75,100 |
| Three-way | Governor Schwarzenegger Austria | 46,100 |
| Three-way | Schwarzenegger Terminator Austria | 16,000 |
| Three-way | Governor Terminator Austria | 11,500 |
| Four-way | Governor Schwarzenegger Terminator Austria | 6,930 |

Rule of Thumb

13

Associations (PMI, MI, Cos, R, Cor, ...): Summaries of Contingency Table

| | W2 | not W2 |
|---|---|---|
| W1 | a | b |
| not W1 | c | d |

a = # of documents that contain both Word W1 and Word W2

b = # of documents that contain Word W1 but not Word W2

Margins (aka doc freq)

- Need just one more constraint

- To compute table (& summaries)

- 4 parameters: a, b, c, d

- 3 constraints: f1, f2, D
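The counting argument above (four cells, three constraints) can be sketched in code. The example assumes the margins are f1 = a + b and f2 = a + c with D = a + b + c + d, and uses textbook definitions of PMI (log base 2), cosine and resemblance; the slide's own formulas did not survive extraction, so these definitions and the numbers are illustrative assumptions.

```python
import math

def table_from_margins(a, f1, f2, D):
    """Given the one free cell a and the three constraints f1, f2, D,
    recover the remaining cells b, c, d."""
    b = f1 - a          # W1 but not W2
    c = f2 - a          # W2 but not W1
    d = D - a - b - c   # neither word
    return a, b, c, d

def associations(a, f1, f2, D):
    """A few association measures as functions of a and the margins
    (standard definitions, assumed rather than taken from the slide)."""
    return {
        "PMI": math.log2(a * D / (f1 * f2)),
        "Cos": a / math.sqrt(f1 * f2),
        "R": a / (f1 + f2 - a),   # resemblance (Jaccard)
    }

# Illustrative numbers only
a, b, c, d = table_from_margins(a=5, f1=12, f2=12, D=36)
print((a, b, c, d), associations(5, 12, 12, 36))
```

Once the margins are known, every summary is a function of the single unknown a, which is why estimating a is the whole problem.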

14

Postings → Margins (and more)

(Postings aka Inverted File)

Postings(w): A sorted list of doc IDs for w

PIG: 13 25 33

Doc 13: This pig is so cute

Doc 25: saw a flying pig

Doc 33: was raining pigs and eggs

Assume doc IDs are random
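A minimal sketch of how postings yield margins, using the three documents on this slide. The `LEMMAS` table is a hypothetical stand-in for whatever stemming maps "pigs" to "pig"; the slide does not say how that is done.

```python
from collections import defaultdict

# Toy stand-in for stemming (an assumption for this example)
LEMMAS = {"pigs": "pig", "eggs": "egg"}

def build_postings(docs):
    """Map each word to a sorted list of the doc IDs that contain it."""
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            postings[LEMMAS.get(token, token)].add(doc_id)
    return {word: sorted(ids) for word, ids in postings.items()}

docs = {
    13: "This pig is so cute",
    25: "saw a flying pig",
    33: "was raining pigs and eggs",
}
postings = build_postings(docs)
print(postings["pig"])        # sorted doc IDs for "pig"
print(len(postings["pig"]))   # the margin (doc freq) is the list length
```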

15

Conventional Random Sampling (Over Documents)

[Figure: original contingency table (cells a, b, c, d) and sample contingency table (cells as, bs, cs, ds); rows W1 / not W1, columns W2 / not W2.]

Margin-Free Baseline

Sample Size

16

Random Sampling

- Over documents

- Simple well understood

- But problematic for rare events

- Over postings

- where f = |P| (P = postings, aka inverted file)

- aka doc freq or margin

Undesirable

17

Sketches >> Random Samples

[Figure: sample contingency table (cells as, bs, cs, ds); rows W1 / not W1, columns W2 / not W2.]

Best

Undesirable

Better

18

Outline

- Review random sampling

- and introduce a running example

- Sample: Sketches

- A generalization of Broder's Original Method

- Sketches

- Advantages: Larger as than random sampling

- Disadvantages: Estimation is more challenging

- Estimation: Maximum Likelihood (MLE)

- Evaluation

19

Random Sampling over Documents

[Figure: sample contingency table (cells as, bs, cs, ds); rows W1 / not W1, columns W2 / not W2.]

- Doc IDs are random integers between 1 and D = 36

- Small circles → word W1

- Small squares → word W2

- Choose a sample size Ds = 18. Sampling rate Ds/D = 50%

- Construct sample contingency table

- as = |{4, 15}| = 2, bs = |{3, 7, 9, 10, 18}| = 5, cs = |{2, 5, 8}| = 3, ds = |{1, 6, 11, 12, 13, 14, 16, 17}| = 8

- Estimation: â = (D/Ds) · as

- But that doesn't take advantage of margins
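The running example can be reproduced in a few lines. This sketch uses the two postings lists given on the Proposed Sketches slide and, because doc IDs are assumed random, treats documents 1..Ds as the random sample; the function name is hypothetical.

```python
P1 = [3, 4, 7, 9, 10, 15, 18, 19, 24, 25, 28, 33]   # postings for W1
P2 = [2, 4, 5, 8, 15, 19, 21, 24, 27, 28, 31, 35]   # postings for W2
D, Ds = 36, 18

def sample_table(p1, p2, Ds):
    """Sample contingency table over documents 1..Ds."""
    s1 = {i for i in p1 if i <= Ds}
    s2 = {i for i in p2 if i <= Ds}
    a_s = len(s1 & s2)             # sampled docs with both words
    b_s = len(s1 - s2)             # W1 only
    c_s = len(s2 - s1)             # W2 only
    d_s = Ds - a_s - b_s - c_s     # neither word
    return a_s, b_s, c_s, d_s

a_s, b_s, c_s, d_s = sample_table(P1, P2, Ds)
a_hat = D / Ds * a_s               # margin-free estimate of a
true_a = len(set(P1) & set(P2))    # available here only because the example is tiny
print((a_s, b_s, c_s, d_s), a_hat, true_a)
```

The estimate scales as by the inverse sampling rate and never looks at the margins f1 = |P1| and f2 = |P2|.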

20

Proposed Sketches

Sketch = Front of Postings

Postings

P1: 3 4 7 9 10 15 18 19 24 25 28 33

P2: 2 4 5 8 15 19 21 24 27 28 31 35

Throw out red

Choose sample size: Ds = min(18, 21) = 18

as = |{4, 15}| = 2

bs = 7 - as = 5

cs = 5 - as = 3

ds = Ds - as - bs - cs = 8

Based on blue - red

[Figure: sample contingency table (cells as, bs, cs, ds); rows W1 / not W1, columns W2 / not W2.]
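The construction on this slide can be sketched as follows. The sketch sizes k1 = k2 = 7 are inferred from the slide's numbers (largest sketch entries 18 and 21) rather than stated, and the reading that the discarded "red" entries are sketch IDs above Ds is likewise an assumption; the function name is hypothetical.

```python
P1 = [3, 4, 7, 9, 10, 15, 18, 19, 24, 25, 28, 33]
P2 = [2, 4, 5, 8, 15, 19, 21, 24, 27, 28, 31, 35]

def sketch_sample(p1, p2, k1, k2):
    """Build sketches as the front of each postings list, then derive the
    sample contingency table over documents 1..Ds."""
    K1, K2 = sorted(p1)[:k1], sorted(p2)[:k2]
    Ds = min(K1[-1], K2[-1])            # largest ID both sketches cover
    s1 = {i for i in K1 if i <= Ds}     # discard sketch entries beyond Ds
    s2 = {i for i in K2 if i <= Ds}
    a_s = len(s1 & s2)
    b_s = len(s1) - a_s
    c_s = len(s2) - a_s
    d_s = Ds - a_s - b_s - c_s
    return Ds, (a_s, b_s, c_s, d_s)

Ds, table = sketch_sample(P1, P2, k1=7, k2=7)
print(Ds, table)
```

Only 14 stored doc IDs are needed to recover the same sample table that random sampling over 18 documents produced.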

21

Estimation Maximum Likelihood (MLE)

When we know the margins, we ought to use them

- Consider all possible contingency tables

- a, b, c, d

- Select the table that maximizes the probability of observations

- as, bs, cs, ds

22

Exact MLE

First derivative of log likelihood

gives the MLE solution

23

Exact MLE

Second derivative

PMF updating formula

24

Exact MLE

MLE solution

25

An Approximate MLE

Suppose we were sampling from the two inverted

files directly and independently.
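One way to write this assumption down, offered as a sketch and not as the slide's own (lost) formula: treat the as + bs sampled IDs from the first list and the as + cs sampled IDs from the second list as independent binomial draws, with f1 and f2 the margins.

```latex
\Pr(a_s, b_s, c_s \mid a) \;\propto\;
  \left(\frac{a}{f_1}\right)^{a_s}\left(1-\frac{a}{f_1}\right)^{b_s}
  \left(\frac{a}{f_2}\right)^{a_s}\left(1-\frac{a}{f_2}\right)^{c_s}

\frac{\partial}{\partial a}\log\Pr
  \;=\; \frac{2a_s}{a} - \frac{b_s}{f_1-a} - \frac{c_s}{f_2-a} \;=\; 0
```

Clearing denominators turns the second line into a quadratic in a, which is why a closed-form solution exists under this approximation.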

26

An Approximate MLE: Convenient Closed-Form Solution

- Convenient Closed-Form

- Surprisingly accurate

- Recommended

Take log of both sides; set derivative = 0
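A sketch of what the closed form can look like, derived here under the assumption that the two postings lists are sampled independently with binomial counts, so the log-likelihood is 2·as·log a + bs·log(f1 - a) + cs·log(f2 - a) up to a constant. Setting its derivative to zero gives a quadratic in a, and the smaller root is the estimate. The slide's own formula did not survive extraction, so treat this as a reconstruction; the function name is hypothetical.

```python
import math

def approx_mle(a_s, b_s, c_s, f1, f2):
    """Smaller root of
    (2as + bs + cs) a^2 - [f1(2as + cs) + f2(2as + bs)] a + 2 as f1 f2 = 0.
    Assumes a_s + b_s + c_s > 0."""
    x = f1 * (2 * a_s + c_s)
    y = f2 * (2 * a_s + b_s)
    disc = (x - y) ** 2 + 4 * f1 * f2 * b_s * c_s
    return (x + y - math.sqrt(disc)) / (2 * (2 * a_s + b_s + c_s))

# Running example: as = 2, bs = 5, cs = 3, margins f1 = f2 = 12
a_hat = approx_mle(2, 5, 3, 12, 12)
print(a_hat)
```

On the running example this returns 4.0, the same value as the margin-free estimate; with f1 = f2 and a 50% sample the two happen to coincide here, so the example shows only the mechanics, not the variance advantage.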

27

Evaluation

Independence Baseline

Margin-Free Baseline

When we know the margins, we ought to use them

Proposed

Best

28

Theoretical Evaluation

- Not surprisingly, there is a trade-off between

- Computational work: space, time

- Statistical accuracy: variance, error

- Formulas state trade-off precisely in terms of sampling rate Ds/D

- Theoretical evaluation

- Proposed MLE is better than Margin-Free baseline

- Confirms empirical evaluation

29

How many samples are enough?

Sampling rate to achieve cv = SE/a < 0.5

Larger D → Smaller sampling rate

Cluster of 10k machines → A single machine
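To illustrate why a larger D permits a smaller sampling rate, the sketch below uses the simpler margin-free estimator with a binomial approximation, not the MLE variance formulas plotted on the slide. Under that assumption Var(â) = (D/Ds)·a·(1 - a/D), so cv² = (D/Ds)·(1 - a/D)/a, and the required rate follows directly. The function name is hypothetical.

```python
def min_sampling_rate(a, D, cv_target=0.5):
    """Smallest Ds/D such that cv = SE/a <= cv_target for the margin-free
    estimator (D/Ds)*as under a binomial approximation (an assumption)."""
    rate = (1 - a / D) / (a * cv_target ** 2)
    return min(1.0, rate)

# Hold the co-occurrence proportion a/D fixed at 0.1% and grow the collection
for D in (10**4, 10**6, 10**8):
    a = D // 1000
    print(D, min_sampling_rate(a, D))
```

With the proportion a/D fixed, the count a grows with D, so the same relative accuracy is reached with a proportionally smaller sample.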

30

Broder's Sketch: Original & Minwise

Estimate Resemblance (R)

- Notation

- Words: w1, w2

- Postings: P1, P2 (sets of doc IDs)

- Resemblance: R

- Random permutation: π

- Minwise Sketch

- Permute doc IDs k times: π1, ..., πk

- For each πi, let mini(P) be the smallest doc ID in πi(P)

- Original Sketch

- Sketches: K1, K2 (sets of doc IDs; front of postings)

- Permute doc IDs once: π

- Let K = firstk(P) be the first k doc IDs in π(P)

Throw out half
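Both estimators can be sketched briefly. The code follows the standard textbook forms of Broder's methods (fraction of matching minima for minwise; the first k IDs of K1 ∪ K2 for the original sketch), which are assumed here because the slide's formulas were lost. Doc IDs are taken to be already random, so the single permutation in the original sketch is skipped; function names are hypothetical.

```python
import random

def minwise_resemblance(p1, p2, num_docs, k, seed=0):
    """Estimate R as the fraction of k random permutations under which
    P1 and P2 share the same minimum element."""
    rng = random.Random(seed)
    ids = list(range(1, num_docs + 1))
    matches = 0
    for _ in range(k):
        perm = ids[:]
        rng.shuffle(perm)
        rank = {doc: r for r, doc in enumerate(perm)}
        if min(p1, key=rank.__getitem__) == min(p2, key=rank.__getitem__):
            matches += 1
    return matches / k

def original_sketch_resemblance(p1, p2, k):
    """Estimate R from the first k IDs of each list, keeping only the
    first k IDs of K1 | K2 (assumes that union has at least k IDs)."""
    k1, k2 = set(sorted(p1)[:k]), set(sorted(p2)[:k])
    front = set(sorted(k1 | k2)[:k])   # the rest of the sketches is discarded
    return len(front & k1 & k2) / k

P1 = [3, 4, 7, 9, 10, 15, 18, 19, 24, 25, 28, 33]
P2 = [2, 4, 5, 8, 15, 19, 21, 24, 27, 28, 31, 35]
exact_r = len(set(P1) & set(P2)) / len(set(P1) | set(P2))
print(exact_r, original_sketch_resemblance(P1, P2, 7),
      minwise_resemblance(P1, P2, num_docs=36, k=200))
```

In the original sketch, only k of the up to 2k stored IDs contribute to the estimate, which is the sense in which roughly half the sample is thrown out.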

31

Multi-way Associations Evaluation

MSE relative improvement over margin-free baseline

When we know the margins, we ought to use them

Gains are larger for 2-way than multi-way

32

Conclusions (1 of 2)

When we know the margins, we ought to use them

- Estimating Contingency Tables

- Fundamental Problem

- Practical app

- Estimating Page Hits for two or more words (Governator)

- Know It All: Estimating Mutual Information from Page Hits

- Baselines

- Independence: Ignore interactions (Awful)

- Margin-Free: Ignore postings (Wasteful)

- (2x) Broder's Sketch (WWW 97, STOC 98, STOC 2002)

- Throws out half the sample

- (10x) Random Projections (ACL2005, STOC2002)

- Proposed Method

- Sampling: like Broder's Sketch, but throws out less

- Larger as than random sampling

- Estimation: MLE (Maximum Likelihood)

- MF: Estimation is easy without margin constraints

- MLE: Find most likely contingency table,

- Given observations: as, bs, cs, ds

33

Conclusions (2 of 2)

Rising Tide of Data Lifts All Boats

If you have a lot of data, then you don't need a lot of methodology

- Recommended Approximation

- Trade-off between

- Computational work (space and time) and

- Statistical accuracy (variance and errors)

- Derived formulas for variance

- Showing how trade-off depends on sampling rate

- At Web scales, sampling rate (Ds/D) ≈ 10^-4

- A cluster of 10k machines → A single machine

34

Backup

35

Comparison with Broder's Algorithm

Broder's Method has larger variance (2x) because it uses only half the sketch

Var(R_MLE) << Var(R_B)

Equal samples

Proportional samples

36

Comparison with Broder's Algorithm

Ratio of variances (equal samples)

Var(R_MLE) << Var(R_B)

37

Comparison with Broder's Algorithm

Ratio of variances (proportional samples)

Var(R_MLE) << Var(R_B)

38

Comparison with Broder's Algorithm: Estimation of Resemblance

Broder's method throws out half the samples → 50% improvement

39

Comparison with Random Projections: Estimation of Angle

Huge Improvement

40

Comparison with Random Projections: 10x Improvement