Outline PowerPoint PPT Presentation

Title: Outline

1

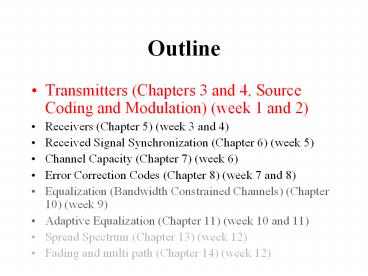

Outline

- Transmitters (Chapters 3 and 4, Source Coding and Modulation) (weeks 1 and 2)

- Receivers (Chapter 5) (weeks 3 and 4)

- Received Signal Synchronization (Chapter 6) (week 5)

- Channel Capacity (Chapter 7) (week 6)

- Error Correction Codes (Chapter 8) (weeks 7 and 8)

- Equalization (Bandwidth Constrained Channels) (Chapter 10) (week 9)

- Adaptive Equalization (Chapter 11) (weeks 10 and 11)

- Spread Spectrum (Chapter 13) (week 12)

- Fading and Multipath (Chapter 14) (week 12)

Transmitters (weeks 1 and 2)

- Information Measures

- Vector Quantization

- Delta Modulation

- QAM

Digital Communication System

[Figure: block diagram of a digital communication system from Transmitter to Receiver; the transmitter stages increase information per bit, bandwidth efficiency, and noise immunity]

Transmitter Topics

- Increasing information per bit

- Increasing noise immunity

- Increasing bandwidth efficiency

Increasing Information per Bit

- Information in a source

- Mathematical Models of Sources

- Information Measures

- Compressing information

- Huffman encoding

- Optimal Compression?

- Lempel-Ziv-Welch Algorithm

- Practical Compression

- Quantization of analog data

- Scalar Quantization

- Vector Quantization

- Model Based Coding

- Practical Quantization

- μ-law encoding

- Delta Modulation

- Linear Predictive Coding (LPC)

Increasing Noise Immunity

- Coding (Chapter 8, weeks 7 and 8)

Increasing Bandwidth Efficiency

- Modulation of digital data into analog waveforms

- Impact of Modulation on Bandwidth Efficiency

Mathematical Models of Sources

- Discrete Sources

  - Discrete Memoryless Source (DMS)

    - Statistically independent letters from a finite alphabet

  - Stationary Source

    - Statistically dependent letters, but joint probabilities of sequences of equal length remain constant

- Analog Sources

  - Band limited: $|f| < W$

  - Equivalent to a discrete source sampled at the Nyquist rate $2W$, but with an infinite (continuous) alphabet

Discrete Sources

Discrete Memoryless Source (DMS)

- Statistically independent letters from a finite alphabet

e.g., a normal binary data stream X might be a series of random events of either X = 1 or X = 0, with $P(X = 1) = \text{constant} = 1 - P(X = 0)$; e.g., well-compressed data, digital noise
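As a rough sketch, a binary DMS can be simulated by drawing every letter independently with the same fixed $P(X = 1)$. The function name `binary_dms`, the value $P(X = 1) = 0.3$, and the seed are illustrative assumptions, not from the slides.

```python
import random

def binary_dms(n: int, p_one: float, seed: int = 0) -> list[int]:
    """Draw n letters from a hypothetical binary discrete memoryless source.

    Each letter is 1 with probability p_one and 0 otherwise, independently
    of every other letter (the 'memoryless' property).
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    return [1 if rng.random() < p_one else 0 for _ in range(n)]

letters = binary_dms(100_000, p_one=0.3)
freq_one = sum(letters) / len(letters)
# The empirical frequency of 1s approaches P(X = 1) = 0.3,
# and the frequency of 0s approaches 1 - P(X = 1) = 0.7.
print(f"P(X=1) ~ {freq_one:.3f}, P(X=0) ~ {1 - freq_one:.3f}")
```

Because the letters are independent and identically distributed, the only parameter needed to describe this source is $P(X = 1)$.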

Stationary Source

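- Statistically dependent letters, but joint probabilities of sequences of equal length remain constant

e.g., the probability that $a_i a_{i+1} a_{i+2} a_{i+3} = 1001$ when $a_j a_{j+1} a_{j+2} a_{j+3} = 1010$ is always the same, i.e., it does not change when $i$ and $j$ are shifted by the same amount. A useful approximation for uncoded text.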
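One simple model of a stationary source with dependent letters (chosen here for illustration; the slides do not name it) is a two-state Markov chain started in its stationary distribution. The transition probabilities below are made-up assumptions. The sketch computes $P(a_t a_{t+1} a_{t+2} a_{t+3} = 1001)$ exactly for several starting positions $t$ and shows it does not depend on $t$, while a chain started from a non-stationary distribution loses that property.

```python
# Hypothetical transition probabilities: P[a][b] = P(next letter = b | current letter = a).
P = [[0.9, 0.1],
     [0.4, 0.6]]

# Stationary distribution of a two-state chain: pi[1] = P[0][1] / (P[0][1] + P[1][0]).
pi1 = P[0][1] / (P[0][1] + P[1][0])
pi = [1.0 - pi1, pi1]

def letter_distribution(t: int, start: list[float]) -> list[float]:
    """Distribution of the letter at position t, propagated from `start` at t = 0."""
    dist = list(start)
    for _ in range(t):
        dist = [dist[0] * P[0][0] + dist[1] * P[1][0],
                dist[0] * P[0][1] + dist[1] * P[1][1]]
    return dist

def block_probability(block: str, t: int, start: list[float]) -> float:
    """P(a_t a_{t+1} ... equals `block`) when the letter at t = 0 has distribution `start`."""
    letters = [int(c) for c in block]
    prob = letter_distribution(t, start)[letters[0]]
    for a, b in zip(letters, letters[1:]):  # multiply the one-step transition probabilities
        prob *= P[a][b]
    return prob

# Started in the stationary distribution: the same value at every position.
for t in (0, 5, 17):
    print(t, block_probability("1001", t, pi))

# Started always at 0 (not stationary): the block probability depends on t.
print(block_probability("1001", 0, [1.0, 0.0]), block_probability("1001", 17, [1.0, 0.0]))
```

The letters are clearly dependent (a 0 is followed by another 0 with probability 0.9), yet the joint probabilities of equal-length sequences stay constant along the stream, which is exactly the stationarity property on this slide.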

Analog Sources

- Band limited: $|f| < W$

- Equivalent to a discrete source sampled at the Nyquist rate $2W$, but with an infinite (continuous) alphabet
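The equivalence rests on the sampling theorem: a signal with no content at or above $W$ Hz is determined by samples taken $2W$ times per second. The sketch below assumes a test signal made of 3 Hz and 7 Hz tones with $W = 10$ Hz, samples it at $2W$, and rebuilds an off-grid value by sinc (Whittaker-Shannon) interpolation. The sum is truncated to a finite number of samples, so the match is close but not exact. The sample values themselves are real numbers, which is why the equivalent discrete source has a continuous alphabet.

```python
import math

W = 10.0        # assumed bandwidth in Hz (all signal content lies below W)
FS = 2 * W      # Nyquist sampling rate: 2W samples per second
T = 1 / FS      # sampling interval in seconds

def x(t: float) -> float:
    """Hypothetical band-limited test signal with tones at 3 Hz and 7 Hz (both < W)."""
    return math.sin(2 * math.pi * 3 * t) + 0.5 * math.cos(2 * math.pi * 7 * t)

def sinc(u: float) -> float:
    """Normalized sinc: sin(pi u) / (pi u), with sinc(0) = 1."""
    return 1.0 if u == 0 else math.sin(math.pi * u) / (math.pi * u)

def reconstruct(t: float, n_max: int = 4000) -> float:
    """Whittaker-Shannon interpolation using only the samples x(nT) for |n| <= n_max."""
    return sum(x(n * T) * sinc((t - n * T) / T) for n in range(-n_max, n_max + 1))

t0 = 0.123  # a time that falls between sampling instants
print(f"true value {x(t0):.5f}, rebuilt from 2W samples {reconstruct(t0):.5f}")
```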

Information in a DMS letter

- If an event X denotes the arrival of a letter $x_i$ with probability $P(X = x_i) = P(x_i)$, the information contained in the event is defined as

$I(X = x_i) = I(x_i) = -\log_2 P(x_i)$ bits

[Figure: plot of $I(x_i)$ versus $P(x_i)$]
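The definition translates directly into code. The helper name `self_information` is an illustrative assumption; returning `math.inf` for $P(x_i) = 0$ mirrors the $-\log_2(0)$ case discussed in the examples, and $P(x_i) = 1$ returns exactly 0 bits.

```python
import math

def self_information(p: float) -> float:
    """Information I(x_i) = -log2(P(x_i)) in bits for a letter with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if p == 0.0:
        return math.inf  # an impossible letter would carry unbounded information
    return -math.log2(p) if p < 1.0 else 0.0  # a certain letter carries no information

for p in (1.0, 0.5, 0.25, 1 / 256):
    print(f"P = {p:<10g} I = {self_information(p):g} bits")
```

Halving a letter's probability adds exactly one bit of information, since $-\log_2(p/2) = -\log_2 p + 1$.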

Examples

- e.g., an event X generates a random letter of value 1 or 0 with equal probability, $P(X = 0) = P(X = 1) = 0.5$; then $I(X) = -\log_2(0.5) = 1$, or 1 bit of information each time X occurs.

- e.g., if X is always 1, then $P(X = 0) = 0$ and $P(X = 1) = 1$; then $I(X = 0) = -\log_2(0) = \infty$ and $I(X = 1) = -\log_2(1) = 0$.

Discussion

- $I(X = 1) = -\log_2(1) = 0$ means no information is delivered by X, which is consistent with X = 1 all the time.

- $I(X = 0) = -\log_2(0) = \infty$ means that if X = 0, a huge amount of information arrives; however, since $P(X = 0) = 0$, this never happens.

Average Information

- To help deal with $I(X = 0) = \infty$ when $P(X = 0) = 0$, we need to consider how much information actually arrives with the event over time.

- The average letter information for letter $x_i$ out of an alphabet of L letters, $i = 1, 2, 3, \ldots, L$, is

$I(x_i)P(x_i) = -P(x_i)\log_2 P(x_i)$
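A minimal sketch of the per-letter term $-P(x_i)\log_2 P(x_i)$. It uses the standard convention $0 \cdot \log_2 0 = 0$ (the limit as $P \to 0$), which is how weighting by probability removes the trouble with the infinite $I(X = 0)$; the function name is an assumption.

```python
import math

def average_letter_information(p: float) -> float:
    """Average information -P(x_i) * log2(P(x_i)) contributed by one letter, in bits.

    Uses the limit p * log2(p) -> 0 as p -> 0, so an impossible letter contributes 0 bits.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    if p == 0.0 or p == 1.0:
        return 0.0  # impossible or certain letters add no average information
    return -p * math.log2(p)

# P(X=0) = 0 no longer causes trouble: I(X=0) is infinite, but it is weighted by 0.
for p in (0.0, 0.01, 0.5, 0.99, 1.0):
    print(f"P = {p:<5} -P log2 P = {average_letter_information(p):.4f} bits")
```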

Average Information

- Plotting this for two symbols (1, 0), we see that on average at most a little more than 0.5 bits of information arrive with a particular letter (the peak is about 0.53 bits, at $P(x_i) = 1/e$), and that low- and high-probability letters generally carry little information.
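To check the "a little more than 0.5 bits" reading of the plot, the sketch below scans $-p\log_2 p$ on a fine grid (the grid step of $10^{-5}$ is an arbitrary choice). Setting the derivative $-(\log_2 p + 1/\ln 2)$ to zero gives the exact peak at $p = 1/e$, where the value is $1/(e \ln 2) \approx 0.531$ bits.

```python
import math

def letter_term(p: float) -> float:
    """-p * log2(p) in bits, taking the limit 0 at p = 0."""
    return 0.0 if p == 0.0 else -p * math.log2(p)

# Scan p over a grid of step 1e-5 on [0, 1] and keep the largest value.
grid = [k / 100_000 for k in range(100_001)]
p_best = max(grid, key=letter_term)
peak = letter_term(p_best)

# Closed form: d/dp(-p log2 p) = -(log2 p + 1/ln 2) = 0  =>  ln p = -1  =>  p = 1/e.
p_exact = 1 / math.e
peak_exact = 1 / (math.e * math.log(2))
print(f"grid peak at p = {p_best:.4f} ({peak:.4f} bits); exact p = {p_exact:.4f} ({peak_exact:.4f} bits)")
```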

Average Information (Entropy)

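- Now let's consider the average information of the event X made up of the random arrival of all the letters $x_i$ in the alphabet.

- This is the sum of the average information arriving with each letter, called the entropy:

$H(X) = \sum_{i=1}^{L} P(x_i) I(x_i) = -\sum_{i=1}^{L} P(x_i)\log_2 P(x_i)$ bits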
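A minimal sketch of the entropy sum $H(X) = -\sum_i P(x_i)\log_2 P(x_i)$ over the whole alphabet, again treating $0 \cdot \log_2 0$ as 0. The function name and the example distributions are illustrative assumptions.

```python
import math

def entropy(probs: list[float]) -> float:
    """Entropy H(X) = -sum_i P(x_i) log2 P(x_i) in bits per letter (0 log 0 taken as 0)."""
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return sum(-p * math.log2(p) for p in probs if p > 0)

print(entropy([0.5, 0.5]))         # fair binary source
print(entropy([1.0, 0.0]))         # X is always 1: no information
print(entropy([0.5, 0.25, 0.25]))  # three letters with unequal probabilities
print(entropy([0.25] * 4))         # four equally likely letters
```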

Average Information (Entropy)

- Plotting this for L = 2, we see that on average at most 1 bit of information is delivered per event, but only if both symbols arrive with equal probability.
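A sketch of the L = 2 case as the binary entropy function $H(p) = -p\log_2 p - (1-p)\log_2(1-p)$, with $p = P(X = 1)$. The name `binary_entropy` and the grid step of 0.001 are assumptions; the grid scan confirms that the maximum of 1 bit sits at $p = 0.5$.

```python
import math

def binary_entropy(p: float) -> float:
    """H(p) = -p log2 p - (1 - p) log2(1 - p) in bits, with 0 log 0 taken as 0."""
    return sum(-q * math.log2(q) for q in (p, 1.0 - p) if q > 0)

grid = [k / 1000 for k in range(1001)]
p_best = max(grid, key=binary_entropy)
print(f"max H = {binary_entropy(p_best):.4f} bits at P(X=1) = {p_best}")
print(f"H(0.1) = {binary_entropy(0.1):.4f} bits")  # a lopsided source delivers much less
```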

Average Information (Entropy)

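- What is the best possible entropy for a multi-symbol code?

$H(X) \le \log_2 L$, with equality only when all L letters are equally probable, $P(x_i) = 1/L$.

So multi-bit binary symbols made of equally probable random bits are the most efficient information carriers, i.e., using 256 symbols made from 8-bit bytes (8 bits of entropy per symbol) is OK from an information standpoint.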
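As a quick check of the multi-symbol case, the sketch below (function name and skewed distribution are assumptions) compares the entropy of 256 equally likely byte values with $\log_2 256 = 8$ bits, and with a skewed distribution over the same 256 symbols, which falls short of the bound.

```python
import math

def entropy(probs: list[float]) -> float:
    """Entropy in bits per symbol, with 0 log 0 taken as 0."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

L = 256
uniform = [1 / L] * L                          # every byte value equally likely
skewed = [0.5] + [0.5 / (L - 1)] * (L - 1)     # one byte value takes half the probability

print(entropy(uniform), math.log2(L))  # uniform bytes reach the log2 L bound
print(entropy(skewed))                 # unequal probabilities fall below 8 bits

# The bound log2 L holds with equality for any uniform alphabet size.
for size in (2, 4, 16, 256):
    print(size, entropy([1 / size] * size), math.log2(size))
```

Under these assumptions, only equally likely byte values carry a full 8 bits per byte, which is the sense in which 8-bit symbols are "OK from an information standpoint".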