Principal Component Analysis (PCA) - PowerPoint PPT Presentation

1 / 35

Title:

Principal Component Analysis (PCA)

Description:

In this setting, principle component analysis (PCA) finds the directions of the ... projects every point x onto the axes of the ellipsoid to give projection c. ... – PowerPoint PPT presentation

Number of Views:450

Avg rating:3.0/5.0

Title: Principal Component Analysis (PCA)

1

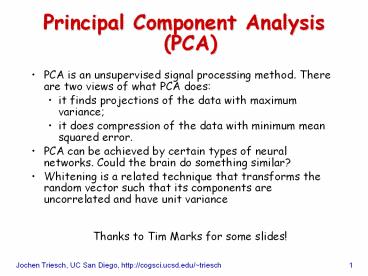

Principal Component Analysis(PCA)

- PCA is an unsupervised signal processing method.

There are two views of what PCA does - it finds projections of the data with maximum

variance - it does compression of the data with minimum mean

squared error. - PCA can be achieved by certain types of neural

networks. Could the brain do something similar? - Whitening is a related technique that transforms

the random vector such that its components are

uncorrelated and have unit variance

Thanks to Tim Marks for some slides!

2

Maximum Variance Projection

- Consider a random vector x with arbitrary joint

pdf - in PCA we assume that x has zero mean

- we can always achieve this by constructing xx-µ

- Scatter plot and projection y onto a weight

vector w - Motivating question y is a scalar random

variable that depends on w for which w is the

variance of y maximal?

3

- Example

- consider projecting x onto the red wr and blue wb

who happen to lie along the coordinate axes in

this case

- clearly, the projection onto wb has the higher

variance, but is there a w for which its even

higher?

4

- First, what about the mean (expected value) of y?

- so we have

- since x has zero mean, meaning that E(xi) 0

for all i. - This implies that the variance of y is just

- Note that this gets arbitrarily big if w gets

longer and longer, so from now on we restrict w

to have length 1, i.e. (wTw)1/21

5

- continuing with some linear algebra tricks

- where Cx is the correlation matrix (or

covariance matrix) of x. - We are looking for the vector w that maximizes

this expression - Linear algebra tells us that the desired vector w

is the eigenvector of Cx corresponding to the

largest eigenvalue

6

- Reminder Eigenvectors and Eigenvalues

- let A be an nxn (square) matrix. The n-component

vector v is an eigenvector of A with the

eigenvalue ? if - i.e. multiplying v by A only changes the

length by a factor ? but not the orientation. - Example

- for the scaled identity matrix is every vector is

an eigenvector with identical eigenvalue s

7

- Notes

- if v is an eigenvector of a matrix A then any

scaled version of v is an Eigenvector of A, too - if A is a correlation (or covariance) matrix,

then all the eigenvalues are real and

nonnegative the eigenvectors can be chosen to be

mutually orthonormal - (dik is the so-called Kronecker

delta) - also, if A is a correlation (or covariance)

matrix, then A is positive semidefinite

8

The PCA Solution

- The desired direction of maximum variance is the

direction of the unit-length eigenvector v1 of Cx

with the largest eigenvalue - Next, we want to find the direction whose

projection has the maximum variance among all

directions perpendicular to the first eigenvector

v1. The solution to this is the unit-length

eigenvector v2 of Cx with the 2nd highest

eigenvalue. - In general, the k-th direction whose projection

has the maximum variance among all directions

perpendicular to the first k-1 directions is

given by the k-th unit-length eigenvector of Cx.

The eigenvectors are ordered such that - We say the k-th principal component of x is given

by

9

- What are the variances of the PCA projetions?

- From above we have

- Now utilize that w is the k-th eigenvector of Cx

- where in the last step we have exploited that

vk has unit length. Thus, in words, the variance

of the k-th principal component is simply the

eigenvalue of the k-th eigenvector of the

correlation/covariance matrix.

10

- To summarize

- The eigenvectors v1, v2, , vn of the covariance

matrix have corresponding eigenvalues ?1 ?2

?n. They can be found with standard algorithms. - ?1 is the variance of the projection in the v1

direction, ?2 is the variance of the projection

in the v2 direction, and so on. - The largest eigenvalue ?1 corresponds to the

principal component with the greatest variance,

the next largest eigenvalue corresponds to the

principal component with the next greatest

variance, etc. - So, which eigenvector (green or red) corresponds

to the smaller eigenvalue in this example?

11

PCA for multinomial Gaussian

- We can think of the joint pdf of the multinomial

Gaussian in terms of the (hyper-) ellipsoidal

(hyper-) surfaces of constant values of the pdf. - In this setting, principle component analysis

(PCA) finds the directions of the axes of the

ellipsoid. - There are two ways to think about what PCA does

next - Projects every point perpendicularly onto the

axes of the ellipsoid. - Rotates the ellipsoid so its axes are parallel to

the coordinate axes, and translates the ellipsoid

so its center is at the origin.

12

Two views of what PCA does

- projects every point x onto the axes of the

ellipsoid to give projection c. - rotates space so that the ellipsoid lines up with

the coordinate axes, and translates space so the

ellipsoids center is at the origin.

13

Transformation matrix for PCA

- Let V be the matrix whose columns are the

eigenvectors of the covariance matrix, S. - Since the eigenvectors vi are all normalized to

have length 1 and are mutually orthogonal, the

matrix V is orthonormal (VV T I), i.e. it is a

rotation matrix - the inverse rotation transformation is given by

the transpose V T of matrix V

14

Transformation matrix for PCA

- PCA transforms the point x (original coordinates)

into the point c (new coordinates) by subtracting

the mean from x (z x m) and multiplying the

result by V T - this is a rotation because V T is an orthonormal

matrix - this is a projection of z onto PC axes, because

v1 z is projection of z onto PC1 axis, v2 z

is projection onto PC2 axis, etc.

15

- PCA expresses the mean-subtracted point, z x

m, as a weighted sum of the eigenvectors vi . To

see this, start with - multiply both sides of the equation from the left

by V - VV Tz Vc exploit that V is orthonormal

- z Vc i.e. we can easily

reconstruct z from c

16

PCA for data compression

- What if you wanted to transmit someones height

and weight, but you could only give a single

number? - Could give only height, x

- (uncertainty when height is known)

- Could give only weight, y

- (uncertainty when weight is known)

- Could give only c1, the value of first PC

- (uncertainty when first PC is known)

- Giving the first PC minimizes the squared error

of the result. - To compress n-dimensional data into k dimensions,

order the principal components in order of

largest-to-smallest eigenvalue, and only save the

first k components.

17

- Equivalent view To compress n-dimensional data

into kltn dimensions, use only the first k

eigenvectors. - PCA approximates the mean-subtracted point, z x

m, as a weighted sum of only the first k

eigenvectors - transforms the point x into the point c by

subtracting the mean z x m and multiplying

the result by V T

18

PCA vs. Discrimination

- variance maximization or compression are

different from discrimination! - consider this data coming stemming from two

classes? - which direction has max. variance, which

discriminates between the two clusters?

19

Application PCA on face images

- 120 ? 100 pixel grayscale 2D images of faces.

Each image is considered to be a single point in

face space (a single sample from a random

vector) - How many dimensions is the vector x ?

- n 120 100 12000

- Each dimension (each pixel) can take values

between 0 and 255 (8 bit grey scale images). - You can easily visualize points in this 12000

dimensional space!

20

The original images (12 out of 97)

21

The mean face

22

- Let x1, x2, , xp be the p sample images

(n-dimensional). - Find the mean image, m, and subtract it from each

image zi xi m - Let A be the matrix whose columns are the

mean-subtracted sample images. - Estimate the covariance matrix

What are the dimensions of S ?

23

The transpose trick

- The eigenvectors of S are the principal component

directions. - Problem

- S is a nxn (1200012000) matrix. Its too big for

us to find its eigenvectors numerically. In

addition, only the first p eigenvalues are

useful the rest will all be zero, because we

only have p samples. - Solution Use the following trick.

- Eigenvectors of S are eigenvectors of AAT but

instead of eigenvectors of AAT, the eigenvectors

of ATA are easy to find - What are the dimensions of ATA ? Its a pp

matrix its easy to find eigenvectors since p is

relatively small.

24

- We want the eigenvectors of AAT, but instead we

calculated the eigenvectors of ATA. Now what? - Let vi be an eigenvector of ATA, whose eigenvalue

is li

livi

(ATA)vi ?

- A (ATAvi) A(livi)

- AAT (Avi) li (Avi)

- Thus if vi is an eigenvector of ATA , Avi is

an eigenvector of AAT , with the same

eigenvalue. - Av1 , Av2 , , Avn are the eigenvectors of S.

u1 Av1 , u2 Av2 , , un Avn are called

eigenfaces.

25

Eigenfaces

- Eigenfaces (the principal component directions of

face space) provide a low-dimensional

representation of any face, which can be used

for - Face image compression and reconstruction

- Face recognition

- Facial expression recognition

26

The first 8 eigenfaces

27

PCA for low-dimensional face representation

- To approximate a face using only k dimensions,

order the eigenfaces in order of

largest-to-smallest eigenvalue, and only use the

first k eigenfaces. - PCA approximates a mean-subtracted face, z x

m, as a weighted sum of the first k eigenfaces

28

- To approximate the actual face we have to add the

mean face m to the reconstruction of z - The reconstructed face is simply the mean face

with some fraction of each eigenface added to it

29

The mean face

30

Approximating a face using the first 10

eigenfaces

31

Approximating a face using the first 20

eigenfaces

32

Approximating a face using the first 40

eigenfaces

33

Approximating a face using all 97 eigenfaces

34

- Notes

- not surprisingly, the approximation of the

reconstructed faces get better when more

eigenfaces are used - In the extreme case of using no eigenface at all,

the reconstruction of any face image is just the

average face - In the other extreme of using all 97 eigenfaces,

the reconstruction is exact, since the image was

in the original training set of 97 images. For a

new face image, however, its reconstruction based

on the 97 eigenfaces will only approximately

match the original. - What regularities does each eigenface capture?

See movie.

35

Eigenfaces in 3D

- Blanz and Vetter (SIGGRAPH 99). The video can be

found at - http//graphics.informatik.uni-freiburg.de/Sig

g99.html