
Title: Overview

1

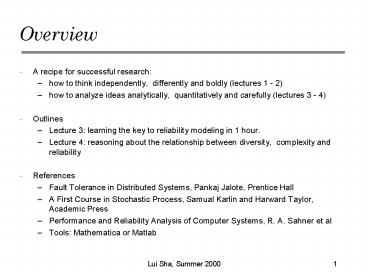

Overview

- A recipe for successful research
  - how to think independently, differently and boldly (lectures 1 - 2)
  - how to analyze ideas analytically, quantitatively and carefully (lectures 3 - 4)
- Outlines
  - Lecture 3: learning the key to reliability modeling in 1 hour.
  - Lecture 4: reasoning about the relationship between diversity, complexity and reliability
- References
  - Fault Tolerance in Distributed Systems, Pankaj Jalote, Prentice Hall
  - A First Course in Stochastic Processes, Samuel Karlin and Howard Taylor, Academic Press
  - Performance and Reliability Analysis of Computer Systems, R. A. Sahner et al.
- Tools: Mathematica or Matlab

2

Lecture 3

- Questions that we want to answer
  - which hardware system has a longer MTTF, a TMR system or a singleton computer with no replication and voting?
  - when you design your research web-site architecture, how do you know which alternative will give you higher availability and/or reliability?

3

Concept of Reliability

Reliability for a given mission duration t, R(t), is the probability of the system working as specified for a duration that is at least as long as t. The most commonly used reliability function is the exponential reliability function, $R(t) = e^{-\lambda t}$, where $\lambda$ is the failure rate.

The failure rate used here is the long-term average rate, e.g. 10 failures/year.
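As a small numeric illustration, the sketch below evaluates the standard exponential form R(t) = exp(-λt) using the slide's example rate of 10 failures/year. The one-week mission duration and the function name `reliability` are assumptions made for this example only.

```python
import math

def reliability(failure_rate: float, t: float) -> float:
    """Exponential reliability R(t) = exp(-failure_rate * t).

    failure_rate and t must use the same time unit (here: years).
    """
    return math.exp(-failure_rate * t)

# Example rate from the slide: a long-term average of 10 failures per year.
rate_per_year = 10.0
one_week = 1.0 / 52.0  # assumed mission duration, in years

r_week = reliability(rate_per_year, one_week)
print(f"R(1 week) = {r_week:.3f}")
```

Keeping the failure rate and the mission duration in the same time unit is the main thing to watch when reusing this.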

4

MTTF and Availability

- Mean time to failure (MTTF): on average, how long it takes before a failure occurs.
- Availability: the percentage of time a system is functioning
  - $\text{MTTF} / (\text{MTTF} + \text{MTTR})$ as $t \to \infty$
  - where MTTR is the mean time to repair, $1/\mu$. We also use an exponential model for repair time, where $\mu$ is the repair rate.
- Availability is meaningful to use only when __________________
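The steady-state availability ratio MTTF / (MTTF + MTTR) can be checked with a few lines of Python. This is only a sketch: the repair rate of 365 repairs/year (a one-day mean repair time) is a hypothetical value, and MTTF = 1/failure rate follows from the exponential model.

```python
def availability(mttf: float, mttr: float) -> float:
    """Long-run fraction of time the system is up: MTTF / (MTTF + MTTR)."""
    return mttf / (mttf + mttr)

failure_rate = 10.0   # failures per year (the earlier example rate)
repair_rate = 365.0   # repairs per year; hypothetical one-day mean repair

mttf = 1.0 / failure_rate  # mean time to failure under the exponential model
mttr = 1.0 / repair_rate   # mean time to repair under the exponential model

a = availability(mttf, mttr)
print(f"availability = {a:.4f}")
```

With exponential failure and repair times the same ratio can be written as repair_rate / (failure_rate + repair_rate).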

5

Simple Reliability Modeling

[Figure: block diagram of two components with reliabilities r1(t) and r2(t)]

6

Parallel System

When all the components have the same failure

rate, we have a simple expression (Mathematica

can do it for you symbolically)
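The slide's expression did not survive extraction. A minimal sketch of the usual textbook combinators follows, assuming independent component failures, a series system that needs every component, and a parallel system that needs at least one. The function names are illustrative.

```python
import math

def series(reliabilities):
    """Series system: works only if every component works."""
    return math.prod(reliabilities)

def parallel(reliabilities):
    """Parallel system: fails only if every component fails."""
    return 1.0 - math.prod(1.0 - r for r in reliabilities)

def parallel_identical(failure_rate: float, t: float, n: int) -> float:
    """n identical exponential components in parallel: 1 - (1 - e^(-rate*t))^n."""
    return 1.0 - (1.0 - math.exp(-failure_rate * t)) ** n

print(series([0.9, 0.8]))
print(parallel([0.9, 0.8]))
print(parallel_identical(1.0, 1.0, 3))
```

`parallel_identical` is the special case the slide refers to, where all components share one failure rate.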

7

Triple Modular Redundancy

[Figure: three replicated components, each with reliability r(t), feeding a voter V]

8

Singleton vs TMR with no Repair

[Figure: plot with two curves, labeled Curve 1 and Curve 2]

Which one is TMR? Why?
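One way to explore this question numerically is sketched below. It assumes independent exponential failures, a perfect voter, and no repair, so that TMR works when at least 2 of 3 replicas work. The rate, the integration horizon, and the helper names are choices made for this example.

```python
import math

def r_single(lam: float, t: float) -> float:
    """Reliability of one computer with failure rate lam."""
    return math.exp(-lam * t)

def r_tmr(lam: float, t: float) -> float:
    """TMR with a perfect voter: all 3 work, or exactly 2 of 3 work."""
    r = r_single(lam, t)
    return r**3 + 3 * r**2 * (1 - r)

def mttf(reliability, lam: float, horizon: float = 40.0, steps: int = 40000) -> float:
    """Approximate MTTF as the integral of R(t) over [0, horizon] (trapezoid rule)."""
    dt = horizon / steps
    total = 0.5 * (reliability(lam, 0.0) + reliability(lam, horizon))
    total += sum(reliability(lam, i * dt) for i in range(1, steps))
    return total * dt

lam = 1.0
crossover = math.log(2) / lam  # time at which r(t) = 0.5 and the curves meet
mttf_single = mttf(r_single, lam)
mttf_tmr = mttf(r_tmr, lam)

print(f"crossover time = {crossover:.3f}")
print(f"MTTF single = {mttf_single:.3f}, MTTF TMR = {mttf_tmr:.3f}")
```

Under these assumptions TMR is more reliable for missions shorter than ln(2)/λ and less reliable afterwards, while its MTTF, 5/(6λ), is shorter than the singleton's 1/λ.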

9

Majority Voting with repair

We did not model the reliability of the voter

in this model. What does this imply? What

should we do, if the reliability of the voter

needs to be modeled?

10

Key Ideas

- The key ideas in a Markov model are
  - The notion of a state
  - The transition probability depends only on the current state
- The state transition probability $P_{ij}(t)$ is the probability that a system which starts in state i is in state j after t units of time.
- For example, $P_{13}(t)$ is the probability that a system which starts in state 1 ends in the failure state, state 3, after t units of time. $1 - P_{13}(t)$ is the reliability. Why?

11

Solution to the Markov Model

The solution is the matrix exponential $P(t) = e^{At}$, where

- P(t) is the matrix of state transition probabilities $P_{ij}(t)$
- A is the matrix of failure rates and repair rates between states

12

The State Transition Rate Matrix, A.

[Figure: state transition diagram with State 1, State 2 and State 3]

- off-diagonal elements come from the state transition diagram

- each row sums to zero

13

A Mathematica Example

Each version's reliability is $e^{-t}$, i.e. $\lambda = 1$, and there is no repair.

A = {{-3, 3, 0}, {0, -2, 2}, {0, 0, 0}}

Simplify[MatrixExp[A t]]

$R(t) = 1 - P_{13}(t) = 3e^{-2t} - 2e^{-3t}$

Using basic probability theory, we know that the system works when all 3 or any 2 out of 3 versions are working. Hence $R(t) = P^3 + 3P^2(1 - P) = 3P^2 - 2P^3 = 3e^{-2t} - 2e^{-3t}$, where $P = e^{-t}$.
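For readers without Mathematica, the same check can be sketched in plain Python. The truncated Taylor series used for the matrix exponential here is a stand-in for `MatrixExp`, adequate only for small, well-scaled matrices like this one. The helper names `mat_mul` and `mat_exp` are hypothetical.

```python
import math

def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_exp(a, t, terms=60):
    """exp(a * t) by a truncated Taylor series: sum over k of (a*t)^k / k!."""
    n = len(a)
    scaled = [[a[i][j] * t for j in range(n)] for i in range(n)]
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms):
        # term now holds (a*t)^k / k!
        term = [[x / k for x in row] for row in mat_mul(term, scaled)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    return result

# Transition rate matrix from the slide (failure rate 1 per version, no repair).
A = [[-3.0, 3.0, 0.0],
     [0.0, -2.0, 2.0],
     [0.0, 0.0, 0.0]]

t = 1.0
P = mat_exp(A, t)
markov_reliability = 1.0 - P[0][2]  # 1 - P13(t)
closed_form = 3 * math.exp(-2 * t) - 2 * math.exp(-3 * t)

print(markov_reliability, closed_form)
```

The two printed values should agree to within floating-point error, mirroring the slide's comparison of the Markov result with the elementary probability argument.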

14

Summary of Modeling

- We started with a simple reliability model
- We reasoned about the reliability using elementary probability theory
- We examined the key ideas of Markov models and the solution methods
- We showed that once we draw the state transition diagram, powerful tools will automatically produce the solutions.
- The power of Markov models and math packages reduces reliability analysis to
  - draw the state transition diagrams
  - write down the transition rate matrix
  - call the matrix exponential function

15

Lecture 4

- Questions that we want to answer
  - what are the key ideas and intuitions that would allow us to attack the problem of software reliability?
  - how can we turn intuitive ideas into a logical system that we can reason about analytically?
  - how can we use our sharpened understanding to guide our software architecture designs?

16

From Ill-formed to Well-formed

- One of the most important skills in research is problem formulation: transforming an interesting idea/question into something that can be analyzed.
- To shed light on the questions that we raised in the last class, we need to
  - postulate a logical relation between the effort we spend on software engineering and the resulting reliability
  - ground this logical relationship in factual observation. But idealization is fine.

17

Assumptions Grounded in Observations

- 1) The more complex the software project is, the harder it is to make it reliable. For a given degree of complexity, the more effort that we can devote to software engineering, the higher the reliability.
- 2) The obvious errors are spotted and corrected early during the development. As time passes, the remaining errors are subtler, more difficult to detect and correct.
- 3) There is only a finite amount of effort (budget) that we can spend on any project.

18

From Assumptions to Model

- These observations suggest that, for a normalized mission duration $t = 1$, the reliability of a software system can be expressed as an exponential function of the software complexity, C, and the available development effort, E, in the form $R(E, C) = e^{-C/E}$. As we can see, R(E, C) rises as effort E increases and decreases as complexity C increases. There is, however, another way to model it.

19

Analysis

- For 3-version programming
  - the reliability of each version when efforts are equally allocated is $R = \exp(-C/(E/3))$
  - The system works if all 3 versions work or any 2 out of 3 work. Thus, the reliability of the system is $R_s = R^3 + 3R^2(1 - R)$. Note that this analysis assumes faults in different versions are independent, a favorable assumption.
- For recovery block with a perfect acceptance test (favorable assumption), if any version works the system works.
  - For the case of 3 alternatives without complexity reduction and with equal effort allocation, we have $R = \exp(-C/(E/3))$ and $R_s = 1 - (1 - R)^3$
  - if you divide the effort equally among 2 alternatives but one has only 0.5C complexity, then $R_1 = \exp(-C/(E/2))$ and $R_2 = \exp(-0.5C/(E/2))$, and the system reliability is $R_s = 1 - (1 - R_1)(1 - R_2)$.
- You can try out different effort allocation methods and different complexity reductions and see the results (plot them and try to find a qualitative pattern).
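To try out the allocations described above, a minimal Python sketch of the model follows. It assumes the normalized form R = exp(-C/E) with independent faults and a perfect acceptance test. The values of C and E and the function names are placeholders for experimentation, not values from the lecture.

```python
import math

def version_reliability(complexity: float, effort: float) -> float:
    """Reliability of one version: exp(-complexity / effort)."""
    return math.exp(-complexity / effort)

def three_version(complexity: float, effort: float) -> float:
    """3-version programming, effort split three ways, majority voting."""
    r = version_reliability(complexity, effort / 3)
    return r**3 + 3 * r**2 * (1 - r)

def recovery_block(reliabilities) -> float:
    """Recovery block with a perfect acceptance test: works if any alternative works."""
    return 1.0 - math.prod(1.0 - r for r in reliabilities)

C = 1.0  # hypothetical complexity
for E in (1.0, 2.0, 5.0, 10.0, 20.0):  # hypothetical effort budgets
    single = version_reliability(C, E)
    nvp = three_version(C, E)
    rb3 = recovery_block([version_reliability(C, E / 3)] * 3)
    rb2_simple = recovery_block([version_reliability(C, E / 2),
                                 version_reliability(0.5 * C, E / 2)])
    print(f"E={E:5.1f} single={single:.4f} 3-version={nvp:.4f} "
          f"RB3={rb3:.4f} RB2(0.5C)={rb2_simple:.4f}")
```

Printing a table over several effort levels makes it easier to see where each architecture starts to pay off, which is the qualitative pattern the exercise asks for.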

20

From Assumption to Model - 3

- Single version vs 3 version (equal allocation)

21

Single version vs Recovery Block

- Single version vs Recovery Block (3 alternatives,

equal allocation, no complexity reduction)

22

Degree of Diversity

- RBn, where n is the number of alternatives.

(n-way equal allocation, no complexity reduction)

Adding diversity to a system is kind of like adding salt to a bowl of soup. A little improves the taste. Too much is counter-productive.
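The salt analogy can be seen numerically with a short sketch of RBn under the model R = exp(-C/E): each of the n alternatives gets effort E/n, and the block works if any alternative works. The values C = 1 and E = 10 are hypothetical, as is the function name.

```python
import math

def recovery_block_n(complexity: float, effort: float, n: int) -> float:
    """RBn: n alternatives, equal effort split, no complexity reduction."""
    r = math.exp(-complexity / (effort / n))
    return 1.0 - (1.0 - r) ** n

C, E = 1.0, 10.0  # hypothetical complexity and effort budget
candidates = range(1, 31)
best_n = max(candidates, key=lambda n: recovery_block_n(C, E, n))

for n in sorted({1, 2, 3, best_n, 30}):
    print(f"n={n:2d} reliability={recovery_block_n(C, E, n):.4f}")
print(f"best n in 1..30: {best_n}")
```

For these values the reliability first rises with n and then falls again, because each extra alternative dilutes the effort available to all of them.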

23

Keep it Simple, Stupid!

- RB2Ln, where n is the complexity reduction in the

alternative to the primary with full

functionality. (2-way equal effort allocation, n

times complexity reduction in the simple

alternative)
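A sketch of RB2Ln under the same model follows. It reads "n times complexity reduction" as the simple alternative having complexity C/n, which is an interpretation rather than something the slide states explicitly. The budget values and the function name are hypothetical.

```python
import math

def rb2_with_simple_alternative(complexity: float, effort: float, reduction: float) -> float:
    """Two alternatives with equal effort; the second has complexity / reduction."""
    half = effort / 2
    r_primary = math.exp(-complexity / half)
    r_simple = math.exp(-(complexity / reduction) / half)
    return 1.0 - (1.0 - r_primary) * (1.0 - r_simple)

C, E = 1.0, 2.0  # hypothetical complexity and effort budget
results = {n: rb2_with_simple_alternative(C, E, n) for n in (1, 2, 4, 8, 16)}

for n, value in results.items():
    print(f"complexity reduction n={n:2d} reliability={value:.4f}")
```

With the effort split fixed, the system reliability in this model grows steadily as the simple alternative gets simpler.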

24

Using Simplicity to Control Complexity

- OK, simplicity leads to reliability. But we want fancy features that require complex software. Worse, most applications do not have high-coverage acceptance tests.
- The solution is to use simplicity to control complexity.
  - Use a simple and reliable core that provides the essential service.
  - Ensure that the reliable core will not be compromised by the faults in the bells-and-whistles.
  - Leverage the reliable core to ensure the overall system integrity in spite of faults in the complex features, even WHEN THERE ARE NO EFFECTIVE ACCEPTANCE TESTS.

25

Using Simplicity to Control Complexity: A Real World Example

- Facts of life
  - The root cause of software faults is complexity, but companies can't sell new software without new capabilities and features.
  - Fault masking by checking output is mostly impractical
- This leaves us with forward recovery architectures that
  - limit the potential damage of complex components
  - use simpler and reliable components to guarantee system integrity
- A real-world example that you may bet your life on
  - Used successfully in engineering artifacts (e.g. Boeing 777)

26

Analytic Redundancy

[Figure: Control Performance versus Proven Reliability, showing the 747 controller and the 777 controller]

- Boeing 777 has two digital controllers. The normal controller is an optimized one. The secondary controller is based on the much simpler 747 control technology.
- To design a simple controller with a maximal recoverability region: the dynamic system is $\dot{X} = \bar{A} X$, where $\bar{A} = (A + BK)$, $A$ is the system matrix, and $K$ is the reliable control.
- Stability condition: $\bar{A}^T Q + Q \bar{A} < 0$, where $Q$ is the Lyapunov function. $X^T Q X = 1$ is an ellipsoid. The operational state constraints are represented by a polytope described by a set of linear inequalities in the system state space.
- The largest ellipsoid in a polytope can be found by minimizing $\log \det Q$ [Boyd 94], subject to the stability condition and the state constraints.
- The recovery problem will be much easier if the system is open-loop stable. Many industrial process control systems are open-loop stable.

27

What is Analytic Redundancy?

- The use of analytic redundancy originated in sensor system designs, to improve system reliability in spite of NON-random errors. For example, in navigation, to determine position:
  - way points (landmarks)
  - Inertial navigation system
  - GPS
- Analytic redundancy is characterized by
  - partial redundancy (the sources don't give identical answers)
  - an analytical relation between the similar answers that allows us to use them to check each other.

28

Compared with Recovery Block

- Both use a simple and reliable component and a full-featured component.
- Backward vs forward recovery
  - Recovery block is a backward recovery approach that tries to prevent faults from being visible from outside.
  - Analytic redundancy is a forward recovery approach that allows for visible faults but tries to make them tolerable and recoverable.
- Fault detection
  - The output of EACH alternative in a recovery block MUST PASS the acceptance test BEFORE it is used.
  - In analytic redundancy, the simple and reliable alternative's computation and/or the expected system behavior is used to judge the complex alternative.

- Error: bugs in the code
- Fault: bugs activated during runtime
- Failure: faults causing the system to behave in an unacceptable way

29

Quiz 2: Joe's Dilemma

- Students' sorting programs will be graded as follows
  - A if a program is correct with computational complexity $O(n \log n)$.
  - B if a program is correct with computational complexity $O(n^2)$.
  - F if a program sorts items incorrectly.
- If Joe uses bubble sort, he will get a B. If Joe uses heap sort, he will get either an A or an F.
- What should Joe do?

30

Solution 2: Analytic Redundancy

Joe will get at least a B.

The critical property of this system,

correctness of sorting, is controlled by the

logically simpler component.
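The slide's diagram did not survive extraction. One plausible reading, sketched below as an assumption, is a pipeline in which the complex heap sort runs first and its output is then passed through bubble sort, which finishes in a single pass when the input is already sorted. The sketch also assumes a faulty heap sort only misorders items and does not drop or corrupt them. `buggy_heap_sort` is a hypothetical fault injected for the demonstration.

```python
import heapq

def heap_sort(items):
    """Complex O(n log n) component; stands in for code that might be buggy."""
    heap = list(items)
    heapq.heapify(heap)
    return [heapq.heappop(heap) for _ in range(len(heap))]

def bubble_sort(items):
    """Simple component with early exit: a single pass if the input is sorted."""
    data = list(items)
    end = len(data) - 1
    swapped = True
    while swapped and end > 0:
        swapped = False
        for i in range(end):
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
                swapped = True
        end -= 1
    return data

def sort_with_analytic_redundancy(items, complex_sort=heap_sort):
    """Run the complex sort, then let the simple sort enforce correctness."""
    return bubble_sort(complex_sort(items))

def buggy_heap_sort(items):
    """Hypothetical faulty version: swaps the first two outputs."""
    out = heap_sort(items)
    if len(out) > 1:
        out[0], out[1] = out[1], out[0]
    return out

print(sort_with_analytic_redundancy([5, 3, 8, 1]))
print(sort_with_analytic_redundancy([5, 3, 8, 1], complex_sort=buggy_heap_sort))
```

When heap sort is correct, the bubble sort stage costs one linear pass, so the total stays O(n log n). When heap sort misorders items, bubble sort repairs the order at up to O(n^2) cost.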

31

Comparison of Two Solutions

- Both approaches work in this example.
  - Under recovery block, if bubble sort does not work but heap sort works, the system's answer is still correct.
  - Under analytic redundancy, if bubble sort is incorrect but heap sort is correct, the system's answer can be incorrect.
- If we want to use simplicity to control complexity, the simple one must work. This is a disadvantage, but not a serious one. If you can't do the simple one right, your chance of getting the complex one right is not very good.
- More important, analytic redundancy is still applicable when there are no effective acceptance tests. (Recall the Boeing 777 example.)
- Bottom line: use recovery block if you can find an effective acceptance test. Otherwise, think analytic redundancy.