Hypothesis Testing - PowerPoint PPT Presentation


Title:

Hypothesis Testing

Description:

9. Significance Level ... The value of a is called the significance level of the test. 8/15/09 ... Avoid this by using a significance level of a/k when doing k tests ... – PowerPoint PPT presentation


1

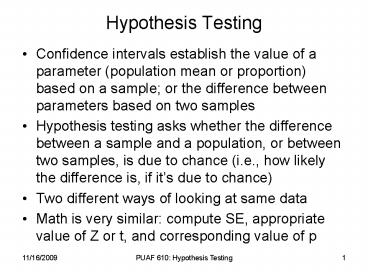

Hypothesis Testing

- Confidence intervals establish the value of a parameter (population mean or proportion) based on a sample, or the difference between parameters based on two samples
- Hypothesis testing asks whether the difference between a sample and a population, or between two samples, is due to chance (i.e., how likely the difference is if it is due to chance)
- Two different ways of looking at the same data
- The math is very similar: compute the SE, the appropriate value of Z or t, and the corresponding value of p

2

Hypothesis Testing

- When comparing a sample mean to a population mean, we hypothesize that the sample was randomly drawn from the given population, and that any difference we observe is due to chance.
- When comparing two sample means, we hypothesize that both samples were randomly drawn from the same population, and that any difference we observe is due to chance.
- Based on this null hypothesis (i.e., that the observed differences are due to chance), we calculate the probability of observing a difference at least as large as the one observed.

3

Testing the Null Hypothesis

- Assume that any differences we observe between the means are due to chance
- Calculate on this basis the probability of a difference larger than that observed
- If the probability is below some threshold (e.g., 5%), reject the null hypothesis and conclude that the difference is statistically significant (i.e., probably not due to chance)
- If the probability is above the threshold, fail to reject the null hypothesis and conclude that the observed difference could be due to chance
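The steps above can be sketched in a few lines of Python. This is only an illustration, assuming a two-tailed one-sample Z test with a known population standard deviation; the function name `z_test_decision` and the numbers in the usage line are hypothetical and are not taken from the slides.

```python
from math import sqrt
from statistics import NormalDist


def z_test_decision(sample_mean, pop_mean, pop_sd, n, alpha=0.05):
    """Two-tailed one-sample Z test; returns (z, p, reject_h0)."""
    se = pop_sd / sqrt(n)                    # standard error of the sample mean
    z = (sample_mean - pop_mean) / se        # observed difference in SE units
    p = 2 * (1 - NormalDist().cdf(abs(z)))   # chance of a difference this large or larger
    return z, p, p < alpha                   # reject H0 only if p is below the threshold


# Hypothetical numbers, for illustration only.
z, p, reject = z_test_decision(sample_mean=52.0, pop_mean=50.0, pop_sd=10.0, n=100)
print(f"z = {z:.2f}, p = {p:.4f}, reject H0: {reject}")
```

With these made-up inputs the difference is two standard errors, so p falls just below 5% and H0 is rejected at that threshold.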

4

Alternative Hypotheses

- The alternative hypothesis is our theory of why the observed difference is meaningful and not simply the result of chance
- The alternative hypothesis is usually the theory that we are attempting to gather evidence for. It is sometimes called the research hypothesis
- Often we don't have a specific alternative hypothesis (i.e., how big the difference should be); we want to know if the difference is real
- The null and alternative hypotheses are labeled H0 and HA

5

One-tailed v. Two-tailed Tests

- HA can be either one-tailed or two-tailed
- In a one-tailed test, only differences in one direction can lead to rejection of H0
- In a two-tailed test, results in either direction can lead to rejection of H0
- One-tailed HA use > or <; two-tailed HA use ≠
- Should HA be one- or two-tailed? It depends on the problem and on what we are trying to prove.
- The decision should not be based on the sample data!
- When in doubt, use two-tailed (harder to reject H0)
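To see why a two-tailed test makes H0 harder to reject, the sketch below computes the p-value of the same Z statistic under each form of HA. It assumes a standard normal test statistic; the helper name `p_values` and the value Z = -1.8 are illustrative only.

```python
from statistics import NormalDist


def p_values(z):
    """p-values for one observed Z under each alternative hypothesis."""
    phi = NormalDist().cdf  # standard normal CDF (what Excel's NORMSDIST returns)
    return {
        "less": phi(z),                      # HA: parameter < hypothesized value
        "greater": 1 - phi(z),               # HA: parameter > hypothesized value
        "two-sided": 2 * (1 - phi(abs(z))),  # HA: parameter differs in either direction
    }


for alternative, p in p_values(-1.8).items():
    print(f"{alternative:>9}: p = {p:.4f}")
```

For Z = -1.8 the one-tailed "less" p-value is below 0.05 while the two-tailed p-value is above it, which is why the choice of tails has to be made before looking at the sample.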

6

Examples of H0 and HA

7

Types of Errors

- Either decision (to fail to reject the null hypothesis, or to reject it) might be incorrect.
- We might reject the null hypothesis when it is true if our sample is unlucky and the observed difference is large simply by chance. This is called a type I error or a false positive.
- We might fail to reject the null hypothesis when it is false if the true difference is small or if the sample is not large enough to detect it. This is a type II error or a false negative.

8

Type-I and Type-II Errors

- Type-I errors usually are considered more serious than type-II errors
- You choose the probability of a type-I error by choosing the threshold or significance level for rejecting the null hypothesis
- Decreasing the probability of a type-I error increases the probability of a type-II error, and vice versa

9

Significance Level

- The real question is how strong the evidence must be to reject the null hypothesis.
- The analyst determines the probability of a type-I error that they are willing to tolerate. The value is denoted by α and is most commonly equal to 0.05, although α = 0.01 and α = 0.1 are also frequently used.
- The value of α is called the significance level of the test.

10

Type-I and Type-II Errors

You choose α; decreasing α increases β (the probability of a type-II error). Often β is not known; β also depends on the size of the true difference and the size of the sample.
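The trade-off can be made concrete with a rough calculation. The sketch below assumes a one-tailed (upper) Z test with a known standard deviation and a known true difference, which is rarely the case in practice; the function name `type_ii_error` and all numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def type_ii_error(alpha, true_diff, sd, n):
    """Probability of a type-II error for an upper one-tailed Z test.

    Assumes the true mean exceeds the hypothesized mean by `true_diff`
    and that the population SD `sd` is known.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)   # cut-off implied by the chosen alpha
    shift = true_diff * sqrt(n) / sd         # true difference in SE units
    return std_normal.cdf(z_crit - shift)    # chance the statistic stays below the cut-off


for alpha in (0.10, 0.05, 0.01):
    beta = type_ii_error(alpha, true_diff=2.0, sd=10.0, n=100)
    print(f"alpha = {alpha:.2f} -> beta = {beta:.3f}")
```

Lowering alpha raises the printed type-II error probability, while a larger `n` or a larger `true_diff` lowers it.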

11

Sometimes type-II errors are more costly

In this case, you want to choose a very high value of α, because you want to minimize type-II errors

12

Significance from Rejection Region

- Construct a confidence interval for the parameter based on a confidence level of 1 − α
- For a one-tailed test, α is the probability in the right-hand tail (if HA: >) or the left-hand tail (if HA: <)
- For a two-tailed test, α/2 is the probability in each tail
- If the sample mean or sample proportion is outside the confidence interval (i.e., in the rejection region), then reject the null hypothesis at the α significance level
- Sample evidence that falls in the rejection region is called statistically significant at the α level
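As a rough illustration of how the tails define the rejection region, the sketch below finds the Z cut-offs for a given significance level. It assumes a standard normal test statistic; `critical_z` and `in_rejection_region` are hypothetical helper names, not part of any tool mentioned in the slides.

```python
from statistics import NormalDist


def critical_z(alpha, tail="two-sided"):
    """Return (lower, upper) Z cut-offs; None means that side has no cut-off."""
    std_normal = NormalDist()
    if tail == "two-sided":
        z = std_normal.inv_cdf(1 - alpha / 2)        # alpha/2 in each tail
        return (-z, z)
    if tail == "greater":
        return (None, std_normal.inv_cdf(1 - alpha))  # all of alpha in the right tail
    if tail == "less":
        return (std_normal.inv_cdf(alpha), None)      # all of alpha in the left tail
    raise ValueError("tail must be 'two-sided', 'greater', or 'less'")


def in_rejection_region(z, alpha, tail="two-sided"):
    """True if the observed Z falls outside the cut-offs."""
    lower, upper = critical_z(alpha, tail)
    return (lower is not None and z < lower) or (upper is not None and z > upper)


print(critical_z(0.05))             # roughly (-1.96, 1.96)
print(critical_z(0.05, "greater"))  # roughly (None, 1.645)
print(in_rejection_region(1.8, 0.05, "greater"), in_rejection_region(1.8, 0.05))
```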

13

Significance from p-values

- The p-value is the probability of seeing a sample at least as extreme as the observed sample, given that the null hypothesis is true
- If p < α, reject the null hypothesis
- Smaller values of p indicate more evidence in support of the alternative hypothesis
- If p is sufficiently small (i.e., if the observed difference is highly unlikely to have occurred by chance), almost anyone would reject the null hypothesis

14

Significance from p-values

- How small is a small p-value? It depends on the problem, and on the consequences and relative costs of type-I and type-II errors.
- If p < 0.01, there is convincing evidence against H0. There is only 1 chance in 100 of p < 0.01 if H0 is true. Unless the consequences of a type-I error are very serious, reject H0.
- If 0.01 < p < 0.05, there is strong evidence against H0 (and in favor of HA)
- If p > 0.10, there is little or no evidence in support of the alternative hypothesis.

15

Multiple Comparisons

- The preceding guidelines are for a single hypothesis test using a particular sample
- If we do a large number of hypothesis tests, the likelihood of a type-I error will increase
- If we do 100 tests with α = 0.05, we will (on average) commit 5 type-I errors if H0 is true in most cases
- Avoid this by using a significance level of α/k when doing k tests
- 100 tests with α = 0.0005 gives less than a 5% chance of making a single type-I error overall
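The arithmetic behind these bullets can be checked with a short sketch. It assumes the k tests are independent and that H0 is true in every one of them, which is a simplification; the function names are illustrative.

```python
def expected_false_positives(alpha, k):
    """Average number of type-I errors in k tests when H0 is true in all of them."""
    return alpha * k


def familywise_error(alpha, k):
    """Chance of at least one type-I error in k independent tests with H0 true."""
    return 1 - (1 - alpha) ** k


def adjusted_alpha(alpha, k):
    """Per-test significance level alpha/k, as suggested on the slide."""
    return alpha / k


k = 100
print(expected_false_positives(0.05, k))            # about 5 false positives on average
print(f"{familywise_error(0.05, k):.3f}")           # near-certain to make at least one error
per_test = adjusted_alpha(0.05, k)                  # 0.0005
print(f"{familywise_error(per_test, k):.4f}")       # just under 0.05
```

Dividing the significance level by the number of tests is commonly known as the Bonferroni correction.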

16

Practical v. Statistical Significance

- Statistically significant means that a difference is discernible, not necessarily that the difference is important
- The acceptance rate for male undergraduates at the UMCP is 56%, compared to 55% for women
- Because the sample is so large (21,000), the difference between the acceptance rates is statistically significant (p = 0.005)
- Nevertheless, the difference is so small that it is of no practical or policy importance

17

One-sample v. Two-sample Tests

- One-sample Tests
  - Compare sample mean to known population mean
    - Are test scores of the sample below the national average?
  - Compare sample proportion to population proportion
    - Is the proportion of girls in the Choice program different from the proportion in the general population?
- Two-sample Tests
  - Compare two sample means
    - Are this year's test scores higher than last year's?
    - Are test scores of Choice students higher than MPS?
  - Compare two sample proportions
    - Is the proportion of white students in the Choice and MPS samples different?

18

Computational Methods

- Manual
  - Determine x̄, s, and n (or p̂ and n) for each sample using Excel formulas or Pivot tables
  - Calculate t or Z
  - Calculate the p-value using the Excel formulas TDIST(t,df,tails) or NORMSDIST(Z), or tables
- Analyse-It
- Data Analysis
  - Two-sample: paired or independent

19

Milwaukee Data Set

20

One-sample Test for Population Mean

- Is the average reading score of Choice students below the national average?
- H0: μChoice = μUS; HA: μChoice < μUS
- Population: μUS = 50
- Sample: year = 91, choice = 1

21

p-value is the area under the curve to the left of

22

One-tailed or Two-tailed Test?

- In this example, the alternative hypothesis was one-tailed
- This assumed that if Choice students were different from the population of US students, they would be below average
- This is valid if the presumption is based on other evidence (e.g., low family income or a long-standing trend); it is not valid if based on the sample
- In this case, a two-tailed test would also be appropriate

23

Using a Pivot Table

Drop year in page field, choice in column

field, and drop read three times in data field.

c6/SQRT(c7)

c5-c9

TINV(0.05,c7-1)

TDIST(c12,c7-1,2)

24

Using Analyse-It

- Sort to isolate data of interest (observations with year = 91 and choice = 1)
- Select Analyse/Parametric/One Sample t-test
- Select variable (read)
- Enter population mean for hypothesized mean (50)
- Select two- or one-tailed alternative hypothesis (μ ≠ 50, μ < 50, μ > 50)
- Enter desired confidence interval (0.95)
- Output on new worksheet

25

A one-sided confidence interval for the difference between 50 and the mean of the population from which the sample was drawn. It doesn't include 0, so we conclude the difference is real, not due to chance.

26

One-sample Test for Population Proportion

- Are girls more or less likely to be in the Choice program?
- H0: pgirl = 0.5 (same as general population)
- HA: pgirl ≠ 0.5 (different from general population)
- By 1993, 157 of 282 Choice students were girls
- A two-tailed test is appropriate, unless there is some a priori reason (other than the proportion of girls in the sample, 157/282 = 0.557) to believe that girls would be over- or under-represented in the Choice program

27

One-sample Test for Population Proportion

Note the use of p (the hypothesized proportion, not the sample proportion) in the formula for SE. That's because we're testing the null hypothesis. Also note that np > 5 and n(1 − p) > 5.

28

p-value is the shaded area: p = 0.057
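The calculation behind this p-value can be sketched as follows, using the 157 girls out of 282 Choice students and the hypothesized proportion of 0.5 from the preceding slides. The function name is illustrative, and the normal approximation without a continuity correction is assumed.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_proportion_test(successes, n, p0):
    """Two-tailed Z test of a sample proportion against a hypothesized p0."""
    p_hat = successes / n
    se = sqrt(p0 * (1 - p0) / n)   # SE uses p0 because H0 is assumed true
    z = (p_hat - p0) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_hat, se, z, p_value


p_hat, se, z, p_value = one_sample_proportion_test(successes=157, n=282, p0=0.5)
print(f"p_hat = {p_hat:.3f}, SE = {se:.4f}, Z = {z:.2f}, p = {p_value:.3f}")
```

The result agrees with the slide: a sample proportion of about 0.557 and a two-tailed p-value of about 0.057, so H0 is not rejected at the 0.05 level.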

29

Using a Pivot Table

30

Using Analyse-It

- Sort to isolate data of interest (year = 93, choice = 1)
- Select Analyse/Parametric/One-sample z-test
- Select variable (e.g., female)
- Enter hypothesized mean (e.g., 0.5)
- Enter population SD (sqrt(0.5 × 0.5) = 0.5)
- Select one- or two-tailed alternative hypothesis
- Enter desired confidence interval (e.g., 0.95)
- Output on new worksheet

31


32

Continuity Correction

- The calculation is more accurate if we calculate the probability of 156.5 or more girls out of 282
- Why? We are approximating a binomial (discrete) distribution with a normal (continuous) distribution.
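A short sketch can show what the correction buys. It compares the normal approximation for 157 or more girls out of 282 (with and without the 0.5 adjustment) against the exact binomial tail; the function names are illustrative, and only the upper tail is computed.

```python
from math import comb, sqrt
from statistics import NormalDist


def upper_tail_normal(k, n, p, continuity=True):
    """Normal approximation to P(X >= k) for X ~ Binomial(n, p)."""
    mean = n * p
    sd = sqrt(n * p * (1 - p))
    cutoff = k - 0.5 if continuity else k   # 156.5 rather than 157 when corrected
    return 1 - NormalDist(mean, sd).cdf(cutoff)


def upper_tail_exact(k, n, p):
    """Exact binomial P(X >= k), summed term by term."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


exact = upper_tail_exact(157, 282, 0.5)
print(f"exact binomial:       {exact:.4f}")
print(f"normal, corrected:    {upper_tail_normal(157, 282, 0.5):.4f}")
print(f"normal, uncorrected:  {upper_tail_normal(157, 282, 0.5, continuity=False):.4f}")
```

The corrected approximation lands closer to the exact tail probability than the uncorrected one; doubling either value gives the corresponding two-tailed p-value.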

33

Binomial Distribution: n = 282, p = 0.5

34

Analyse-It can also calculate binomial confidence intervals and hypothesis tests, but the data must either be categorical or summarized into a table (we'll do this later).

35

Difference in Sample Proportions

- Is the proportion of girls in the Choice program different from the proportion in the MPS sample?
- H0: pC = pM = p; HA: pC ≠ pM

36

Difference in Population Proportions

37

Why Use Pooled p?

- Here we used the pooled p to compute SE
- In confidence intervals, we used
- We test the null hypothesis: if H0 is true, then the samples were drawn from the same population, and the pooled p is the best estimate of the population proportion. In confidence intervals, we assume the samples are drawn from different populations.
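The difference between the two standard errors can be sketched as below. The Choice counts (157 of 282) come from the earlier slides; the MPS counts in the usage line are hypothetical placeholders, since the transcript does not give them, and the unpooled formula shown is the standard one rather than a quotation from the slide.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-tailed Z test of H0: p1 = p2, using the pooled proportion for SE."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)           # best estimate of p if H0 is true
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    return z, 2 * (1 - NormalDist().cdf(abs(z)))


def unpooled_se(x1, n1, x2, n2):
    """SE of p1 - p2 when the samples are treated as coming from different populations."""
    p1, p2 = x1 / n1, x2 / n2
    return sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)


# 157 of 282 is from the slides; 500 of 1000 is a hypothetical MPS sample.
z, p_value = two_proportion_z_test(157, 282, 500, 1000)
print(f"Z = {z:.2f}, p = {p_value:.3f}, unpooled SE = {unpooled_se(157, 282, 500, 1000):.4f}")
```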

38

Using a Pivot Table

39

Two-sample Test for Difference Between

Population Means

- If the samples are paired, compute the difference for each member of the sample, then compute the mean difference and its standard error, and use the one-sample test for a population mean
- If the samples are independent, then we compute the probability of a difference in sample means larger than that observed, under the null hypothesis that both samples were drawn from the same population
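For the paired case, the procedure reduces to a one-sample test on the differences. The sketch below computes the t statistic and degrees of freedom only; the p-value would then come from a t table or from Excel's TDIST, because the Python standard library has no t distribution. The scores are made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t_statistic(before, after):
    """Return (t, df) for H0: mean difference = 0, using paired observations."""
    diffs = [a - b for a, b in zip(after, before)]  # one difference per member
    n = len(diffs)
    se = stdev(diffs) / sqrt(n)                     # standard error of the mean difference
    return mean(diffs) / se, n - 1


# Hypothetical scores for five students in two consecutive years.
before = [48, 52, 45, 50, 47]
after = [50, 51, 47, 53, 46]
t, df = paired_t_statistic(before, after)
print(f"t = {t:.3f} with {df} degrees of freedom")
```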

40

Matched Pairs Change in Test Score

- Did the average reading test score of Choice students change from 1990 to 1991?
- H0: D0 = 0; HA: D0 ≠ 0

Fail to reject H0: the change isn't significant.

41

Matched Pairs with Data Analysis

- Sort Data (by year and choice)
- Select Tools/Data Analysis/t-Test: Paired Two Sample for Means
- Enter both data ranges (read and pread)
- Enter hypothesized mean difference (e.g., 0)
- Enter labels, alpha, and output

42

Matched Pairs with Data Analysis

43

Sort, isolate data; select Analyse/Parametric/Paired Samples t Test; select variables, HA, CL

44

Two-sample Difference in Test Scores

- Are the test scores of Choice students different from those of low-income MPS students?
- H0: (μC − μM) = 0; HA: (μC − μM) ≠ 0

45

Using a Pivot Table

46

Difference in Test Scores (2)

47

Difference in Test Scores (3)

48

Difference in Test Scores (4)

- When in doubt, use the larger SE (a judgment call)

Reject the null hypothesis: the average test scores of the two groups are significantly different (i.e., a difference this large is unlikely to occur by chance if the two groups have equal reading abilities).
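The two candidate standard errors can be compared with a small sketch. It uses the standard unequal-variance and pooled-variance formulas for the difference between two independent sample means; the summary statistics in the usage lines are hypothetical, since the transcript does not include the Milwaukee figures.

```python
from math import sqrt


def se_unequal_variances(s1, n1, s2, n2):
    """SE of the difference in means without assuming equal variances."""
    return sqrt(s1**2 / n1 + s2**2 / n2)


def se_equal_variances(s1, n1, s2, n2):
    """SE of the difference in means using a pooled variance estimate."""
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)
    return sqrt(pooled_var * (1 / n1 + 1 / n2))


# Hypothetical summary statistics: (SD, n) for each group.
s1, n1, s2, n2 = 10.0, 200, 20.0, 50
unequal = se_unequal_variances(s1, n1, s2, n2)
pooled = se_equal_variances(s1, n1, s2, n2)
print(f"unequal variances: {unequal:.3f}, equal variances: {pooled:.3f}")
print(f"conservative choice (larger SE): {max(unequal, pooled):.3f}")
```

When the two sample sizes are equal the formulas give the same value; they diverge when both the sizes and the standard deviations differ, which is where the judgment call arises.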

49

Using Data Analysis

- Sort Data (by year, choice, and lowinc)
- Select Tools/Data Analysis/t-Test: Two Sample Assuming Unequal Variances, or t-Test: Two Sample Assuming Equal Variances
- Enter both data ranges (read for choice = 0, 1)
- Enter hypothesized mean difference (e.g., 0)
- Enter labels, alpha, and output

50

Data Analysis (unequal variance)

51

Data Analysis (equal variance)