Endgame Logistics PowerPoint PPT Presentation

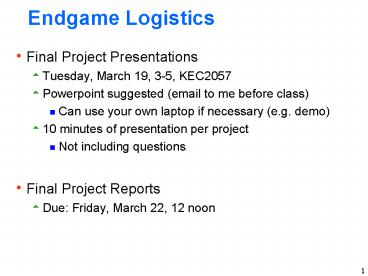

Title: Endgame Logistics

1

Endgame Logistics

- Final Project Presentations
  - Tuesday, March 19, 3-5, KEC2057
  - PowerPoint suggested (email it to me before class)
  - You can use your own laptop if necessary (e.g. for a demo)
  - 10 minutes of presentation per project
    - Not including questions
- Final Project Reports
  - Due Friday, March 22, 12 noon

(Diagram with boxes World and ????, linked by Percepts and Actions, plus an Objective, annotated with these dimensions:)

- known world model vs. unknown
- numeric vs. discrete
- sole source of change vs. other sources
- perfect vs. noisy
- deterministic vs. stochastic
- fully observable vs. partially observable
- instantaneous vs. durative
- concurrent actions vs. single action
- goal satisfaction vs. general reward

STRIPS Planning

(The same diagram, with the options "known world model vs. unknown vs. partial model" and "concurrent actions (but ) vs. single action".)

MDP Planning

(The same diagram.)

Reinforcement Learning

(The same diagram.)

Simulation-Based Planning

(The same diagram, with the option "known world model vs. unknown vs. simulator".)


Numeric States

- In many cases states are naturally described in terms of numeric quantities
- Classical control theory typically studies MDPs with real-valued, continuous state spaces
  - Typically assumes linear dynamical systems
  - Quite limited for most applications we are interested in for AI (often a mix of discrete and numeric)
- Typically we deal with this via feature encodings of the state space
- Simulation-based methods are agnostic about whether the state is numeric or discrete
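The last two points can be sketched in a few lines. The sketch assumes a hypothetical delivery domain whose state mixes a numeric fuel level with a discrete zone: `encode_features` turns that mixed state into a numeric vector (bias, scaled fuel, one-hot zone) for a linear value estimate, while `rollout_value` estimates a policy's value by Monte Carlo simulation and only ever calls a simulator, so it never looks inside the state. The domain, names, and dynamics are illustrative assumptions.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

ZONES = ["depot", "north", "south"]  # hypothetical discrete values


@dataclass(frozen=True)
class State:
    fuel: float  # numeric component, assumed to lie in [0, 100]
    zone: str    # discrete component, one of ZONES


def encode_features(s: State) -> List[float]:
    """Map a mixed numeric/discrete state to a fixed-length numeric vector."""
    one_hot = [1.0 if s.zone == z else 0.0 for z in ZONES]
    # Bias term, scaled numeric feature, then the one-hot zone indicator.
    return [1.0, s.fuel / 100.0] + one_hot


def linear_value(weights: List[float], s: State) -> float:
    """Linear value approximation V(s) = w . phi(s) over the encoded features."""
    return sum(w * f for w, f in zip(weights, encode_features(s)))


def rollout_value(simulate, policy, state, horizon: int, gamma: float,
                  num_rollouts: int) -> float:
    """Average discounted return of `policy` from `state` over several rollouts.

    Only `simulate(state, action) -> (next_state, reward)` is called, so the
    state is treated as an opaque object: numeric, discrete, or mixed."""
    total = 0.0
    for _ in range(num_rollouts):
        s, ret, discount = state, 0.0, 1.0
        for _ in range(horizon):
            s, r = simulate(s, policy(s))
            ret += discount * r
            discount *= gamma
        total += ret
    return total / num_rollouts


def toy_simulate(s: State, action: str) -> Tuple[State, float]:
    """Hypothetical dynamics: driving burns 10 fuel, moves to a random zone, earns 1."""
    if action == "drive" and s.fuel >= 10:
        return State(s.fuel - 10, random.choice(ZONES[1:])), 1.0
    return s, 0.0


print(encode_features(State(50.0, "north")))  # [1.0, 0.5, 0.0, 1.0, 0.0]
print(rollout_value(toy_simulate, lambda s: "drive", State(30.0, "depot"),
                    horizon=5, gamma=1.0, num_rollouts=10))  # 3.0
```

The feature encoding is where domain knowledge enters; the rollout estimator works unchanged for any state representation the simulator accepts.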


Partial Observability

- In reality we only observe percepts of the world, not the actual state
- Partially Observable MDPs (POMDPs) extend MDPs to handle partial observability
  - Start with an MDP and add an observation distribution $P(o \mid s)$: the probability of observation $o$ given state $s$
  - We see a sequence of observations rather than a sequence of states
- POMDP planning is much harder than MDP planning; scalability is poor
- In practice we can often apply RL using features of the observations
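One standard way to act on a sequence of observations is to maintain a belief state, a probability distribution over hidden states, and update it with Bayes' rule after each action $a$ and observation $o$: $b'(s') \propto P(o \mid s') \sum_s P(s' \mid s, a)\, b(s)$. The sketch below assumes small finite state, action, and observation sets stored in dictionaries, with every state present as a key of the belief (possibly with probability 0); the two-state "door" example and all names are hypothetical.

```python
from typing import Dict, Hashable, Tuple

Belief = Dict[Hashable, float]


def belief_update(belief: Belief, action: Hashable, obs: Hashable,
                  trans: Dict[Tuple[Hashable, Hashable], Dict[Hashable, float]],
                  obs_model: Dict[Hashable, Dict[Hashable, float]]) -> Belief:
    """Bayes filter for a finite POMDP.

    trans[(s, a)][s2] = P(s2 | s, a) and obs_model[s2][o] = P(o | s2).
    Returns b'(s2) proportional to P(o | s2) * sum_s P(s2 | s, a) * b(s)."""
    unnormalized = {}
    for s2 in belief:
        # Prediction step: push the current belief through the transition model.
        predicted = sum(trans[(s, action)].get(s2, 0.0) * p for s, p in belief.items())
        # Correction step: weight by how likely the observation is in s2.
        unnormalized[s2] = obs_model[s2].get(obs, 0.0) * predicted
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {s2: w / total for s2, w in unnormalized.items()}


# Hypothetical two-state example: "listen" leaves the door unchanged,
# and a noisy sensor reports the true state 80% of the time.
trans = {("open", "listen"): {"open": 1.0}, ("closed", "listen"): {"closed": 1.0}}
obs_model = {"open": {"hear-open": 0.8, "hear-closed": 0.2},
             "closed": {"hear-open": 0.2, "hear-closed": 0.8}}

b = {"open": 0.5, "closed": 0.5}
b = belief_update(b, "listen", "hear-open", trans, obs_model)
print(b)  # approximately {'open': 0.8, 'closed': 0.2}
b = belief_update(b, "listen", "hear-open", trans, obs_model)
print(b)  # 'open' is now about 0.941
```

A planner can then treat the belief as the state of a (continuous) MDP, which is one reason exact POMDP planning scales poorly.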


Other Sources of Change

- In many cases the environment changes even if no actions are selected by the agent
  - Sometimes due to exogenous events, e.g. 911 calls come in at random
  - Sometimes due to other agents
    - Adversarial agents try to decrease our reward
    - Cooperative agents may be trying to increase our reward, or may have their own objectives
- Decision making in the context of other agents is studied in the area of game theory
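To make exogenous change concrete, a simulator step can apply the agent's action and then sample exogenous events independently of it. The sketch assumes a hypothetical dispatch domain in which, at each time step, a new 911 call arrives with probability `arrival_prob` (a Bernoulli arrival model chosen for simplicity), so the queue of pending calls can grow even when the agent only chooses `wait`.

```python
import random
from typing import Callable, List


def dispatch_step(pending_calls: int, action: str, arrival_prob: float,
                  rng: random.Random) -> int:
    """One time step of a hypothetical 911-dispatch domain."""
    # Effect of the agent's own action.
    if action == "dispatch" and pending_calls > 0:
        pending_calls -= 1
    # Exogenous event: a new call arrives with probability arrival_prob,
    # whatever action the agent chose (including "wait").
    if rng.random() < arrival_prob:
        pending_calls += 1
    return pending_calls


def run(policy: Callable[[int], str], steps: int, arrival_prob: float,
        seed: int = 0) -> List[int]:
    """Return the number of pending calls after each step."""
    rng = random.Random(seed)
    calls, history = 0, []
    for _ in range(steps):
        calls = dispatch_step(calls, policy(calls), arrival_prob, rng)
        history.append(calls)
    return history


print(run(lambda calls: "wait", steps=10, arrival_prob=0.3))
print(run(lambda calls: "dispatch", steps=10, arrival_prob=0.3))
```

Because the arrivals are random, the state after an action is stochastic even though the action's own effect is deterministic.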


Durative Actions

- Generally, different actions have different durations
  - Often the durations are stochastic
- Semi-Markov MDPs (SMDPs) extend MDPs to account for actions with probabilistic durations
  - The transition distribution becomes $P(s', t \mid s, a)$, the probability of ending up in state $s'$ in $t$ time steps after taking action $a$ in state $s$
- Planning and learning algorithms are very similar to those for standard MDPs; the equations are just a bit more complex to account for time
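As a sketch of how the equations change, a common form of the SMDP Bellman backup discounts the successor value by $\gamma^t$, where $t$ is the random duration: $V(s) = \max_a \big[ R(s, a) + \sum_{s', t} P(s', t \mid s, a)\, \gamma^{t} V(s') \big]$. Here $R(s, a)$ is assumed to already be the expected discounted reward collected while the action runs. The dictionary layout of the model and the one-state example are hypothetical.

```python
from typing import Dict, Hashable, List, Tuple

# model[(s, a)] = (R(s, a), [(s_next, t, prob), ...]): expected reward of running
# action a in state s, and a joint distribution over (next state, duration t).
Outcome = Tuple[Hashable, int, float]
Model = Dict[Tuple[Hashable, Hashable], Tuple[float, List[Outcome]]]


def smdp_value_iteration(states: List[Hashable], actions: List[Hashable],
                         model: Model, gamma: float, tol: float = 1e-10,
                         max_iters: int = 10_000) -> Dict[Hashable, float]:
    V = {s: 0.0 for s in states}
    for _ in range(max_iters):
        new_V = {}
        for s in states:
            q_values = []
            for a in actions:
                if (s, a) not in model:
                    continue  # action a is not applicable in s
                reward, outcomes = model[(s, a)]
                # Successor values are discounted by gamma**t, not just gamma.
                q_values.append(reward + sum(p * gamma ** t * V[s2]
                                             for s2, t, p in outcomes))
            new_V[s] = max(q_values) if q_values else 0.0
        delta = max(abs(new_V[s] - V[s]) for s in states)
        V = new_V
        if delta < tol:
            break
    return V


# Hypothetical one-state example: "fast" earns 1 and takes 1 step,
# "slow" earns 1.5 but takes 3 steps. With gamma = 0.9, fast is better:
# V = 1 / (1 - 0.9) = 10 versus 1.5 / (1 - 0.9**3), about 5.54.
model = {("s", "fast"): (1.0, [("s", 1, 1.0)]),
         ("s", "slow"): (1.5, [("s", 3, 1.0)])}
print(smdp_value_iteration(["s"], ["fast", "slow"], model, gamma=0.9))
```

Setting every duration to $t = 1$ recovers ordinary MDP value iteration, which is why the algorithms carry over with little change.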


Concurrent Durative Actions

- In many problems we need to form plans that direct the actions of a team of agents
  - This typically requires planning over the space of concurrent activities, where different activities can have different durations
- We can treat these problems as one huge MDP (SMDP) whose action space is the cross-product of the individual agents' actions
  - Standard MDP algorithms will break down: the joint action space grows exponentially with the number of agents
- There are multi-agent or concurrent-action extensions to most of the formalisms we studied in class
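The size of the cross-product action space shows why flat MDP algorithms struggle: with $k$ agents that each have $m$ actions, the joint action space has $m^k$ elements, so every Bellman backup that maximizes over joint actions becomes exponentially more expensive as the team grows. A minimal sketch with hypothetical agent and action names:

```python
from itertools import product
from typing import Dict, List, Tuple


def joint_actions(agent_actions: Dict[str, List[str]]) -> List[Tuple[str, ...]]:
    """Enumerate the cross-product of the individual agents' action sets."""
    agents = sorted(agent_actions)  # fix an agent order for the tuple positions
    return list(product(*(agent_actions[name] for name in agents)))


per_agent = ["wait", "load", "drive", "unload"]  # hypothetical action set
team = {f"truck{i}": per_agent for i in range(1, 4)}
actions = joint_actions(team)
print(len(actions))  # 64 = 4 ** 3
print(actions[0])    # ('wait', 'wait', 'wait')

# Growth of the joint action space with team size.
for k in range(1, 7):
    print(k, len(joint_actions({f"truck{i}": per_agent for i in range(k)})))
```

The loop prints 4, 16, 64, 256, 1024, and 4096 joint actions for teams of one to six trucks; concurrent-action formalisms avoid enumerating this set explicitly.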


AI Planning

(The same diagram.)
