03/11/98 Machine Learning PowerPoint PPT Presentation

1 / 21

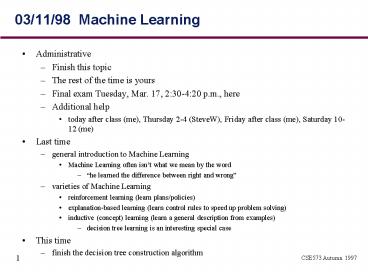

Title: 03/11/98 Machine Learning

1

03/11/98 Machine Learning

- Administrative
  - Finish this topic
  - The rest of the time is yours
  - Final exam Tuesday, Mar. 17, 2:30-4:20 p.m., here
  - Additional help
    - today after class (me), Thursday 2-4 (SteveW), Friday after class (me), Saturday 10-12 (me)
- Last time
  - general introduction to Machine Learning
  - Machine Learning often isn't what we mean by the word
    - "he learned the difference between right and wrong"
  - varieties of Machine Learning
    - reinforcement learning (learn plans/policies)
    - explanation-based learning (learn control rules to speed up problem solving)
    - inductive (concept) learning (learn a general description from examples)
      - decision tree learning is an interesting special case
- This time
  - finish the decision tree construction algorithm

2

Example

3

Basic Algorithm

- Recall that a node in the tree represents a conjunction of attribute values (the tests along the path from the root). We will try to build the shortest possible tree that classifies all the training examples correctly. In the algorithm we also store the list of attributes we have not used so far for classification.
- Initialization
  - tree ← empty
  - attributes ← all attributes
  - examples ← all training examples
- Recursion
  - Choose a new attribute A with possible values $a_i$
  - For each $a_i$, add a subtree formed by recursively building the tree with
    - the current node as root
    - all attributes except A
    - all examples where $A = a_i$

4

Basic Algorithm (cont.)

- Termination (working on a single node)
  - If all examples have the same classification, then this combination of attribute values is sufficient to classify all (training) examples. Return the unanimous classification.
  - If examples is empty, then there are no examples with this combination of attribute values. Associate some guess with this combination.
  - If attributes is empty, then the training data is not sufficient to discriminate. Return some guess based on the remaining examples.
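The initialization, recursion, and termination steps above can be sketched in Python as follows. This is a minimal sketch under assumptions of our own: examples are `(attribute_dict, label)` pairs, a leaf is a bare label, an internal node is an `(attribute, branches)` tuple, and the "guess" for an empty branch is the parent's majority label. The attribute chooser is a parameter; the placeholder `first_attribute` simply takes the next unused attribute, since choosing well is the subject of the following slides. All function names and the four toy examples are hypothetical.

```python
from collections import Counter

def majority(examples, default=None):
    """Most common label among (attributes, label) pairs; ties go to the first seen."""
    if not examples:
        return default
    return Counter(label for _, label in examples).most_common(1)[0][0]

def build_tree(examples, attributes, values, choose, default=None):
    """Recursive construction with the three termination cases.

    values:  maps each attribute to its list of possible values.
    choose:  function (examples, attributes) -> attribute to split on.
    default: the guess to use if no examples reach this node.
    """
    # No examples with this combination of attribute values: return a guess.
    if not examples:
        return default
    labels = {label for _, label in examples}
    # All examples agree: return the unanimous classification.
    if len(labels) == 1:
        return labels.pop()
    guess = majority(examples, default)
    # No attributes left to discriminate: guess from the remaining examples.
    if not attributes:
        return guess
    a = choose(examples, attributes)
    remaining = [b for b in attributes if b != a]
    branches = {}
    for v in values[a]:
        subset = [(x, y) for x, y in examples if x[a] == v]
        branches[v] = build_tree(subset, remaining, values, choose, guess)
    return (a, branches)

def first_attribute(examples, attributes):
    """Placeholder chooser: take the next unused attribute in order."""
    return attributes[0]

data = [
    ({"Outlook": "Sunny", "Wind": "Weak"}, "No"),
    ({"Outlook": "Overcast", "Wind": "Weak"}, "Yes"),
    ({"Outlook": "Rain", "Wind": "Strong"}, "No"),
    ({"Outlook": "Rain", "Wind": "Weak"}, "Yes"),
]
values = {"Outlook": ["Sunny", "Overcast", "Rain"], "Wind": ["Weak", "Strong"]}
tree = build_tree(data, ["Outlook", "Wind"], values, first_attribute)
print(tree)
```

On the four toy examples this prints `('Outlook', {'Sunny': 'No', 'Overcast': 'Yes', 'Rain': ('Wind', {'Weak': 'Yes', 'Strong': 'No'})})`. Passing the chooser in as a function keeps the recursion unchanged when a better selection rule is plugged in.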

5

What Makes a Good Attribute for Splitting?

[Figure: four candidate splits of the 14 training days D1, ..., D14: DAY (one branch per day, each holding a single example), ALL (a single TRUE branch holding every example), HUMIDITY (branches high and normal), and OUTLOOK (branches overcast, rain, and sunny).]

6

How to choose the next attribute

- What is our goal in building the tree in the first place?
  - Maximize accuracy over the entire data set
  - Minimize the expected number of tests to classify an example in the training set
  - (In both cases this can argue for building the shortest tree.)
- We can't really do the first by looking only at the training set: we can only build a tree that is accurate for our subset and assume the characteristics of the full data set are the same.
- To minimize the expected number of tests
  - the best test would be one where each branch has all positive or all negative instances
  - the worst test would be one where the proportion of positive to negative instances is the same in every branch
    - knowledge of A would provide no information about the example's ultimate classification

7

The Entropy (Disorder) of a Collection

- Suppose S is a collection containing positive and negative examples of the target concept
  - $\mathrm{Entropy}(S) \equiv -(p_+ \log_2 p_+ + p_- \log_2 p_-)$
  - where $p_+$ is the fraction of examples that are positive and $p_-$ is the fraction of examples that are negative
- Good features
  - minimum of 0 where $p_+ = 0$ and where $p_- = 0$ (taking $0 \log_2 0 = 0$)
  - maximum of 1 where $p_+ = p_- = 0.5$
- Interpretation: how far away are we from having a leaf node in the tree?
- The best attribute would reduce the entropy in the child collections as quickly as possible.
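A direct transcription of the entropy definition, written for counts of positive and negative examples. This is a minimal sketch; the function name `entropy` and the count-based signature are our own choices. Counts may be fractional, and zero counts are skipped, which implements the convention that a zero fraction contributes nothing to the sum.

```python
import math

def entropy(pos, neg):
    """Entropy(S) for a collection with `pos` positive and `neg` negative examples.

    Zero counts are skipped, i.e. 0 * log2(0) is taken to be 0.
    """
    total = pos + neg
    return sum(-(c / total) * math.log2(c / total) for c in (pos, neg) if c > 0)

print(entropy(7, 0))            # 0.0  (all positive: the minimum)
print(entropy(7, 7))            # 1.0  (half and half: the maximum)
print(round(entropy(9, 5), 3))  # 0.94
```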

8

Entropy and Information Gain

- The best attribute is one that maximizes the expected decrease in entropy
  - if entropy decreases to 0, the tree need not be expanded further
  - if entropy does not decrease at all, the attribute was useless
- Gain is defined to be
  - $\mathrm{Gain}(S, A) \equiv \mathrm{Entropy}(S) - \sum_{v \in \mathrm{values}(A)} p_{A=v} \, \mathrm{Entropy}(S_{A=v})$
  - where $p_{A=v}$ is the proportion of S where $A = v$, and
  - $S_{A=v}$ is the collection taken by selecting those elements of S where $A = v$
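The gain formula translates almost line for line into Python. In this sketch (names and data layout are our assumptions, not from the slides) examples are `(attribute_dict, label)` pairs and entropy is computed from label counts, so it also works with more than two classes. Summing only over the values that actually occur in S is equivalent to summing over all of values(A), because a value that never occurs has weight $p_{A=v} = 0$. The toy collection is hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Entropy of a list of class labels."""
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def gain(examples, attribute):
    """Gain(S, A) = Entropy(S) - sum_v p_{A=v} * Entropy(S_{A=v})."""
    labels = [y for _, y in examples]
    remainder = 0.0
    for v in {x[attribute] for x, _ in examples}:
        subset = [y for x, y in examples if x[attribute] == v]      # labels of S_{A=v}
        remainder += len(subset) / len(examples) * entropy(subset)  # p_{A=v} * Entropy
    return entropy(labels) - remainder

# Hypothetical toy collection: A separates the classes, B does not.
examples = [
    ({"A": "x", "B": "p"}, "+"),
    ({"A": "x", "B": "q"}, "+"),
    ({"A": "y", "B": "p"}, "-"),
    ({"A": "y", "B": "q"}, "-"),
]
print(gain(examples, "A"))  # 1.0: children are pure, entropy drops to 0
print(gain(examples, "B"))  # 0.0: each child is still 50/50, the attribute is useless
```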

9

Expected Information Gain Calculation

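Here E(p) is the entropy of a collection whose fraction of positive examples is p.

S: [10+, 15-], E(2/5) = 0.97
- (10 examples) [8+, 2-], E(8/10) = 0.72
- (12 examples) [1+, 11-], E(1/12) = 0.41
- (3 examples) [1+, 2-], E(1/3) = 0.92

Gain(S, A) = 0.97 - (10/25 · .72 + 12/25 · .41 + 3/25 · .92) = 0.97 - .60 = 0.37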
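The arithmetic can be checked with a few lines of Python working directly from the class counts (a sketch; `gain_from_counts` is a hypothetical helper). Computed without intermediate rounding, E(1/12) comes out at about 0.414 and the gain at about 0.373, which agrees with the 0.37 above.

```python
import math

def entropy(pos, neg):
    """Entropy of a [pos+, neg-] collection; zero counts contribute nothing."""
    total = pos + neg
    return sum(-(c / total) * math.log2(c / total) for c in (pos, neg) if c > 0)

def gain_from_counts(parent, children):
    """parent: (pos, neg) for S; children: one (pos, neg) pair per value of A."""
    n = sum(parent)
    remainder = sum((p + q) / n * entropy(p, q) for p, q in children)
    return entropy(*parent) - remainder

# S = [10+, 15-] split into [8+, 2-], [1+, 11-], [1+, 2-]
print(round(entropy(1, 11), 3))                                          # 0.414
print(round(gain_from_counts((10, 15), [(8, 2), (1, 11), (1, 2)]), 3))   # 0.373
```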

10

Example

S: [9+, 5-], E(9/14) = 0.940

11

Choosing the First Attribute

Two candidate splits of S: [9+, 5-], E = 0.940
- Humidity
  - High: [3+, 4-], E = 0.985
  - Low: [6+, 1-], E = 0.592
- Wind
  - High: [6+, 2-], E = 0.811
  - Low: [3+, 3-], E = 1.000

Gain(S, Humidity) = .940 - (7/14)(.985) - (7/14)(.592) = .151

Gain(S, Wind) = .940 - (8/14)(.811) - (6/14)(1.00) = .048

Gain(S, Outlook) = .246

Gain(S, Temperature) = .029

Outlook has the largest gain, so it becomes the test at the root.
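The numbers on this slide match the 14-day PlayTennis training set from Tom Mitchell's textbook "Machine Learning" (1997); the slide that showed the table was an image and did not survive, so the rows below are our assumption about the data used. The sketch computes the gain of every attribute at the root. Because it does not round intermediate entropies, some values differ from the slide in the third decimal (about 0.152 for Humidity and 0.247 for Outlook), but the ranking is the same and Outlook is chosen.

```python
import math
from collections import Counter

# Assumed data: Mitchell's PlayTennis table.
# Columns: day, Outlook, Temperature, Humidity, Wind, PlayTennis
ROWS = """
D1  Sunny    Hot  High   Weak   No
D2  Sunny    Hot  High   Strong No
D3  Overcast Hot  High   Weak   Yes
D4  Rain     Mild High   Weak   Yes
D5  Rain     Cool Normal Weak   Yes
D6  Rain     Cool Normal Strong No
D7  Overcast Cool Normal Strong Yes
D8  Sunny    Mild High   Weak   No
D9  Sunny    Cool Normal Weak   Yes
D10 Rain     Mild Normal Weak   Yes
D11 Sunny    Mild Normal Strong Yes
D12 Overcast Mild High   Strong Yes
D13 Overcast Hot  Normal Weak   Yes
D14 Rain     Mild High   Strong No
"""
ATTRS = ["Outlook", "Temperature", "Humidity", "Wind"]
EXAMPLES = []
for line in ROWS.strip().splitlines():
    _day, *vals, label = line.split()
    EXAMPLES.append((dict(zip(ATTRS, vals)), label))

def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def gain(examples, attribute):
    remainder = 0.0
    for v in {x[attribute] for x, _ in examples}:
        subset = [y for x, y in examples if x[attribute] == v]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([y for _, y in examples]) - remainder

for a in ATTRS:
    print(f"Gain(S, {a}) = {gain(EXAMPLES, a):.3f}")
print(max(ATTRS, key=lambda a: gain(EXAMPLES, a)))  # Outlook
```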

12

After the First Iteration

D1, D2, ..., D14: [9+, 5-]

Outlook
- Sunny: D1, D2, D8, D9, D11, [2+, 3-], E = .970 → ?
- Overcast: D3, D7, D12, D13, [4+, 0-] → Yes
- Rain: D4, D5, D6, D10, D14, [3+, 2-] → ?

Gain(S_sunny, Humidity) = .970

Gain(S_sunny, Temperature) = .570

Gain(S_sunny, Wind) = .019
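Repeating the computation on the five Sunny days shows why Humidity is chosen next. As before, the attribute values and labels for D1, D2, D8, D9, and D11 are assumed from Mitchell's PlayTennis table; the printed values (0.971, 0.571, 0.020) differ from the slide only by rounding.

```python
import math
from collections import Counter

# Sunny days only (assumed values from Mitchell's PlayTennis table).
SUNNY = [
    ({"Temperature": "Hot",  "Humidity": "High",   "Wind": "Weak"},   "No"),   # D1
    ({"Temperature": "Hot",  "Humidity": "High",   "Wind": "Strong"}, "No"),   # D2
    ({"Temperature": "Mild", "Humidity": "High",   "Wind": "Weak"},   "No"),   # D8
    ({"Temperature": "Cool", "Humidity": "Normal", "Wind": "Weak"},   "Yes"),  # D9
    ({"Temperature": "Mild", "Humidity": "Normal", "Wind": "Strong"}, "Yes"),  # D11
]

def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def gain(examples, attribute):
    remainder = 0.0
    for v in {x[attribute] for x, _ in examples}:
        subset = [y for x, y in examples if x[attribute] == v]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([y for _, y in examples]) - remainder

for a in ("Humidity", "Temperature", "Wind"):
    print(f"Gain(S_sunny, {a}) = {gain(SUNNY, a):.3f}")
```

Humidity splits the Sunny days into two pure groups, so its gain equals the full entropy of the subset.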

13

Final Tree

Outlook
- Sunny → Humidity
  - High → No
  - Low → Yes
- Overcast → Yes
- Rain → Wind
  - Strong → No
  - Weak → Yes
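Putting the pieces together, the recursive algorithm with information gain as the chooser reproduces this tree. This is a minimal ID3-style sketch under the same assumptions as the earlier sketches (Mitchell's PlayTennis rows; trees as `(attribute, branches)` tuples with bare labels at the leaves; hypothetical function names). The table writes Humidity's second value as Normal, which the slide labels Low.

```python
import math
from collections import Counter

# Assumed data: Mitchell's PlayTennis table (day, Outlook, Temperature, Humidity, Wind, label).
ROWS = """
D1  Sunny    Hot  High   Weak   No
D2  Sunny    Hot  High   Strong No
D3  Overcast Hot  High   Weak   Yes
D4  Rain     Mild High   Weak   Yes
D5  Rain     Cool Normal Weak   Yes
D6  Rain     Cool Normal Strong No
D7  Overcast Cool Normal Strong Yes
D8  Sunny    Mild High   Weak   No
D9  Sunny    Cool Normal Weak   Yes
D10 Rain     Mild Normal Weak   Yes
D11 Sunny    Mild Normal Strong Yes
D12 Overcast Mild High   Strong Yes
D13 Overcast Hot  Normal Weak   Yes
D14 Rain     Mild High   Strong No
"""
ATTRS = ["Outlook", "Temperature", "Humidity", "Wind"]
EXAMPLES = [(dict(zip(ATTRS, row[1:5])), row[5])
            for row in (line.split() for line in ROWS.strip().splitlines())]
VALUES = {a: sorted({x[a] for x, _ in EXAMPLES}) for a in ATTRS}

def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())

def gain(examples, attribute):
    remainder = 0.0
    for v in {x[attribute] for x, _ in examples}:
        subset = [y for x, y in examples if x[attribute] == v]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([y for _, y in examples]) - remainder

def id3(examples, attributes, default=None):
    """Greedy top-down construction: split on the highest-gain attribute."""
    if not examples:
        return default                      # no examples: use the parent's guess
    labels = [y for _, y in examples]
    if len(set(labels)) == 1:
        return labels[0]                    # unanimous classification
    guess = Counter(labels).most_common(1)[0][0]
    if not attributes:
        return guess                        # attributes exhausted: majority label
    best = max(attributes, key=lambda a: gain(examples, a))
    remaining = [a for a in attributes if a != best]
    return (best, {v: id3([(x, y) for x, y in examples if x[best] == v], remaining, guess)
                   for v in VALUES[best]})

def classify(tree, x):
    """Follow branches until reaching a leaf label."""
    while isinstance(tree, tuple):
        attribute, branches = tree
        tree = branches[x[attribute]]
    return tree

tree = id3(EXAMPLES, ATTRS)
print(tree)
print(classify(tree, {"Outlook": "Rain", "Temperature": "Mild",
                      "Humidity": "High", "Wind": "Weak"}))   # Yes
```

The first print shows `('Outlook', {'Overcast': 'Yes', 'Rain': ('Wind', {'Strong': 'No', 'Weak': 'Yes'}), 'Sunny': ('Humidity', {'High': 'No', 'Normal': 'Yes'})})`, the same shape as the final tree. Temperature never appears, because it is never the highest-gain attribute at any node.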

14

Some Additional Technical Problems

- Noise in the data
  - Not much you can do about it
- Overfitting
  - What's good for the training set may not be good for the full data set
- Missing values
  - Attribute values omitted in training set cases or in subsequent (untagged) cases to be classified

15

Data Overfitting

- Overfitting, definition
  - Given a set of trees T, a tree $t \in T$ is said to overfit the training data if there is some alternative tree $t'$ such that $t$ has better accuracy than $t'$ over the training examples, but $t'$ has better accuracy than $t$ over the entire set of examples
- The decision not to stop until attributes or examples are exhausted is somewhat arbitrary
  - you could always stop and take the majority decision, and the tree would be shorter as a result!
- The standard stopping rule provides 100% accuracy on the training set, but not necessarily on the test set
  - if there is noise in the training data
  - if the training data is too small to give good coverage
    - there is likely to be spurious correlation

16

Overfitting (continued)

- How to avoid overfitting
  - stop growing the tree before it perfectly classifies the training data
  - allow overfitting, but post-prune the tree
- Training and validation sets
  - the training set is used to build the tree
  - a separate validation set is used to evaluate the accuracy over subsequent data, and to evaluate the impact of pruning
  - the validation set is unlikely to exhibit the same noise and spurious correlation
  - rule of thumb: 2/3 to the training set, 1/3 to the validation set
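A sketch of the 2/3 : 1/3 rule of thumb: shuffle, then cut. Shuffling matters if the examples arrive in some systematic order. The helper name `train_validation_split` and the fixed `seed` are our own choices for illustration.

```python
import random

def train_validation_split(examples, train_fraction=2/3, seed=0):
    """Shuffle a copy of `examples` and cut it into (training, validation) lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)   # seeded so the split is reproducible
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

train, validation = train_validation_split(range(30))
print(len(train), len(validation))   # 20 10
```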

17

Reduced Error Pruning

- Pruning a node consists of removing all subtrees, making it a leaf, and assigning it the most common classification of the associated training examples.
- Prune nodes iteratively and greedily: next remove the node that most improves accuracy over the validation set
  - but never remove a node that decreases accuracy
- A good method if you have lots of cases
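A sketch of reduced error pruning as described above. It assumes a tree format of our own: a leaf is `{"label": y}` and an internal node is `{"attr": A, "children": {value: subtree}, "majority": y}`, where `majority` records the most common classification of the training examples that reached the node when the tree was built. Each round tries pruning every internal node, keeps the candidate with the best validation accuracy, and stops as soon as even the best candidate would lower accuracy; ties are resolved in favor of pruning, since that does not decrease accuracy. The small tree and validation set are hypothetical; the Temperature test under Rain plays the role of a spurious split.

```python
import copy

def classify(node, x):
    """Follow the tree; an unseen attribute value falls back to the node's majority."""
    while "attr" in node:
        child = node["children"].get(x[node["attr"]])
        if child is None:
            return node["majority"]
        node = child
    return node["label"]

def accuracy(tree, examples):
    return sum(classify(tree, x) == y for x, y in examples) / len(examples)

def internal_paths(node, path=()):
    """Yield the branch-value path from the root to every internal node."""
    if "attr" in node:
        yield path
        for value, child in node["children"].items():
            yield from internal_paths(child, path + (value,))

def pruned_copy(tree, path):
    """Copy of `tree` with the node at `path` turned into a majority-label leaf."""
    new = copy.deepcopy(tree)
    if not path:
        return {"label": new["majority"]}
    parent = new
    for value in path[:-1]:
        parent = parent["children"][value]
    node = parent["children"][path[-1]]
    parent["children"][path[-1]] = {"label": node["majority"]}
    return new

def reduced_error_prune(tree, validation):
    """Greedily prune while the best single prune does not decrease validation accuracy."""
    current = accuracy(tree, validation)
    while True:
        candidates = [pruned_copy(tree, p) for p in internal_paths(tree)]
        if not candidates:
            return tree                      # already a single leaf
        best = max(candidates, key=lambda t: accuracy(t, validation))
        best_acc = accuracy(best, validation)
        if best_acc < current:
            return tree                      # every prune would hurt: stop
        tree, current = best, best_acc

# Hypothetical tree and validation set.
tree = {"attr": "Outlook", "majority": "Yes", "children": {
    "Overcast": {"label": "Yes"},
    "Sunny": {"attr": "Humidity", "majority": "No", "children": {
        "High": {"label": "No"}, "Normal": {"label": "Yes"}}},
    "Rain": {"attr": "Temperature", "majority": "Yes", "children": {
        "Hot": {"label": "No"}, "Mild": {"label": "Yes"}, "Cool": {"label": "Yes"}}},
}}
validation = [
    ({"Outlook": "Sunny", "Humidity": "High", "Temperature": "Hot"}, "No"),
    ({"Outlook": "Sunny", "Humidity": "Normal", "Temperature": "Mild"}, "Yes"),
    ({"Outlook": "Rain", "Humidity": "High", "Temperature": "Hot"}, "Yes"),
    ({"Outlook": "Rain", "Humidity": "Normal", "Temperature": "Cool"}, "Yes"),
    ({"Outlook": "Overcast", "Humidity": "High", "Temperature": "Hot"}, "Yes"),
]
pruned = reduced_error_prune(tree, validation)
print(accuracy(tree, validation), accuracy(pruned, validation))   # 0.8 1.0
print(pruned["children"]["Rain"])                                  # {'label': 'Yes'}
```

Here the first round prunes the Rain subtree (validation accuracy rises from 0.8 to 1.0); in the second round both remaining candidates would drop accuracy back to 0.8, so pruning stops with the Humidity test intact.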

18

Overfitting (continued)

- How to avoid overfitting
  - stop growing the tree before it perfectly classifies the training data
  - allow overfitting, but post-prune the tree
- Training and validation sets
  - the training set is used to form the learned hypothesis
  - the validation set is used to evaluate the accuracy over subsequent data, and to evaluate the impact of pruning
  - justification: the validation set is unlikely to exhibit the same noise and spurious correlation
  - rule of thumb: 2/3 to the training set, 1/3 to the validation set

19

Missing Attribute Values

- Situations
  - missing attribute value(s) in the training set
  - missing value(s) in the validation or subsequent tests
- Quick and dirty methods
  - assign it the value most common among the other training examples at the same node
  - assign it the value most common among the other training examples at the same node that have the same classification
- Fractional method
  - assign a probability to each value of A based on observed frequencies
  - create fractional cases with these probabilities
  - weight information gain with each case's fraction
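The two quick-and-dirty methods can be sketched as follows, assuming (our convention) that a missing value is stored as `None` and that at least one example in the pool has an observed value. The node's examples are hypothetical, chosen so that the two methods give different answers.

```python
from collections import Counter

def most_common_value(examples, attribute):
    """Most common observed (non-None) value of `attribute` among the examples."""
    observed = [x[attribute] for x, _ in examples if x.get(attribute) is not None]
    return Counter(observed).most_common(1)[0][0]

def fill_missing(examples, attribute, same_class=False):
    """Return copies of the examples with missing values of `attribute` filled in.

    same_class=False: most common value among all examples at this node.
    same_class=True:  most common value among examples with the same classification.
    """
    filled = []
    for x, y in examples:
        if x.get(attribute) is None:
            pool = [(x2, y2) for x2, y2 in examples if y2 == y] if same_class else examples
            x = {**x, attribute: most_common_value(pool, attribute)}
        filled.append((x, y))
    return filled

# Hypothetical examples at one node; the last one is missing its Wind value.
node = [
    ({"Wind": "Weak"}, "Yes"),
    ({"Wind": "Weak"}, "Yes"),
    ({"Wind": "Strong"}, "No"),
    ({"Wind": "Strong"}, "No"),
    ({"Wind": "Weak"}, "No"),
    ({"Wind": None}, "No"),
]
print(fill_missing(node, "Wind")[-1])                   # ({'Wind': 'Weak'}, 'No')
print(fill_missing(node, "Wind", same_class=True)[-1])  # ({'Wind': 'Strong'}, 'No')
```

Weak is the most common value at the node overall, but among the other No examples Strong is more common, so the second method fills in a different value.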

20

Example Fractional Values

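D1, D2, D8, D9, D11: [2+, 3-], E = .970 (D8's wind value is missing; two of the other four days have strong wind and two have weak wind, so D8 is split 0.5/0.5)
- wind = strong: D2 (1.0), D8 (0.5), D11 (1.0): [1+, 1.5-], E = .970
- wind = weak: D1 (1.0), D8 (0.5), D9 (1.0): [1+, 1.5-], E = .970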
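A sketch of the fractional method applied to this example. Each case carries a weight; a case whose Wind value is missing (stored as `None`, our convention) is copied into every branch with its weight scaled by the observed frequency of that value, and entropy and gain are then computed from weighted class counts. The class labels (D1, D2, D8 No; D9, D11 Yes) are assumed from Mitchell's PlayTennis table. With them each branch holds 1.0 Yes and 1.5 No, giving a weighted entropy of about 0.971 per branch and a weighted gain for Wind of 0.

```python
import math
from collections import Counter, defaultdict

def fractional_split(cases, attribute):
    """Split (name, attrs, label, weight) cases on `attribute`.

    A case whose value is None is sent down every branch, with its weight
    multiplied by the fraction of observed weight that has that value.
    Returns {value: [(name, label, weight), ...]}.
    """
    observed = Counter()
    for _, x, _, w in cases:
        if x.get(attribute) is not None:
            observed[x[attribute]] += w
    total = sum(observed.values())
    branches = defaultdict(list)
    for name, x, label, w in cases:
        value = x.get(attribute)
        if value is not None:
            branches[value].append((name, label, w))
        else:
            for v, count in observed.items():
                branches[v].append((name, label, w * count / total))
    return dict(branches)

def weighted_entropy(cases):
    """Entropy computed from the summed weights of each class."""
    counts = Counter()
    for _, label, w in cases:
        counts[label] += w
    n = sum(counts.values())
    return sum(-(c / n) * math.log2(c / n) for c in counts.values() if c > 0)

sunny = [
    ("D1",  {"Wind": "Weak"},   "No",  1.0),
    ("D2",  {"Wind": "Strong"}, "No",  1.0),
    ("D8",  {"Wind": None},     "No",  1.0),   # Wind value missing
    ("D9",  {"Wind": "Weak"},   "Yes", 1.0),
    ("D11", {"Wind": "Strong"}, "Yes", 1.0),
]
parent = [(name, label, w) for name, _, label, w in sunny]
branches = fractional_split(sunny, "Wind")
for value, cases in sorted(branches.items()):
    listing = ", ".join(f"{name}({w})" for name, _, w in cases)
    print(f"wind={value}: {listing}  E={weighted_entropy(cases):.3f}")

# Information gain with every case counted by its fractional weight.
total_w = sum(w for _, _, w in parent)
remainder = sum(sum(w for _, _, w in c) / total_w * weighted_entropy(c)
                for c in branches.values())
print(f"Gain = {weighted_entropy(parent) - remainder:.3f}")
```

This prints `wind=Strong: D2(1.0), D8(0.5), D11(1.0)  E=0.971`, `wind=Weak: D1(1.0), D8(0.5), D9(1.0)  E=0.971`, and `Gain = 0.000`: on these five days Wind carries no information once D8 is spread evenly across both branches.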

21

Decision Tree Learning

- The problem: given a data set, produce the shortest-depth decision tree that accurately classifies the data
- The heuristic: build the tree greedily on the basis of expected entropy loss (information gain)
- Common problems
  - the training set is not a good surrogate for the full data set
    - noise
    - spurious correlations
    - thus the optimal tree for the training set may not be accurate for the full data set (overfitting)
  - missing values in training set or subsequent cases