Part II.2 A-Posteriori Methods and Evolutionary Multiobjective Optimization

1 / 46

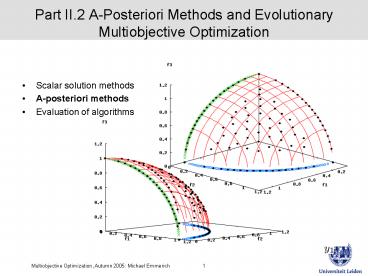

Title: Part II.2 A-Posteriori Methods and Evolutionary Multiobjective Optimization

1

Part II.2 A-Posteriori Methods and Evolutionary

Multiobjective Optimization

- Scalar solution methods

- A-posteriori methods

- Evaluation of algorithms

A-posteriori methods

- A-priori method
  - First define preferences, including decisions in case of conflicts (e.g., by specifying a utility function)
  - Let the algorithm search for a single best solution
  - User selects the obtained solution
  - => Single-objective optimization can be used
- A-posteriori method
  - Specify general, possibly conflicting, goals
  - Let the algorithm find the Pareto front
  - User selects the best solution among the solutions on the Pareto front
  - => Algorithms for obtaining Pareto fronts are needed!

A-Posteriori methods (Pareto Optimization)

- Approximation set: a finite set of non-dominated solutions
- Strive for good coverage of, and convergence to, the Pareto front!
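Whether a finite set qualifies as an approximation set can be checked with the Pareto-dominance test. The following is a minimal Python sketch, assuming all objectives are minimized; the helper names `dominates` and `non_dominated` are hypothetical and not taken from the slides.

```python
from typing import List, Sequence, Tuple

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a Pareto-dominates b (minimization): a is no worse in every
    objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def non_dominated(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep the points that no other point dominates (O(q^2 m) for q points,
    m objectives). A point never dominates itself, so no self-check is needed."""
    return [p for p in points if not any(dominates(other, p) for other in points)]

candidates = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
print(non_dominated(candidates))  # [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
```

Here (3.0, 3.0) is dropped because (2.0, 2.0) is better in both objectives; the remaining three points are mutually incomparable.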

Overview of Approaches

- Continuation methods
  - Starting from a Karush-Kuhn-Tucker (KKT) point, gradually extend the Pareto front by including neighboring KKT points
  - Problem: a connected Pareto front and differentiability are required
- Epsilon-constraint method
  - Obtain points on the Pareto front by solving constrained optimization problems: all but one objective are turned into constraints with fixed bound values
  - The constraint values are changed gradually until the whole Pareto front is sampled
  - The density/position of the points can be easily controlled
  - Problem: the effort grows exponentially with the number of objectives
- Population-based metaheuristics (evolutionary algorithms, particle swarm optimization, ...)
  - Use a selection/variation scheme to gradually move a population of search points to the Pareto front
  - Very flexible method, easy to apply in different search spaces
  - Problem: cannot guarantee optimality of the result
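The epsilon-constraint idea can be illustrated on a toy bi-objective problem. This sketch rests on assumptions not in the slides: the objectives f1(x) = x^2 and f2(x) = (x - 2)^2 on x in [0, 2] are made up for the example, and a brute-force search over a grid of x values stands in for a real constrained solver. Each bound eps on f2 yields one point of the Pareto front.

```python
from typing import Iterable, List, Tuple

def f1(x: float) -> float:
    return x * x            # toy objective 1 (hypothetical)

def f2(x: float) -> float:
    return (x - 2.0) ** 2   # toy objective 2 (hypothetical)

def epsilon_constraint(eps_values: Iterable[float], grid: List[float]) -> List[Tuple[float, float]]:
    """For each bound eps: minimize f1 subject to f2(x) <= eps.
    Brute force over a grid of x values stands in for a constrained solver."""
    front = []
    for eps in eps_values:
        feasible = [x for x in grid if f2(x) <= eps]
        if not feasible:
            continue  # bound too tight for this grid: no feasible x
        best = min(feasible, key=f1)
        front.append((f1(best), f2(best)))
    return front

grid = [i / 1000.0 for i in range(2001)]   # x in [0, 2]
eps_values = [0.5 * k for k in range(9)]    # eps = 0.0, 0.5, ..., 4.0
front = epsilon_constraint(eps_values, grid)
for a, b in front:
    print(f"f1 = {a:.3f}   f2 = {b:.3f}")
```

Spacing the bounds evenly controls where the points land on the front. With m objectives and k bound values per constrained objective, k^(m-1) such subproblems are needed, which is the source of the exponential growth in effort.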

Overview of Approaches

- Indicator-based methods
  - An approximation set A of the Pareto front consisting of q = |A| points (each of dimension n) is viewed as a single (n·q)-dimensional vector
  - A quality measure for a set of points, e.g., the dominated hypervolume, is defined and serves as a surrogate objective function
  - Problem: a reference point is required; the dimension of the problem may be too large if q is too large

An example of an indicator for the quality of an approximation set is the S-metric, which measures the area dominated by the points in A and bounded by the reference point; it is to be maximized.
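For two minimized objectives, the S-metric can be computed exactly by sorting the points by the first objective and summing horizontal strips. A minimal sketch under that assumption; the function name `hypervolume_2d` is hypothetical, and points that do not strictly dominate the reference point are ignored.

```python
from typing import List, Tuple

def hypervolume_2d(points: List[Tuple[float, float]], ref: Tuple[float, float]) -> float:
    """S-metric for two minimized objectives: area dominated by `points`
    and bounded by the reference point `ref`."""
    # Only points strictly better than ref in both objectives add area.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    best_f2 = ref[1]  # lowest f2 seen so far while sweeping by increasing f1
    for f1, f2 in pts:
        if f2 < best_f2:  # dominated points (f2 >= best_f2) add nothing
            # Strip between the levels f2 and best_f2, dominated from f1 up to ref[0].
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area

A = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
print(hypervolume_2d(A, (4.0, 4.0)))  # 6.0
```

Adding a dominated point such as (3.0, 3.0) leaves the value unchanged, which is why maximizing this indicator rewards convergence and spread rather than the number of points.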

Evolutionary Multiobjective Optimization (EMO)

Overview of the EMO field

State of the literature in EMOA

Biologically inspired terminology

Biological term: mathematical term
- Individual: element of the search space S
- Fitness: objective function value (penalty)
- Population: multi-set of elements of S
- Generation: iteration of the main loop
- Mutation operator: operator generating a new solution by adding a small perturbation to a given solution
- Recombination: operator generating a new solution by combining information of at least two given solutions
- Parents, offspring: given a set of variations generated from an original set, the original set is called the parents and the set of variations the offspring

These concepts are used slightly differently depending on the author; this is the way we will use them.
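The two variation operators defined above can be sketched for real-valued vectors (S = R^n). This is a hedged illustration: Gaussian mutation and intermediate (averaging) recombination are common textbook choices assumed here, not operators prescribed by the slides, and the function names are hypothetical.

```python
import random
from typing import List

def gaussian_mutation(x: List[float], sigma: float, rng: random.Random) -> List[float]:
    """Mutation: add a small perturbation N(0, sigma^2) to every coordinate."""
    return [xi + rng.gauss(0.0, sigma) for xi in x]

def intermediate_recombination(parents: List[List[float]]) -> List[float]:
    """Recombination: combine information of >= 2 parents by averaging them."""
    assert len(parents) >= 2, "recombination needs at least two parents"
    n = len(parents[0])
    return [sum(p[i] for p in parents) / len(parents) for i in range(n)]

rng = random.Random(42)
parents = [[0.0, 1.0], [2.0, 3.0]]
child = intermediate_recombination(parents)      # [1.0, 2.0]
offspring = gaussian_mutation(child, 0.1, rng)   # small perturbation of child
print(child, offspring)
```

The step size sigma controls how "small" the perturbation is; for discrete search spaces, bit flips or swaps would play the same role.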

General schema of evolutionary search

[Figure: cycle connecting the population of individuals, the application of variation operators (parents -> offspring), and the evaluation of fitness]

Example Steady state evolutionary algorithm
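A steady-state EA creates and inserts only one offspring per iteration instead of a whole new generation. The sketch below is a hypothetical single-objective (mu + 1) variant. It assumes minimization of the sphere function, an initialization box [-5, 5]^n, and a fixed mutation strength sigma, and it is meant to show the population / variation / evaluation / selection cycle, not a particular algorithm from the slides.

```python
import random
from typing import Callable, List

def steady_state_ea(f: Callable[[List[float]], float], n: int, mu: int,
                    sigma: float, iterations: int, seed: int = 0) -> List[float]:
    """(mu + 1) steady-state EA minimizing f: one offspring per iteration,
    which replaces the worst individual if it is better."""
    rng = random.Random(seed)
    # Initialization: mu random individuals in the assumed box [-5, 5]^n.
    pop = [[rng.uniform(-5.0, 5.0) for _ in range(n)] for _ in range(mu)]
    fit = [f(x) for x in pop]
    for _ in range(iterations):
        # Variation: recombine two random parents, then mutate the result.
        p1, p2 = rng.sample(pop, 2)
        child = [(a + b) / 2.0 + rng.gauss(0.0, sigma) for a, b in zip(p1, p2)]
        fc = f(child)  # evaluation of fitness
        # Selection: the offspring replaces the worst member if it is better.
        worst = max(range(mu), key=lambda i: fit[i])
        if fc < fit[worst]:
            pop[worst], fit[worst] = child, fc
    best = min(range(mu), key=lambda i: fit[i])
    return pop[best]

def sphere(x: List[float]) -> float:
    return sum(xi * xi for xi in x)

x_best = steady_state_ea(sphere, n=3, mu=10, sigma=0.1, iterations=2000)
print(x_best, sphere(x_best))
```

Because only the worst individual can be replaced, the best fitness in the population never gets worse, i.e., the scheme is elitist.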

Variation Operators

Initialization

Stochastic variation operators

Representation Independence

The concept of PISA (ETH Zuerich): the representation-dependent part (representation, variation operators) is separated from the representation-independent part (population model, selection operator).

Algorithms such as NSGA, SPEA2, and PAES are largely independent of the representation (search space and variation operators)!

Mutation

Recombination (Crossover) Operators

Advanced operators

Selection operator in EMOA

First generation EMOA

NSGA-II Algorithm

Non-dominated sorting genetic algorithm (NSGA-II)

(μ+λ)-Evolutionary algorithm

A-Posteriori methods (Pareto Optimization)

- A finite set of solutions
- Strive for good coverage of, and convergence to, the Pareto front!

A: Non-dominated sorting
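Non-dominated sorting partitions a population into fronts F1, F2, ..., where F1 is the non-dominated set, F2 is non-dominated once F1 is removed, and so on. A sketch of the fast non-dominated sorting bookkeeping used in NSGA-II (Deb et al.), assuming minimization; the names are illustrative and the code works on objective vectors only.

```python
from typing import List, Sequence

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """Pareto dominance for minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def fast_non_dominated_sort(F: List[Sequence[float]]) -> List[List[int]]:
    """Partition the indices of the objective vectors F into fronts, O(m N^2)."""
    N = len(F)
    S = [[] for _ in range(N)]  # S[p]: indices of the points that p dominates
    n = [0] * N                 # n[p]: number of points that dominate p
    fronts: List[List[int]] = [[]]
    for p in range(N):
        for q in range(N):
            if dominates(F[p], F[q]):
                S[p].append(q)
            elif dominates(F[q], F[p]):
                n[p] += 1
        if n[p] == 0:
            fronts[0].append(p)  # nobody dominates p: first front
    i = 0
    while fronts[i]:
        nxt = []
        for p in fronts[i]:
            for q in S[p]:
                n[q] -= 1        # remove p's "vote" against q
                if n[q] == 0:
                    nxt.append(q)
        i += 1
        fronts.append(nxt)
    return fronts[:-1]           # drop the trailing empty front

F = [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0), (3.0, 3.0), (4.0, 4.0), (5.0, 5.0)]
print(fast_non_dominated_sort(F))  # [[0, 1, 2], [3], [4], [5]]
```

NSGA-II fills the next population front by front; only the last front that does not fit completely needs a secondary criterion.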

B: Crowding distance sorting

Objective space (NSGA-II) vs. variable space (NSGA)
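Within the last front that fits only partially, NSGA-II prefers points with a larger crowding distance, i.e., points in less crowded regions of objective space. A minimal sketch for the objective vectors of one front; the function name is hypothetical, and boundary points of each objective receive an infinite distance so that the extremes are always kept.

```python
import math
from typing import List, Sequence

def crowding_distance(front: List[Sequence[float]]) -> List[float]:
    """Crowding distance of each objective vector in one front: for every
    objective, add the normalized gap between the two neighbours."""
    q = len(front)
    dist = [0.0] * q
    if q == 0:
        return dist
    m = len(front[0])
    for k in range(m):
        order = sorted(range(q), key=lambda i: front[i][k])
        lo, hi = front[order[0]][k], front[order[-1]][k]
        dist[order[0]] = dist[order[-1]] = math.inf  # keep the extremes
        if hi == lo:
            continue  # all values equal in this objective: no spread info
        for j in range(1, q - 1):
            i = order[j]
            dist[i] += (front[order[j + 1]][k] - front[order[j - 1]][k]) / (hi - lo)
    return dist

print(crowding_distance([(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]))  # [inf, 2.0, inf]
```

Sorting by descending crowding distance and truncating then yields the survivors from that front.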

NSGA-II Complete procedure

Download NSGA-II: http://www.iitk.ac.in/kangal/soft.htm

NSGA-II ZDT1

NSGA-II ZDT2

Results ZDT4

Non-dominated sorting genetic algorithm

SMS-EMOA

Remarks

- Introduced by Beume, Emmerich, Naujoks, 2005
- Outperforms standard approaches on common benchmarks for continuous multiobjective optimization (ZDT and DTLZ)
- Especially well suited for small approximation sets
- Paradigm shift: indicator-based Pareto optimization
- Can be hybridized with the S-gradient method (Deutz, Beume, Emmerich 2007)

SMS-EMOA Basic Algorithm

Replacement

The following invariant holds:

SMS-EMOA

Hypervolume in 2-D

Computation of hypervolume in 3-D

- An O(m q^3) algorithm was proposed by Emmerich
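One straightforward way to compute the hypervolume in 3-D, not necessarily the algorithm referred to above, is to sweep along the third objective and sum prisms whose base is a 2-D hypervolume. A sketch assuming minimization; the names are hypothetical, the small 2-D helper is repeated so the block stays self-contained, and the cost is roughly O(q^2 log q) for q points.

```python
from typing import List, Tuple

def hv2d(points: List[Tuple[float, float]], ref: Tuple[float, float]) -> float:
    """2-D hypervolume (minimization) by a sweep over the first objective."""
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area, best = 0.0, ref[1]
    for x, y in pts:
        if y < best:
            area += (ref[0] - x) * (best - y)
            best = y
    return area

def hypervolume_3d(points: List[Tuple[float, float, float]],
                   ref: Tuple[float, float, float]) -> float:
    """Slice along f3: between consecutive f3 levels, the dominated region is a
    prism whose base is the 2-D hypervolume of all points at or below that level."""
    pts = sorted((p for p in points if all(a < r for a, r in zip(p, ref))),
                 key=lambda p: p[2])
    volume = 0.0
    for i, p in enumerate(pts):
        z_next = pts[i + 1][2] if i + 1 < len(pts) else ref[2]
        base = hv2d([(a, b) for a, b, _ in pts[: i + 1]], ref[:2])
        volume += base * (z_next - p[2])
    return volume

print(hypervolume_3d([(0.0, 0.0, 0.5), (0.5, 0.5, 0.0)], (1.0, 1.0, 1.0)))  # 0.625
```

In the example the two boxes have volumes 0.5 and 0.25 and overlap in a box of volume 0.125, giving 0.625 for their union.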

SMS-EMOA (20,000 evaluations)

Pareto front in higher dimensions

Points mark a finite-set approximation of the Pareto front

SMS-EMOA Conclusions

- SMS-EMOA tries to maximize the dominated hypervolume
- Increments in hypervolume are used as a selection criterion in an evolutionary algorithm
- The evolutionary algorithm gradually improves the dominated hypervolume of the population by a variation-selection scheme
- SMS-EMOA is the best-performing MCO technique on standard benchmarks from the literature (cf. EJOR 2006 preprint, EMO Conference 2005)
- A bottleneck is the high computational cost of computing hypervolume increments if the number of objectives is high
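The use of hypervolume increments as a selection criterion can be sketched for the (mu + 1) replacement step: among the mu + 1 points, discard the one from the worst non-dominated front whose exclusive hypervolume contribution is smallest. This is a hedged illustration restricted to two minimized objectives, assuming a reference point that every point dominates; the helper names are hypothetical, and the neighbour-box formula for contributions only holds in 2-D.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def worst_front(pop: List[Point]) -> List[int]:
    """Indices of the last non-dominated front (peel off fronts 1, 2, ...)."""
    remaining = list(range(len(pop)))
    while True:
        front = [i for i in remaining
                 if not any(dominates(pop[j], pop[i]) for j in remaining)]
        if len(front) == len(remaining):
            return front
        remaining = [i for i in remaining if i not in front]

def contributions_2d(front: List[Point], ref: Point) -> List[float]:
    """Exclusive hypervolume contribution of each point of a mutually
    non-dominated 2-D front: the box spanned up to its two neighbours."""
    order = sorted(range(len(front)), key=lambda i: front[i])
    contrib = [0.0] * len(front)
    for pos, i in enumerate(order):
        x_right = front[order[pos + 1]][0] if pos + 1 < len(order) else ref[0]
        y_upper = front[order[pos - 1]][1] if pos > 0 else ref[1]
        contrib[i] = (x_right - front[i][0]) * (y_upper - front[i][1])
    return contrib

def sms_emoa_reduce(pop: List[Point], ref: Point) -> List[Point]:
    """(mu + 1) replacement: drop the worst-front member with the smallest
    hypervolume contribution and return the mu survivors."""
    idx = worst_front(pop)
    c = contributions_2d([pop[i] for i in idx], ref)
    loser = idx[min(range(len(idx)), key=lambda k: c[k])]
    return [p for i, p in enumerate(pop) if i != loser]

pop = [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0), (3.0, 1.8)]
print(sms_emoa_reduce(pop, (5.0, 5.0)))  # [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
```

Here all four points are mutually non-dominated, and (3.0, 1.8) is removed because its exclusive box, 1.0 x 0.2, is the smallest. For more objectives the contributions require general hypervolume computations, which is the bottleneck noted above.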

Summary

- A large number of EMOA algorithms is available today
- The most popular variants are NSGA-II and SPEA2
- In the EMOA field, other population-based algorithms (particle swarm optimization, simulated annealing) are also discussed
- A new generation of EMOA (IBEA, SMS-EMOA) is being developed that directly addresses the optimization of a performance measure
- Statistical performance measurement on test problems is a crucial technique to engineer and select EMOA techniques
- Biennial conference EMOO: 2001, 2003, 2005 (Lecture Notes in Computer Science)
- Biennial conference MCDM (operations-research oriented)