ID3 example

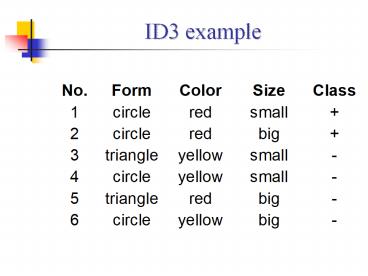

Title: ID3 example

1

ID3 example

2

| No. | Risk (Classification) | Credit History | Debt | Collateral | Income |
|---|---|---|---|---|---|
| 1 | High | Bad | High | None | 0 to 15k |
| 2 | High | Unknown | High | None | 15 to 35k |
| 3 | Moderate | Unknown | Low | None | 15 to 35k |
| 4 | High | Unknown | Low | None | 0 to 15k |
| 5 | Low | Unknown | Low | None | Over 35k |
| 6 | Low | Unknown | Low | Adequate | Over 35k |
| 7 | High | Bad | Low | None | 0 to 15k |
| 8 | Moderate | Bad | Low | Adequate | Over 35k |
| 9 | Low | Good | Low | None | Over 35k |
| 10 | Low | Good | High | Adequate | Over 35k |
| 11 | High | Good | High | None | 0 to 15k |
| 12 | Moderate | Good | High | None | 15 to 35k |
| 13 | Low | Good | High | None | Over 35k |
| 14 | High | Bad | High | None | 15 to 35k |

4

Algorithm for building the decision tree

func tree(ex_set, attributes, default)

1. If ex_set is empty, return a leaf labeled with default.
2. If all examples in ex_set are in the same class, return a leaf labeled with that class.
3. If attributes is empty, return a leaf labeled with the disjunction of the classes in ex_set.
4. Select an attribute A, create a node for A, and label the node with A.
   - Remove A from attributes, giving attributes'.
   - m = majority(ex_set)
   - For each value V of A, repeat:
     - Let partitionV be the set of examples from ex_set with value V for A.
     - Create nodeV = tree(partitionV, attributes', m).
     - Create a link from node A to nodeV and label the link with V.
   - end
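The recursive procedure above can be sketched in Python as follows. This is a minimal sketch, not a definitive implementation: the dictionary-based tree, the `target` argument, and the `choose` callback (which stands in for "select an attribute A") are assumptions introduced for illustration. The sketch also branches only on attribute values that actually occur in the current examples, so the empty-set case of step 1 is reached only when the function is called with no examples.

```python
from collections import Counter


def majority(examples, target):
    """Return the most common class label among the examples."""
    return Counter(ex[target] for ex in examples).most_common(1)[0][0]


def build_tree(examples, attributes, default, target, choose):
    """Build a decision tree as nested dicts; leaves are plain class labels.

    `choose(examples, attributes)` is a hypothetical callback that picks
    the attribute to split on (ID3 would pick the one with the highest gain).
    """
    # Step 1: no examples -> leaf labeled with the default class.
    if not examples:
        return default
    classes = {ex[target] for ex in examples}
    # Step 2: all examples share one class -> leaf labeled with that class.
    if len(classes) == 1:
        return classes.pop()
    # Step 3: no attributes left -> leaf labeled with the disjunction of classes.
    if not attributes:
        return " or ".join(sorted(classes))
    # Step 4: select an attribute, then recurse on each partition.
    a = choose(examples, attributes)
    remaining = [x for x in attributes if x != a]
    m = majority(examples, target)
    node = {"attribute": a, "branches": {}}
    # Assumption: only values observed in the examples get a branch.
    for v in sorted({ex[a] for ex in examples}):
        partition = [ex for ex in examples if ex[a] == v]
        node["branches"][v] = build_tree(partition, remaining, m, target, choose)
    return node


if __name__ == "__main__":
    data = [
        {"income": "0 to 15k", "risk": "high"},
        {"income": "over 35k", "risk": "low"},
    ]
    # Placeholder selection rule: always take the first remaining attribute.
    print(build_tree(data, ["income"], "high", "risk", lambda ex, attrs: attrs[0]))
```

Passing the selection rule as a callback keeps the skeleton separate from the gain computation, which can then be plugged in as `choose`.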

5

Information theory

- Universe of messages

- M = {m1, m2, ..., mn}

- Given a probability p(mi) of occurrence for every message in M, the informational content of M can be defined as

$$I(M) = -\sum_{i=1}^{n} p(m_i) \log_2 p(m_i)$$

6

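Informational content I(Arb)

- p(risk is high) = 6/14

- p(risk is moderate) = 3/14

- p(risk is low) = 5/14

- The informational content of the decision tree is

$$I(\mathit{Arb}) = -\frac{6}{14}\log_2\frac{6}{14} - \frac{3}{14}\log_2\frac{3}{14} - \frac{5}{14}\log_2\frac{5}{14} \approx 1.531 \text{ bits}$$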
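The value for the credit table can be checked numerically. The snippet below is a small sketch; the function name `information` is an assumption chosen for illustration, and it takes class counts rather than probabilities.

```python
import math


def information(counts):
    """Informational content, in bits, of a class distribution given as counts."""
    total = sum(counts)
    # Classes with zero count contribute nothing and are skipped.
    return -sum(c / total * math.log2(c / total) for c in counts if c > 0)


# Class counts from the credit table: 6 high, 3 moderate, 5 low.
i_tree = information([6, 3, 5])
print(round(i_tree, 3))  # 1.531
```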

7

Informational gain G(A)

- For an attribute A, the informational gain obtained by selecting this attribute as the root of the tree equals the total informational content of the tree minus the informational content still needed to finish the classification (building the tree) after selecting A as root.

- G(A) = I(Arb) - E(A)

8

Computing E(A)

- Set of learning examples C

- Attribute A with n values in the root -> C is divided into {C1, C2, ..., Cn}

- $E(A) = \sum_{i=1}^{n} \frac{|C_i|}{|C|} I(C_i)$
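As a worked instance, splitting the 14 credit examples on income gives subsets of 4, 4 and 6 examples (0 to 15k, 15 to 35k, over 35k). Assuming E(A) is the size-weighted average of the subsets' informational content, and reading the class counts off the table (all four high; two high and two moderate; five low and one moderate), the arithmetic works out as:

$$
\begin{aligned}
I(C_1) &= 0, \qquad I(C_2) = -\tfrac{2}{4}\log_2\tfrac{2}{4} - \tfrac{2}{4}\log_2\tfrac{2}{4} = 1, \\
I(C_3) &= -\tfrac{5}{6}\log_2\tfrac{5}{6} - \tfrac{1}{6}\log_2\tfrac{1}{6} \approx 0.650, \\
E(\text{income}) &= \tfrac{4}{14}\cdot 0 + \tfrac{4}{14}\cdot 1 + \tfrac{6}{14}\cdot 0.650 \approx 0.564 \text{ bits}
\end{aligned}
$$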

9

- Income as root

- C1 = {1, 4, 7, 11}

- C2 = {2, 3, 12, 14}

- C3 = {5, 6, 8, 9, 10, 13}

- G(income) = I(Arb) - E(Income) = 1.531 - 0.564 = 0.967 bits

- G(credit history) = 0.266 bits

- G(debt) = 0.063 bits

- G(collateral) = 0.206 bits
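The gains can be recomputed directly from the credit table. The following is a sketch under stated assumptions: the tuple layout of `ROWS` and the helper names `information`, `expected_information` and `gain` are illustrative choices, and E(A) is taken to be the size-weighted average of the subsets' informational content.

```python
import math
from collections import Counter

ATTRS = ["credit history", "debt", "collateral", "income"]
# One tuple per table row 1..14: (risk, credit history, debt, collateral, income).
ROWS = [
    ("high", "bad", "high", "none", "0 to 15k"),
    ("high", "unknown", "high", "none", "15 to 35k"),
    ("moderate", "unknown", "low", "none", "15 to 35k"),
    ("high", "unknown", "low", "none", "0 to 15k"),
    ("low", "unknown", "low", "none", "over 35k"),
    ("low", "unknown", "low", "adequate", "over 35k"),
    ("high", "bad", "low", "none", "0 to 15k"),
    ("moderate", "bad", "low", "adequate", "over 35k"),
    ("low", "good", "low", "none", "over 35k"),
    ("low", "good", "high", "adequate", "over 35k"),
    ("high", "good", "high", "none", "0 to 15k"),
    ("moderate", "good", "high", "none", "15 to 35k"),
    ("low", "good", "high", "none", "over 35k"),
    ("high", "bad", "high", "none", "15 to 35k"),
]


def information(labels):
    """Informational content, in bits, of a list of class labels."""
    total = len(labels)
    return -sum(n / total * math.log2(n / total) for n in Counter(labels).values())


def expected_information(rows, col):
    """E(A): size-weighted information of the subsets induced by column `col`."""
    parts = {}
    for row in rows:
        parts.setdefault(row[col], []).append(row[0])
    return sum(len(p) / len(rows) * information(p) for p in parts.values())


def gain(rows, col):
    """G(A) = I(Arb) - E(A), with the risk class in column 0."""
    return information([r[0] for r in rows]) - expected_information(rows, col)


for i, name in enumerate(ATTRS, start=1):
    print(f"G({name}) = {gain(ROWS, i):.3f} bits")

# The attribute with the largest gain is the candidate for the root.
best = max(range(1, len(ATTRS) + 1), key=lambda i: gain(ROWS, i))
print("root:", ATTRS[best - 1])
```

Unrounded arithmetic gives about 0.966 bits for income; the figure 0.967 comes from subtracting the already rounded values 1.531 and 0.564. Either way income has the largest gain, which is why it is taken as the root.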

10

Learning by clustering

- Generalization and specialization

- Learning examples

- 1. (yellow brick nice big +)

- 2. (blue ball nice small +)

- 3. (yellow brick dull small +)

- 4. (green ball dull big +)

- 5. (yellow cube nice big +)

- 6. (blue cube nice small -)

- 7. (blue brick nice big -)

11

Learning by clustering

- concept name NAME

- positive part

- cluster description (yellow brick nice big)

- ex 1

- negative part

- ex

- concept name NAME

- positive part

- cluster description ( _ _ nice _)

- ex 1, 2

- negative part

- ex

12

Learning by clustering

- concept name NAME

- positive part

- cluster description ( _ _ _ _)

- ex 1, 2, 3, 4, 5

- negative part

- ex 6, 7

Overgeneralization: the description ( _ _ _ _) also covers the negative examples 6 and 7.
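The two operations behind these slides, merging a description with a new example and checking whether the result covers a negative example, can be sketched as below. The function names `generalize` and `covers` and the tuple encoding are assumptions made for illustration; `_` plays the role of the wildcard used in the cluster descriptions.

```python
WILDCARD = "_"


def generalize(description, example):
    """Keep positions where both agree; replace every other position with a wildcard."""
    return tuple(d if d == e else WILDCARD for d, e in zip(description, example))


def covers(description, example):
    """A description covers an example if every non-wildcard position matches."""
    return all(d == WILDCARD or d == e for d, e in zip(description, example))


ex1 = ("yellow", "brick", "nice", "big")
ex2 = ("blue", "ball", "nice", "small")
ex6 = ("blue", "cube", "nice", "small")  # negative example

merged = generalize(ex1, ex2)
print(merged)               # ('_', '_', 'nice', '_')
print(covers(merged, ex6))  # True: the merged description is too general
```

Because the merged description also covers a negative example, examples 1 and 2 have to stay in separate clusters.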

13

Learning by clustering

- concept name NAME

- positive part

- cluster description (yellow brick nice big)

- ex 1

- cluster description ( blue ball nice small)

- ex 2

- negative part

- ex 6, 7

14

Learning by clustering

- concept name NAME

- positive part

- cluster description ( yellow brick _ _)

- ex 1, 3

- cluster description ( _ ball _ _)

- ex 2, 4

- negative part

- ex 6, 7

15

Learning by clustering

- concept name NAME

- positive part

- cluster description ( yellow _ _ _)

- ex 1, 3, 5

- cluster description ( _ ball _ _)

- ex 2, 4

- negative part

- ex 6, 7

A if yellow or ball

16

Learning by clustering

- 1. Let S be the set of examples

- 2. Create PP (positive part) and NP (negative part)

- 3. Add all ex- from S to NP and remove ex- from S

- 4. Create a cluster in PP and add the first ex+

- 5. S = S - ex+

- 6. for every ex+ in S, ei, repeat

- 6.1 for every cluster Ci repeat

- - Create description ei + Ci

- - if description covers no ex-

- then add ei to Ci

- 6.2 if ei has not been added to any cluster

- then create a new cluster with ei

- end
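The steps above can be sketched in Python as follows. This is an illustrative sketch rather than a reference implementation: the `(id, attributes, is_positive)` encoding, the dictionary layout of a cluster, and the helper names are assumptions, and the function expects at least one positive example. As in step 6.1, an example is tried against every existing cluster.

```python
WILDCARD = "_"


def generalize(description, example):
    """Keep positions where both agree; replace every other position with a wildcard."""
    return tuple(d if d == e else WILDCARD for d, e in zip(description, example))


def covers(description, example):
    """A description covers an example if every non-wildcard position matches."""
    return all(d == WILDCARD or d == e for d, e in zip(description, example))


def cluster(examples):
    """Group positive examples into clusters whose descriptions cover no negative."""
    # Steps 2-3: split the examples into the negative part and the positives.
    negatives = [attrs for _, attrs, positive in examples if not positive]
    positives = [(i, attrs) for i, attrs, positive in examples if positive]
    # Steps 4-5: the first positive example seeds the first cluster.
    first_id, first_attrs = positives[0]
    clusters = [{"description": first_attrs, "members": [first_id]}]
    # Step 6: try to place every remaining positive example.
    for ex_id, attrs in positives[1:]:
        added = False
        for c in clusters:  # step 6.1
            candidate = generalize(c["description"], attrs)
            if not any(covers(candidate, n) for n in negatives):
                c["description"] = candidate
                c["members"].append(ex_id)
                added = True
        if not added:  # step 6.2
            clusters.append({"description": attrs, "members": [ex_id]})
    return clusters


EXAMPLES = [
    (1, ("yellow", "brick", "nice", "big"), True),
    (2, ("blue", "ball", "nice", "small"), True),
    (3, ("yellow", "brick", "dull", "small"), True),
    (4, ("green", "ball", "dull", "big"), True),
    (5, ("yellow", "cube", "nice", "big"), True),
    (6, ("blue", "cube", "nice", "small"), False),
    (7, ("blue", "brick", "nice", "big"), False),
]

for c in cluster(EXAMPLES):
    print(c["description"], c["members"])
```

On the seven learning examples this ends with the two clusters (yellow _ _ _) for examples 1, 3, 5 and ( _ ball _ _) for examples 2, 4, matching the final concept "yellow or ball".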
