Genetic Algorithms (in 1 Slide) - PowerPoint PPT Presentation

1 / 19

Title:

Genetic Algorithms (in 1 Slide)

Description:

GA: based on an analogy to biological evolution Each rule is represented by a string of bits An initial population is created consisting of randomly generated rules – PowerPoint PPT presentation

Number of Views:46

Avg rating:3.0/5.0

Title: Genetic Algorithms (in 1 Slide)

1

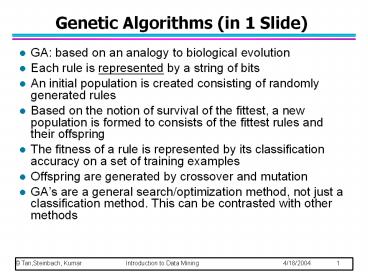

Genetic Algorithms (in 1 Slide)

- GA based on an analogy to biological evolution

- Each rule is represented by a string of bits

- An initial population is created consisting of

randomly generated rules - Based on the notion of survival of the fittest, a

new population is formed to consists of the

fittest rules and their offspring - The fitness of a rule is represented by its

classification accuracy on a set of training

examples - Offspring are generated by crossover and mutation

- GAs are a general search/optimization method,

not just a classification method. This can be

contrasted with other methods

2

Ensemble Methods

- Construct a set of classifiers from the training

data - Predict class label of previously unseen records

by aggregating predictions made by multiple

classifiers - In Olympic Ice-Skating you have multiple judges?

Why?

3

General Idea

4

Why does it work?

- Suppose there are 25 base classifiers

- Each classifier has error rate, ? 0.35

- Assume classifiers are independent

- Probability that the ensemble classifier makes a

wrong prediction - Practice has shown that even when independence

does not hold results are good

5

Methods for generating Multiple Classifiers

- Manipulate the training data

- Sample the data differently each time

- Examples Bagging and Boosting

- Manipulate the input features

- Sample the featurres differently each time

- Makes especially good sense if there is

redundancy - Example Random Forest

- Manipulate the learning algorithm

- Vary some parameter of the learning algorithm

- E.g., amount of pruning, ANN network topology,

etc. - Use different learning algorithms

6

Background

- Classifier performance can be impacted by

- Bias assumptions made to help with

generalization - "Simpler is better" is a bias

- Variance a learning method will give different

results based on small changes (e.g., in training

data). - When I run experiments and use random sampling

with repeated runs, I get different results each

time. - Noise measurements may have errors or the class

may be inherently probabilistic

7

How Ensembles Help

- Ensemble methods can assist with the bias and

variance - Averaging the results over multiple runs will

reduce the variance - I observe this when I use 10 runs with random

sampling and see that my learning curves are much

smoother - Ensemble methods especially helpful for unstable

classifier algorithms - Decision trees are unstable since small changes

in the training data can greatly impact the

structure of the learned decision tree - If you combine different classifier methods into

an ensemble, then you are using methods with

different biases - You are more likely to use a classifier with a

bias that is a good match for the problem - You may even be able to identify the best methods

and weight them more

8

Examples of Ensemble Methods

- How to generate an ensemble of classifiers?

- Bagging

- Boosting

- These methods have been shown to be quite

effective - A technique ignored by the textbook is to combine

classifiers built separately - By simple voting

- By voting and factoring in the reliability of

each classifier

9

Bagging

- Sampling with replacement

- Build classifier on each bootstrap sample

- Each sample has probability (1 1/n)n of being

selected (about 63 for large n) - Some values will be picked more than once

- Combine the resulting classifiers, such as by

majority voting - Greatly reduces the variance when compared to a

single base classifier

10

Boosting

- An iterative procedure to adaptively change

distribution of training data by focusing more on

previously misclassified records - Initially, all N records are assigned equal

weights - Unlike bagging, weights may change at the end of

boosting round

11

Boosting

- Records that are wrongly classified will have

their weights increased - Records that are classified correctly will have

their weights decreased

- Example 4 is hard to classify

- Its weight is increased, therefore it is more

likely to be chosen again in subsequent rounds

12

Class Imbalance

- Class imbalance occurs when the classes in the

distribution are very unevenly distributed - Examples would include fraud prediction and

identification of rare diseases - If there is class imbalance, accuracy may be high

even if the rare class is never predicted - This could be okay, but only if both classes are

equally important - This is usually not the case. Typically the rare

class is more important - The cost of a false negative is usually much

higher than the cost of a false positive - The rare class is designated the positive class

13

Confusion Matrix

- The following abbreviations are standard

- FP false positive

- TP True Positive

- FN False Negative

- TN True Negative

Predicted Class Predicted Class

Actual Class - -

Actual Class - TP FN

Actual Class - FP TN

14

Classifier Evaluation Metrics

- Accuracy (TP TN)/(TP TN FN FP)

- Precision and Recall

- Recall TP/(TP FN)

- Recall measure the fraction of positive class

examples that are correctly identified - Precision TP/(TP FP)

- Precision is essentially the accuracy of the

examples that are classified as positive - We would like to maximize precision and recall

- They are usually competing goals

- These measure are appropriate for class imbalance

since recall explicitly addresses the rare class

15

Classifier Evaluation Metrics Cont.

- One issue with precision and recall is that they

are two numbers, not one - That makes simple comparisons more difficulty and

it is not clear how to determine the best

classifier - Solution combine the two

- F-measure combines precision and recall

- The F1 measure is defined as

- (2 x recall x precision)/(recall precision)

16

Cost-Sensitive Learning

- Cost-sensitive learning will factor in cost

information - For example, you may be given the relative cost

of a FN vs. FP - You may be given the costs or utilities

associated with each quadrant in the confusion

matrix - Cost-sensitive learning can be implemented by

sampling the data to reflect the costs - If the cost of a FN is twice that of a FP, then

you can increase the ratio of positive examples

by a factor of 2 when constructing the training

set

17

More on autonomous vehicles

- DARPA Grand Challenges. 1,000,000 prize.

- Motivation by 2015 1/3 of ground military forces

autonomous. - 2004 150 mile race through the Mojave desert. No

one finished. CMUs car made it the farthest at

7.3 miles - 2005 Same race. 22 of 23 surpassed the best

distance from 2004. Five vehicles completed the

course. Stanford first, CMU second. Sebastian

Thrun leader for Stanford team. - 2005 Grand Challenge Video

18

More DARPA Grand Challenge

- 2007 Urban Challenge

- 60 mile urban area course to be completed in 6

hours. - Must obey all traffic laws and avoid other robot

cars - Urban Challenge Video

19

Google Autonomous Vehicle

- Google commercial video

- Second Google driverless car video

- Alternative future autonomous vehicles

- video