Part II.3 Evaluation of algorithms

Description:

Title: A rigorous analysis of two bi-criterial function families with scalable curvature of the Pareto fronts. Author: emmerich

1

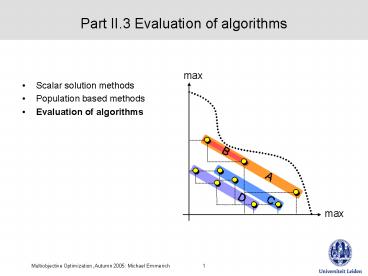

Part II.3 Evaluation of algorithms

- Scalar solution methods

- Population based methods

- Evaluation of algorithms

(Figure: solutions A, B, C, and D in a two-objective space; both axes are labeled "max".)

2

Performance assessment for Pareto optimization algorithms

3

Limit behavior of stochastic optimizers

Viewpoint 1: Randomized search heuristics

Qualitative: Limit behavior for t → ∞

Probability{Optimum found}

1

Quantitative: Expected Running Time E(T)

Algorithm A applied to Problem B

1/2

∞

Computation Time (number of iterations)
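
The expected running time E(T) can be estimated empirically by averaging over independent runs. The sketch below does this for a (1+1) EA on OneMax; both the algorithm and the problem are illustrative stand-ins for "Algorithm A" and "Problem B" and are not taken from the slides, and the function names and the iteration cap are assumptions.

```python
import random
from statistics import mean


def run_until_optimum(n, rng, max_iters=100_000):
    """Run a (1+1) EA on OneMax; return (iterations used, optimum found?)."""
    x = [rng.randint(0, 1) for _ in range(n)]
    fx = sum(x)
    t = 0
    while fx < n and t < max_iters:
        # Standard bit mutation: flip each bit independently with probability 1/n.
        y = [b ^ 1 if rng.random() < 1.0 / n else b for b in x]
        fy = sum(y)
        if fy >= fx:  # accept the offspring if it is not worse
            x, fx = y, fy
        t += 1
    return t, fx == n


def estimate_expected_running_time(n, runs, seed=0):
    """Return (mean iterations over successful runs, fraction of successful runs)."""
    rng = random.Random(seed)
    results = [run_until_optimum(n, rng) for _ in range(runs)]
    times = [t for t, ok in results if ok]
    success_rate = len(times) / runs
    return (mean(times) if times else float("inf")), success_rate
```

The success rate corresponds to the qualitative view (is the optimum found at all?), the mean to the quantitative view.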

4

Limit behavior of stochastic optimizers

Viewpoint 2: Optimum approximation algorithms

Qualitative: Limit behavior for t → ∞

Quality of solution

Qmax

Algorithm A applied to Problem B

Quantitative: Trade-off E(Solution Quality) vs. Time

∞

Computation Time (number of iterations)

5

Limit Behavior of Multiobjective EA: Related Work

- Requirements for archive:

  - Convergence

  - Diversity

  - Bounded size

- Rudolph 98, 00; Veldhuizen 99: store all; convergence to whole Pareto front (diversity trivial); impractical

- Rudolph 98, 00; Hanne 99: store m; convergence to Pareto front subset (no diversity control); not sufficient

- Thiele et al. 02

6

The concept of archiving

optimization

archiving

finite memory

generate

update, truncate

finite archive A

7

Unbounded archives
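
A minimal sketch of an unbounded archive update, assuming all objectives are minimized and objective vectors are tuples. The function names are hypothetical; the slide content itself is not available in this transcript.

```python
def dominates(a, b):
    """True if a Pareto-dominates b (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def update_unbounded_archive(archive, candidate):
    """Return a new archive that keeps every non-dominated vector seen so far."""
    candidate = tuple(candidate)
    # Reject the candidate if it is dominated or already stored.
    if any(dominates(a, candidate) or a == candidate for a in archive):
        return archive
    # Otherwise drop everything the candidate dominates and add it.
    return [a for a in archive if not dominates(candidate, a)] + [candidate]
```

Because nothing non-dominated is ever discarded, the archive can grow without limit, which is why this strategy is impractical for large or continuous fronts.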

8

Bounded archive of size M

9

Bounded archive with diverse solutions

y2

y1
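
A bounded archive with diversity control can be sketched with a hyperbox grid, in the spirit of the hyperbox-archiving strategies named in the summary. This is a simplified illustration, not the exact update rule from the lecture: it assumes minimization, an additive grid of hypothetical width `eps`, and at most one stored vector per box.

```python
import math


def dominates(a, b):
    """True if a Pareto-dominates b (all components minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def box_index(y, eps):
    """Index of the grid box (hyperbox) that contains objective vector y."""
    return tuple(math.floor(v / eps) for v in y)


def update_hyperbox_archive(archive, candidate, eps):
    """Return the updated archive; at most one vector is kept per box."""
    cand = tuple(candidate)
    cbox = box_index(cand, eps)
    boxes = {a: box_index(a, eps) for a in archive}
    # Case 1: the candidate's box dominates other boxes; replace their members.
    dominated = [a for a in archive if dominates(cbox, boxes[a])]
    if dominated:
        return [a for a in archive if a not in dominated] + [cand]
    # Case 2: the box is already occupied; keep the candidate only if it dominates.
    for a in archive:
        if boxes[a] == cbox:
            if dominates(cand, a):
                return [x for x in archive if x != a] + [cand]
            return archive
    # Case 3: reject if some occupied box dominates the candidate's box.
    if any(dominates(boxes[a], cbox) for a in archive):
        return archive
    return archive + [cand]
```

A larger `eps` gives a smaller, coarser archive; a smaller `eps` gives a finer approximation at the cost of more stored points.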

10

Lemma on functional representation of Pareto fronts

11

Theoretical Running Time Analysis for EA

Problem domain and type of results:

- Single-objective EAs, discrete search spaces: expected RT (bounds); RT with high probability (bounds). Mühlenbein 92; Rudolph 97; Droste, Jansen, Wegener 98, 02; Garnier, Kallel, Schoenauer 99, 00; He, Yao 01, 02

- Single-objective EAs, continuous search spaces: asymptotic convergence rates; exact convergence rates. Beyer 95, 96; Rudolph 97; Jägersküpper 03

- Multiobjective EAs, discrete search spaces: Laumanns, Thiele, Deb, Zitzler GECCO 2002; Laumanns, Thiele, Zitzler, Welzl, Deb PPSN-VII

12

Theoretical Running Time Analysis

13

Which technique is suited for which problem class?

- Theoretically (by analysis): difficult

  - Limit behavior (unlimited run-time resources)

  - Running time analysis

- Empirically (by simulation): standard

  - Problems: randomness, multiple objectives

  - Issues: quality measures, statistical testing, benchmark problems, visualization, ...

14

Comparison of non-dominated sets
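
Comparing two non-dominated sets requires lifting the dominance relation from points to sets. The sketch below uses the common definitions (A weakly dominates B if every vector in B is weakly dominated by some vector in A; A is better than B if A weakly dominates B but not vice versa). It assumes minimization, and the function names are illustrative.

```python
def weakly_dominates(a, b):
    """True if a is at least as good as b in every (minimized) objective."""
    return all(x <= y for x, y in zip(a, b))


def set_weakly_dominates(A, B):
    """True if every vector in B is weakly dominated by some vector in A."""
    return all(any(weakly_dominates(a, b) for a in A) for b in B)


def is_better(A, B):
    """A is better than B: A weakly dominates B, but B does not weakly dominate A."""
    return set_weakly_dominates(A, B) and not set_weakly_dominates(B, A)


def are_incomparable(A, B):
    """Neither set weakly dominates the other."""
    return not set_weakly_dominates(A, B) and not set_weakly_dominates(B, A)
```

For example, with A = [(1, 3), (3, 1)] and B = [(2, 4), (3, 1)], A is better than B, while A and C = [(2, 2)] are incomparable.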

15

Quality measures

Is A better than B?

independent of user preferences

Yes (strictly)

No

dependent on user preferences

How much? In what aspects?

Ideal: quality measures that allow both types of statements

16

Unary quality indicators

17

Unary quality indicators

18

Unary quality indicators
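
One widely used unary indicator is the S-metric (hypervolume), which the concluding remarks also mention. The slide content is not part of this transcript, so the following is only a sketch for the two-objective minimization case; the reference point `ref` and the function name are assumptions.

```python
def hypervolume_2d(points, ref):
    """Area dominated by `points` and bounded by `ref` (two minimized objectives)."""
    # Only points that are strictly better than the reference point contribute.
    pts = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    volume = 0.0
    best_f2 = ref[1]
    for f1, f2 in pts:  # sweep in order of increasing f1
        if f2 < best_f2:  # dominated points add nothing and are skipped
            volume += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return volume
```

For the points (1, 3), (2, 2), (3, 1) and reference point (4, 4), the sweep adds 3 + 2 + 1 = 6.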

19

Comparisons in practice

From M. Emmerich, Single- and Multiobjective Optimization, ElDorado 2005

20

Comparison of sets

21

Diversity measures

22

Some notation

23

Comparison of non-dominated sets

24

Comparison methods

25

Comparison methods

26

Comparison methods

27

Linking comparison methods and dominance relations

28

Linking comparison methods and dominance relations

29

Completeness and Compatibility for the binary ε-indicator
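
As a sketch of how a binary ε-indicator can be computed and used, the code below implements the additive variant for minimized objectives: the smallest shift ε such that every vector in B is weakly dominated by some vector of A shifted by ε. Whether the lecture uses the additive or the multiplicative form is not recoverable from this transcript, so the choice and the function names are assumptions.

```python
def additive_epsilon(A, B):
    """Smallest eps such that each b in B has some a in A with a_i - eps <= b_i for all i."""
    return max(
        min(max(a_i - b_i for a_i, b_i in zip(a, b)) for a in A)
        for b in B
    )


def better_by_epsilon(A, B):
    """A is better than B if I(A, B) <= 0 and I(B, A) > 0."""
    return additive_epsilon(A, B) <= 0 and additive_epsilon(B, A) > 0
```

Using both I(A, B) and I(B, A) is what makes the comparison strict: a single value alone cannot tell whether A is better than B.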

30

Combined binary ε-indicator

31

Compatibility and completeness of unary operators and their combinations

32

Compatibility and completeness of unary operators and their combinations

33

Proof by contradiction

34

Proof by contradiction

35

Proof by contradiction

36

Details for proof and further results

37

Power of unary operators

38

Averaging Pareto Front Approximation sets

39

Averaging Pareto Fronts

40

Example for a median attainment surface

41

Averaging Pareto Fronts

Plotting attainment surfaces: http://dbk.ch.umist.ac.uk/knowles/plot_attainments/

Viviane Grunert da Fonseca, Carlos M. Fonseca, and Andreia O. Hall. Inferential Performance Assessment of Stochastic Optimisers and the Attainment Function. In Eckart Zitzler, Kalyanmoy Deb, Lothar Thiele, Carlos A. Coello Coello, and David Corne, editors, First International Conference on Evolutionary Multi-Criterion Optimization, pages 213-225. Springer-Verlag. Lecture Notes in Computer Science No. 1993, 2001.
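
The idea behind attainment surfaces can be sketched with the empirical attainment function: for a goal vector z, count the fraction of runs whose approximation set contains a point at least as good as z. The median attainment surface then bounds the region attained by at least half of the runs. Minimization and all function names are assumptions of this sketch.

```python
def attains(approx_set, goal):
    """True if some point of the set is at least as good as `goal` in every objective."""
    return any(all(p_i <= g_i for p_i, g_i in zip(p, goal)) for p in approx_set)


def empirical_attainment(runs, goal):
    """Fraction of runs (one approximation set per run) that attain `goal`."""
    return sum(attains(r, goal) for r in runs) / len(runs)


def in_median_attainment_region(runs, goal):
    """True if `goal` is attained by at least half of the runs."""
    return empirical_attainment(runs, goal) >= 0.5
```

Evaluating `empirical_attainment` on a grid of goal vectors gives the data needed to draw attainment surfaces for any chosen fraction of runs.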

42

Test Function Construction (Deb 98a)

43

Convex function by Deb

44

Construction of multimodal Pareto-fronts

45

Construction of multi-global Pareto-fronts

46

ED-Function, taking its optima at the naturals

47

Zitzler Thiele Deb (ZDT) Problems

48

ZDT1 Problem

49

ZDT1
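
The slide formulas are missing from this transcript. As a reference point, here is a sketch of ZDT1 in its commonly published form (two minimized objectives, decision variables in [0, 1], usually 30 of them); the function name is an assumption.

```python
import math


def zdt1(x):
    """Return (f1, f2) of ZDT1 for a decision vector x with entries in [0, 1]."""
    n = len(x)
    f1 = x[0]
    g = 1.0 + 9.0 * sum(x[1:]) / (n - 1)  # g = 1 on the Pareto front
    f2 = g * (1.0 - math.sqrt(f1 / g))
    return f1, f2
```

Pareto-optimal solutions have x[1:] all zero, which gives the convex front f2 = 1 - sqrt(f1).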

50

ZDT2 Problem

51

ZDT2

52

ZDT3 Problem

53

(No Transcript)

54

ZDT4 Function

55

ZDT4
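
ZDT4 tests how an algorithm copes with multimodality: its g function has many local optima, each of which produces a local Pareto front. The sketch below follows the commonly published definition (x[0] in [0, 1], the other variables in [-5, 5], usually 10 variables); since the slide content is not available, treat it as an illustration only.

```python
import math


def zdt4(x):
    """Return (f1, f2) of ZDT4; x[0] in [0, 1], x[1:] in [-5, 5]."""
    n = len(x)
    f1 = x[0]
    # Rastrigin-like term: many local minima, global minimum g = 1 at x[1:] = 0.
    g = 1.0 + 10.0 * (n - 1) + sum(
        xi ** 2 - 10.0 * math.cos(4.0 * math.pi * xi) for xi in x[1:]
    )
    f2 = g * (1.0 - math.sqrt(f1 / g))
    return f1, f2
```

With x[1:] all zero the global front f2 = 1 - sqrt(f1) is recovered; other settings of x[1:] yield larger g and therefore worse fronts.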

56

ZDT6 Problem

57

ZDT5

58

Results on benchmark problems

59

Criticism of Deb's benchmark set

60

Advances of Deb's benchmark set

61

Generalized Schaffer Problem

Test Problems Based on Lamé Superspheres, Michael Emmerich and Andre Deutz, EMO2007, Matsushima, Japan

62

Super-spherical Pareto Fronts Approximated with SMS-EMOA

63

Superspherical Benchmarks (Emmerich, Deutz)
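
Super-spherical fronts are based on Lamé curves, i.e. points satisfying f1^gamma + f2^gamma = 1, where the exponent controls the curvature. The sketch below samples such a curve for two objectives in [0, 1]; the parameter name `gamma` and the function name are assumptions, and this is the curve itself, not the benchmark problem definition from the cited paper.

```python
def lame_front(gamma, num_points=11):
    """Sample points (f1, f2) with f1**gamma + f2**gamma = 1 and f1, f2 in [0, 1]."""
    points = []
    for k in range(num_points):
        f1 = k / (num_points - 1)
        f2 = (1.0 - f1 ** gamma) ** (1.0 / gamma)
        points.append((f1, f2))
    return points
```

With gamma = 1 the curve is the straight line f2 = 1 - f1, with gamma = 2 it is a quarter circle, and values below 1 bend the curve toward the origin.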

64

Problem and Mirror Problem

Pareto front for g 1 problem

Pareto front for g 1 mirror problem

65

Summary/Outlook

- Limit behavior of stochastic optimizers

  - Necessary but not sufficient conditions for practical usage

  - Hyperbox-archiving strategies can provide (probabilistic) guarantees for convergence and diversity

- Convergence analysis of (stochastic) optimizers

  - Up to now only available for simple discrete cases

  - Mainly used to gain insights into the working mechanisms, not for solving practical problems

- Empirical analysis of stochastic optimizers

  - Performance measures need to capture convergence and diversity

  - Strict comparison methods work with order relations generalized for approximation sets

  - Comparisons of approximation sets in a strict sense can only be achieved with binary indicators (e.g. binary ε-indicators)

  - Attainment surfaces can be used for averaging Pareto fronts and getting a visual impression of average results

  - Test functions can be used to test for different problem difficulties

66

Concluding Remarks

- Multiobjective optimization algorithms can be used in decision support systems and design environments to extract and study subsets of interesting (Pareto optimal) solutions

- Preference modeling (constraints, objectives, uncertainties, orders, utility functions) is used to formally describe desired solution sets

- Ordered set theory (in particular Pareto orders) is the basis for the MoO theory

- Optimality conditions can be stated for local and global Pareto optimality. Pareto ordered landscapes have unique characteristics (e.g. barrier forests instead of barrier trees)

- Single-point methods obtain one solution according to a user-specified objective function. The choice of the utility function determines whether the solution will be optimal or not.

- Population-based methods aim at finding a well-spread solution set on the Pareto front, making it possible to analyze trade-offs visually and select a compromise solution manually from it

- Performance indicators like the S-metric are used to assess the quality of approximation sets achieved with population-based methods

67

Some open questions

- How can we deal with problems with many conflicting objectives and constraints (many-objective optimization)?

- A formal theory of population-based MoO algorithms is still in its infancy

- How can concepts from deterministic optimization (e.g. KKT conditions) be used to make metaheuristics more efficient?

- Topology of multiobjective optimization: How to describe the geometry of landscapes?

- Questions related to components of algorithms, e.g.

  - How do points distribute on a PF when the S-metric is maximized?

  - Are there efficient algorithms for computing the S-metric?

- When/how to use multiobjective optimization in practice? How does it fit best into the decision making environment of different disciplines?
