Rules PowerPoint PPT Presentation

Title: Rules

1

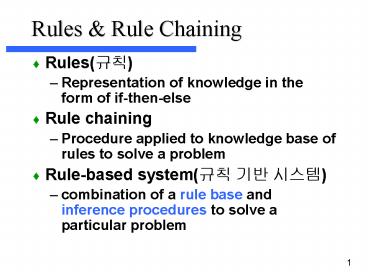

Rules and Rule Chaining

- Rules

- Representation of knowledge in the form of if-then-else

- Rule chaining

- Procedure applied to a knowledge base of rules to solve a problem

- Rule-based system

- Combination of a rule base and inference procedures to solve a particular problem

2

(No Transcript)

3

Rule-based Systems

- Working memory contains assertions

- Stretch is a giraffe

- Long-term Memory

- Rule base contains rules

- Assertion adding

- if giraffe(x) then long-legs(x)

- system considered to be a deduction system

- Action taking

- if food(x) then eat(x)

- system considered to be a reaction system

4

Allen Newell (production system)

5

(No Transcript)

6

Antecedent-Consequent Rules

- Rn: IF condition 1

- condition 2

- ...

- THEN consequent 1

- consequent 2

- ...

7

Forward/Backward Chaining

- Remember logic proofs

- Can work forward from the initial state by applying rules to assertions to generate new assertions until the goal is reached

- match if-part to add then-part

- Can work backward from the goal state to the assertions

- match then-part to goal, try to find support for if-part

8

The Inference Process

- Forward Chaining

- 1 Enter new data

- 2 Fire forward chaining rules

- 3 Rule actions infer new data values

- 4 Go to step 2

- 5 Repeat until no new data can be inferred

- 6 If no solution, rule base is insufficient

9

The Inference Process

- Forward Chaining

- 1 Get some new data

- 2 Fire forward chaining rules

- 3 Infer new data values from rule actions

- 4 Add new data values to knowledge base

10

The Inference Process

- Cascading Rules

- Only applicable in forward chaining

- Rule executes causing inference of new data

- New data is added to the knowledge base

- New data added causes other rules to fire

- Can be very time consuming and inefficient in large systems

11

The Inference Process

- Backward Chaining

- 1 State a specific goal (question)

- 2 Find rules which resolve the goal

- 3 At runtime, answer questions to satisfy the antecedents of the rules as required

- 4 Obtain a result (goal resolved or not)

12

The Inference Process

- Backward Chaining

- 1 State primary goal to source

- 2 Fire backward chaining rules

- 3 Source sub goals

- 4 Primary goal sourced

13

The Inference Process

- Chaining in Action

- A list of known facts - A, B, D, G, P, Q, R, S.

14

The Inference Process

- Chaining in Action

- A list of known facts - A, B, D, G, P, Q, R, S.

Raining outside?

15

The Inference Process

- Chaining in Action

- A list of known facts - A, B, D, G, P, Q, R, S.

Cold?

16

The Inference Process

- Chaining in Action

- X is the goal

- A list of Rules

- 1 A & I -> X

- 2 A & B -> C

- 3 C & D -> E

- 4 F & G -> H

- 5 E & H -> X

- 6 A & C -> F

- 7 P & Q -> R

- 8 R & S -> T

17

The Inference Process

- Chaining in Action (Backward)

- A list of Rules

- 1 A & I -> X

- 2 A & B -> C

- 3 C & D -> E

- 4 F & G -> H

- 5 E & H -> X

- 6 A & C -> F

- 7 P & Q -> R

- 8 R & S -> T

Rule 1 never fired because either I was not true or the user did not know it was true.

Rules 7 and 8 never fired because they were not relevant to proving our goal of X, though they might have been used in another consultation with a different goal.
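The backward run described above can be sketched in a few lines of Python. This is a minimal illustration, not the deck's own implementation: the rule table and fact set are copied from the slides, while the names `RULES`, `FACTS` and `prove` are made up for the example, and the "ask the user" step is replaced by a fixed set of known facts.

```python
# Rules 1-8 from the slide: number -> (antecedents, consequent).
RULES = {
    1: ({"A", "I"}, "X"), 2: ({"A", "B"}, "C"), 3: ({"C", "D"}, "E"),
    4: ({"F", "G"}, "H"), 5: ({"E", "H"}, "X"), 6: ({"A", "C"}, "F"),
    7: ({"P", "Q"}, "R"), 8: ({"R", "S"}, "T"),
}
FACTS = set("ABDGPQRS")  # known facts; I is false or not known


def prove(goal, known, rules, used, seen=frozenset()):
    """Backward chaining: True if goal is known or follows from some rule."""
    if goal in known:
        return True
    if goal in seen:  # guard against circular rule chains
        return False
    for number, (antecedents, consequent) in rules.items():
        if consequent != goal:
            continue  # match the then-part to the goal
        if all(prove(a, known, rules, used, seen | {goal})
               for a in sorted(antecedents)):
            known.add(goal)      # remember the result ("established earlier")
            used.append(number)  # record which rule succeeded
            return True
    return False


known, used = set(FACTS), []
print(prove("X", known, RULES, used), used)
```

With these inputs rule 1 is tried and fails on I, and rules 7 and 8 are never examined because neither concludes anything the goal X depends on.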

18

The Inference Process

- Chaining in Action (Forward)

- A list of known facts - A, B, D, G, P, Q, R, S are all true (we asked the user) and I is false or not known

19

The Inference Process

- Chaining in Action (Forward)

- A list of Rules

- 1 A & I -> X

- 2 A & B -> C

- 3 C & D -> E

- 4 F & G -> H

- 5 E & H -> X

- 6 A & C -> F

- 7 P & Q -> R

- 8 R & S -> T

20

The Inference Process

- Chaining in Action (Forward)

- A list of Rules (X marks a rule that has fired)

- 1 A & I -> X

- 2 (X) A & B -> C

- 3 (X) C & D -> E

- 4 F & G -> H

- 5 E & H -> X

- 6 (X) A & C -> F

- 7 (X) P & Q -> R

- 8 (X) R & S -> T
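The forward pass over the same rule list can be sketched as follows. This is only an illustration under stated assumptions: rules are scanned in numeric order, each rule fires at most once, and the names `RULES`, `FACTS` and `forward_chain` are invented for the example.

```python
# Rules 1-8 from the slide: number -> (antecedents, consequent).
RULES = {
    1: ({"A", "I"}, "X"), 2: ({"A", "B"}, "C"), 3: ({"C", "D"}, "E"),
    4: ({"F", "G"}, "H"), 5: ({"E", "H"}, "X"), 6: ({"A", "C"}, "F"),
    7: ({"P", "Q"}, "R"), 8: ({"R", "S"}, "T"),
}
FACTS = set("ABDGPQRS")  # I is false or not known


def forward_chain(facts, rules):
    """Fire rules until a full pass over the rule list adds nothing new."""
    known, fired = set(facts), []
    changed = True
    while changed:
        changed = False
        for number, (antecedents, consequent) in rules.items():
            # match the if-part against known facts, then add the then-part
            if number not in fired and antecedents <= known:
                fired.append(number)
                if consequent not in known:
                    known.add(consequent)
                    changed = True
    return known, fired


known, fired = forward_chain(FACTS, RULES)
print(fired, sorted(known - FACTS))
```

Under these assumptions the first pass fires rules 2, 3, 6, 7 and 8; rules 4 and 5 only become applicable on the second pass, once F and then H are known, which is the cascading behaviour described earlier. Rule 1 never fires because I is never established.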

21

Terminology

- If giraffe(x) then long-legs(x)

- x is a variable

- x is bound when it becomes identified with a particular value (e.g. giraffe(Geoffrey))

- pattern is instantiated when variables are bound

22

ZOOKEEPER deduction system

- Goal: identify animals

- Domain: 7 kinds of animals (cheetah, tiger, giraffe, zebra, ostrich, penguin, albatross)

- Design: intermediate conclusions are generated (as opposed to having a rule for each kind of animal)

- Advantages: expansion of domain easier, simpler rules to deal with, simpler rules to create (like modular programming)

23

Rules

- // Rules for mammals and birds

- Z1 IF ((? animal) has hair) THEN ((? animal) is a mammal)

- P1. IF the animal has hair THEN it is a mammal

- Z2 IF ((? animal) gives milk) THEN ((? animal) is a mammal)

- P2. IF the animal gives milk THEN it is a mammal

- Z3 IF ((? animal) has feathers) THEN ((? animal) is a bird)

- Z4 IF ((? animal) flies) ((? animal) lays eggs) THEN ((? animal) is a bird)

24

More Rules

- // Rules for carnivores

- Z5 IF ((? animal) is a mammal) ((? animal) eats meat) THEN ((? animal) is a carnivore)

- Z6 IF ((? animal) is a mammal) ((? animal) has pointed teeth) ((? animal) has claws) ((? animal) has forward-pointing eyes) THEN ((? animal) is a carnivore)

- // Rules for ungulates: hoofs

- Z7 IF ((? animal) is a mammal) ((? animal) has hoofs) THEN ((? animal) is an ungulate)

- // Rules for ungulates: chewing cud

- Z8 IF ((? animal) is a mammal) ((? animal) chews cud) THEN ((? animal) is an ungulate)

25

- Z9 IF ((? animal) is a carnivore) ((? animal) has tawny color) ((? animal) has dark spots) THEN ((? animal) is a cheetah)

- Z10 IF ((? animal) is a carnivore) ((? animal) has tawny color) ((? animal) has black stripes) THEN ((? animal) is a tiger)

- Z11 IF ((? animal) is an ungulate) ((? animal) has long neck) ((? animal) has long legs) ((? animal) has dark spots) THEN ((? animal) is a giraffe)

- Z12 IF ((? animal) is an ungulate) ((? animal) has black stripes) THEN ((? animal) is a zebra)

26

More Rules

- Z13 IF ((? animal) is a bird) ((? animal) does not fly) ((? animal) has long legs) ((? animal) has long neck) ((? animal) is black and white) THEN ((? animal) is an ostrich)

- Z14 IF ((? animal) is a bird) ((? animal) does not fly) ((? animal) swims) ((? animal) is black and white) THEN ((? animal) is a penguin)

- Z15 IF ((? animal) is a bird) ((? animal) flies well) THEN ((? animal) is an albatross)

27

Example with forward chaining

- Assertions (p.124)

- Stretch has hair

- Stretch chews cud

- Stretch has long legs

- Stretch has a long neck

- Stretch has tawny color

- Stretch has dark spots

28

(No Transcript)

29

Example with forward chaining

- Process (p.126 Fig. 7.2)

- Z1 IF ((? animal) has hair) THEN ((? animal) is a mammal)

- (? animal) is bound to Stretch when matching the first assertion, Stretch has hair

- => Stretch is a mammal

- Z8 IF ((? animal) is a mammal) ((? animal) chews cud) THEN ((? animal) is an ungulate)

- => Stretch is an ungulate

- Z11 IF ((? animal) is an ungulate) ((? animal) has long neck) ((? animal) has long legs) ((? animal) has dark spots) THEN ((? animal) is a giraffe)

- => Stretch is a giraffe

30

- (Text not recoverable; the surviving terms are dark spot, Z9, Z11 and Z1)

31

- (Text not recoverable; the surviving terms are Z8, even-toed and Z11)

32

ZOOKEEPER's forward chaining

- Until no rule produces a new assertion or the animal is identified

- For each rule,

- Try to support each of the rule's antecedents by matching it to known facts

- If all the rule's antecedents are supported, assert the consequent unless there is an identical assertion already

- Repeat for all matching and instantiation alternatives

33

Backward Chaining

- Given a hypothesis, determine if it is true

- Until all hypotheses have been tried and none have been supported or the animal is identified

- For each hypothesis,

- Try to support each of the rule's antecedents by matching it to known facts or by backward chaining through another rule, creating new hypotheses. Be sure to check all matching and instantiation alternatives.

- If all the rule's antecedents are supported, announce success and conclude that the hypothesis is true

34

Backward Chaining Example

- Assertions

- Swifty has forward-pointing eyes

- Swifty has claws

- Swifty has pointed teeth

- Swifty has hair

- Swifty has a tawny color

- Swifty has dark spots

- Hypothesis: Swifty is a cheetah

35

(No Transcript)

36

Process

- Z9 IF ((? animal) is a carnivore) ((? animal) has tawny color) ((? animal) has dark spots) THEN ((? animal) is a cheetah)

- Check first antecedent (Swifty is a carnivore)?

- Rules Z5 and Z6 possible

- Z5 IF ((? animal) is a mammal) ((? animal) eats meat) THEN ((? animal) is a carnivore)

- Check first antecedent (Swifty is a mammal)?

- Rules Z1 and Z2 possible

- Z1 IF ((? animal) has hair) THEN ((? animal) is a mammal)

- Swifty has hair, so conclude Swifty is a mammal

- Check second antecedent (Swifty eats meat)?

- unknown, so rule can't be used

- Z6 IF ((? animal) is a mammal) ((? animal) has pointed teeth) ((? animal) has claws) ((? animal) has forward-pointing eyes) THEN ((? animal) is a carnivore)

- Check first antecedent (Swifty is a mammal)? YES, established earlier

- Check other antecedents: all true from assertions

- Conclude (Swifty is a carnivore)

- Check other antecedents: true from assertions

- Conclude (Swifty is a cheetah)

37

Which direction to choose?

- Depends on problem (p.129)

- search space features

- fan out

- set of facts leads to many conclusions => high fan out

- fan in

- typical hypotheses result in many questions => high fan in

- If more fan out than fan in => backward

- If more fan in than fan out => forward

- problem features

- If interested in one of many possible conclusions => backward

- Notice that this results in a focus on the particular hypothesis being considered. Only information relevant to that will be pursued.

- If interested in everything that can be concluded from a set of facts that won't change => forward

38

MYCIN

- Early rule-based system for identifying bacterial infections

- Background

- antibiotic treatment should begin right away

- antibiotics that are specific to a particular organism are more effective than broad-spectrum antibiotics

- takes time to culture organism

- System uses backward chaining to keep questions focussed

- also makes interface easier (specific questions are asked)

39

- > What is the patient's name?

- John Doe

- > Male or female?

- male

- > Age?

- He is 65

- > Have you obtained positive cultures indicating general type?

- Yes

- > What type of infection is it?

- Primary bacteremia

- > When did symptoms first appear?

- May 5

- > Let's call the most recent positive culture C1. From what site was C1 taken?

- From the blood

- > When?

- May 9

40

- > Let's call the first significant organism from this culture U1. Do you know the identity of U1?

- No

- > Is U1 a rod or a coccus or something else?

- Rod

- > What is the gram stain of U1?

- Gram-negative

- > Have there been positive cultures from sites that are not associated with the infections about which you are seeking advice?

- No

- > Did U1 grow aerobically?

- Yes

- > My recommendation will be based on the opinion that the identity of U1 may be 1. Pseudomonas-aeruginosa 2. Klebsiella-pneumoniae 3. E. coli 4. Bacteroides-fragilis 5. Enterobacter 6. Proteus-nonmirabilis

41

- > On a scale of 0 to 4, where higher numbers indicate greater severity, how would you rate the patient's degree of sickness?

- 3

- > Does the patient have a clinically significant allergic reaction to any antimicrobial agent?

- No

- > How much does the patient weigh?

- 70 kilograms

- > My first recommendation is as follows: to cover for items 1, 2, 3, 5, and 6, give gentamycin using a dose of 119 mg (1.7 mg/kg) q8h IV or IM for 10 days. Modify dose in renal failure. Also, to cover for item 4, give clindamycin using a dose of 595 mg (8.5 mg/kg) q6h IV or IM for 14 days. If diarrhea or other gastrointestinal symptoms develop, check for pseudomembranous colitis.

42

MYCIN

- About 500 rules

- Handles about 100 causes

- Typical rule

- If x's type is primary bacteremia, the suspected entry point of x is the gastrointestinal tract, and the site of the culture of x is one of the sterile sites, then there is evidence that x is bacteroides

43

MYCIN's reasoning

- Backward chaining (goal-directed)

- Heuristics for efficiency and user-friendliness (don't ask unnecessary questions)

- Strategy

- first ask a general set of questions to rule out totally unlikely diagnoses.

- focus on particular disorders, and backward chain to try to prove each one.

- Rule firing

- first checks all the premises of the rule to see if any are known to be false (don't pursue)

- first look at rules that have more certain conclusions, abandon a search once the certainties involved get below 0.2
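The 0.2 cut-off can be illustrated with a small sketch. The slide gives only the threshold; the way certainties are combined here (the weakest premise sets the strength of the whole if-part, and the conclusion's certainty is that value scaled by the rule's own certainty) is an assumption based on the commonly described certainty-factor scheme, and `conclude` and `THRESHOLD` are names made up for the example.

```python
THRESHOLD = 0.2  # from the slide: abandon the search below this certainty


def conclude(premise_certainties, rule_certainty):
    """Return the certainty of a rule's conclusion, or None if abandoned.

    Assumption: premises are combined by taking the minimum, and the
    result is multiplied by the certainty attached to the rule itself.
    """
    support = min(premise_certainties)
    if support <= THRESHOLD:
        return None  # too uncertain: do not pursue this rule further
    return support * rule_certainty


# A rule with certainty 0.7 whose premises hold with certainty 0.9 and 0.6.
print(conclude([0.9, 0.6], 0.7))
# The same rule when one premise is barely supported.
print(conclude([0.9, 0.15], 0.7))
```

The second call is abandoned because its weakest premise falls below the threshold, so no further questions would be asked on its behalf.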

44

Constraints on MYCIN

- system must be useful (implying competency, consistency and high performance).

- task area chosen partly because of a demonstrated need

- e.g., in the early 70's 1/4 of the population of the US were given penicillin; 90% of these prescriptions were unnecessary

- emphasis on its supportive role as a tool for a physician, rather than as a replacement for his own reasoning process

- accommodate a large and changing body of technical knowledge

- capable of handling an interactive dialog

- supplying coherent explanation of both its line of reasoning and its knowledge.

- Speed, access and ease of use.

45

MYCIN's Success

- MYCIN introduced several new features which have become the hallmarks of the expert system.

- knowledge base made of rules

- rules are probabilistic - MYCIN is robust enough to arrive at correct conclusions even when some of the evidence is incomplete or incorrect.

- ability to explain its own reasoning process.

- The user (a physician) can interrogate it in various ways - by enquiring why it asked a particular question or how it reached an intermediate conclusion, for example. It was one of the first genuinely user-friendly systems.

- it actually works - It does a job that takes a human years of training.

46

MYCIN's Success (cont.)

- Commercial or routine use?

- produced high-quality results - its performance equalled that of specialists

- never used routinely itself by physicians

- spawned a whole series of medical-diagnostic 'clones', several of which are in routine clinical use

- Other systems resulting from work on MYCIN

- TEIRESIAS (explanation facility concept) ,

- EMYCIN (first shell),

- PUFF,

- CENTAUR,

- VM,

- GUIDON (intelligent tutoring),

- SACON

- ONCOCIN

- ROGET

47

Rule-based Reaction Systems

- if-part specifies conditions to be satisfied

- then-part specifies action to be taken

- add an assertion

- delete an assertion

- execute a procedure

48

XCON

- Configures Computer Systems

- stimulated commercial interest in rule-based expert systems

- 10,000 rules

- properties of hundreds of components

- orders have 100-200 components

49

Toy Reaction System(Bagger)

- pp. 132-137

50

Bagger (1)

- B1 IF step is check-order

- potato chips are to be bagged

- there is no Pepsi to be bagged

- THEN ask the customer whether he would like a bottle of Pepsi

- B2 IF step is check-order

- DELETE step is check-order

- ADD step is bag-large-items

51

- B3 IF step is bag-large-items

- a large item is to be bagged

- the large item is a bottle

- the current bag contains fewer than 6 large items

- DELETE the large item is to be bagged

- ADD the large item is in the current bag

52

- B4 IF step is bag-large-items

- a large item is to be bagged

- the current bag contains fewer than 6 large items

- DELETE the large item is to be bagged

- ADD the large item is in the current bag

53

- B5 IF step is bag-large-items

- a large item is to be bagged

- an empty bag is available

- DELETE the current bag is the current bag

- ADD the empty bag is the current bag

- B6 IF step is bag-large-items

- DELETE step is bag-large-items

- ADD step is bag-medium-items

54

- B7 IF step is bag-medium-items

- a medium item is frozen, but not in a freezer bag

- DELETE the medium item is not in a freezer bag

- ADD the medium item is in a freezer bag

55

- B8 IF step is bag-medium-items

- a medium item is to be bagged

- the current bag is empty or contains only medium items

- the current bag contains fewer than 12 medium items

- DELETE the medium item is to be bagged

- ADD the medium item is in the current bag

56

- B9 IF step is bag-medium-items

- a medium item is to be bagged

- an empty bag is available

- DELETE the current bag is the current bag

- ADD the empty bag is the current bag

- B10 IF step is bag-medium-items

- DELETE step is bag-medium-items

- ADD step is bag-small-items

57

- B11 IF step is bag-small-items

- a small item is to be bagged

- the current bag is empty or contains only small items

- the current bag contains fewer than 18 small items

- DELETE the small item is to be bagged

- ADD the small item is in the current bag

58

- B12 IF step is bag-small-items

- a small item is to be bagged

- an empty bag is available

- DELETE the current bag is the current bag

- ADD the empty bag is the current bag

- B13 IF step is bag-small-items

- DELETE step is bag-small-items

- ADD step is done

59

Initial State

- 1. Step is check-order

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are to be bagged

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag1 is a bag

60

After B1

- 1. Step is check-order

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are to be bagged

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag1 is current bag

- 8. Pepsi is to be bagged

61

After B2

- 1. Step is bag-large-items

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are to be bagged

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag1 is current bag

- 8. Pepsi is to be bagged

62

After B3

- 1. Step is bag-large-items

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are to be bagged

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag1 is current bag

- 8. Pepsi is in Bag1

63

After B4

- 1. Step is bag-large-items

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag1 is current bag

- 8. Pepsi is in Bag1

64

After B6

- 1. Step is bag-medium-items

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag1 is current bag

- 8. Pepsi is in Bag1

65

After B7

- 1. Step is bag-medium-items

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag1 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag

66

After B9

- 1. Step is bag-medium-items

- 2. Bread is to be bagged

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag2 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag

67

After B8

- 1. Step is bag-medium-items

- 2. Bread is in Bag2

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is to be bagged

- 6. Potato chips are to be bagged

- 7. Bag2 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag

68

After B8

- 1. Step is bag-medium-items

- 2. Bread is in Bag2

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is in Bag2

- 6. Potato chips are to be bagged

- 7. Bag2 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag

69

After B8

- 1. Step is bag-medium-items

- 2. Bread is in Bag2

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is in Bag2

- 6. Potato chips are in Bag2

- 7. Bag2 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag

70

After B10

- 1. Step is bag-small-items

- 2. Bread is in Bag2

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is in Bag2

- 6. Potato chips are in Bag2

- 7. Bag2 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag

71

After B12

- 1. Step is bag-small-items

- 2. Bread is in Bag2

- 3. Jam is to be bagged

- 4. Cornflakes are in Bag1

- 5. Ice cream is in Bag2

- 6. Potato chips are in Bag2

- 7. Bag3 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag

72

After B11

- 1. Step is bag-small-items

- 2. Bread is in Bag2

- 3. Jam is in Bag3

- 4. Cornflakes are in Bag1

- 5. Ice cream is in Bag2

- 6. Potato chips are in Bag2

- 7. Bag3 is current bag

- 8. Pepsi is in Bag1

- 9. Ice cream is in freezer bag
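The trace above can be reproduced with a condensed reaction system. This is a rough sketch rather than the BAGGER rule base itself: the thirteen rules are folded into four generic ones, B1, B2 and the freezer-bag rule B7 are left out, item sizes are inferred from which rules bag each item in the trace, and all names (`SIZES`, `RULES`, `run` and so on) are invented for the example. Conflicts are resolved by rule ordering: the first rule in the list that can fire does.

```python
SIZES = {"Pepsi": "large", "Cornflakes": "large", "Bread": "medium",
         "Ice cream": "medium", "Potato chips": "medium", "Jam": "small"}
BOTTLES = {"Pepsi"}
LIMITS = {"large": 6, "medium": 12, "small": 18}
NEXT_STEP = {"bag-large-items": "bag-medium-items",
             "bag-medium-items": "bag-small-items",
             "bag-small-items": "done"}


def pick(wm, bottles_only=False):
    """Return an unbagged item of the size the current step handles."""
    size = wm["step"].split("-")[1]  # 'large', 'medium' or 'small'
    for item in wm["todo"]:
        if SIZES[item] == size and (item in BOTTLES or not bottles_only):
            return item
    return None


def fits(wm, item):
    """Check the bag-content and capacity conditions for the current bag."""
    size, bag = SIZES[item], wm["bags"][-1]
    same_kind = size == "large" or all(SIZES[i] == size for i in bag)
    return same_kind and len(bag) < LIMITS[size]


def bag_item(wm, bottles_only):  # like B3 (bottles) and B4, B8, B11
    item = pick(wm, bottles_only)
    if item is None or not fits(wm, item):
        return False
    wm["todo"].remove(item)      # DELETE the item is to be bagged
    wm["bags"][-1].append(item)  # ADD the item is in the current bag
    return True


def new_bag(wm):                 # like B5, B9, B12
    if pick(wm) is None:
        return False
    wm["bags"].append([])        # the empty bag becomes the current bag
    return True


def next_step(wm):               # like B6, B10, B13
    wm["step"] = NEXT_STEP[wm["step"]]
    return True


RULES = [("bag-bottle", lambda wm: bag_item(wm, True)),
         ("bag-item", lambda wm: bag_item(wm, False)),
         ("new-bag", new_bag),
         ("next-step", next_step)]


def run(wm):
    """Fire one rule per cycle until the step is done; return the trace."""
    trace = []
    while wm["step"] != "done":
        for name, rule in RULES:  # rule ordering: first applicable rule wins
            if rule(wm):
                trace.append(name)
                break
    return trace


wm = {"step": "bag-large-items", "bags": [[]],
      "todo": ["Bread", "Jam", "Cornflakes", "Ice cream",
               "Potato chips", "Pepsi"]}
print(run(wm))
print(wm["bags"])
```

Starting from the state after B2, the sketch ends with the same three bags as the slides: Pepsi and cornflakes in the first, the medium items in the second, and jam in the third.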

73

Conflict Resolution Strategies

- Rule ordering

- Context limiting

- Specificity ordering

- Recency ordering

- Size ordering
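Four of these strategies can be written as small selection functions over the set of triggered rules. The slide only names the strategies, so the one-line readings in the docstrings are assumptions, and the function names and data layout are hypothetical. Context limiting is not shown; the step assertions in BAGGER play that role by activating one group of rules at a time.

```python
def rule_ordering(triggered, rule_base):
    """Pick the triggered rule that appears earliest in the rule base."""
    return min(triggered, key=rule_base.index)


def specificity_ordering(triggered, conditions):
    """Pick the rule whose conditions are a superset of every other's."""
    for rule in triggered:
        if all(conditions[rule] >= conditions[other] for other in triggered):
            return rule
    return None  # no triggered rule dominates the others


def size_ordering(triggered, conditions):
    """Pick the rule with the longest list of conditions."""
    return max(triggered, key=lambda rule: len(conditions[rule]))


def recency_ordering(triggered, last_fired):
    """Pick the least recently used rule (never-fired rules come first)."""
    return min(triggered, key=lambda rule: last_fired.get(rule, -1))


# B3 and B4 from BAGGER: B3 adds one condition to those of B4.
B4 = {"step is bag-large-items", "a large item is to be bagged",
      "the current bag contains fewer than 6 large items"}
CONDITIONS = {"B3": B4 | {"the large item is a bottle"}, "B4": B4}

print(rule_ordering(["B4", "B3"], ["B1", "B2", "B3", "B4"]))
print(specificity_ordering(["B4", "B3"], CONDITIONS))
print(size_ordering(["B4", "B3"], CONDITIONS))
print(recency_ordering(["B3", "B4"], {"B3": 5, "B4": 2}))
```

When both B3 and B4 are triggered by a bottle, the first three strategies all prefer B3, which is why the bottle is bagged before the other large items.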

74

Valuable Facts

- 1. Comet is-a horse

- 2. Prancer is-a horse

- 3. Comet is-a-parent-of Dasher

- 4. Comet is-a-parent-of Prancer

- 5. Prancer is fast

- 6. Dasher is-a-parent-of Thunder

- 7. Thunder is fast

- 8. Thunder is-a horse

- 9. Dasher is-a horse

75

Valuable Rule

- <Parent Rule>

- IF ?x is-a horse

- ?x is-a-parent-of ?y

- ?y is fast

- THEN ?x is valuable
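Matching the Parent Rule against the nine facts means binding ?x and ?y consistently across all three antecedents. The following is a minimal sketch of that matching, assuming facts are stored as (subject, relation, object) triples; the representation and the names `match`, `match_all` and `fire` are choices made for the example.

```python
FACTS = [
    ("Comet", "is-a", "horse"), ("Prancer", "is-a", "horse"),
    ("Comet", "is-a-parent-of", "Dasher"),
    ("Comet", "is-a-parent-of", "Prancer"),
    ("Prancer", "is", "fast"), ("Dasher", "is-a-parent-of", "Thunder"),
    ("Thunder", "is", "fast"), ("Thunder", "is-a", "horse"),
    ("Dasher", "is-a", "horse"),
]
PARENT_RULE = (
    [("?x", "is-a", "horse"), ("?x", "is-a-parent-of", "?y"),
     ("?y", "is", "fast")],
    ("?x", "is", "valuable"),
)


def match(pattern, fact, bindings):
    """Extend bindings so that pattern equals fact, or return None."""
    new = dict(bindings)
    for p, f in zip(pattern, fact):
        if p.startswith("?"):
            if new.setdefault(p, f) != f:  # variable already bound elsewhere
                return None
        elif p != f:
            return None
    return new


def match_all(patterns, facts, bindings=None):
    """Yield every set of bindings that satisfies all patterns."""
    bindings = {} if bindings is None else bindings
    if not patterns:
        yield bindings
        return
    for fact in facts:
        extended = match(patterns[0], fact, bindings)
        if extended is not None:
            yield from match_all(patterns[1:], facts, extended)


def fire(rule, facts):
    """Return the new assertions produced by instantiating the rule."""
    antecedents, consequent = rule
    new = []
    for b in match_all(antecedents, facts):
        assertion = tuple(b.get(term, term) for term in consequent)
        if assertion not in facts and assertion not in new:
            new.append(assertion)
    return new


print(fire(PARENT_RULE, FACTS))
```

Comet qualifies through Prancer and Dasher through Thunder. The binding ?x = Comet, ?y = Dasher is tried and dropped because nothing yet says Dasher is fast.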

76

Forward Chaining (1)

77

Forward Chaining (2)

78

(No Transcript)

79

Backward Chaining (1)

80

Backward Chaining (2)

81

More Valuable Information

- <Winner Rule>

- IF ?w is-a winner

- THEN ?w is fast

- 10. Dasher is-a winner

- 11. Prancer is-a winner

82

Backward Chaining (3)

83

(No Transcript)

84

Relational Operations and Forward Chaining (p.148)

- Relational operations can support forward chaining

- Create a table containing assertions

- Execute relational operators on table to see if rule should be triggered

- Select

- Project

- Join

85

- Extremely expensive

- If there are n antecedents per rule and m rules, then for each new assertion

- mn SELECTs

- m(2n-1) PROJECTs

- m(n-1) JOINs
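To get a feel for these counts, the three formulas can be evaluated directly. The function name and the sample sizes below are arbitrary choices for illustration, not figures from the slides.

```python
def relational_cost(n, m):
    """Operations per new assertion for m rules of n antecedents each."""
    return {
        "SELECT": m * n,             # mn
        "PROJECT": m * (2 * n - 1),  # m(2n-1)
        "JOIN": m * (n - 1),         # m(n-1)
    }


# Example: 15 rules with 3 antecedents each.
print(relational_cost(n=3, m=15))
```

For 15 rules of 3 antecedents that is 45 SELECTs, 75 PROJECTs and 30 JOINs for every new assertion, and the totals grow linearly with the number of rules.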

86

(No Transcript)

87

(No Transcript)

88

(No Transcript)

89

(No Transcript)

90

Rete

91

Rete (2)

92

Rete (3)