
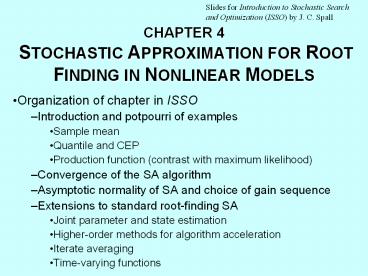

CHAPTER 4 STOCHASTIC APPROXIMATION FOR ROOT FINDING IN NONLINEAR MODELS

Slides for Introduction to Stochastic Search and Optimization (ISSO) by J. C. Spall

- Organization of chapter in ISSO
  - Introduction and potpourri of examples
    - Sample mean
    - Quantile and CEP
    - Production function (contrast with maximum likelihood)
  - Convergence of the SA algorithm
  - Asymptotic normality of SA and choice of gain sequence
  - Extensions to standard root-finding SA
    - Joint parameter and state estimation
    - Higher-order methods for algorithm acceleration
    - Iterate averaging
    - Time-varying functions


Stochastic Root-Finding Problem

- Focus is on finding $\theta$ (i.e., $\theta^*$) such that $g(\theta) = 0$
- $g(\theta)$ is typically a nonlinear function of $\theta$ (contrast with Chapter 3 in ISSO)
- Assume only noisy measurements of $g(\theta)$ are available:
  $Y_k(\theta) = g(\theta) + e_k(\theta)$, $k = 0, 1, 2, \ldots$
- Above problem arises frequently in practice
  - Optimization with noisy measurements ($g(\theta)$ represents gradient of loss function) (see Chapter 5 of ISSO)
  - Quantile-type problems
  - Equation solving in physics-based models
  - Machine learning (see Chapter 11 of ISSO)
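A minimal sketch of the measurement model for a quantile-type problem, under assumed choices: $X \sim N(0, 1)$ and a target probability $q$, so that $g(\theta) = P(X \le \theta) - q$ is treated as unknown while $Y_k(\theta) = 1\{X_k \le \theta\} - q$ is observable and has mean $g(\theta)$. The distribution and the names `true_g` and `noisy_measurement` are illustrative assumptions, not taken from ISSO.

```python
import math
import random


def true_g(theta: float, q: float) -> float:
    """Exact g(theta) = P(X <= theta) - q for X ~ N(0, 1) (unknown in practice)."""
    phi = 0.5 * (1.0 + math.erf(theta / math.sqrt(2.0)))  # standard normal CDF
    return phi - q


def noisy_measurement(theta: float, q: float, rng: random.Random) -> float:
    """One measurement Y_k(theta) = 1{X_k <= theta} - q.

    Its expectation is g(theta), so e_k(theta) = Y_k(theta) - g(theta) has mean zero.
    """
    x = rng.gauss(0.0, 1.0)
    return (1.0 if x <= theta else 0.0) - q


rng = random.Random(0)
q, theta = 0.5, 1.0
n = 20000
# Averaging many measurements at one fixed theta approximates g(theta).
avg_y = sum(noisy_measurement(theta, q, rng) for _ in range(n)) / n
print(f"average of Y: {avg_y:.3f}, exact g: {true_g(theta, q):.3f}")
```

Recovering $g(\theta)$ at even one $\theta$ this way takes thousands of measurements; this repeated sampling at a fixed point is the kind of effort root-finding SA generally avoids.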


Core Algorithm for Stochastic Root-Finding

- Basic algorithm published in Robbins and Monro (1951)
- Algorithm is a stochastic analogue to steepest descent when used for optimization
  - Noisy measurement $Y_k(\theta)$ replaces exact gradient $g(\theta)$
- Generally wasteful to average measurements at a given value of $\theta$
  - Average across iterations (changing $\theta$) instead
- Core Robbins-Monro algorithm for unconstrained root-finding is
  $\hat{\theta}_{k+1} = \hat{\theta}_k - a_k Y_k(\hat{\theta}_k)$
- Constrained version of algorithm also exists
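The unconstrained recursion $\hat{\theta}_{k+1} = \hat{\theta}_k - a_k Y_k(\hat{\theta}_k)$ can be sketched in a few lines of Python. The function name `robbins_monro`, the gain $a_k = 1/(k+1)$, and the Gaussian data are assumptions chosen for illustration. Applied to the sample-mean problem, $g(\theta) = \theta - E[X]$ with $Y_k(\theta) = \theta - X_k$, this gain gives $\hat{\theta}_{k+1} = (k\hat{\theta}_k + X_k)/(k+1)$, so each iterate is exactly the running sample mean of $X_0, \ldots, X_k$.

```python
import random
from typing import Callable, List


def robbins_monro(
    y: Callable[[float, int], float],
    theta0: float,
    gain: Callable[[int], float],
    n_iter: int,
) -> List[float]:
    """Unconstrained Robbins-Monro iteration theta_{k+1} = theta_k - a_k * Y_k(theta_k).

    `y(theta, k)` returns the noisy measurement Y_k(theta); `gain(k)` returns a_k.
    Returns the full path [theta_0, theta_1, ..., theta_{n_iter}].
    """
    theta = theta0
    path = [theta]
    for k in range(n_iter):
        theta = theta - gain(k) * y(theta, k)
        path.append(theta)
    return path


# Sample-mean problem: g(theta) = theta - E[X], measured by Y_k(theta) = theta - X_k.
rng = random.Random(1)
data = [rng.gauss(3.0, 2.0) for _ in range(500)]  # hypothetical data with mean 3
path = robbins_monro(
    y=lambda theta, k: theta - data[k],
    theta0=0.0,
    gain=lambda k: 1.0 / (k + 1),
    n_iter=len(data),
)
print(f"final iterate: {path[-1]:.4f}, sample mean: {sum(data) / len(data):.4f}")
```

Here the "average across iterations" is literal: no measurement is repeated at a fixed $\theta$, yet the final iterate equals the batch sample mean up to rounding.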


Circular Error Probable (CEP) Example of Root-Finding (Example 4.3 in ISSO)

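- Interested in estimating radius of circle about target such that half of impacts lie within circle ($\theta$ is scalar radius)
- Define success variable
  $s_k(\theta) = 1$ if the $k$th impact lies within radius $\theta$ of the target, and $s_k(\theta) = 0$ otherwise
- Root-finding algorithm becomes
  $\hat{\theta}_{k+1} = \hat{\theta}_k - a_k\left[s_k(\hat{\theta}_k) - \tfrac{1}{2}\right]$
- Figure on next slide illustrates results for one study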
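A simulation sketch of the CEP recursion $\hat{\theta}_{k+1} = \hat{\theta}_k - a_k[s_k(\hat{\theta}_k) - 1/2]$, assuming impacts are 2-D Gaussian points with unit variance per axis and a mean offset of (0.5, 0.3) from a target at the origin, with gains $a_k = a/(k+1+A)^\alpha$. The impact model, the gain values, and the name `cep_sa` are hypothetical; they are not the settings behind the ISSO study.

```python
import math
import random
from typing import List, Tuple


def cep_sa(n_iter: int, a: float, A: float, alpha: float,
           seed: int = 0) -> Tuple[float, List[float]]:
    """Estimate the CEP radius with theta_{k+1} = theta_k - a_k [s_k(theta_k) - 1/2].

    Impacts are hypothetical 2-D Gaussian points (unit variance per axis)
    whose mean (0.5, 0.3) is offset from a target at the origin.
    Returns the final radius estimate and the miss distances observed.
    """
    rng = random.Random(seed)
    theta = 1.0  # initial guess for the radius
    distances: List[float] = []
    for k in range(n_iter):
        x, y = rng.gauss(0.5, 1.0), rng.gauss(0.3, 1.0)  # one impact point
        d = math.hypot(x, y)                              # miss distance from target
        distances.append(d)
        s = 1.0 if d <= theta else 0.0                   # success variable s_k(theta)
        a_k = a / (k + 1 + A) ** alpha                   # gain a_k
        theta -= a_k * (s - 0.5)
    return theta, distances


theta_hat, dists = cep_sa(n_iter=20000, a=2.0, A=10.0, alpha=1.0)
empirical_median = sorted(dists)[len(dists) // 2]
print(f"SA estimate: {theta_hat:.3f}, empirical median distance: {empirical_median:.3f}")
```

Since the CEP is the median miss distance, the SA estimate can be checked against the empirical median of the same simulated impacts; the SA version never stores or sorts the data.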


True and estimated CEP: 1000 impact points with impact mean differing from target point (Example 4.3 in ISSO)


Convergence Conditions

- A central aspect of root-finding SA is the set of conditions for formal convergence of the iterate to a root $\theta^*$
  - Provides rigorous basis for many popular algorithms (LMS, backpropagation, simulated annealing, etc.)
- Section 4.3 of ISSO contains two sets of conditions
  - Statistics conditions based on classical assumptions about $g(\theta)$, noise, and gains $a_k$
  - Engineering conditions based on connection to deterministic ordinary differential equation (ODE)
    - Convergence and stability of ODE $dZ(\tau)/d\tau = -g(Z(\tau))$ closely related to convergence of SA algorithm ($Z(\tau)$ represents $p$-dimensional time-varying function and $\tau$ denotes time)
  - Neither the statistics nor the engineering conditions are a special case of the other
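The link to the ODE can be seen by dropping the noise and holding the gain fixed: the SA update $\hat{\theta}_{k+1} = \hat{\theta}_k - a\,g(\hat{\theta}_k)$ is then a forward-Euler step for $dZ(\tau)/d\tau = -g(Z(\tau))$. The sketch below integrates that ODE for a hypothetical two-dimensional $g$ with root $(1, -1)$; it is not the problem from Example 4.6, and `ode_path`, `g_example`, and the step size are illustrative assumptions.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def ode_path(
    g: Callable[[Sequence[float]], Vector],
    z0: Sequence[float],
    step: float,
    n_steps: int,
) -> List[Vector]:
    """Forward-Euler integration of dZ/dtau = -g(Z).

    Each Euler step z <- z - step * g(z) is the SA recursion with the
    noise removed and a constant gain equal to `step`.
    """
    z = list(z0)
    path = [list(z)]
    for _ in range(n_steps):
        gz = g(z)
        z = [zi - step * gi for zi, gi in zip(z, gz)]
        path.append(list(z))
    return path


def g_example(z: Sequence[float]) -> Vector:
    """Hypothetical nonlinear g with unique root (1, -1) (not ISSO's Example 4.6)."""
    return [(z[0] - 1.0) + 0.1 * (z[0] - 1.0) ** 3, 2.0 * (z[1] + 1.0)]


for start in ([-3.0, 4.0], [5.0, 5.0], [0.0, 0.0]):
    end = ode_path(g_example, start, step=0.05, n_steps=400)[-1]
    print(start, "->", [round(v, 6) for v in end])
```

Each component of `g_example` is increasing in its own coordinate, so for the ODE itself the root is asymptotically stable with a global domain of attraction; the Euler paths from these starting points all reach $(1, -1)$.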


ODE Convergence Paths for Nonlinear Problem in Example 4.6 in ISSO Satisfies ODE Conditions Due to Asymptotic Stability and Global Domain of Attraction


Gain Selection

- Choice of the gain sequence $a_k$ is critical to the performance of SA
- Famous conditions for convergence are $\sum_{k=0}^{\infty} a_k = \infty$ and $\sum_{k=0}^{\infty} a_k^2 < \infty$
- A common practical choice of gain sequence is
  $a_k = \dfrac{a}{(k + 1 + A)^{\alpha}}$
  - where $1/2 < \alpha \le 1$, $a > 0$, and $A \ge 0$
- Strictly positive $A$ (stability constant) allows for larger $a$ (possibly faster convergence) without risking unstable behavior in early iterations
- $\alpha$ and $A$ can usually be pre-specified; the critical coefficient $a$ is usually chosen by trial-and-error
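A small helper for the gain form $a_k = a/(k+1+A)^\alpha$ that also enforces the stated parameter ranges; the name `gain` is hypothetical. The restriction $1/2 < \alpha \le 1$ is what makes these gains meet both convergence conditions: $\sum_k (k+1+A)^{-\alpha}$ diverges when $\alpha \le 1$, while $\sum_k (k+1+A)^{-2\alpha}$ converges when $2\alpha > 1$.

```python
def gain(k: int, a: float, A: float = 0.0, alpha: float = 1.0) -> float:
    """Return a_k = a / (k + 1 + A)**alpha, enforcing 1/2 < alpha <= 1, a > 0, A >= 0."""
    if not 0.5 < alpha <= 1.0:
        raise ValueError("alpha must satisfy 1/2 < alpha <= 1")
    if a <= 0.0 or A < 0.0:
        raise ValueError("need a > 0 and A >= 0")
    return a / (k + 1 + A) ** alpha


# Same alpha, two choices of (a, A): compare an early gain with a late one.
for a, A in [(1.0, 0.0), (10.0, 100.0)]:
    print(f"a={a}, A={A}: a_0={gain(0, a, A):.4f}, a_1000={gain(1000, a, A):.5f}")
```

With $a = 10$ and $A = 100$ the first gain is about 0.099, well below the $a_0 = 1$ obtained with $a = 1$, $A = 0$, yet by $k = 1000$ the larger $a$ yields gains roughly nine times bigger. That is the trade-off the stability constant is meant to manage.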


Extensions to Basic Root-Finding SA (Section 4.5 of ISSO)

- Joint Parameter and State Evolution
  - There exists state vector $x_k$ related to system being optimized
  - E.g., state-space model governing evolution of $x_k$, where model depends on values of $\theta$
- Adaptive Estimation and Higher-Order Algorithms
  - Adaptively estimating gain $a_k$
  - SA analogues of fast Newton-Raphson search
- Iterate Averaging
  - See slides to follow
- Time-Varying Functions
  - See slides to follow


Iterate Averaging

- Iterate averaging is an important and relatively recent development in SA
- Provides means for achieving optimal asymptotic performance without using optimal gains $a_k$
- Basic iterate average uses following sample mean as final estimate:
  $\bar{\theta}_k = \dfrac{1}{k+1}\sum_{j=0}^{k} \hat{\theta}_j$
- Results in finite-sample practice are mixed
- Success relies on large proportion of individual iterates hovering in some balanced way around $\theta^*$
  - Many practical problems have iterate approaching $\theta^*$ in roughly monotonic manner
  - Monotonicity not consistent with good performance of iterate averaging; see plot on following slide
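A sketch contrasting the two situations, on a hypothetical scalar problem $g(\theta) = \theta - 2$ with $N(0, 1)$ measurement noise, gains $a_k = a/(k+1+A)^\alpha$ with $\alpha = 0.602$, and the average $\bar{\theta}_k = \frac{1}{k+1}\sum_{j=0}^{k}\hat{\theta}_j$. The problem, starting points, and gain values are assumptions picked to make the contrast visible, and `rm_with_averaging` is a hypothetical name. With a large $a$ the iterates hover around $\theta^* = 2$ and averaging smooths the noise; with a tiny $a$ and a distant start the iterates creep toward $\theta^*$ monotonically and the average is dragged back by the early iterates.

```python
import random
from typing import Tuple


def rm_with_averaging(theta0: float, n_iter: int, a: float, A: float,
                      alpha: float, seed: int = 0) -> Tuple[float, float]:
    """Run theta_{k+1} = theta_k - a_k Y_k(theta_k) on the hypothetical problem
    g(theta) = theta - 2 (root theta* = 2) with N(0, 1) measurement noise.

    Returns (last iterate theta_n, iterate average (theta_0 + ... + theta_n) / (n + 1)).
    """
    rng = random.Random(seed)
    theta = theta0
    running_sum = theta0  # the average includes theta_0
    for k in range(n_iter):
        y = (theta - 2.0) + rng.gauss(0.0, 1.0)  # noisy measurement of g(theta)
        theta -= a / (k + 1 + A) ** alpha * y
        running_sum += theta
    return theta, running_sum / (n_iter + 1)


# Iterates hovering around theta* (large gains): averaging helps.
last_h, avg_h = rm_with_averaging(2.5, 5000, a=1.0, A=0.0, alpha=0.602)
# Iterates creeping monotonically toward theta* (small gains): averaging hurts.
last_m, avg_m = rm_with_averaging(50.0, 5000, a=0.01, A=0.0, alpha=0.602)
print(f"hovering:  last={last_h:.3f}  average={avg_h:.3f}")
print(f"monotonic: last={last_m:.3f}  average={avg_m:.3f}")
```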


Contrasting Search Paths for Typical $p = 2$ Problem: Ineffective and Effective Uses of Iterate Averaging


Time-Varying Functions

- In some problems, the root-finding function varies with iteration: $g_k(\theta)$ (rather than $g(\theta)$)
  - Adaptive control with time-varying target vector
  - Experimental design with user-specified input values
  - Signal processing based on Markov models (Subsection 4.5.1 of ISSO)
- Let $\theta_k^*$ denote the root to $g_k(\theta) = 0$
- Suppose that $\theta_k^* \to \theta^*$ for some fixed value $\theta^*$ (equivalent to the fixed $\theta^*$ in conventional root-finding)
- In such cases, much standard theory continues to apply
- Plot on following slide shows case when $g_k(\theta)$ represents a gradient function with scalar $\theta$
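A sketch of a time-varying problem in the gradient setting, assuming the hypothetical loss $L_k(\theta) = \frac{1}{2}(\theta - \theta_k^*)^2$, so that $g_k(\theta) = \theta - \theta_k^*$, with $\theta_k^* = 1 + 1/(k+1) \to \theta^* = 1$ and Gaussian measurement noise. The function name `tracking_rm` and all numerical settings are illustrative.

```python
import random


def tracking_rm(n_iter: int, a: float = 1.0, A: float = 0.0, alpha: float = 1.0,
                noise_sd: float = 0.5, seed: int = 0) -> float:
    """Robbins-Monro with time-varying g_k(theta) = theta - theta_k_star.

    theta_k_star = 1 + 1/(k + 1) converges to theta_star = 1 (hypothetical choice);
    g_k is the gradient of L_k(theta) = 0.5 * (theta - theta_k_star)**2.
    """
    rng = random.Random(seed)
    theta = 5.0  # initial guess
    for k in range(n_iter):
        theta_k_star = 1.0 + 1.0 / (k + 1)                        # root of g_k
        y = (theta - theta_k_star) + rng.gauss(0.0, noise_sd)     # noisy g_k(theta)
        theta -= a / (k + 1 + A) ** alpha * y                     # gain a_k
    return theta


theta_final = tracking_rm(20000)
print(f"final iterate: {theta_final:.4f}")
```

The iterate settles near the limiting root $\theta^* = 1$ even though no single $g_k$ has that root, in line with the claim that standard theory carries over when $\theta_k^* \to \theta^*$.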


Time-Varying $g_k(\theta) = \partial L_k(\theta)/\partial\theta$ for Loss Functions with Limiting Minimum