Analysis of Variance in Matrix Form - PowerPoint PPT Presentation

1 / 83

Title:

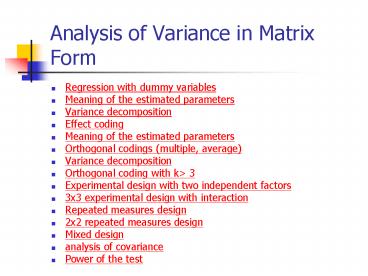

Analysis of Variance in Matrix Form

Description:

Analysis of Variance in Matrix Form Regression with dummy variables Meaning of the estimated parameters Variance decomposition Effect coding Meaning of the estimated ... – PowerPoint PPT presentation

Number of Views:293

Avg rating:3.0/5.0

Title: Analysis of Variance in Matrix Form

1

Analysis of Variance in Matrix Form

- Regression with dummy variables

- Meaning of the estimated parameters

- Variance decomposition

- Effect coding

- Meaning of the estimated parameters

- Orthogonal codings (multiple, average)

- Variance decomposition

- Orthogonal coding with k > 3

- Experimental design with two independent factors

- 3x3 experimental design with interaction

- Repeated measures design

- 2x2 repeated measures design

- Mixed design

- Analysis of covariance

- Power of the test

2

Regression with dummy variables (0 1)

Data from an experiment with one factor with k = 4 independent levels

3

Regression with dummy variables

With k independent groups, the k factor levels can be encoded using dummy coding. It is then possible to construct a matrix X in which each column Xk corresponds to one level of the factor, set in contrast to the reference level (in this case the last one). Note that the X0 column encodes the reference mean, in our example the mean of the k-th group.

4

Regression with dummy variables (0 1)

With this coding system, the matrix X'X takes the following values:
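The matrix shown on the slide is not preserved in this transcript. As a sketch under the standard definitions, and assuming group sizes $n_1, \dots, n_k$ with $N = \sum_j n_j$ (notation introduced here, not taken from the slides), dummy coding with the last group as reference would give:

```latex
X'X =
\begin{pmatrix}
N       & n_1    & n_2    & \cdots & n_{k-1} \\
n_1     & n_1    & 0      & \cdots & 0       \\
n_2     & 0      & n_2    & \cdots & 0       \\
\vdots  & \vdots & \vdots & \ddots & \vdots  \\
n_{k-1} & 0      & 0      & \cdots & n_{k-1}
\end{pmatrix}
```

The first row and column count how many observations share a 1 with the X0 column; the off-diagonal zeros arise because no observation belongs to two groups.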

5

Regression with dummy variables (0 1)

From which:

Similarly, the matrix X'y becomes:

6

Regression with dummy variables (0 1)

7

Meaning of the estimated parameters

Under dummy coding, the parameter b0 is the mean of the k-th category, which serves as the reference. The other parameters are the differences between the mean of each group and the mean of the reference category, which is the last one, encoded with the vector (0, 0, 0).

So that:

Whereas:
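The two formulas are missing from the transcript. Under the dummy coding described above, and writing $\bar{y}_j$ for the mean of group $j$ (a symbol assumed here), they would plausibly read:

```latex
b_0 = \bar{y}_k
\qquad\text{and}\qquad
b_j = \bar{y}_j - \bar{y}_k , \quad j = 1, \dots, k-1 .
```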

8

Meaning of the estimated parameters

The beta parameters estimated with the dummy

coding assess the following null hypotheses

9

Meaning of the estimated parameters

We know that, for each of the n_k observations of group k, X_k = 1 while the remaining X_{-k} = 0. Therefore, the value estimated by the regression for each group of independent observations is the mean of the observations in that group. In fact:
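The claim can be checked numerically. The following is a minimal sketch in R, with invented data for k = 4 groups; the variable names are illustrative and do not come from the original scripts.

```r
# Hypothetical data: k = 4 groups, 3 observations each (values invented)
y <- c(4, 5, 6,  7, 8, 9,  1, 2, 3,  10, 11, 12)
g <- rep(1:4, each = 3)

# Dummy (0/1) coding with the last group as reference
X <- cbind(X0 = 1,
           X1 = as.numeric(g == 1),
           X2 = as.numeric(g == 2),
           X3 = as.numeric(g == 3))

# Least-squares estimate b = (X'X)^{-1} X'y
b <- solve(t(X) %*% X, t(X) %*% y)

# Fitted value of every observation, and the group means
fitted_vals <- drop(X %*% b)
group_means <- tapply(y, g, mean)

print(drop(b))       # b0 = mean of group 4; b1..b3 = differences from group 4
print(group_means)   # each fitted value equals the mean of its group
```

With these data the group means are 5, 8, 2 and 11, so b0 is 11 and the other coefficients are the differences from it.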

10

Sums of squares

In general, the total sum of squares (SStot) can be decomposed into the part ascribed to the regression (SST) and the part ascribed to the error (SSW).
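The formulas on the following slides are not preserved. As a sketch in standard notation (the indices $i$ for observations and $j$ for groups, and $\bar{y}$ for the grand mean, are assumptions), the decomposition can be written as:

```latex
\underbrace{\sum_{j=1}^{k}\sum_{i=1}^{n_j} (y_{ij} - \bar{y})^2}_{SS_{tot}}
=
\underbrace{\sum_{j=1}^{k} n_j\,(\bar{y}_j - \bar{y})^2}_{SS_T}
+
\underbrace{\sum_{j=1}^{k}\sum_{i=1}^{n_j} (y_{ij} - \bar{y}_j)^2}_{SS_W}
```

In matrix form, with $N$ the total number of observations:

```latex
SS_T = b'X'y - N\bar{y}^2 , \qquad SS_W = y'y - b'X'y .
```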

11

Sums of squares

12

Sums of squares

13

ANOVA Results

As in multiple regression, it is possible to test the overall null hypothesis that all the estimated betas are equal to 0, leading to the following result:

where k is the number of columns of the matrix X excluding X0.
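The result itself is missing from the transcript. With k defined as above and $N$ the total number of observations (an assumed symbol), the usual overall test would be:

```latex
F = \frac{SS_T / k}{SS_W / (N - k - 1)} \;\sim\; F_{k,\;N-k-1} \quad \text{under } H_0 ,
\qquad
R^2 = \frac{SS_T}{SS_{tot}} .
```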

14

Effect coding (1, 0, -1)

The levels of the factor can also be encoded with a coding centered on the overall mean of the observations. This is called effect coding. Note that the X0 column encodes the overall mean. The last group takes the value -1, so that the values in each column sum to 0.

15

Effect coding (1, 0, -1)

With this coding system, the matrix X'X takes the following values:
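The slide's matrix is not preserved. As a sketch, assuming group sizes $n_1, \dots, n_k$ with $N = \sum_j n_j$ (notation introduced here), effect coding with -1 on the last group would give:

```latex
X'X =
\begin{pmatrix}
N             & n_1 - n_k & n_2 - n_k & \cdots & n_{k-1} - n_k \\
n_1 - n_k     & n_1 + n_k & n_k       & \cdots & n_k           \\
n_2 - n_k     & n_k       & n_2 + n_k & \cdots & n_k           \\
\vdots        & \vdots    & \vdots    & \ddots & \vdots        \\
n_{k-1} - n_k & n_k       & n_k       & \cdots & n_{k-1} + n_k
\end{pmatrix}
```

In a balanced design the first row and column reduce to $(N, 0, \dots, 0)$, so the X0 column is orthogonal to the others.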

16

Effect coding (1, 0, -1)

From which:

Similarly, the matrix X'y becomes:

17

Effect coding (1, 0, -1)

18

Meaning of the estimated parameters

Under effect coding, the parameter b0 corresponds to the overall mean of the observations (strictly, the unweighted mean of the group means, which coincides with the grand mean in balanced designs). The other parameters correspond to the difference between the mean of each group and the overall mean.

So that:

Whereas:
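The two formulas are missing from the transcript. Writing $\bar{y}_j$ for the mean of group $j$ and letting $k$ denote the number of groups here (both assumptions), they would plausibly read:

```latex
b_0 = \frac{1}{k} \sum_{j=1}^{k} \bar{y}_j
\qquad\text{and}\qquad
b_j = \bar{y}_j - b_0 , \quad j = 1, \dots, k-1 .
```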

19

Meaning of the estimated parameters

The parameters estimated with the Effect coding

assess the following null hypotheses

20

Meaning of the estimated parameters

We know that, for each of the n_k observations of group k, X_k = 1 and the remaining X_{-k} = 0. Therefore, the value estimated by the regression for each group of independent observations is the mean of the observations in that group.

21

Meaning of the estimated parameters

For the k-th group we have:

This shows that the difference between the two codings lies in the value taken by the beta parameters. In dummy coding a beta represents the difference from the mean of the reference group; in effect coding it represents the difference from the overall mean.
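The two codings can be compared side by side. The sketch below uses invented data and R's built-in contrast functions `contr.treatment` and `contr.sum`; it is an illustration, not the original script.

```r
# Hypothetical data: 4 groups, 3 observations each (values invented)
y <- c(4, 5, 6,  7, 8, 9,  1, 2, 3,  10, 11, 12)
g <- factor(rep(1:4, each = 3))

# Dummy coding with the last level as reference
fit_dummy <- lm(y ~ g, contrasts = list(g = contr.treatment(4, base = 4)))

# Effect coding: contr.sum assigns -1 to the last level
fit_effect <- lm(y ~ g, contrasts = list(g = contr.sum(4)))

b_dummy  <- unname(coef(fit_dummy))   # reference mean, then differences from it
b_effect <- unname(coef(fit_effect))  # mean of group means, then deviations from it

# Prediction for the last group under effect coding: b0 - (b1 + b2 + b3)
pred_last <- b_effect[1] - sum(b_effect[-1])

print(b_dummy)
print(b_effect)
print(pred_last)
```

Both models produce the same fitted values; only the interpretation of the coefficients changes.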

22

Orthogonal coding

When the independent variables are mutually independent, their contributions to the fit of the model to the data can be partitioned according to the proportions:

The contributions of the k variables X are then unique and independent, and there are no indirect effects. This condition can be obtained with an orthogonal coding of the factor levels.
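The slide's formula is not preserved. A standard statement of this property, assuming mutually uncorrelated predictors and writing $r_{y x_i}$ for the simple correlation between y and $X_i$ (an assumed symbol), is:

```latex
R^2_{y \cdot 1 2 \dots k} = r^2_{y x_1} + r^2_{y x_2} + \dots + r^2_{y x_k}
```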

23

Orthogonal coding

The coding is orthogonal when the components of the effects are purely additive, that is, when the multiplicative components are equal to zero. The columns then define mutually orthogonal comparisons between means in the analysis of variance. Comparisons of this type are called orthogonal contrasts.

24

Orthogonal coding

Such contrasts can be built in different ways. As a general rule, to encode a factor with l = 3 levels, you may want to use:
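The coding table from the slide is lost, so the following is only an illustration. One common pair of orthogonal contrasts for three levels, given in an integer form and in a form centered on the means, is:

```latex
\begin{array}{c|rr|rr}
\text{level} & X_1 & X_2 & X_1^{*} & X_2^{*} \\
\hline
1 &  1 &  1 &  \tfrac{1}{2} &  \tfrac{1}{3} \\
2 & -1 &  1 & -\tfrac{1}{2} &  \tfrac{1}{3} \\
3 &  0 & -2 &  0            & -\tfrac{2}{3}
\end{array}
```

With the starred columns the coefficients are directly the contrasts between means; with the integer columns they are scaled versions of them:

```latex
b_1^{*} = \bar{y}_1 - \bar{y}_2 , \qquad
b_2^{*} = \frac{\bar{y}_1 + \bar{y}_2}{2} - \bar{y}_3 , \qquad
b_1 = \frac{b_1^{*}}{2} , \qquad
b_2 = \frac{b_2^{*}}{3} .
```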

25

Orthogonal coding

This coding allows the following null hypotheses to be evaluated:

The estimated beta parameters allow you to make a decision about these hypotheses. In fact:

26

Orthogonal coding

It seems clear that a coding centered directly on the means is preferable, because the estimated beta parameters are then easier to read.

27

Orthogonal coding

The estimated parameters therefore are

28

Variance decomposition

To conduct a statistical test on the regression coefficients, it is necessary to:

- calculate SSreg and SSres for the model containing all independent variables

- calculate SSreg for the model excluding the variable whose significance you want to test (SS-i) or, in balanced orthogonal designs, directly calculate the sum of squares due only to the variables whose significance you want to test (SSi)

- perform an F-test with, in the numerator, SSi divided by the difference in degrees of freedom and, in the denominator, SSres / (n-k-1)
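The three steps above can be sketched in R as a comparison between a full and a reduced model. The data and variable names are invented for illustration.

```r
# Hypothetical data (values invented for illustration)
y  <- c(3, 5, 4, 8, 9, 7, 12, 11, 14, 13)
x1 <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
x2 <- c(2, 1, 4, 3, 6, 5, 8, 7, 10, 9)

full    <- lm(y ~ x1 + x2)   # model with all independent variables
reduced <- lm(y ~ x2)        # model without the variable under test (x1)

ss_res_full <- sum(resid(full)^2)
ss_res_red  <- sum(resid(reduced)^2)

# SSreg(full) - SSreg(reduced) equals SSres(reduced) - SSres(full),
# because both models share the same total sum of squares
ss_i <- ss_res_red - ss_res_full
df_i <- df.residual(reduced) - df.residual(full)

# F = (SSi / df difference) / (SSres / (n - k - 1))
f_val <- (ss_i / df_i) / (ss_res_full / df.residual(full))
p_val <- pf(f_val, df_i, df.residual(full), lower.tail = FALSE)

# The same test through R's built-in model comparison
cmp <- anova(reduced, full)
print(c(F = f_val, p = p_val))
print(cmp)
```

Here `df.residual(full)` plays the role of n-k-1, and the hand computation agrees with the row that `anova` reports for the full model.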

29

Variance decomposition

To test, for example, the weight of only the

first variable X1 with respect to the total

model, it is necessary to calculate SSreg

starting from b1 and X1.

30

Variance decomposition

31

Variance decomposition

32

Variance decomposition

You can then calculate the F statistic for the complete model as well as for the individual variables Xi.

33

Variance decomposition

Similarly, the proportion of variance explained by the model can be reconstructed additively.

34

Variance decomposition

- However, there are different algorithms for decomposing the variance attributed to the several factors, especially when the independent variables (IV) and any covariates (CV) are correlated with each other.

- Following the distinction made by SAS, four modes of variance decomposition are indicated. These modes are called:

  - Type I

  - Type II

  - Type III

  - Type IV

35

Variance decomposition

- In R / S-PLUS the anova function calculates Type I SS. The car package was developed to provide Type II and Type III SS through its Anova function.

- For more details see:

  - Langsrud, Ø. (2003), ANOVA for Unbalanced Data: Use Type II Instead of Type III Sums of Squares, Statistics and Computing, 13, 163-167.

36

Variance decomposition

- Type I: sequential

  - The SS for each factor is the incremental improvement in the error SS as each factor effect is added to the regression model. In other words, it is the effect obtained as if the factors were considered one at a time, in the order in which they are entered in the model. The SS can also be viewed as the reduction in the residual sum of squares (SSE) obtained by adding that term to a fit that already includes the terms listed before it.

- Pros

  - (1) A nice property: balanced or not, the SS for all the effects add up to the total SS, a complete decomposition of the predicted sums of squares for the whole model. This is not generally true for any other type of sums of squares.

  - (2) Preferable when some factors (such as nesting) should be taken out before other factors. For example, with unequal numbers of males and females, the factor "gender" should precede "subject" in an unbalanced design.

- Cons

  - (1) Order matters! Hypotheses depend on the order in which effects are specified. If you fit a 2-way ANOVA with two models, one with A then B and the other with B then A, not only can the Type I SS for factor A differ between the two models, but there is no certain way to predict whether the SS will go up or down when A comes second instead of first. This lack of invariance to order of entry limits the usefulness of Type I sums of squares for testing hypotheses in certain designs.

  - (2) Not appropriate for factorial designs
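The order dependence of Type I SS can be seen with the base `anova` function on a small unbalanced data set. The data below are invented for illustration.

```r
# Hypothetical unbalanced 2x2 data (values invented for illustration)
d <- data.frame(
  A = factor(c("a1", "a1", "a1", "a1", "a1", "a2", "a2", "a2", "a2")),
  B = factor(c("b1", "b1", "b1", "b1", "b2", "b1", "b2", "b2", "b2")),
  y = c(5, 6, 7, 6, 9, 8, 12, 13, 11)
)

tab_ab <- anova(lm(y ~ A + B, data = d))  # A entered first
tab_ba <- anova(lm(y ~ B + A, data = d))  # B entered first

ss_a_first  <- tab_ab["A", "Sum Sq"]      # SS(A)
ss_a_second <- tab_ba["A", "Sum Sq"]      # SS(A | B)

print(tab_ab)
print(tab_ba)

# In both orders the rows add up to the same total sum of squares
print(c(sum(tab_ab[, "Sum Sq"]), sum(tab_ba[, "Sum Sq"])))
```

The SS attributed to A changes with its position in the formula, while the column total stays the same, which is the trade-off described in the pros and cons above.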

37

Variance decomposition

- Type II: hierarchical or partially sequential

  - The SS is the reduction in residual error due to adding the term to the model after all other terms except those that contain it, or equivalently the reduction in the residual sum of squares obtained by adding that term to a model consisting of all other terms that do not contain the term in question. An interaction comes into play only when all the factors involved are included in the model. For example, the SS for the main effect of factor A is not adjusted for any interactions involving A (AB, AC and ABC), and the sums of squares for two-way interactions control for all main effects and all other two-way interactions, and so on.

- Pros

  - (1) Appropriate for model building, and a natural choice for regression.

  - (2) Most powerful when there is no interaction.

  - (3) Invariant to the order in which effects are entered into the model.

- Cons

  - (1) For factorial designs with unequal cell samples, Type II sums of squares test hypotheses that are complex functions of the cell ns and that ordinarily are not meaningful.

  - (2) Not appropriate for factorial designs

38

Variance decomposition

- Type III: marginal or orthogonal

  - The SS is the sum of squares that would be obtained for each variable if it were entered last into the model. That is, the effect of each variable is evaluated after all other factors have been accounted for. The result for each term is therefore equivalent to what is obtained with a Type I analysis when the term enters the model last in the ordering.

- Pros

  - Not sample-size dependent: effect estimates are not a function of the frequency of observations in any group (i.e. for unbalanced data, where we have unequal numbers of observations in each group). When there are no missing cells in the design, these subpopulation means are least squares means, which are the best linear unbiased estimates of the marginal means for the design.

- Cons

  - (1) Tests main effects in the presence of interactions.

  - (2) Not appropriate for designs with missing cells: for ANOVA designs with missing cells, Type III sums of squares generally do not test hypotheses about least squares means, but instead test hypotheses that are complex functions of the patterns of missing cells in higher-order containing interactions and that are ordinarily not meaningful.

39

Orthogonal coding with k > 3

To encode a factor with l = 4 levels, the general coding becomes:

40

Orthogonal coding with k > 3

You can thus test the following hypotheses

The sum of squares can then be decomposed

orthogonally as follows

41

Designs with multiple independent factors

Take as reference the following experiment with

two independent factors, each with two levels

(2x2)

42

Designs with multiple independent factors

Graphical representation of the cell means AiBj

43

Designs with multiple independent factors

The two levels of each factor can be encoded by assigning to each factor one column of the matrix X (X1 and X2 respectively). You also need to encode the interaction between the factors, adding as many columns as there are possible interactions among the factors. Here the column that encodes the interaction is X3, calculated as the element-wise product of X1 and X2.

44

Designs with multiple independent factors

- The orthogonal coding considered previously does not allow an immediate understanding of the estimated parameters.

- We therefore recommend the following orthogonal coding, where the element in the denominator corresponds to the number of levels of the factor.

- The interaction is calculated as indicated above.
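The recommended coding table is not preserved in this transcript. The sketch below assumes it assigns +1/2 and -1/2 to the two levels of each factor (an assumption based on the "number of levels in the denominator" remark) and uses invented data.

```r
# Hypothetical balanced 2x2 data, 2 observations per cell (values invented)
A <- rep(c(1, 2), each = 4)          # levels of factor A
B <- rep(rep(c(1, 2), each = 2), 2)  # levels of factor B
y <- c(4, 6,  8, 10,  5, 7,  15, 17)

# Assumed orthogonal coding with the number of levels (2) in the denominator
X1 <- ifelse(A == 1, 1/2, -1/2)
X2 <- ifelse(B == 1, 1/2, -1/2)
X3 <- X1 * X2                        # interaction column as a product

X <- cbind(X0 = 1, X1, X2, X3)
b <- drop(solve(t(X) %*% X, t(X) %*% y))

cell <- tapply(y, list(A, B), mean)  # cell means AiBj

print(b)
print(cell)
```

With this coding b0 is the grand mean, b1 and b2 are the differences between the marginal means of A and of B, and b3 is the difference of differences between cells, which is what the parallelism hypothesis concerns.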

45

Designs with multiple independent factors

Estimating the beta parameters

The estimated parameters indicate

The parameter b3, relative to the interaction, allows the hypothesis of parallelism to be tested. This parameter must be examined before the individual factors.

46

Designs with multiple independent factors

47

Designs with multiple independent factors

You can now test the following hypotheses

48

Designs with multiple independent factors

You can estimate the percentage of variance explained by the factors and by the interaction, as well as by the overall model.

49

3x3 experimental design with interaction

Let's look at a more complex experimental design,

with two factors with three levels each (3x3).

50

3x3 experimental design with interaction

- To encode the levels of the two factors and the interactions, a matrix such as the following can be constructed, with reference to dummy coding (only the observed value for the last subject is shown).

- X1 and X2 encode the first factor A,

- X3 and X4 encode the second factor B,

- X5, X6, X7, X8 encode the interactions between levels.

The complete matrix X will therefore be a 45 rows x 9 columns matrix.

51

3x3 experimental design with interaction

Likewise the following orthogonal encoding is

adequate

52

3x3 experimental design with interaction

- The following notation makes it possible to recognize the contrasts between levels directly in the beta parameters.

- The interaction can easily be coded by multiplying the corresponding columns of the matrix X that encode the main factors.

53

3x3 experimental design with interaction

Estimating the parameters and the sums of squares, we find:

54

3x3 experimental design with interaction

The beta parameters give an immediate decomposition of the variance into the two factors and the interaction.

55

3x3 experimental design with interaction

You can now test the following hypotheses, one for each estimated beta parameter:

56

3x3 experimental design with interaction

57

Repeated measures design

Observed data

Scores on a 10-point anxiety scale, before and after treatment, from 4 subjects.

58

Repeated measures design

Even a simple design such as this one requires the construction of a large matrix in which the subjects, the factors and the interactions are encoded.

[Design matrix, with column blocks labelled: factor, subjects, interaction]

59

Repeated measures design

You can estimate the parameters b according to the general formula:

Then you can calculate:

60

Repeated measures design

Unlike the between-subjects factorial design, in this within-subjects model SSres is not calculated. We are dealing with a "saturated" model, in which the error share of the regression is zero, since the model explains all the variance.

61

Repeated measures design

The statistical test therefore concerns whether the part of variance due to the factor (SST) differs from 0, corrected for the part of variance due to the interaction of subjects with treatment (SSint). This hypothesis can also be formulated as follows:
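The slide's formula is not preserved. As a sketch, assuming $t$ treatment levels and $n$ subjects (symbols introduced here), the test described would take the form:

```latex
F = \frac{SS_T / (t - 1)}{SS_{int} / \big[(t - 1)(n - 1)\big]}
\;\sim\; F_{\,t-1,\;(t-1)(n-1)} \quad \text{under } H_0 .
```

For the example with 4 subjects measured before and after treatment, this gives 1 and 3 degrees of freedom.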

62

Repeated measures design

63

2x2 repeated measures design

- Now consider an experimental design with repeated measures using the following factors:

  - stimulus left / right (qstSE)

  - response left / right (qreSE)

- The dependent variable is the reaction time, measured in msec.

- The 2x2 conditions were measured on a sample of 20 subjects.

64

2x2 repeated measures design

This design requires the construction of a large matrix in which the repeated measurements (in our case the subjects, id), the factors (A and B) and the interactions are encoded. In the table we consider only 3 subjects.

[Table with column blocks: A, B, id, AB, A:id, B:id, AB:id]

65

2x2 repeated measures design

- The complete design matrix has:

  - Rows: A(2) x B(2) x id(20) = 80

  - Columns: x0 + A(1) + B(1) + id(19) + interactions = 80

- For convenience, the analysis continues with the native functions of the R language, which are based on matrix regression.

- Specifically, the function lm(formula, ...) builds the contrast matrix X from variables of type factor through the function model.matrix, then estimates the parameters by least squares, conceptually solve(t(x) %*% x, t(x) %*% y).

- See the commented scripts for details; they also describe the functions rmFx and a.rm.
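The relationship between `lm`, `model.matrix` and the normal equations can be verified on a toy example. The data frame below is invented, and the code is a sketch rather than the commented script mentioned above.

```r
# Hypothetical data (values invented for illustration)
d <- data.frame(
  g = factor(rep(c("a", "b", "c"), each = 3)),
  y = c(4, 5, 6, 7, 8, 9, 1, 2, 3)
)

# Design matrix built from the factor with R's default contrasts
x <- model.matrix(y ~ g, data = d)

# Least-squares estimate from the normal equations
b <- solve(t(x) %*% x, t(x) %*% d$y)

# The same model fitted with lm
fit <- lm(y ~ g, data = d)

print(drop(b))
print(coef(fit))
```

Internally `lm` uses a QR decomposition rather than the normal equations, so the two routes agree up to numerical precision.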

66

2x2 repeated measures design

- Since this is a saturated model, the model residuals are expected to be zero.

    > aov.lmgv0 <- anova(lm(tr ~ id * qstSE * qreSE))
    > aov.lmgv0
    Analysis of Variance Table

    Response: tr
                   Df Sum Sq Mean Sq F value Pr(>F)
    id             19 273275   14383
    qstSE           1   1268    1268
    qreSE           1   3429    3429
    id:qstSE       19   6326     333
    id:qreSE       19  15628     823
    qstSE:qreSE     1   3774    3774
    id:qstSE:qreSE 19  18030     949
    Residuals       0      0

67

2x2 repeated measures design

- You must find "by hand" the correcting element (error term) for each factor investigated.

- Specifically:

  - qstSE is corrected by the interaction between id and qstSE, indicated as id:qstSE.

  - qreSE is corrected by id:qreSE.

  - qstSE:qreSE is corrected by id:qstSE:qreSE.
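These ratios can be reproduced by hand from the rounded mean squares in the ANOVA table of the previous slide. The sketch below is not the rmFx function, whose implementation is not shown in the slides; it only divides each effect's mean square by that of its error term.

```r
# Mean squares copied (rounded) from the ANOVA table of the saturated model
ms_effect <- c(qstSE = 1268, qreSE = 3429, "qstSE:qreSE" = 3774)
ms_error  <- c(qstSE = 333,  qreSE = 823,  "qstSE:qreSE" = 949)

df_effect <- 1    # each effect has 1 degree of freedom
df_error  <- 19   # each subject-by-effect interaction has 19

# F = MS(effect) / MS(effect-by-subject interaction)
f_val <- ms_effect / ms_error
p_val <- pf(f_val, df_effect, df_error, lower.tail = FALSE)

print(round(cbind(F = f_val, p = p_val), 4))
```

Because the inputs are rounded, the F values can differ in the third decimal from those computed on the unrounded mean squares.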

68

2x2 repeated measures design

- The rmFx function allows you to set such contrasts and compute the values of F.

    > aov.lmgv0 <- anova(lm(tr ~ id * qstSE * qreSE))
    > ratioF <- c(2,4, 3,5, 6,7)
    > aov.lmgv0 <- rmFx(aov.lmgv0, ratioF)
    > aov.lmgv0
    Analysis of Variance Table

    Response: tr
                       Df Sum Sq Mean Sq F value  Pr(>F)
    1, id              19 273275   14383
    2, qstSE            1   1268    1268  3.8075 0.06593 .
    3, qreSE            1   3429    3429  4.1693 0.05529 .
    4, id:qstSE        19   6326     333
    5, id:qreSE        19  15628     823
    6, qstSE:qreSE      1   3774    3774  3.9766 0.06069 .
    7, id:qstSE:qreSE  19  18030     949
    8, Residuals        0      0

69

2x2 repeated measures design

- The same results are produced by the a.rm(formula, ...) function.

    > a.rm(tr ~ qstSE * qreSE * id)
    Analysis of Variance Table

    Response: tr
                   Df Sum Sq Mean Sq F value  Pr(>F)
    qstSE           1   1268    1268  3.8075 0.06593 .
    qreSE           1   3429    3429  4.1693 0.05529 .
    id             19 273275   14383
    qstSE:qreSE     1   3774    3774  3.9766 0.06069 .
    qstSE:id       19   6326     333
    qreSE:id       19  15628     823
    qstSE:qreSE:id 19  18030     949
    Residuals       0      0
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

70

Mixed design

- Consider the following mixed design, taken from Keppel (2001), pp. 350 ff.

- The "Sommeliers" experiment consists of a 2x3 mixed design:

  - Y: dependent variable ("wine quality"),

  - A: 1 between factor ("type of wine"),

  - B: 1 within factor ("oxygenation time"),

  - Id: 5 subjects, randomly assigned.

- The commented script is reported in anova7.r

71

Mixed design

72

Mixed design

- The residuals of the model are expected to be zero.

- It becomes necessary to determine which MS are to be placed in the denominator for the calculation of F.

    > anova(lm(y ~ A * B * id))
    Analysis of Variance Table

    Response: y
              Df Sum Sq Mean Sq F value Pr(>F)
    A          1 53.333  53.333
    B          2 34.067  17.033
    id         8 34.133   4.267
    A:B        2 10.867   5.433
    B:id      16 19.067   1.192
    Residuals  0  0.000

73

Mixed design

- The between factor A is corrected by the variability due to subjects, id.

- The within factor B and the interaction A:B are corrected by the interaction between B and id, B:id.

    > aov.lmgv0 <- anova(lm(y ~ A * B * id))
    > ratioF <- c(1,3, 2,5, 4,5)
    > aov.lmgv0 <- rmFx(aov.lmgv0, ratioF)
    > aov.lmgv0
    Analysis of Variance Table

    Response: y
              Df Sum Sq Mean Sq F value    Pr(>F)
    A          1 53.333  53.333 12.5000 0.0076697
    B          2 34.067  17.033 14.2937 0.0002750
    id         8 34.133   4.267
    A:B        2 10.867   5.433  4.5594 0.0270993
    B:id      16 19.067   1.192
    Residuals  0  0.000

74

Mixed design

- Planned comparisons

75

some clarifications

- ANOVA, MANOVA, ANCOVA and MANCOVA: what are the differences?

  - ANOVA: analysis of variance with one or more factors

  - ANCOVA: analysis of covariance (or regression)

  - MANOVA: multivariate analysis of variance (multiple dependent variables)

  - MANCOVA: multivariate analysis of covariance (similar to multiple regression)

76

Analysis of Covariance

- ANCOVA is an extension of ANOVA in which the main effects and interactions of the independent variables (IV) on the dependent variable (DV) are measured after removing the effects of one or more covariates.

- A covariate (CV) is an external source of variation; removing it from the DV reduces the size of the error term.

77

Analysis of Covariance

- Main purposes of ANCOVA:

  - To increase the sensitivity of a test by reducing the error

  - To adjust the means on the DV through the CV scores

78

Analysis of Covariance

- ANCOVA increases the power of the F test by

removing non-systematic variance in the DV.

[Diagram comparing ANOVA (IV, DV, Error) with ANCOVA (IV, Covariate, DV, Error)]

79

Analysis of Covariance

- Take for example the following data set, from

Tabachnick, pp. 283, 287-289

80

Analysis of Covariance

- To analyze the relationship between the post-test scores and the experimental group, using the pre-test score as a covariate, you must construct the following matrix:

81

Analysis of Covariance

- It is interesting to note the difference in the significance of the results between this model and the model that does not consider the pre-test score (ANOVA).

- The full results are reported in the file anova8.zip

ANCOVA

|       | SS     | df | MS     | F    |
|-------|--------|----|--------|------|
| Group | 366.20 | 2  | 183.10 | 6.13 |
| Error | 149.43 | 5  | 29.89  |      |

p < .05

ANOVA

|       | SS     | df | MS     | F    |
|-------|--------|----|--------|------|
| Group | 432.89 | 2  | 216.44 | 4.52 |
| Error | 287.33 | 6  | 47.89  |      |

82

To conclude, it can be noted that:

- Regression, ANOVA and ANCOVA are very similar.

- Regression includes 2 or more continuous variables (1 or more IV and 1 DV).

- ANOVA has at least one categorical variable (IV) and exactly one continuous variable (DV).

- ANCOVA includes at least one categorical variable (IV), at least one continuous variable, the covariate (CV), and a single continuous DV.

- MANOVA and MANCOVA are similar, except that they have multiple, interrelated DVs.

83

Calculation of power ...

- and of the number of subjects needed for an experiment

- http://duke.usask.ca/~campbelj/work/MorePower.html

- http://www.stat.uiowa.edu/~rlenth/Power/