
1

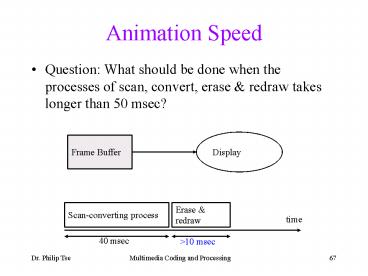

Animation Speed

- Question: what should be done when scan-converting, erasing and redrawing take longer than 50 msec?

[Figure: timeline of the display and the frame buffer; the scan-converting process takes 40 msec and erase/redraw takes >10 msec.]

2

Use Double Buffering

- The frame buffer is divided into two images, each with half the bits per pixel of the overall frame buffer.

[Figure: timeline in which the 1st half and the 2nd half of the frame buffer alternate between erase/redraw and scan-converting, in 25 msec slots.]
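The double-buffering idea above can be sketched in Python. This is a hypothetical illustration, assuming an 8-bit-per-pixel frame buffer split into two 4-bit images (the low nibble and the high nibble); the class and method names are invented for the example. The display reads only the front half, scan conversion writes only into the back half, and a swap exchanges their roles.

```python
class SplitFrameBuffer:
    """Hypothetical sketch: one 8-bit-per-pixel frame buffer holding two 4-bit images."""

    BITS_PER_HALF = 4
    HALF_MASK = 0xF

    def __init__(self, num_pixels):
        self.pixels = [0] * num_pixels
        self.front = 0  # 0: low nibble is displayed, 1: high nibble is displayed

    def draw(self, index, value):
        """Scan-convert into the back (hidden) half without disturbing the displayed half."""
        if not 0 <= value <= self.HALF_MASK:
            raise ValueError("each half only has 4 bits per pixel")
        shift = self.BITS_PER_HALF * (1 - self.front)
        keep = ~(self.HALF_MASK << shift) & 0xFF  # bits belonging to the front half
        self.pixels[index] = (self.pixels[index] & keep) | (value << shift)

    def displayed(self, index):
        """Value the display sees at this pixel (front half only)."""
        return (self.pixels[index] >> (self.BITS_PER_HALF * self.front)) & self.HALF_MASK

    def swap(self):
        """Exchange the front and back halves once the new frame is complete."""
        self.front = 1 - self.front


fb = SplitFrameBuffer(4)
fb.draw(0, 9)            # drawn into the hidden half
print(fb.displayed(0))   # 0: the new frame is not visible yet
fb.swap()
print(fb.displayed(0))   # 9: visible after the swap
```

The cost of this scheme is colour depth: each image only gets half of the frame buffer's bits per pixel.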

3

Course Progress

- Introduction

- Data Representation

- Processing Techniques

4

Data Representation

- Number and text

- Graphics and animations

- Sound and Audio

- Image Representation

- Video Representation

5

Sound and Audio

- Sound concept: how is analog sound represented in digital data?

- Human Auditory System

- Digitization of sound waves

- Standard Sound and Audio formats

6

Sound and Audio

- A sound wave is a longitudinal wave of air pressure.

- Unlike electromagnetic waves, which are transverse, the change in air pressure is in the same direction as the propagation of the sound.

[Figure: air pressure of a sound wave plotted against time.]

7

Sound and Audio

- An audio sound wave is characterised by its pitch and loudness.

- A sound wave can be represented by a waveform with a frequency and an amplitude, such that:

- Frequency measures pitch

- Amplitude measures loudness

[Figure: waveform plotted against time.]

8

Amplitude

- Dynamic range is the difference between the quietest and the loudest sound.

- Amplitude measures the loudness of the sound.

[Figure: waveform with its amplitude marked, plotted against time.]

9

Amplitude

- Amplitude is measured in units of decibels (dB).

- Low: background noise

- Normal: speaking

- High: uncomfortable to ears (>80 dB)

- An amplitude of X units (relative to a reference amplitude) corresponds to Y dB, where

- $Y = 20 \log_{10} X$

[Figure: waveform with its amplitude marked, plotted against time.]
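The decibel relation $Y = 20 \log_{10} X$ can be checked numerically with a small sketch. The helper names below are hypothetical; the inverse function simply solves the same equation for X.

```python
import math

def ratio_to_db(x):
    """Express an amplitude ratio x in decibels: Y = 20 * log10(x)."""
    if x <= 0:
        raise ValueError("amplitude ratio must be positive")
    return 20.0 * math.log10(x)

def db_to_ratio(y):
    """Invert Y = 20 * log10(X) to recover the amplitude ratio X = 10 ** (Y / 20)."""
    return 10.0 ** (y / 20.0)

print(ratio_to_db(10))    # 20.0: ten times the amplitude is +20 dB
print(ratio_to_db(2))     # about 6.02: doubling the amplitude is roughly +6 dB
print(db_to_ratio(120))   # 1000000.0: a 120 dB range spans a million-fold amplitude ratio
```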

10

Wavelength

- Air pressure of sound waves oscillates in cycles.

- Wavelength (strictly, the period of the waveform) is the duration of one cycle of the waveform.

- Wavelengths can therefore be measured in seconds or milliseconds.

[Figure: waveform plotted against time with one wavelength marked.]

11

Frequency

- Frequency = 1 / wavelength

- Frequency is measured in hertz (Hz): 1 Hz = 1/second

- Infrasound: 0 to 20 Hz

- Human hearing: 20 Hz to 20 kHz

- Ultrasound: 20 kHz to 1 GHz

- Hypersound: >1 GHz

[Figure: waveform plotted against time with one wavelength marked.]
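A minimal sketch of the two rules above: frequency as the reciprocal of the cycle duration, and the frequency bands listed on the slide. The function names are hypothetical, and how the exact boundary values (20 Hz, 20 kHz, 1 GHz) are assigned is an assumption.

```python
def frequency_hz(period_s):
    """Frequency = 1 / wavelength, where 'wavelength' is the duration of one cycle in seconds."""
    return 1.0 / period_s

def sound_band(f_hz):
    """Classify a frequency using the ranges listed on the slide (boundary handling assumed)."""
    if f_hz < 20:
        return "infrasound"
    if f_hz <= 20_000:
        return "human hearing"
    if f_hz <= 1e9:
        return "ultrasound"
    return "hypersound"

f = frequency_hz(0.001)   # a cycle lasting 1 ms
print(f, sound_band(f))   # 1000.0 human hearing
```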

12

Sound and Audio

- A pure tone is a sine wave.

- Two waves with the same frequency may start at different times. Phase, or phase shift, refers to the relative time delay between two waves.

[Figure: two sine waves of the same frequency plotted against time, offset by a phase shift.]
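A pure tone with amplitude A, frequency f and phase φ can be written as A sin(2πft + φ). The sketch below, with hypothetical helper names, evaluates such a tone and converts a phase shift into the equivalent time offset φ / (2πf), which is how a phase angle relates to the "relative time delay" described above.

```python
import math

def pure_tone(amplitude, freq_hz, phase_rad, t):
    """Value of the pure tone A * sin(2*pi*f*t + phase) at time t in seconds."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t + phase_rad)

def phase_to_delay(phase_rad, freq_hz):
    """Time offset (seconds) equivalent to a phase shift at the given frequency."""
    return phase_rad / (2 * math.pi * freq_hz)

f = 1000.0                        # 1 kHz tone
shift = math.pi / 2               # quarter-cycle phase shift
print(phase_to_delay(shift, f))   # roughly 0.00025 s, i.e. 0.25 ms
```

A tone with phase +π/2 takes the same values as the unshifted tone evaluated 0.25 ms later, so the two waves differ only by that time offset.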

13

Sound and Audio

- Sound waves are additive. In general, the resultant sound wave is represented by a sum of sine waves.

[Figure: component tones and the resultant sound wave, plotted against time.]
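Because sound waves are additive, a resultant wave can be computed sample by sample as the sum of its component tones. In this sketch the three components (440 Hz with two weaker harmonics) and the 8 kHz sampling rate are arbitrary choices made for the example.

```python
import math

def tone(amplitude, freq_hz, phase_rad=0.0):
    """Return one sine-wave component as a function of time t (seconds)."""
    def wave(t):
        return amplitude * math.sin(2 * math.pi * freq_hz * t + phase_rad)
    return wave

def resultant(components, t):
    """Sound waves are additive: the resultant is the sum of the component tones at time t."""
    return sum(component(t) for component in components)

# Hypothetical component tones: 440 Hz plus two weaker harmonics.
components = [tone(1.0, 440.0), tone(0.5, 880.0), tone(0.25, 1320.0)]
samples = [resultant(components, n / 8000) for n in range(16)]  # 16 samples at 8 kHz
```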

14

Human Auditory System

- Human ear converts sound waves to nerve signals

- Brain neurons recognize the nerve signals

- Human ears can hear

- Frequency range of 20 Hz to 20 kHz.

- Dynamic range of 120 dB.

- Ears are uncomfortable above 80 dB.

15

Human Auditory System

- Psychoacoustic studies show that some sounds cannot be heard, because of the following psychoacoustic principles:

- Absolute threshold of hearing

- Critical band frequency

- Simultaneous masking

- Temporal masking

16

Absolute Threshold

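- The absolute threshold of hearing is the amount of energy needed in a pure tone for it to be detected by a listener in a noiseless environment.

- This threshold depends on the frequency.

- The absolute threshold can be approximated by

- $T_q(f) = 3.64\,(f/1000)^{-0.8} - 6.5\,e^{-0.6\,(f/1000 - 3.3)^2} + 10^{-3}\,(f/1000)^4$ (f in Hz)

- in dB SPL, where SPL is the sound pressure level.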
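The commonly used approximation of the absolute threshold (Terhardt's formula), Tq(f) = 3.64 (f/1000)^-0.8 - 6.5 exp(-0.6 (f/1000 - 3.3)^2) + 10^-3 (f/1000)^4 dB SPL with f in Hz, can be evaluated directly. This is a sketch of that standard approximation; the function name is hypothetical.

```python
import math

def absolute_threshold_db_spl(f_hz):
    """Approximate absolute threshold of hearing Tq(f) in dB SPL (Terhardt's approximation)."""
    k = f_hz / 1000.0  # frequency in kHz
    return (3.64 * k ** -0.8
            - 6.5 * math.exp(-0.6 * (k - 3.3) ** 2)
            + 1e-3 * k ** 4)

for f in (100, 1000, 4000, 15000):
    print(f, round(absolute_threshold_db_spl(f), 1))
```

Printing a few frequencies shows the shape of the curve: the threshold is high at low and high frequencies and dips below 0 dB SPL in the few-kHz region where hearing is most sensitive.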

17

Absolute Threshold of Hearing

18

Absolute Threshold

- The lowest point on the curve is at around 4 kHz.

- $T_q(f)$ may be interpreted as the maximum allowable energy level for coding distortions introduced in the frequency domain.

19

Critical Band Frequency

- A frequency-to-place transformation takes place in the inner ear, along the basilar membrane.

- Each region in the cochlea is connected to a set of neural receptors.

- Distinct regions in the cochlea are tuned to different frequency bands.

- Critical bandwidth can be loosely defined as the bandwidth at which subjective responses change abruptly.

20

Critical Band Frequency

- The detection threshold for a narrow-band noise source between two masking tones remains constant as long as the frequency separation between the tones remains within a critical bandwidth.

- Beyond the critical bandwidth, the threshold rapidly decreases.

21

Critical Band Frequency

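[Figure: two masking sources separated by Δf with the measured narrow-band source between them, as sound pressure level (dB) against frequency; and the audibility threshold (dB) against Δf, with the critical bandwidth marked.]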

22

Simultaneous Masking

- Simultaneous Masking (SM)

- One sound is rendered inaudible because of the presence of another sound.

- The presence of a strong masker creates an excitation of sufficient strength on the basilar membrane, at the critical band location, to effectively block transmission of a weaker signal.

- Spread of Masking

- A masker centred within one critical band may affect the detection thresholds in other critical bands.

23

Simultaneous Masking

- There are 2 types of simultaneous masking, depending on the relative strength of the tone and the noise:

- Tone-masking noise

- Noise-masking tone

- The noise-masking threshold (the level below which noise is masked by a tone) is given by

- $TH_N = E_T - 14.5 - B$ (dB)

- where $E_T$ is the energy level of the critical-band tone masker and $B$ is the critical band number.

24

Simultaneous Masking

- The tone-masking threshold (the level below which a tone is masked by noise) is given by

- $TH_T = E_N - K$ (dB)

- where $E_N$ is the energy level of the critical-band noise masker and $K$ is typically between 3 and 5 dB.

- Masking thresholds are functions of Just Noticeable Distortion (JND).
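The two masking thresholds, TH_N = E_T - 14.5 - B and TH_T = E_N - K, are simple enough to compute directly. In this sketch the function names and the example levels are hypothetical, and the default K = 4 dB is an arbitrary choice inside the 3 to 5 dB range quoted above.

```python
def tone_masking_noise_threshold(e_tone_db, band_number):
    """TH_N = E_T - 14.5 - B: noise below this level (dB) is masked by the tone masker."""
    return e_tone_db - 14.5 - band_number

def noise_masking_tone_threshold(e_noise_db, k_db=4.0):
    """TH_T = E_N - K: a tone below this level (dB) is masked by the noise masker (K ~ 3-5 dB)."""
    return e_noise_db - k_db

print(tone_masking_noise_threshold(80.0, 10))   # 55.5
print(noise_masking_tone_threshold(70.0))       # 66.0
```

Note the asymmetry: a noise masker hides tones up to only a few dB below its own level, whereas a tone masker hides noise only well below its level.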

25

Temporal Masking

- Absolute audibility thresholds for masked sounds are increased prior to, during, and following the occurrence of a masking signal.

- A listener will not perceive signals beneath the elevated audibility thresholds produced by abrupt signal transients.

- Thus, abrupt signal transients create pre-masking and post-masking regions for a period of time.

26

Temporal Masking

[Figure: masked audibility threshold increase over time, showing the pre-masking, simultaneous masking and post-masking regions; time axes: time after masker appears (ms) and time after masker removal (ms).]

27

Temporal Masking

- Pre-masking tends to last only about 5 ms.

- Post-masking will extend from 50 to 300 ms, depending upon the strength and duration of the masker.

- Temporal masking has been used in audio-coding algorithms.

- Pre-masking has been exploited in conjunction with adaptive block-size transform coding to compensate for pre-echo distortions.
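A simplified sketch of the temporal masking regions: it classifies a probe time relative to a masker that is on from `onset_ms` to `offset_ms`. The function name is hypothetical, the hard region edges are a simplification (real thresholds decay gradually), and the default `post_ms=100` is an arbitrary value within the 50 to 300 ms range above.

```python
def masking_region(t_ms, onset_ms, offset_ms, pre_ms=5.0, post_ms=100.0):
    """Classify a probe at time t_ms against a masker that is on from onset_ms to offset_ms."""
    if onset_ms - pre_ms <= t_ms < onset_ms:
        return "pre-masking"        # just before the masker appears (about 5 ms)
    if onset_ms <= t_ms <= offset_ms:
        return "simultaneous masking"
    if offset_ms < t_ms <= offset_ms + post_ms:
        return "post-masking"       # after removal; 50-300 ms depending on the masker
    return "unmasked"

for t in (-10, -3, 100, 250, 400):
    print(t, masking_region(t, onset_ms=0, offset_ms=200))
```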

28

Sound Representation

- The analog sound wave is a one-dimensional wave of air pressure.

- Mathematically, the digital sound wave is represented in computers as a one-dimensional digital waveform.

- The amplitude of the waveform is the measured air pressure of the sound wave.

[Figure: waveform plotted against time.]

29

Digitization of Sound

- A microphone receives the sound waves and converts them into electronic signals in analog waveforms.

- An A/D converter performs analog-to-digital conversion, changing the analog signals into digital signals.

- Computers can store, transmit, and process the digital signals.

- A D/A converter performs the digital-to-analog signal conversion.

- The speakers convert the electronic signals into sound waves.

30

Digitization of Sound

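[Figure: an analog signal passes through an A/D converter to become digital data (e.g. 1001), which a D/A converter turns back into an analog signal.]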

31

Digitization of Sound Wave

- An A/D converter takes samples of the amplitude of the analog signal at different time positions.

- The number of samples per second, called the sampling rate, is fixed.

[Figure: waveform sampled at regular intervals against time, with the time for 1 sample marked.]

32

Amplitude

- The sample values can be encoded with more or fewer bits. More bits can describe the amplitude in finer detail.

- 8-bit quality → 256 different values

- 16-bit quality → 65,536 different values

- The sampled values are then encoded in a format such as Pulse Code Modulation (PCM). PCM uses a fixed-length code for each sample.
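Sampling at a fixed rate and quantizing each sample to a fixed number of bits can be sketched as below. This is an illustration only: it assumes the analog input lies in [-1, 1] and maps it onto unsigned codes 0 to 2^bits - 1 (offset binary), whereas real converters often use signed two's-complement codes. The function name is hypothetical.

```python
import math

def sample_and_quantize(signal, sample_rate_hz, duration_s, bits):
    """A/D sketch: sample signal(t) in [-1, 1] at a fixed rate, then quantize to `bits` bits."""
    levels = 2 ** bits                          # 8 bits -> 256 values, 16 bits -> 65,536 values
    n_samples = round(sample_rate_hz * duration_s)
    codes = []
    for n in range(n_samples):
        x = signal(n / sample_rate_hz)          # sample at t = n / fs
        x = max(-1.0, min(1.0, x))              # clip to the converter's input range
        codes.append(round((x + 1.0) / 2.0 * (levels - 1)))  # map [-1, 1] onto 0..levels-1
    return codes

def tone_440(t):
    return math.sin(2 * math.pi * 440 * t)

codes = sample_and_quantize(tone_440, 8000, 0.01, 8)   # 80 samples at 8,000 samples/second
```

Each code has the same fixed length (here 8 bits), which is the defining property of PCM noted above.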

33

Encoding Formats

- Pulse Code Modulation (PCM): a digital representation of an analog signal in which the magnitude of the signal is sampled regularly at uniform intervals and then quantized to a series of symbols in a binary code.

- Differential PCM: records the differences between samples instead of the sample values.

- Adaptive Differential PCM: records the differences between samples and adjusts the coding scales dynamically.
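The core idea of Differential PCM, storing differences between consecutive samples rather than the samples themselves, is sketched below. This is a simplified lossless version: practical DPCM quantizes the prediction error, and ADPCM additionally adapts the quantizer step size, neither of which is shown here.

```python
def dpcm_encode(samples):
    """Differential PCM sketch: keep the first sample, then only the differences between samples."""
    if not samples:
        return []
    diffs = [samples[0]]
    for prev, cur in zip(samples, samples[1:]):
        diffs.append(cur - prev)
    return diffs

def dpcm_decode(diffs):
    """Rebuild the samples by accumulating the differences."""
    samples = []
    total = 0
    for d in diffs:
        total += d
        samples.append(total)
    return samples

pcm = [100, 102, 105, 105, 103]
print(dpcm_encode(pcm))   # [100, 2, 3, 0, -2]
```

Neighbouring audio samples are usually close in value, so the differences are small and can be coded with fewer bits than the raw samples.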

34

Standard Audio/Sound Formats

- Telephone quality speech input

- Mono-channel

- 8,000 samples/second, 8 bits/sample

- CD quality stereo audio

- 2 stereo channels (left, right)

- 44,100 samples/second, 16 bits/sample
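The uncompressed bit rate of a PCM format is simply samples per second × bits per sample × channels. The sketch below, with a hypothetical helper name, applies that product to the two formats listed above.

```python
def pcm_bit_rate(samples_per_second, bits_per_sample, channels):
    """Uncompressed PCM bit rate in bits per second."""
    return samples_per_second * bits_per_sample * channels

telephone = pcm_bit_rate(8_000, 8, 1)    # 64,000 bit/s
cd = pcm_bit_rate(44_100, 16, 2)         # 1,411,200 bit/s
print(telephone, cd)
print(cd * 60 / 8 / 1_000_000)           # about 10.6 MB per minute of CD audio
```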

35

Standard Audio/Sound Formats

- DVD quality audio

- Mono (1.0), stereo (2.0), quad (4.0) or surround (5.1) channels

- 5.1 channels: left, right, centre, left rear, right rear, subwoofer

- 16, 20, or 24 bits per sample

- 44.1 kHz, 48 kHz, 88.2 kHz, or 96 kHz sampling rates

- PCM format

- Max bit rate 9.6 Mbps

36

Standard Audio/Sound Formats

- MIDI (Musical Instrument Digital Interface)

- Digital encoding of musical information

- The sound data itself is not stored. Only the commands (the music score) that describe how the music should be played are used.

- Highest compression; easy to edit

- Requires a music synthesizer to generate the music

37

Course Progress

- Introduction

- Data Representation

- Number and text

- Graphics and animations

- Sound and Audio

- Image Representation

- Video Representation

- Processing Techniques

38

Course Progress

- Image Representation

- Light and Colour

- Human vision system

- Image capture

- Image quality measurements

- Image resolution

- Connectivity and distance

- Colour representation models

- Camera calibration

39

Light and Colour

- Light

- Electromagnetic Wave

- Wavelength 380 to 780 nanometers (nm)

- Colour

- Depends on spectral content (wavelength composition)

- E.g. energy concentrated near 700 nm appears red.

- A spectral colour is light with a very narrow bandwidth.

- White light is achromatic.

40

Colour

- Two types of light sources

- Illuminating light source

- Reflective light source

41

Colour

- Illuminating light source generates

- Light of certain wavelength, or

- Light of a wide range of wavelength

- Follows the additive rule

- The colour of the mixed light depends on the SUM of the spectra of all the light sources

42

Colour

- Examples of illuminating light sources of a wide wavelength range

- Sun, stars

- Light bulb, fluorescent tube

- Examples of illuminating light sources of a narrow wavelength range

- Halogen lamp, Light power light bulb

- Phosphor, Light Emitting Diode

43

Colour

- Reflecting light

- An object absorbs incident light of some wavelengths

- The object reflects incident light of the remaining wavelengths

- E.g. an object that absorbs wavelengths other than red would appear red in colour

- Follows the subtractive rule: the colour of mixed reflecting light sources depends on the remaining unabsorbed wavelengths

44

Colour

- Examples of reflecting light sources

- Mirrors and white objects reflect all wavelengths of light regularly

- Most solid objects

- E.g. dye, photos, the moon

45

Colour

- Complements of colours

- Red ↔ Cyan

- Green ↔ Magenta

- Blue ↔ Yellow
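In an RGB representation, the complement of a colour can be sketched by subtracting each component from the maximum value, so that the colour and its complement add up to white. This assumes 8-bit components (0 to 255); the function name is hypothetical.

```python
def complement(rgb, max_value=255):
    """Complementary colour of an RGB triple: subtract each component from the maximum."""
    r, g, b = rgb
    return (max_value - r, max_value - g, max_value - b)

print(complement((255, 0, 0)))   # (0, 255, 255): red -> cyan
print(complement((0, 255, 0)))   # (255, 0, 255): green -> magenta
print(complement((0, 0, 255)))   # (255, 255, 0): blue -> yellow
```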

46

Human Vision System

- Schematic diagram of a human eye

[Figure: schematic of the human eye, labelling the cornea, iris, lens, circular muscle, retina, fovea and optic nerve.]

47

Human Vision System

- Each part of the eye has its own function

- The iris controls the intensity of light entering the eye

- The circular muscle controls the thickness of the lens

- The lens refracts the light onto the fovea

- The optic nerve transmits light signals to the brain

- Brain

- interprets the light signals from both eyes

- understands the signals as an image

48

Human Vision System

- Path of light in the human eye

- Enters eye through cornea

- Passes through hole within iris

- Refracted by the lens

- Hits the retina wall inside the eye

- Excites some light-sensitive cells

49

Human Vision System

- Path of visible light inside a human eye

[Figure: path of visible light through the eye, labelling the cornea, iris, lens, circular muscle, retina, fovea and optic nerve.]

50

Human Vision System

- 2 types of light-sensitive cells

- Cones and rods, named after their shapes

- Rods

- Very sensitive to the intensity of light

- Generate a monochromatic response

- More sensitive than cones at low light levels

- Distributed across the retina wall

- Let us see grey images under dim light at night

51

Human Vision System

- Cones

- Red cones, green cones, and blue cones

- Each type of cone is sensitive to light of a different wavelength

- Densely populated at the fovea: approx. 160,000 cones per mm²

- Size of cones: 1 µm across, an angle of 20 seconds of arc

- Smallest readable object: 0.2 mm at a distance of 1 m

52

Human Vision System

- We can distinguish only about 40 shades of brightness

- Our brightness sense adapts as we scan over an image

- Most colours can be matched by mixing different portions of a red, a green, and a blue primary source.

53

Image Capture

- Images may be captured using

- Cameras

- Video cameras

- Fax machines

- Ultrasound scanners

- Radio telescopes

- An image is formed by the capture of radiant

energy that has been reflected from surfaces that

are viewed

54

Image Capture

- The amount of reflected energy, f(x,y), is determined by two functions

- The amount of light falling on a scene, i(x,y)

- The reflectivity of the various surfaces in the scene, r(x,y)

- These two functions combine to give $f(x,y) = i(x,y)\,r(x,y)$

55

Image Capture

- The range of f(x,y) is practically bounded by the hardware resolution. It is calibrated to 0 for black and to 255 for white; intermediate values represent different intensities of grey.
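The image formation model f(x,y) = i(x,y) r(x,y), followed by calibration to the 0 (black) to 255 (white) range, can be sketched pixel by pixel. The linear calibration against a chosen `f_max` is an assumption made for illustration; real devices calibrate in hardware-specific ways. All names and values are hypothetical.

```python
def reflected_energy(illumination, reflectivity):
    """f(x, y) = i(x, y) * r(x, y), computed pixel by pixel."""
    return [[i * r for i, r in zip(i_row, r_row)]
            for i_row, r_row in zip(illumination, reflectivity)]

def calibrate_to_8bit(f, f_max):
    """Hypothetical linear calibration: 0 -> black (0), f_max or above -> white (255)."""
    return [[min(255, max(0, round(255 * v / f_max))) for v in row] for row in f]

illum = [[100.0, 100.0], [50.0, 50.0]]   # light falling on the scene
refl = [[0.0, 1.0], [0.5, 1.0]]          # reflectivity of each surface (0..1)
energy = reflected_energy(illum, refl)   # [[0.0, 100.0], [25.0, 50.0]]
print(calibrate_to_8bit(energy, f_max=100.0))   # [[0, 255], [64, 128]]
```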

56

Image Capture

[Figure: images captured by the red sensor, the green sensor and the blue sensor.]

A colour image is formed by combining the 3 images captured by the red, green, and blue sensors.

57

Cameras

- 3 main types of camera

- Vidicons, charge-coupled devices (CCDs), and Complementary Metal-Oxide-Semiconductor (CMOS) sensors.

- Vidicons

- The image is formed on a screen.

- The screen is scanned across to produce a continuous voltage signal, which is proportional to the light intensity of the image.

58

Cameras

- CCD sensors

- A 2D array of photosensitive pixel sensors

- The number of charge sites gives the resolution of the CCD sensor

- Each sensor generates charges proportional to the incident light intensity.

- Each column of sensors is emptied into a vertical transport register (VTR).

- The VTRs are emptied pixel by pixel into a horizontal transport register (HTR).

- The HTR empties the information row by row into a signal unit.
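The CCD readout sequence above can be simulated to show why a CCD delivers pixels serially, row by row. This is a simplified, hypothetical model: each column is copied into its own vertical transport register, every VTR shifts one pixel into the horizontal transport register per step, and the HTR is then clocked out to the signal unit. Which row reaches the HTR first depends on the physical layout; here the top row is assumed, so the output is in raster order.

```python
from collections import deque

def ccd_readout(charges):
    """Simplified CCD readout: columns -> VTRs -> HTR (one row at a time) -> serial signal."""
    rows, cols = len(charges), len(charges[0])
    # Each column of photosites is emptied into its own vertical transport register.
    vtrs = [deque(charges[r][c] for r in range(rows)) for c in range(cols)]
    signal = []
    for _ in range(rows):
        # Every VTR shifts one pixel into the horizontal transport register.
        htr = deque(vtr.popleft() for vtr in vtrs)
        # The HTR empties its row, pixel by pixel, into the signal unit.
        while htr:
            signal.append(htr.popleft())
    return signal

print(ccd_readout([[1, 2, 3], [4, 5, 6]]))   # [1, 2, 3, 4, 5, 6]
```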

59

Cameras

- CMOS sensors

- A 2D lattice of charge sites

- The charge incident on a site is proportional to the brightness at a point

- The charge is then read like computer memory

- A particular site is selected by the control circuit and the content of the site is read

- The number of charge sites gives the resolution of the CMOS sensor

60

Comparison of Camera Sensors

- Vidicon

- Cheap

- Aging problem due to moving parts

- Delay in response to moving objects in a scene

- CCD

- Irregularity in the charge sites' material (silicon)

- Blooming effect because size is limited to 4 µm

- CMOS

- Irregularity in the charge sites' material (silicon)

- Directly related to intelligent cameras with on-board processing

- Cheaper than CCD

- Small size, at around 0.1 µm

61

Image Quality Measurements

- Image measurement involves the following processes:

- Imaging devices focus radiant energy onto a photosensitive detector

- The quantity of energy being absorbed is measured digitally

- The measurements are assembled into an image

- All these processes are subject to inaccuracies, so images are not strictly accurate.

62

Sources of Degradation

- Refraction of light at the lens

- Distortion by the camera lens

- Scattering of light by the transmission medium in the light path

- Imperfect detector

- Blooming effect

63

Refraction

- Lenses are made of glass that refracts light

- The amount of refraction depends on the wavelength of the light

- A ray of white light is split into its constituent colours.

64

Refraction

- An optical system based on lenses cannot focus light from a point onto a single point, since each wavelength is refracted by a different amount. As a result:

- White objects have a coloured fringe.

- Sharp boundaries are blurred.

65

Geometric Distortion

- The best focus of a scene is on a spherical surface behind the lens

- It is difficult, if not impossible, to fabricate spherical detectors

- The image is projected onto a planar detector

- Distortion is severe when the focal length of the lens is short

66

Geometric Distortion

- Image of a rectangular grid captured using a short focal length lens

67

Scattering

- Light rays are dispersed by materials between the reflecting surface and the detector

- E.g. fog disperses light

68

Imperfect Detector

- The sensitivity of the detector is not uniform

- Noise in the electronics that convert radiant energy to an electrical signal

- Usually quoted by camera manufacturers

- Overflow or clipping of signals when the signal is of excessive amplitude

- The signal amplitude overflows the max value

- The signal amplitude might be clipped

- The detector is calibrated at each pixel

69

Imperfect Detector

- Underexposed images lose image details

70

Imperfect Detector

- Underexposed images lose image details

71

Electronic Noise

- Noise is defined as the deviation of the signal from its expected value

- Electronic noise produces a speckle effect

- Two types of speckle effect

- Salt and pepper noise: random white and black noise on the image

- Gaussian noise: random noise superimposed on the signal being captured
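Both kinds of speckle noise can be simulated on an 8-bit greyscale image stored as a list of rows. In this sketch the noise probability, the standard deviation, the flat mid-grey test image and the fixed seed are all arbitrary choices for illustration, and the function names are hypothetical.

```python
import random

def add_salt_and_pepper(image, prob, rng):
    """Replace each pixel by black (0) or white (255) with total probability `prob`."""
    noisy = []
    for row in image:
        new_row = []
        for p in row:
            u = rng.random()
            if u < prob / 2:
                new_row.append(0)      # pepper
            elif u < prob:
                new_row.append(255)    # salt
            else:
                new_row.append(p)      # pixel left unchanged
        noisy.append(new_row)
    return noisy

def add_gaussian(image, sigma, rng):
    """Superimpose zero-mean Gaussian noise on each pixel and clip to 0..255."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row]
            for row in image]

rng = random.Random(0)                   # fixed seed so runs are repeatable
image = [[128] * 8 for _ in range(8)]    # flat mid-grey test image
sp = add_salt_and_pepper(image, 0.1, rng)
gn = add_gaussian(image, 10.0, rng)
```

Salt and pepper noise leaves most pixels untouched and drives a few to the extremes, while Gaussian noise perturbs every pixel slightly.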

72

Salt and Pepper Noise

73

Blooming effect

- A bright point source of light

- The camera's optical system will blur the image slightly

- Neighbouring cells in the detector will respond to this received impulse. As a result:

- Brightness is inaccurate at neighbouring pixels

- When auto exposure is used, image details are lost in other under-illuminated regions

74

Blooming effect

[Figure: grey value against pixel position, with the original grey values shown for comparison.]

75

Image Quality Measurement

- Objective measurements

- Measured by instruments

- Do not vary from one subject (observer) to another

- E.g. Peak Signal-to-Noise Ratio (PSNR)

- Subjective measurements

- Measured by human beings

- Vary from one subject (observer) to another

- E.g. human surveys

76

Signal-to-Noise Ratio

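- Signal-to-Noise Ratio (SNR) is the ratio of the signal to the noise

- It is often measured in decibels (dB) as $\mathrm{SNR} = 10\log_{10}(P_{signal}/P_{noise}) = 20\log_{10}(A_{signal}/A_{noise})$, where $P$ denotes power and $A$ denotes amplitude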

77

Signal-to-Noise Ratio

- The Peak Signal-to-Noise Ratio (PSNR) is often considered instead: the ratio of the maximum value of the signal to the measured noise amplitude (in dB).
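A minimal PSNR sketch for greyscale images stored as lists of rows. It assumes 8-bit images (maximum value 255) and takes the noise amplitude to be the root-mean-square error between the original and the distorted image. Under that assumption, 20 log10(MAX / RMSE) equals the common form 10 log10(MAX² / MSE) used below. The function name is hypothetical.

```python
import math

def psnr(original, distorted, max_value=255):
    """PSNR in dB between two equal-sized greyscale images (lists of rows)."""
    errors = [(a - b) ** 2
              for row_a, row_b in zip(original, distorted)
              for a, b in zip(row_a, row_b)]
    mse = sum(errors) / len(errors)
    if mse == 0:
        return math.inf   # identical images: no measurable noise
    return 10 * math.log10(max_value ** 2 / mse)

print(psnr([[0, 0], [0, 0]], [[0, 0], [0, 2]]))   # about 48.13 dB (MSE = 1)
```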

78

Image Resolution

- 3 resolutions

- Spatial resolution (no. of pixels)

- Brightness (no. of grey levels)

- Temporal (no. of frames per second)

- These resolutions are mutually dependent

79

Spatial Resolution

- An image is represented as a two-dimensional array of sample points called pixels.

- E.g. a 320 x 200 image has 320 pixels on each horizontal line and 200 pixels on each vertical line.

[Figure: grid of pixels, 320 across and 200 down.]

80

Spatial Resolution

- A simple definition of spatial resolution

- Spatial resolution = $h \times v$

- where $h$ = no. of horizontal pixels and $v$ = no. of vertical pixels.

81

Spatial Resolution

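- Field of view, $\theta_v$: the angle subtended by rays of light that hit the detector at the edge of the image

- Relationship between the field of view, the camera detector, and the focal length, f.

[Figure: camera geometry showing the detector dimensions h and v, the vertical field of view θ_v, and the focal length f.]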
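The exact relation drawn in the figure is not preserved in the text. Under the common pinhole-camera assumption, the field of view along one axis is θ = 2 arctan(d / 2f), where d is the detector size along that axis and f is the focal length (same units). The detector dimensions below are hypothetical.

```python
import math

def field_of_view_rad(detector_size, focal_length):
    """Pinhole-model field of view: 2 * atan(d / (2f)), with d and f in the same units."""
    return 2 * math.atan(detector_size / (2 * focal_length))

# Hypothetical detector: 36 mm wide (h) and 24 mm tall (v), 12 mm focal length.
theta_h = field_of_view_rad(36.0, 12.0)
theta_v = field_of_view_rad(24.0, 12.0)
print(math.degrees(theta_h), math.degrees(theta_v))   # theta_v comes out at 90 degrees
```

A shorter focal length gives a wider field of view, which is consistent with the severe distortion noted for short focal length lenses.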

82

Spatial Resolution

- Horizontal Angular Resolution (HAR) is the horizontal field of view, $\theta_h$, divided by the number of pixels across the image

- Vertical Angular Resolution (VAR) is the vertical field of view, $\theta_v$, divided by the number of pixels in the image vertically

83

Spatial Resolution

- Usually, HAR = VAR by design

- $r = Z\,\theta$

- $r$ = the smallest resolvable object

- $Z$ = the distance from the camera

- $\theta$ = the angular resolution in radians

84

Spatial Resolution

- To resolve an object 2 mm in diameter at a range of 1 m, the minimum angular resolution, $\theta$, needs to be

- $\theta = 2\,\mathrm{mm} / 1\,\mathrm{m} = 0.002$ radian $\approx 0.1146$ degree.
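The angular-resolution relations (HAR or VAR = field of view / number of pixels, and r = Zθ for small angles) can be checked with a few lines of Python. The helper names are hypothetical; the 2 mm at 1 m case reproduces the worked example.

```python
import math

def angular_resolution_rad(field_of_view_rad, num_pixels):
    """HAR or VAR: field of view divided by the number of pixels along that axis."""
    return field_of_view_rad / num_pixels

def smallest_resolvable(distance, angular_res_rad):
    """r = Z * theta (small-angle approximation), in the units of Z."""
    return distance * angular_res_rad

def required_angular_resolution(object_size, distance):
    """theta = r / Z: the angle subtended by an object of size r at range Z."""
    return object_size / distance

theta = required_angular_resolution(2e-3, 1.0)   # 2 mm object at 1 m
print(theta, math.degrees(theta))                # 0.002 rad, about 0.1146 degrees
```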

85

Spatial Resolution

- According to the Nyquist theorem, at least two samples per period are needed to represent a periodic signal unambiguously.

- Applying the Nyquist theorem to the spatial dimension, two pixels must span the smallest dimension of an object in order for it to be seen in the image.
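Combining the two-pixel rule with θ = r / Z gives a minimum pixel count across the image: each pixel may cover at most r / (2Z), so N ≥ FOV / (r / 2Z) = 2 · FOV · Z / r. The sketch below assumes this derivation; the 0.5 rad field of view is a hypothetical value, and r and Z must share units.

```python
import math

def min_pixels_across(field_of_view_rad, object_size, distance):
    """Pixels needed so an object of size r at range Z spans at least two pixels."""
    # Each pixel may subtend at most r / (2Z), so N >= FOV / (r / (2Z)) = 2 * FOV * Z / r.
    return math.ceil(2 * field_of_view_rad * distance / object_size)

# 2 mm object at 1 m (both in mm), hypothetical 0.5 rad horizontal field of view
print(min_pixels_across(0.5, 2.0, 1000.0))   # 500
```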

86

Spatial Resolution

- Lena images with different numbers of sample points: 256x256 and 512x512 pixels

87

Spatial Resolution

- When a fixed-size display window shows images of varying resolution:

- A low-resolution image loses details

- A high-resolution image shows details

[Figure: low-resolution and high-resolution versions of the same image.]

88

Brightness Resolution

- For monochrome images,

- Brightness resolution = number of grey levels

- Our eyes can differentiate only around 40 shades of grey

- Image capture devices are limited in the number of grey levels they can differentiate

- Most monochrome images are captured using 8-bit values. The range of grey levels is [0, 255].

89

Brightness Resolution

- Images with more shades of grey show more details

[Figure: the same image in black and white and in 8-bit grey.]
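Reducing brightness resolution can be simulated by requantizing 8-bit grey values to fewer levels and mapping them back onto 0 to 255 for display. In this sketch the bin boundaries are a simple assumption (equal-width bins), and the function name is hypothetical. With `bits=1` the result is a black-and-white image.

```python
def reduce_grey_levels(image, bits):
    """Requantize 8-bit grey values (0..255) to 2**bits levels, mapped back onto 0..255."""
    if not 1 <= bits <= 8:
        raise ValueError("bits must be between 1 and 8")
    levels = 2 ** bits
    step = 256 // levels                  # width of each input bin
    return [[(p // step) * 255 // (levels - 1) for p in row] for row in image]

row = [[0, 100, 200, 255]]
print(reduce_grey_levels(row, 1))   # [[0, 0, 255, 255]]: black and white
print(reduce_grey_levels(row, 2))   # [[0, 85, 255, 255]]: 4 grey levels
```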

90

Colour Resolution

- For colour images, a display device may use fewer colours

- Colour resolution = number of distinguishable colours

- Colour images are captured using three 8-bit values.

91

Colour Resolution

- Images with more colours are shown with higher fidelity

[Figure: the same image in 24-bit colour and in 8-bit colour.]

92

Image Bits per Pixel

- 1 bit/pixel: black and white image, facsimile image

- 4 bits/pixel: computer graphics

- 8 bits/pixel: greyscale image

- 16 or 24 bits/pixel: colour images

- Colour representations: RGB, HSV, YUV, YCbCr
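Bits per pixel determines both how many distinct values a pixel can take (2^bpp) and the uncompressed storage size (width × height × bpp / 8 bytes). The sketch below, with hypothetical helper names, applies this to the 320 x 200 image used earlier.

```python
def image_storage_bytes(width, height, bits_per_pixel):
    """Uncompressed image size in bytes (rounded up to whole bytes)."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8

def distinct_values(bits_per_pixel):
    """Number of different grey levels or colours a single pixel can take."""
    return 2 ** bits_per_pixel

for bpp in (1, 4, 8, 24):
    print(bpp, distinct_values(bpp), image_storage_bytes(320, 200, bpp))
```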

93

Interactions

- Spatial and brightness resolution can interact in affecting the overall perception of an image

- Poor spatial resolution may be compensated for by a good brightness resolution

- For each brightness resolution, a threshold spatial resolution can be defined. Images with higher spatial resolution are acceptable, whereas images with lower spatial resolution are unacceptable.

94

Interaction

[Figure: an 8-bit greyscale image at 25x25, 50x50, 100x100 and 200x200 pixels.]

95

Interaction

[Figure: an 8-bit (256-colour) image at 25x25, 50x50, 100x100 and 200x200 pixels.]

96

Interaction

[Figure: a 24-bit colour image at 25x25, 50x50, 100x100 and 200x200 pixels.]