Decision Trees - PowerPoint PPT Presentation


Title:

Decision Trees

Description:

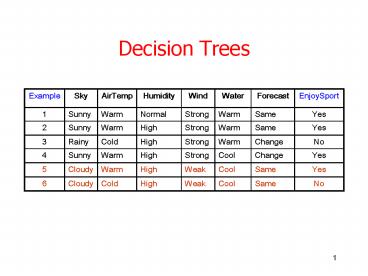

Cool. Weak. High. Cold. Cloudy. 6. No. Change. Warm ... Cool. Weak. Normal. Warm. Rainy. 7. 4. Decision Trees. Humidity. Normal. High. Yes. Sky. AirTemp ... – PowerPoint PPT presentation

Number of Views:78

Avg rating:3.0/5.0

Title: Decision Trees

1

Decision Trees

2

Decision Trees

Sky
- Sunny: Yes
- Rainy: No
- Cloudy: AirTemp
  - Warm: Yes
  - Cold: No

$(\text{Sky} = \text{Sunny}) \lor (\text{Sky} = \text{Cloudy} \land \text{AirTemp} = \text{Warm})$
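The tree above can be sketched as a nested Python dict plus a small classifier that follows one branch per node. The dict layout and the names `TREE`, `classify` and `formula` are hypothetical, not taken from the slides; the loop checks that the tree and the logical formula agree on every combination of Sky and AirTemp.

```python
from itertools import product

# Hypothetical encoding: an internal node is {"attr": name, "branches": {value: subtree}};
# a leaf is simply the class label string.
TREE = {
    "attr": "Sky",
    "branches": {
        "Sunny": "Yes",
        "Rainy": "No",
        "Cloudy": {
            "attr": "AirTemp",
            "branches": {"Warm": "Yes", "Cold": "No"},
        },
    },
}


def classify(tree, instance):
    """Follow the branch matching the instance's attribute value until a leaf is reached."""
    while isinstance(tree, dict):
        tree = tree["branches"][instance[tree["attr"]]]
    return tree


def formula(instance):
    """(Sky = Sunny) or (Sky = Cloudy and AirTemp = Warm)."""
    return instance["Sky"] == "Sunny" or (
        instance["Sky"] == "Cloudy" and instance["AirTemp"] == "Warm"
    )


# The tree and the disjunction of its Yes-paths agree on all six instances.
for sky, temp in product(["Sunny", "Rainy", "Cloudy"], ["Warm", "Cold"]):
    x = {"Sky": sky, "AirTemp": temp}
    assert (classify(TREE, x) == "Yes") == formula(x)
```

Each root-to-Yes path becomes one conjunction, and the tree as a whole is their disjunction.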

4

Decision Trees

[Figure: a larger tree with Humidity at the root (branches Normal and High); one branch ends in the leaf Yes and the other leads to the Sky/AirTemp tree shown earlier.]

5

Decision Trees

[Figure: the instance space divided into regions by the tests A1 = v1 and A2 = v2.]

6

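Homogeneity of Examples

- $\text{Entropy}(S) = -p_{+}\log_2 p_{+} - p_{-}\log_2 p_{-}$, where $p_{+}$ and $p_{-}$ are the proportions of positive and negative examples in $S$

[Figure: Entropy(S) plotted against p_+; the curve peaks at p_+ = 0.5.]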
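The two-class formula translates directly into a few lines of Python. This is a minimal sketch; the function name `entropy_binary` is hypothetical, and it uses the usual convention that $0 \log_2 0 = 0$.

```python
import math


def entropy_binary(p_pos):
    """Entropy(S) = -p+ log2 p+ - p- log2 p-, treating 0 * log2(0) as 0."""
    p_neg = 1.0 - p_pos
    total = 0.0
    for p in (p_pos, p_neg):
        if p > 0:  # skip empty classes: their term is 0 by convention
            total -= p * math.log2(p)
    return total


print(entropy_binary(0.5))              # 1.0: maximally mixed sample
print(entropy_binary(1.0))              # 0.0: all examples positive
print(round(entropy_binary(4 / 6), 3))  # 0.918
```

The outputs match the figure: entropy is 0 for a pure sample and reaches its maximum of 1 at $p_{+} = 0.5$.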

7

Homogeneity of Examples

- $\text{Entropy}(S) = \sum_{i=1}^{c} -p_i \log_2 p_i$, where $p_i$ is the proportion of $S$ belonging to class $i$ (an impurity measure)
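For $c$ classes, the same idea can be sketched by computing each $p_i$ from label counts. The function name `entropy` and the list-of-labels input are assumptions made for illustration.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy(S) = sum over classes i of -p_i * log2(p_i), p_i = share of class i in S."""
    n = len(labels)
    # Counter only yields classes that actually occur, so p_i > 0 and log2 is defined.
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


print(round(entropy(["Yes"] * 4 + ["No"] * 2), 3))  # 0.918
print(round(entropy(["a", "b", "c"]), 3))           # 1.585, i.e. log2(3)
```

With $c$ classes the maximum is $\log_2 c$, so entropy can exceed 1 once there are more than two classes.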

8

Information Gain

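- $\text{Gain}(S, A) = \text{Entropy}(S) - \sum_{v \in \text{Values}(A)} \frac{|S_v|}{|S|}\,\text{Entropy}(S_v)$, where $S_v$ is the subset of $S$ for which attribute $A$ has value $v$

[Figure: attribute A splits S into subsets S_v1, S_v2, ...]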
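The gain formula can be sketched as: partition $S$ by the value of $A$, weight each subset's entropy by its size, and subtract the weighted sum from $\text{Entropy}(S)$. The representation of examples as dicts with a `"label"` key and the name `information_gain` are hypothetical choices for this sketch.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(examples, attr, target="label"):
    """Gain(S, A) = Entropy(S) - sum over v in Values(A) of |S_v|/|S| * Entropy(S_v)."""
    labels = [ex[target] for ex in examples]
    # Partition S into the subsets S_v by the value each example has for attribute A.
    subsets = defaultdict(list)
    for ex in examples:
        subsets[ex[attr]].append(ex[target])
    remainder = sum(len(s) / len(examples) * entropy(s) for s in subsets.values())
    return entropy(labels) - remainder
```

An attribute that separates the classes perfectly gets the full $\text{Entropy}(S)$ as gain; one whose subsets are as mixed as $S$ gets 0.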

9

Example

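- $\text{Entropy}(S) = -p_{+}\log_2 p_{+} - p_{-}\log_2 p_{-} = -\tfrac{4}{6}\log_2\tfrac{4}{6} - \tfrac{2}{6}\log_2\tfrac{2}{6} \approx 0.390 + 0.528 = 0.918$

- Gain(S, Sky); the Sunny and Rainy terms vanish because those subsets contain only one class, so their entropy is 0:

$$
\begin{aligned}
\text{Gain}(S, \text{Sky}) &= \text{Entropy}(S) - \sum_{v \in \{\text{Sunny}, \text{Rainy}, \text{Cloudy}\}} \frac{|S_v|}{|S|}\,\text{Entropy}(S_v) \\
&= \text{Entropy}(S) - \tfrac{3}{6}\,\text{Entropy}(S_{\text{Sunny}}) - \tfrac{1}{6}\,\text{Entropy}(S_{\text{Rainy}}) - \tfrac{2}{6}\,\text{Entropy}(S_{\text{Cloudy}}) \\
&= \text{Entropy}(S) - \tfrac{2}{6}\,\text{Entropy}(S_{\text{Cloudy}}) \\
&= \text{Entropy}(S) - \tfrac{2}{6}\left(-\tfrac{1}{2}\log_2\tfrac{1}{2} - \tfrac{1}{2}\log_2\tfrac{1}{2}\right) \\
&\approx 0.918 - 0.333 = 0.585
\end{aligned}
$$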
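The arithmetic for Gain(S, Sky) can be checked from class counts alone. The per-value [Yes, No] counts below are an assumption inferred from the slide's numbers (6 examples, 4 Yes and 2 No; Sunny and Rainy pure; Cloudy split 1/1), and the helper names are hypothetical.

```python
import math


def entropy_counts(counts):
    """Entropy from class counts, e.g. [4, 2] for 4 Yes and 2 No."""
    n = sum(counts)
    return sum(-(c / n) * math.log2(c / n) for c in counts if c > 0)


def gain_from_counts(parent, children):
    """Gain = Entropy(parent) - sum_v |S_v|/|S| * Entropy(S_v), all given as class counts."""
    n = sum(parent)
    return entropy_counts(parent) - sum(sum(ch) / n * entropy_counts(ch) for ch in children)


# [Yes, No] counts inferred from the slide's arithmetic (assumption).
S = [4, 2]
sky_split = {"Sunny": [3, 0], "Rainy": [0, 1], "Cloudy": [1, 1]}

print(round(entropy_counts(S), 3))                        # 0.918
print(round(gain_from_counts(S, sky_split.values()), 3))  # 0.585
```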

10

Example

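- $\text{Entropy}(S) = -p_{+}\log_2 p_{+} - p_{-}\log_2 p_{-} = -\tfrac{4}{6}\log_2\tfrac{4}{6} - \tfrac{2}{6}\log_2\tfrac{2}{6} \approx 0.390 + 0.528 = 0.918$

- Gain(S, Water); both subsets keep the 2:1 class ratio of $S$, so the split gains nothing:

$$
\begin{aligned}
\text{Gain}(S, \text{Water}) &= \text{Entropy}(S) - \sum_{v \in \{\text{Warm}, \text{Cool}\}} \frac{|S_v|}{|S|}\,\text{Entropy}(S_v) \\
&= \text{Entropy}(S) - \tfrac{3}{6}\,\text{Entropy}(S_{\text{Warm}}) - \tfrac{3}{6}\,\text{Entropy}(S_{\text{Cool}}) \\
&= \text{Entropy}(S) - \tfrac{3}{6} \cdot 2 \cdot \left(-\tfrac{2}{3}\log_2\tfrac{2}{3} - \tfrac{1}{3}\log_2\tfrac{1}{3}\right) \\
&\approx \text{Entropy}(S) - (0.390 + 0.528) \\
&= 0
\end{aligned}
$$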
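A quick numeric check of Gain(S, Water) = 0, again working from class counts. The [Yes, No] counts for Warm and Cool (2/1 each) are an assumption inferred from the slide's arithmetic; `entropy_counts` is a hypothetical helper.

```python
import math


def entropy_counts(counts):
    n = sum(counts)
    return sum(-(c / n) * math.log2(c / n) for c in counts if c > 0)


# [Yes, No] counts inferred from the slide (assumption): Warm 2/1, Cool 2/1.
S = [4, 2]
water_split = [[2, 1], [2, 1]]

# Each subset has the same 2:1 ratio as S, so the weighted entropy equals Entropy(S).
gain = entropy_counts(S) - sum(sum(s) / sum(S) * entropy_counts(s) for s in water_split)
print(round(gain, 6))  # 0.0
```

Comparing 0 with the 0.585 for Sky shows why Sky, not Water, is chosen at the root.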

11

Example

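Sky
- Sunny: Yes
- Rainy: No
- Cloudy: ?

- $\text{Gain}(S_{\text{Cloudy}}, \text{AirTemp}) = \text{Entropy}(S_{\text{Cloudy}}) - \sum_{v \in \{\text{Warm}, \text{Cold}\}} \frac{|S_v|}{|S_{\text{Cloudy}}|}\,\text{Entropy}(S_v) = 1$

- $\text{Gain}(S_{\text{Cloudy}}, \text{Humidity}) = \text{Entropy}(S_{\text{Cloudy}}) - \sum_{v \in \{\text{Normal}, \text{High}\}} \frac{|S_v|}{|S_{\text{Cloudy}}|}\,\text{Entropy}(S_v) = 0$

- Here $S_v$ is the subset of $S_{\text{Cloudy}}$ with value $v$; AirTemp has the higher gain, so it is tested under the Cloudy branch.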
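The choice at the Cloudy node can be sketched the same way: compute the gain of each remaining attribute on $S_{\text{Cloudy}}$ and keep the largest. The counts are assumptions inferred from the slide's results (one Yes and one No under Cloudy; AirTemp separates them; both examples share a single Humidity value, since otherwise Humidity's gain would also be 1), and the helper names are hypothetical.

```python
import math


def entropy_counts(counts):
    n = sum(counts)
    return sum(-(c / n) * math.log2(c / n) for c in counts if c > 0)


def gain_from_counts(parent, children):
    n = sum(parent)
    return entropy_counts(parent) - sum(sum(ch) / n * entropy_counts(ch) for ch in children)


s_cloudy = [1, 1]  # [Yes, No] counts under Sky = Cloudy (inferred, assumption)
candidates = {
    "AirTemp": [[1, 0], [0, 1]],  # Warm -> Yes, Cold -> No
    "Humidity": [[1, 1]],         # both Cloudy examples fall under one Humidity value
}
gains = {a: gain_from_counts(s_cloudy, split) for a, split in candidates.items()}
best = max(gains, key=gains.get)
print(gains, best)  # {'AirTemp': 1.0, 'Humidity': 0.0} AirTemp
```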

13

Inductive Bias

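- The hypothesis space is complete: every finite discrete-valued function can be represented by some decision tree.

- Shorter trees are preferred over larger trees.

- Prefer the simplest hypothesis that fits the data (Occam's razor).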

14

Inductive Bias

- The decision tree algorithm searches incompletely through a complete hypothesis space → preference bias

- Candidate-Elimination searches completely through an incomplete hypothesis space → restriction bias
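The incomplete, greedy search can be sketched as a minimal ID3-style learner. This is an illustrative swap-in, not code from the deck: the names `entropy`, `gain` and `id3`, the dict-based tree layout and the five toy examples are all hypothetical, with the toy data chosen so the learned tree matches the Sky/AirTemp tree from slide 2.

```python
import math
from collections import Counter


def entropy(labels):
    n = len(labels)
    return sum(-(c / n) * math.log2(c / n) for c in Counter(labels).values())


def gain(examples, attr, target):
    labels = [ex[target] for ex in examples]
    remainder = 0.0
    for v in {ex[attr] for ex in examples}:
        subset = [ex[target] for ex in examples if ex[attr] == v]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy(labels) - remainder


def id3(examples, attrs, target="label"):
    """Greedy top-down induction: pick the highest-gain attribute, recurse, never backtrack."""
    labels = [ex[target] for ex in examples]
    if len(set(labels)) == 1:  # pure node -> leaf
        return labels[0]
    if not attrs:  # no tests left -> majority-class leaf
        return Counter(labels).most_common(1)[0][0]
    best = max(attrs, key=lambda a: gain(examples, a, target))
    rest = [a for a in attrs if a != best]
    branches = {}
    for v in sorted({ex[best] for ex in examples}):
        subset = [ex for ex in examples if ex[best] == v]
        branches[v] = id3(subset, rest, target)
    return {"attr": best, "branches": branches}


# Hypothetical toy data, constructed to agree with the slide-2 tree.
toy = [
    {"Sky": "Sunny", "AirTemp": "Warm", "label": "Yes"},
    {"Sky": "Sunny", "AirTemp": "Cold", "label": "Yes"},
    {"Sky": "Rainy", "AirTemp": "Warm", "label": "No"},
    {"Sky": "Cloudy", "AirTemp": "Warm", "label": "Yes"},
    {"Sky": "Cloudy", "AirTemp": "Cold", "label": "No"},
]
tree = id3(toy, ["Sky", "AirTemp"])
print(tree)
```

Because `id3` commits to `best` at each node and never revisits that choice, it follows a single path through the space of trees; that greedy commitment, favoring high-gain attributes near the root and hence shorter trees, is the preference bias named above.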

16

Overfitting

- $h \in H$ is said to overfit the training data if there exists $h' \in H$ such that $h$ has smaller error than $h'$ over the training examples, but $h'$ has a smaller error than $h$ over the entire distribution of instances.

- Overfitting can occur when:
  - there is noise in the data
  - the number of training examples is too small to produce a representative sample of the target concept
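The definition can be written as a direct check. Error over the entire distribution cannot be computed in practice; this sketch assumes it is estimated on held-out data, and the function name `overfits` and the error values in the usage note are hypothetical.

```python
def overfits(h, alternatives):
    """Return True if hypothesis h overfits relative to some alternative h'.

    h and each alternative are (training_error, true_error) pairs, where
    true_error is assumed to be estimated on held-out data.
    h overfits if some h' has a larger training error than h
    but a smaller error over the whole instance distribution.
    """
    train_h, true_h = h
    return any(
        train_h < train_h2 and true_h2 < true_h
        for train_h2, true_h2 in alternatives
    )
```

For example, with hypothetical numbers, a large tree with training error 0.0 and held-out error 0.25 overfits when a smaller tree reaches 0.10 on training but 0.15 held out: `overfits((0.0, 0.25), [(0.10, 0.15)])` is `True`.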

17

Homework

- Exercises 3.1-3.4 (Chapter 3, ML textbook)