Web Usage Data PowerPoint PPT Presentation

1 / 17

Title: Web Usage Data

1

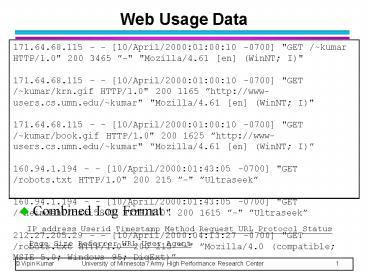

Web Usage Data

171.64.68.115 - - 10/April/2000010010 -0700

"GET /kumar HTTP/1.0" 200 3465 -" "Mozilla/4.61

en (WinNT I)" 171.64.68.115 - -

10/April/2000010010 -0700 "GET

/kumar/krn.gif HTTP/1.0" 200 1165

http//www-users.cs.umn.edu/kumar"

"Mozilla/4.61 en (WinNT I)" 171.64.68.115 - -

10/April/2000010010 -0700 "GET

/kumar/book.gif HTTP/1.0" 200 1625

http//www-users.cs.umn.edu/kumar"

Mozilla/4.61 en (WinNT I) 160.94.1.194 - -

10/April/2000014305 -0700 "GET /robots.txt

HTTP/1.0" 200 215 -" Ultraseek 160.94.1.194 -

- 10/April/2000014305 -0700 "GET

/heimdahl/csci5802 HTTP/1.0" 200 1615 -"

Ultraseek 212.27.205.29 - - 10/April/2000041

327 -0700 "GET /robots.txt HTTP/1.0" 200 215

-" Mozilla/4.0 (compatible MSIE 5.0 Windows

95 DigExt)

- Combined Log Format

- IP address Userid Timestamp Method Request

URL Protocol Status - Page Size Referrer URL User Agent

2

Non-Sequential Association Patterns

- Find all frequent itemsets (e.g. VCR, TV,

Satellite Dish) - Generate all possible rules from a frequent

itemset - TV, VCR ? Satellite Dish, TV, Satellite

Dish ? VCR, - VCR, Satellite Dish ? TV,

- Compute the confidence of each rule

3

Non-sequential Associations from Web Data

- Home, Electronics ? TV is a Web association

rule having support 40 and confidence 50

4

Issues in Mining Web Association Patterns

- Preprocessing Issues

- How to convert the click-streams into sessions?

- Data cleaning How to remove superfluous sessions

due to Web robots/spiders? - Mining Issues

- Current framework may not be able to capture all

types of interesting patterns. - Postprocessing Issues

- How to identify interesting patterns?

5

Beware of Web Crawlers!

- A Web crawler is an autonomous agent that

traverses the hyperlink structure of the Web for

the purpose of locating and retrieving

information from the Internet. - Also known as Web robots, spiders, worms etc.

- Employed by various users

- search engines - e.g. T-Rex, Scooter, Googlebot.

- Hyperlink checkers - e.g. LinkScan, LinkWalker.

- Shopbots - e.g. MySimon.

- Email collectors - e.g. EmailSiphon, EmailWolf.

- Download assistants e.g. Offline Browser.

6

How Web Crawlers Affect Association Patterns

- Accesses by Web crawlers may distort the support

counts of Web pages.

D

A

D

A

- Support for pages A and D inflated due to

accesses by Web robots. - This will produce spurious association between A

and D.

- Must detect and eliminate Web crawler accesses

(PN Tan V. Kumar, Discovery of Web Robot

Sessions based on their Navigational Patterns,

DMKD, 2002).

7

Other reasons for detecting Web crawlers

- E-commerce Web sites are concerned about the

unauthorized deployment of shopbots for gathering

business intelligence at their Web site. - Ebay vs. Bidders Edge lawsuit (April, 2000).

- Deployment of Web crawlers comes at the expense

of other users - Web crawlers may tie up the network bandwidth and

other server resources. - Web crawler accesses could be indicative of

fraudulent behavior. - Ad-hosting sites may use Web crawlers to inflate

click-through rate of banner advertisements.

8

Postprocessing Issues

- Using objective interest measures

- RJ. Hilderman and HJ. Hamilton. Knowledge

discovery and interestingness measures A survey.

Technical Report CS 99-04, University of Regina

(1999) - PN Tan and V. Kumar, Interestingness Measures for

Association Patterns A Perspective, KDD Workshop

on Postprocessing (2000) - Using subjective interestingness measures

- B. Liu, W. Hsu, LF Mun and HY Lee, Finding

Interesting Patterns Using User Expectations,

TKDE (1999) - R.Cooley, PN Tan and J.Srivastava, Discovery of

Interesting Usage Patterns from Web Data,

Advances in Web Usage Analysis and User Profiling

(2000).

9

Finding Interesting Negative Association

All pairs of items can be divided into 4

groups 1. Frequent pairs with mediators (FM) 2.

Frequent pairs without mediators (FN) 3.

Infrequent pairs with mediators (IM) 4.

Infrequent pairs without mediators (IN) Blue

FM Red FM IM Green FM FN

10

Finding Interesting Negative Association

All pairs of items can be divided into 4

groups 1. Frequent pairs with mediators (FM) 2.

Frequent pairs without mediators (FN) 3.

Infrequent pairs with mediators (IM) 4.

Infrequent pairs without mediators (IN) Blue

FM Red FM IM Green FM FN

p r number of infrequent pairs

11

Why Do We Need Dependence Condition?

- To handle spurious mediators (M)

P(a,M)

P(a)

P(M)

- Suppose M occurs almost everywhere i.e. P(M) 1

- ? ? 1 - Also, let P(a) ? P(a,M) s

- Since P(a,M) s, P(a) ? P(M) s ? (1- ?) ? s

- Therefore, P(a,M) ? P(a) ? P(M) ?

Statistical Independence

12

Dependence Measure

- IS (Interest x Support)

- IS is closely related to statistical correlation

(Tan Kumar, Workshop in Postprocessing, KDD2000)

13

Relationship between IS and correlation

University of Minnesota CS Web site

E-commerce Web site

14

Correlation vs IS Measure

(a)

(b)

- Table (a) has lower support than (b)

- ? 0.1125 for both tables

- But IS for (a) is lower than (b)

15

Why Not Use Correlation?

symmetric in f11? foo and f10? fo1

In Data Mining, presence together more important

than absence together

16

General Idea of Indirect Association

Frequent Association Patterns

P1

P2

A,B,D

A,B,E

Merge P1 and P2

- Merge P1 and P2 if they have common substructure

(A,B) - D and E are highly dependent on (A,B)

- D, E are infrequent

mediator

D and E are indirectly associated via the

mediator set A,B

17

High-Level View of Algorithm

- Extract the frequent itemsets or sequential

patterns L1, L2, Ln using standard algorithms

(e.g. Apriori or GSP) - P ?

- For k2 to n do

- Ck1? join(Lk, Lk)

- for each (a,b,M) ? Ck1 do

- if sup(a,b)and dep(b,M) ? td

- P P ?

- end

- end