Linear regression - PowerPoint PPT Presentation

Title:

Linear regression

1

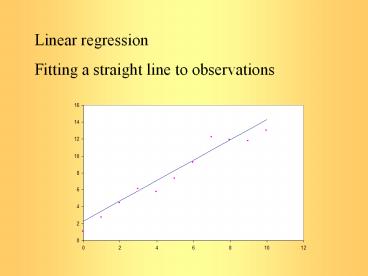

Linear regression: fitting a straight line to observations

2

Equation for straight line

Difference between observation and line

$e_i$ is the residual or error
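The formulas for this slide are not in the transcript. A standard form, assuming the $a_0$ (intercept) and $a_1$ (slope) notation that appears later in the deck, would be:

$$
y = a_0 + a_1 x + e
$$

$$
e_i = y_i - a_0 - a_1 x_i
$$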

3

The goal in linear regression is to minimize the sum of squared residuals, $S_r$

To find the minimum, take derivatives with respect to $a_0$ and $a_1$

and set them to zero
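Written out, and assuming the straight-line model $y = a_0 + a_1 x + e$ with $n$ observations, the quantity being minimized and its two derivative conditions would read:

$$
S_r = \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} \left( y_i - a_0 - a_1 x_i \right)^2
$$

$$
\frac{\partial S_r}{\partial a_0} = -2 \sum_{i=1}^{n} \left( y_i - a_0 - a_1 x_i \right) = 0
$$

$$
\frac{\partial S_r}{\partial a_1} = -2 \sum_{i=1}^{n} \left( y_i - a_0 - a_1 x_i \right) x_i = 0
$$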

4

Some algebra

The Normal Equations
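Dividing the two derivative conditions by $-2$ and collecting the unknowns $a_0$ and $a_1$ gives the usual textbook form of the normal equations for a straight-line fit. The slide's own rendering is not in the transcript, so this is a reconstruction under the same notation:

$$
n\,a_0 + \left( \sum x_i \right) a_1 = \sum y_i
$$

$$
\left( \sum x_i \right) a_0 + \left( \sum x_i^2 \right) a_1 = \sum x_i y_i
$$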

5

Solve these simultaneously

These are the least-squares linear regression coefficients
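Solving the two normal equations simultaneously gives the closed forms $a_1 = \dfrac{n \sum x_i y_i - \sum x_i \sum y_i}{n \sum x_i^2 - (\sum x_i)^2}$ and $a_0 = \bar{y} - a_1 \bar{x}$. The sketch below computes them in plain Python. The function name `fit_line` and the data are illustrative assumptions, not taken from the slides.

```python
def fit_line(x, y):
    """Return (a0, a1) for the least-squares line y = a0 + a1*x."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    sx = sum(x)
    sy = sum(y)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    denom = n * sxx - sx * sx
    if denom == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    a1 = (n * sxy - sx * sy) / denom   # slope
    a0 = sy / n - a1 * sx / n          # intercept: mean(y) - a1 * mean(x)
    return a0, a1


# Illustrative data (not from the slides): points lying exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
a0, a1 = fit_line(xs, ys)
print(a0, a1)
```

Because the sample points lie exactly on a line, the fit should recover an intercept of 1 and a slope of 2 up to rounding.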

6

Example

7

and

8

(No Transcript)

9

Error in linear regression

$a_0$ and $a_1$ are maximum likelihood estimates

Standard error of the estimate

Quantifies the spread around the regression line
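For a straight-line fit the standard error of the estimate is commonly written $s_{y/x} = \sqrt{S_r / (n - 2)}$, where two degrees of freedom are used up by $a_0$ and $a_1$. A minimal sketch follows. The function name, the data, and the coefficients are illustrative assumptions; the coefficients are the least-squares values for this particular made-up data set.

```python
import math


def standard_error(x, y, a0, a1):
    """Standard error of the estimate for the line y = a0 + a1*x."""
    n = len(x)
    if n <= 2:
        raise ValueError("need more than 2 points (n - 2 degrees of freedom)")
    # Sum of squared residuals around the fitted line.
    s_r = sum((yi - a0 - a1 * xi) ** 2 for xi, yi in zip(x, y))
    return math.sqrt(s_r / (n - 2))


# Illustrative data (not from the slides) and its least-squares coefficients.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.1, 6.9]
s_yx = standard_error(xs, ys, a0=1.06, a1=1.96)
print(s_yx)
```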

10

Another measure of goodness of fit: the coefficient of determination $r^2$, or the correlation coefficient $r$

Can also write
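The usual definition is $r^2 = (S_t - S_r)/S_t$, where $S_t = \sum (y_i - \bar{y})^2$ is the spread around the mean. The alternative form referred to here is assumed to be the sums-based expression $r = \dfrac{n \sum x_i y_i - \sum x_i \sum y_i}{\sqrt{n \sum x_i^2 - (\sum x_i)^2}\,\sqrt{n \sum y_i^2 - (\sum y_i)^2}}$. The sketch below computes both so they can be compared. Function names and data are illustrative.

```python
import math


def r_squared(x, y, a0, a1):
    """Coefficient of determination from S_t and S_r."""
    y_mean = sum(y) / len(y)
    s_t = sum((yi - y_mean) ** 2 for yi in y)                      # spread around the mean
    s_r = sum((yi - a0 - a1 * xi) ** 2 for xi, yi in zip(x, y))    # spread around the line
    return (s_t - s_r) / s_t


def correlation(x, y):
    """Correlation coefficient r computed directly from running sums."""
    n = len(x)
    sx, sy = sum(x), sum(y)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    syy = sum(yi * yi for yi in y)
    return (n * sxy - sx * sy) / (
        math.sqrt(n * sxx - sx * sx) * math.sqrt(n * syy - sy * sy)
    )


# Illustrative data (not from the slides) and its least-squares coefficients.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.1, 2.9, 5.1, 6.9]
r2 = r_squared(xs, ys, a0=1.06, a1=1.96)
r = correlation(xs, ys)
print(r2, r * r)
```

For a least-squares straight line the two routes agree, so `r * r` should match `r2` up to rounding.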

11

For our example

12

Linearization of nonlinear relationships
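The slide's own examples are not in the transcript. One common case, assumed here for illustration, is the exponential model $y = \alpha e^{\beta x}$: taking logarithms gives $\ln y = \ln \alpha + \beta x$, which is a straight line in $x$ and $\ln y$ and can be fitted with ordinary linear regression. The function names and data below are hypothetical.

```python
import math


def fit_line(x, y):
    """Return (a0, a1) for the least-squares line y = a0 + a1*x."""
    n = len(x)
    sx, sy = sum(x), sum(y)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    a1 = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    a0 = sy / n - a1 * sx / n
    return a0, a1


def fit_exponential(x, y):
    """Fit y = alpha * exp(beta * x) by regressing ln(y) on x (needs y > 0)."""
    ln_y = [math.log(yi) for yi in y]
    a0, a1 = fit_line(x, ln_y)
    # Intercept of the transformed line is ln(alpha); the slope is beta.
    return math.exp(a0), a1


# Illustrative data (not from the slides): exact samples of y = 2 * exp(0.5 x).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * math.exp(0.5 * xi) for xi in xs]
alpha, beta = fit_exponential(xs, ys)
print(alpha, beta)
```

Note that this minimizes squared residuals in $\ln y$, not in $y$, so on noisy data the result generally differs from a direct nonlinear fit.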

13

Polynomial regression: extend linear regression to higher-order polynomials

Sum of squared residuals becomes
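The slide's formula is not in the transcript. For the second-order case $y = a_0 + a_1 x + a_2 x^2 + e$, taken here as an assumed example, it would be:

$$
S_r = \sum_{i=1}^{n} \left( y_i - a_0 - a_1 x_i - a_2 x_i^2 \right)^2
$$

An $m$-th order polynomial follows the same pattern with terms up to $a_m x_i^m$.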

14

Take derivatives to minimize $S_r$

Set equal to zero

15

Can write as
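For a second-order polynomial, setting the derivatives of the sum of squared residuals with respect to $a_0$, $a_1$ and $a_2$ to zero gives three normal equations. In matrix form, as a reconstruction in the deck's notation since the slide's own equation is not in the transcript:

$$
\begin{bmatrix}
n & \sum x_i & \sum x_i^2 \\
\sum x_i & \sum x_i^2 & \sum x_i^3 \\
\sum x_i^2 & \sum x_i^3 & \sum x_i^4
\end{bmatrix}
\begin{bmatrix} a_0 \\ a_1 \\ a_2 \end{bmatrix}
=
\begin{bmatrix} \sum y_i \\ \sum x_i y_i \\ \sum x_i^2 y_i \end{bmatrix}
$$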

16

We can solve this with any number of matrix methods
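As one possible matrix method, the sketch below builds the normal equations for a polynomial of a given order and solves them by Gaussian elimination with partial pivoting. The function names `gauss_solve` and `fit_polynomial` and the data are illustrative assumptions, and this is not necessarily the procedure used in the slides' example.

```python
def gauss_solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        # Swap in the row with the largest pivot to limit round-off.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        # Eliminate the entries below the pivot.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_polynomial(x, y, order):
    """Return [a0, a1, ..., a_order] minimizing the sum of squared residuals."""
    size = order + 1
    # Entry (j, k) of the normal-equation matrix is sum(x_i ** (j + k)).
    a = [[sum(xi ** (j + k) for xi in x) for k in range(size)] for j in range(size)]
    # Entry j of the right-hand side is sum(x_i ** j * y_i).
    b = [sum((xi ** j) * yi for xi, yi in zip(x, y)) for j in range(size)]
    return gauss_solve(a, b)


# Illustrative data (not from the slides): exact samples of y = 1 + 2x + 3x^2.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0 + 2.0 * xi + 3.0 * xi * xi for xi in xs]
coeffs = fit_polynomial(xs, ys, 2)
print(coeffs)
```

Normal-equation matrices become ill-conditioned as the order grows, so for high orders a library routine based on an orthogonal factorization is usually a safer choice.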

Example

17

After Gauss elimination

18

Best fit curve

19

Standard error for polynomial regression

where

- n is the number of observations

- m is the order of the polynomial

(start off with n degrees of freedom, use up m + 1 for an m-th order polynomial)
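With $m + 1$ coefficients fitted, the standard error is usually written $s_{y/x} = \sqrt{S_r / (n - (m + 1))}$. A minimal sketch follows. The function name, the data, and the coefficient values are placeholders chosen for illustration; in practice `coeffs` would come from a polynomial fit.

```python
import math


def poly_standard_error(x, y, coeffs):
    """Standard error for a polynomial with coefficients [a0, a1, ..., am]."""
    n = len(x)
    m = len(coeffs) - 1          # order of the polynomial
    dof = n - (m + 1)            # degrees of freedom left after fitting m + 1 coefficients
    if dof <= 0:
        raise ValueError("need more observations than coefficients")

    def predict(xi):
        return sum(a * xi ** k for k, a in enumerate(coeffs))

    s_r = sum((yi - predict(xi)) ** 2 for xi, yi in zip(x, y))
    return math.sqrt(s_r / dof)


# Placeholder data and coefficients (not from the slides): y = 1 + 2x + 3x^2
# with residuals of +/- 0.1 added by hand.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 5.9, 17.1, 33.9, 57.1]
s_yx = poly_standard_error(xs, ys, [1.0, 2.0, 3.0])
print(s_yx)
```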