Classifying Email into Acts


1

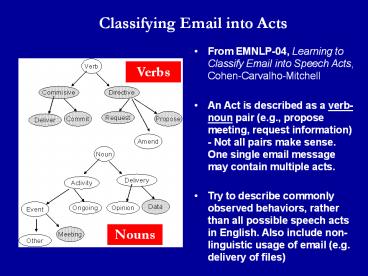

Classifying Email into Acts

- From EMNLP-04, "Learning to Classify Email into Speech Acts", Cohen-Carvalho-Mitchell
- An Act is described as a verb-noun pair (e.g., propose meeting, request information)
- Not all pairs make sense. A single email message may contain multiple acts.
- Try to describe commonly observed behaviors, rather than all possible speech acts in English. Also include non-linguistic usage of email (e.g., delivery of files)

Figure labels: Verbs, Nouns

2

Some Improvements

- With more labeled data (1743 msgs), using 1g, 2g, 3g, 4g, and 5g (n-gram) features
- Using careful (and act-specific) pre-processing of message text
- Using an act-specific feature selection scheme (Info Gain, ChiSquare, etc.)
- Significant performance improvements.
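The n-gram features and chi-square selection mentioned above can be illustrated with a minimal sketch. It is not the authors' code: the function names `ngrams` and `chi_square_scores`, the whitespace tokenization, and the binary presence/absence treatment of each feature are assumptions made for illustration.

```python
from collections import Counter


def ngrams(tokens, max_n=5):
    """Return the set of all 1-grams through max_n-grams in a token list."""
    feats = set()
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            feats.add(" ".join(tokens[i:i + n]))
    return feats


def chi_square_scores(docs, labels, max_n=5):
    """Score each n-gram with the chi-square statistic of its 2x2 table
    (feature present/absent vs. act present/absent).

    docs   -- list of message strings (assumed already pre-processed)
    labels -- list of 0/1 flags: does the message contain the act?
    """
    n_docs = len(docs)
    n_pos = sum(labels)
    df = Counter()      # number of messages containing the feature
    df_pos = Counter()  # number of positive messages containing it
    for text, y in zip(docs, labels):
        feats = ngrams(text.lower().split(), max_n)
        df.update(feats)
        if y:
            df_pos.update(feats)

    scores = {}
    for feat, present in df.items():
        a = df_pos[feat]              # present, act present
        b = present - a               # present, act absent
        c = n_pos - a                 # absent, act present
        d = n_docs - present - c      # absent, act absent
        denom = (a + b) * (c + d) * (a + c) * (b + d)
        scores[feat] = n_docs * (a * d - b * c) ** 2 / denom if denom else 0.0
    return scores
```

Sorting the returned dictionary by score and keeping the top-ranked n-grams for each act would give one act-specific feature set per classifier; how many features to keep is a tuning choice not stated in the slides.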

3

Some Examples of 4-grams

is fine with mmee is good for mmee i will be there i will look for will look for pppeople i will see pppeople as soon as I numbex per person i will bring copies our meeting on dday is ok for mmee look for pppeople in will try to keep -numbex i will i will try to i will check my I do not have

meet at horex pm horex pm on ddday on ddday at horex pppeople meet at horex to meet at horex would like to meet please let mmee know ddday at horex am ddday at horex pm lets plan to meet would pppeople like to pppeople will see pppeople is fine with mmee numbex-numbex pm can pppeople meet at ddday numbex/numbex is good for mmee

and let mmee know know what pppeople think would be able to do pppeople want to do pppeople need to do not want to pppeople need to get please let mmee know pppeople think pppeople need mmee know what pppeople what do pppeople think pppeople be able to pppeople don not want pppeople would be able that would be great Call mmee at home

Column labels: Meeting (noun), Request, Commit, Req

4

Results

5

Results

6

Collective Classification: Predicting Acts from Surrounding Acts

7

Content versus Context

- Content: Bag of Words features only (using only 1g features)
- Context: Parent and Child features only (table below)
- 8 MaxEnt classifiers, trained on 3F2 and tested on the 1F3 team dataset
- Only the 1st child message was considered (the vast majority, more than 95%)

Figure labels: Request, Request, Proposal, ???, Delivery, Commit; Parent message, Child message

| Parent Boolean Features | Child Boolean Features |
|---|---|
| Parent_Request | Child_Request |
| Parent_Deliver | Child_Deliver |
| Parent_Commit | Child_Commit |
| Parent_Propose | Child_Propose |
| Parent_Directive | Child_Directive |
| Parent_Commissive | Child_Commissive |
| Parent_Meeting | Child_Meeting |
| Parent_dData | Child_dData |

Kappa Values on 1F3 using Relational (Context) features and Textual (Content) features.

Set of Context Features (Relational)
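As a rough illustration of the context features listed on this slide, the sketch below builds the 16 booleans from the acts of the parent and first child message. The feature names come from the slide; the function name `context_features` and the representation of a message's acts as a set of strings are assumptions.

```python
# Act names taken from the Parent_*/Child_* feature names on the slide.
ACTS = ["Request", "Deliver", "Commit", "Propose",
        "Directive", "Commissive", "Meeting", "dData"]


def context_features(parent_acts, child_acts):
    """Map the acts of the parent and first child message to 16 booleans.

    parent_acts, child_acts -- sets of act names (empty if the message
    has no parent or no child).
    """
    feats = {}
    for act in ACTS:
        feats[f"Parent_{act}"] = act in parent_acts
        feats[f"Child_{act}"] = act in child_acts
    return feats


# Example: the parent is a Request, the first child delivers data.
example = context_features({"Request"}, {"Deliver", "dData"})
```

A context-only classifier for a given act would then be trained on these booleans alone, while a content-only classifier would see just the 1g bag-of-words features.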

8

Collective Classification Model

Figure labels: Commit, Other acts, Request, Deliver; Current Msg, Parent Message, Child Message

9

Collective Classification algorithm (based on Dependency Networks Model)

New inferences are accepted only if their confidence is above the Confidence Threshold. This threshold decreases linearly with the iteration number, which makes the algorithm work as a temperature-sensitive variation of Gibbs sampling. After iteration 50, the threshold is 50% and pure Gibbs sampling takes place.
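The thresholded update described above can be sketched as follows. This is a simplified illustration, not the authors' algorithm: the starting threshold of 1.0, the callback `predict_proba`, and the deterministic acceptance rule (taking the more likely label instead of sampling) are all assumptions.

```python
def threshold(iteration, start=1.0, end=0.5, anneal_iters=50):
    """Confidence threshold that falls linearly from start to end.

    The end value (0.5 at iteration 50) follows the slide; the start
    value of 1.0 is an assumption.
    """
    if iteration >= anneal_iters:
        return end
    return start - (start - end) * iteration / anneal_iters


def collective_classify(n_msgs, acts, predict_proba, init_labels, n_iters=60):
    """Iteratively relabel messages, accepting only confident inferences.

    predict_proba(i, act, labels) is a hypothetical callback returning
    P(message i contains act) given the current labels of all messages,
    e.g. a per-act classifier that reads its parent and child features
    from labels. init_labels is a list of {act: bool} dictionaries, for
    instance the output of content-only classifiers.
    """
    labels = [dict(row) for row in init_labels]  # copy, do not mutate input
    for t in range(n_iters):
        thr = threshold(t)
        for i in range(n_msgs):
            for act in acts:
                p = predict_proba(i, act, labels)
                confidence = max(p, 1.0 - p)
                if confidence >= thr:
                    # Simplification: keep the more likely label
                    # rather than drawing a sample from p.
                    labels[i][act] = p >= 0.5
    return labels
```

Once the threshold reaches 0.5 every inference passes the test, since the confidence of a binary prediction is never below 0.5. A version closer to Gibbs sampling would draw each label at random with probability `p` at that stage.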

10

Collective Classification Results

11

Act by Act Comparative Results

Kappa values with and without collective classification, averaged over the four test sets in the leave-one-team-out experiment.
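For readers unfamiliar with the metric, the sketch below computes a kappa value for one act on one test set, assuming the slides report Cohen's kappa between predicted and gold labels. The function name `cohen_kappa` and the handling of the degenerate case are illustrative choices.

```python
def cohen_kappa(y_true, y_pred):
    """Cohen's kappa: agreement between two labelings, corrected for chance.

    kappa = (observed - expected) / (1 - expected), where observed is the
    fraction of items on which the labelings agree and expected is the
    agreement predicted from the label frequencies alone.
    """
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    categories = set(y_true) | set(y_pred)
    expected = sum((y_true.count(c) / n) * (y_pred.count(c) / n)
                   for c in categories)
    if expected == 1.0:
        # Both labelings use a single category; kappa is undefined.
        # Returning 0.0 here is an arbitrary convention for this sketch.
        return 0.0
    return (observed - expected) / (1.0 - expected)


# Per-act kappa on one test set; averaging over the four teams would
# repeat this for each held-out team and take the mean.
kappa = cohen_kappa([1, 1, 0, 0], [1, 0, 0, 0])
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance, which makes the measure less sensitive than raw accuracy to acts that occur in only a few messages.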

12

What goes next?

- Extend Collective classification by using the new SpeechAct classifiers (1g-5g, feat selection)
- Online (incremental) and semi-supervised learning: CALO focus.
- Integration of new Speech Act package to Minorthird Iris/Calo.
- Role discovery: network-like features, speech act