Coin Flipping Example PowerPoint PPT Presentation


Coin Flipping Example

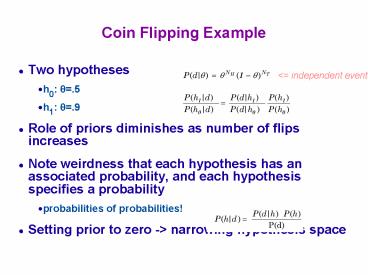

- Two hypotheses

- $h_0$: $\theta = 0.5$

- $h_1$: $\theta = 0.9$

- Role of priors diminishes as the number of flips increases

- Note the weirdness that each hypothesis has an associated probability, and each hypothesis specifies a probability: probabilities of probabilities!

- Setting a prior to zero amounts to narrowing the hypothesis space

- Flips are treated as independent events
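A minimal Python sketch of the two-hypothesis example, assuming flips are recorded as a string of 'H'/'T' and treated as independent. The function name `posterior_h1` and the flip sequences are illustrative, not from the slides. It shows the prior's influence shrinking as flips accumulate, and that a prior of zero can never be revised upward.

```python
def posterior_h1(flips, prior_h1):
    """Return P(h1 | flips) for h0: theta = 0.5 vs. h1: theta = 0.9.

    Flips are independent, so each likelihood is
    theta**n_heads * (1 - theta)**n_tails.
    """
    n_heads = flips.count("H")
    n_tails = flips.count("T")
    thetas = {"h0": 0.5, "h1": 0.9}
    priors = {"h0": 1.0 - prior_h1, "h1": prior_h1}
    # Unnormalized posterior: likelihood times prior for each hypothesis.
    unnorm = {
        h: (t ** n_heads) * ((1 - t) ** n_tails) * priors[h]
        for h, t in thetas.items()
    }
    # Normalize over the (finite) hypothesis space.
    return unnorm["h1"] / sum(unnorm.values())


if __name__ == "__main__":
    for flips in ["HHH", "H" * 10, "H" * 20]:
        # Neutral prior vs. a strong prior against h1.
        print(len(flips), posterior_h1(flips, 0.5), posterior_h1(flips, 0.01))
```

With a prior of 0.01 on $h_1$, three heads leave $P(h_1 \mid d)$ small, while twenty heads push it close to 1; with a prior of exactly 0, the posterior stays 0 no matter the data.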


Probability Densities

- For discrete events, probabilities sum to 1.

- For continuous variables, densities integrate to 1.

- e.g., uniform density: $p(x) = 1/r$ if $0 < x < r$, 0 otherwise

- Probability densities should not be confused with probabilities.

- If $p(X = x)$ is a density, $p(X = x)\,dx$ is a probability: the probability that $x < X < x + dx$

- Notation

- P(...) for probability, p(...) for density
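To make the density/probability distinction concrete, here is a small Python sketch of the uniform density above; `uniform_density` and the midpoint-rule `integrate` helper are hypothetical names. With $r = 0.5$ the density value is 2, which cannot be a probability, yet the density still integrates to 1.

```python
def uniform_density(x, r):
    """p(x) = 1/r for 0 < x < r, and 0 otherwise."""
    return 1.0 / r if 0 < x < r else 0.0


def integrate(f, lo, hi, n=100_000):
    """Midpoint-rule approximation of the integral of f over [lo, hi]."""
    width = (hi - lo) / n
    return sum(f(lo + (i + 0.5) * width) for i in range(n)) * width


if __name__ == "__main__":
    r = 0.5
    print(uniform_density(0.25, r))  # a density value of 2.0, not a probability
    print(integrate(lambda x: uniform_density(x, r), -1.0, 1.0))  # approximately 1
    # p(x) dx approximates the probability that x < X < x + dx.
    dx = 1e-3
    print(uniform_density(0.25, r) * dx)
```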


Probability Densities

- Can treat densities as probabilities, because when you do the computation, the dx's cancel

- e.g., Bayes' rule
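A short derivation of why the dx's cancel, writing $h$ for a discrete hypothesis with prior $P(h)$ and $x$ for a continuous observation with density $p(x \mid h)$ (symbols chosen here for illustration). For small $dx$:

$$
\begin{aligned}
P(h \mid x < X < x + dx)
&= \frac{P(x < X < x + dx \mid h)\,P(h)}{\sum_{h'} P(x < X < x + dx \mid h')\,P(h')} \\
&\approx \frac{p(x \mid h)\,dx\,P(h)}{\sum_{h'} p(x \mid h')\,dx\,P(h')}
= \frac{p(x \mid h)\,P(h)}{\sum_{h'} p(x \mid h')\,P(h')}
\end{aligned}
$$

The final expression no longer contains $dx$, so letting $dx \to 0$ gives $P(h \mid x)$ computed directly from densities.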


Using the Posterior Distribution

- What do we do once we have the posterior?

- maximum a posteriori (MAP)

- posterior mean, also known as model averaging

- MAP vs. maximum likelihood

- $p(\theta \mid d)$ vs. $p(d \mid \theta)$
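A sketch of the three point estimates for the coin, assuming a $\mathrm{Beta}(V_H, V_T)$ prior so that the posterior is $\mathrm{Beta}(V_H + N_H, V_T + N_T)$; the function names are hypothetical. For a coin, the posterior mean of $\theta$ is also the model-averaged probability that the next flip is heads.

```python
def ml_estimate(n_h, n_t):
    """Maximum likelihood: argmax_theta p(d | theta) = n_h / (n_h + n_t)."""
    return n_h / (n_h + n_t)


def map_estimate(n_h, n_t, v_h=1.0, v_t=1.0):
    """MAP: mode of the Beta(a, b) posterior, (a - 1) / (a + b - 2).

    Valid when a >= 1, b >= 1 and a + b > 2; v_h = v_t = 1 is the uniform prior.
    """
    a, b = v_h + n_h, v_t + n_t
    assert a >= 1 and b >= 1 and a + b > 2, "mode formula not valid here"
    return (a - 1) / (a + b - 2)


def posterior_mean(n_h, n_t, v_h=1.0, v_t=1.0):
    """Posterior mean a / (a + b), i.e. the model-averaged P(next flip = H)."""
    a, b = v_h + n_h, v_t + n_t
    return a / (a + b)


if __name__ == "__main__":
    # Three heads, no tails.
    print(ml_estimate(3, 0), map_estimate(3, 0), posterior_mean(3, 0))
    print(map_estimate(3, 0, 2, 2), posterior_mean(3, 0, 2, 2))
```

After three heads and no tails, ML and uniform-prior MAP both give $\theta = 1$, while the posterior mean is 0.8; a $\mathrm{Beta}(2, 2)$ prior pulls MAP to 0.8 as well.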


Infinite Hypothesis Spaces

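- Consider all values of $\theta$, $0 < \theta < 1$

- Inferring $\theta$ is just like any other sort of Bayesian inference

- Likelihood is as before: $P(d \mid \theta) = \theta^{N_H}(1 - \theta)^{N_T}$

- With a uniform prior on $\theta$, $p(\theta) = 1$, we obtain $p(\theta \mid d) \propto \theta^{N_H}(1 - \theta)^{N_T}$

- This is a beta distribution, $\mathrm{Beta}(N_H + 1, N_T + 1)$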
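A numerical check of this result in Python, assuming a midpoint grid over $(0, 1)$: normalizing likelihood times uniform prior on the grid reproduces the $\mathrm{Beta}(N_H + 1, N_T + 1)$ density. `beta_pdf` and `grid_posterior` are hypothetical helper names, and the counts 7 and 3 are arbitrary.

```python
import math


def beta_pdf(theta, a, b):
    """Density of Beta(a, b) at theta, using log-gamma for numerical stability."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(theta) + (b - 1) * math.log(1 - theta))


def grid_posterior(n_h, n_t, n_grid=1000):
    """Posterior density on a midpoint grid over (0, 1) with uniform prior p(theta) = 1."""
    width = 1.0 / n_grid
    thetas = [(i + 0.5) * width for i in range(n_grid)]
    unnorm = [t ** n_h * (1 - t) ** n_t * 1.0 for t in thetas]  # likelihood x prior
    z = sum(unnorm) * width  # approximates the normalizing integral
    return thetas, [u / z for u in unnorm]


if __name__ == "__main__":
    n_h, n_t = 7, 3
    thetas, post = grid_posterior(n_h, n_t)
    err = max(abs(p - beta_pdf(t, n_h + 1, n_t + 1)) for t, p in zip(thetas, post))
    print(err)  # small: the grid posterior matches Beta(N_H + 1, N_T + 1)
```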


Beta Distribution
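The figure on this slide did not survive. For reference, the standard Beta density over $\theta \in (0, 1)$ with parameters $\alpha, \beta > 0$ (a textbook definition, not reconstructed from the slide) and its mean are:

$$
\mathrm{Beta}(\theta; \alpha, \beta) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\,\Gamma(\beta)}\,\theta^{\alpha - 1}(1 - \theta)^{\beta - 1},
\qquad
\mathbb{E}[\theta] = \frac{\alpha}{\alpha + \beta}
$$

Setting $\alpha = N_H + 1$ and $\beta = N_T + 1$ gives the coin likelihood $\theta^{N_H}(1 - \theta)^{N_T}$, normalized to integrate to 1.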


Incorporating Priors

- Suppose we have the prior distribution

- $p(\theta) = \mathrm{Beta}(V_H, V_T)$

- Then the posterior distribution is also Beta

- $p(\theta \mid d) = \mathrm{Beta}(V_H + N_H, V_T + N_T)$

- Example of conjugate priors

- Beta distribution is the conjugate prior for a binomial or Bernoulli likelihood
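A minimal sketch of the conjugate update; the `BetaPosterior` class is a hypothetical name, not a library type. Because the posterior stays in the Beta family, updating flip by flip gives the same result as updating on all the counts at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPosterior:
    """Beta(v_h, v_t) belief about a coin's probability of heads."""
    v_h: float
    v_t: float

    def update(self, n_h: int, n_t: int) -> "BetaPosterior":
        """Conjugate update: Beta(v_h, v_t) prior + data -> Beta(v_h + n_h, v_t + n_t)."""
        return BetaPosterior(self.v_h + n_h, self.v_t + n_t)

    def mean(self) -> float:
        """Posterior mean of theta."""
        return self.v_h / (self.v_h + self.v_t)


if __name__ == "__main__":
    prior = BetaPosterior(2, 2)
    # Updating on all the data at once ...
    batch = prior.update(7, 3)
    # ... matches updating one flip at a time, since each posterior is again Beta.
    seq = prior
    for flip in "HHTHHTHHTH":
        seq = seq.update(1, 0) if flip == "H" else seq.update(0, 1)
    print(batch, seq, batch == seq)
```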


Latent Variables

- In many probabilistic models, some variables are latent (a.k.a. hidden, nonobservable).

- e.g., Gaussian mixture model

- Given an observation x, we might want to infer which component produced it.

- Call this latent variable z

(Figure: graphical model with nodes z, $\theta$, and x)
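For a Gaussian mixture, inferring $z$ is one application of Bayes' rule. A Python sketch for the 1-D case, assuming the parameters $\theta$ are known mixing weights, means, and standard deviations (the values and the name `responsibilities` are illustrative):

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of a univariate Gaussian N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def responsibilities(x, weights, means, sigmas):
    """P(z = k | x, theta) for each component k, by Bayes' rule:
    proportional to p(x | z = k, theta) * P(z = k)."""
    unnorm = [w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, sigmas)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


if __name__ == "__main__":
    # Hypothetical two-component mixture: theta = (weights, means, sigmas).
    print(responsibilities(0.5, [0.5, 0.5], [0.0, 3.0], [1.0, 1.0]))
```

An observation at 0.5 is attributed mostly to the component centered at 0, while a point halfway between the two means gets an even split.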


Learning Graphical Model Parameters

- Trivial if all variables are observed.

- If the model contains latent variables, need to infer their values before you can learn.

- Two techniques described in the article

- Expectation Maximization (EM)

- Markov Chain Monte Carlo (MCMC)

- Other technique that I don't understand

- Spectral methods
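To illustrate why the fully observed case is easy: in a 1-D Gaussian mixture where every $z$ is known, maximum-likelihood fitting reduces to per-component counting, averaging, and variance computation. A sketch (`fit_observed` and the data are hypothetical):

```python
import math
from collections import defaultdict


def fit_observed(xs, zs):
    """ML mixture parameters when every z is observed.

    Returns {component: (weight, mean, std)}.
    """
    groups = defaultdict(list)
    for x, z in zip(xs, zs):
        groups[z].append(x)
    n = len(xs)
    params = {}
    for k, members in groups.items():
        mean = sum(members) / len(members)
        var = sum((x - mean) ** 2 for x in members) / len(members)  # ML (biased) variance
        params[k] = (len(members) / n, mean, math.sqrt(var))
    return params


if __name__ == "__main__":
    print(fit_observed([0.0, 1.0, 2.0, 10.0, 12.0], [0, 0, 0, 1, 1]))
```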


Expectation Maximization

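- First, compute the expectation of z given the current model parameters $\theta$

- $P(Z = z \mid x, \theta) \propto p(x \mid z, \theta)\,P(z)$

- Second, compute the parameter values that maximize the joint likelihood, with z averaged under that expectation

- $\arg\max_\theta \mathbb{E}_{P(z \mid x, \theta_{\text{old}})}\left[\log p(x, z \mid \theta)\right]$

- Iterate

- Each iteration never decreases $p(x \mid \theta)$; guaranteed to converge to a local optimum (in general, a stationary point) of $p(x \mid \theta)$, not necessarily the global one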
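A compact EM sketch for a 1-D Gaussian mixture in Python, assuming hand-picked initial means and unit initial standard deviations; the small variance floor is an added safeguard, not part of EM itself, and all names are hypothetical. It records $\log p(x \mid \theta)$ after each iteration so the non-decreasing behavior can be checked.

```python
import math
import random


def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def log_likelihood(xs, weights, means, sigmas):
    """log p(x | theta): each point's density sums over its latent component."""
    return sum(
        math.log(sum(w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, sigmas)))
        for x in xs
    )


def em(xs, init_means, n_iter=50):
    """EM for a 1-D Gaussian mixture; returns parameters and log-likelihood history."""
    k = len(init_means)
    means = list(init_means)
    weights = [1.0 / k] * k
    sigmas = [1.0] * k
    history = []
    for _ in range(n_iter):
        # E step: responsibilities P(z = j | x, theta) under the current parameters.
        resp = []
        for x in xs:
            unnorm = [w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, sigmas)]
            total = sum(unnorm)
            resp.append([u / total for u in unnorm])
        # M step: weighted counts, means, and variances maximize the expected
        # complete-data log-likelihood.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(xs)
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            sigmas[j] = math.sqrt(max(var, 1e-6))  # floor guards against a collapsing component
        history.append(log_likelihood(xs, weights, means, sigmas))
    return weights, means, sigmas, history


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [rng.gauss(-2.0, 1.0) for _ in range(200)] + [rng.gauss(3.0, 1.0) for _ in range(200)]
    weights, means, sigmas, history = em(xs, [-1.0, 1.0])
    print(means, history[0], history[-1])
```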


MCMC Gibbs Sampling

- Resample each latent variable conditional on the current values of all other latent variables

- Start with random assignments for the $z_i$

- Loop through all latent variables in random order

- Draw a sample from $P(Z_i = z \mid x, \theta) \propto p(x \mid z, \theta)\,P(z)$

- Re-estimate $\theta$ (either after each sample or after an entire pass through the variables?). Same as the M step of EM

- Gibbs sampling is a special case of the Metropolis-Hastings algorithm

- With Metropolis-Hastings, can make it more likely to move uphill in the overall likelihood

- Can be more accurate/efficient than Gibbs sampling if you know something about the distribution you're sampling from
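A Python sketch of the procedure above for a 1-D Gaussian mixture, assuming a known shared standard deviation and re-estimating $\theta$ (weights and means) once per pass, which is one of the two options the slide raises. Given $\theta$, each $z_i$ depends only on its own $x_i$. Because $\theta$ is re-estimated rather than sampled, this is closer to a stochastic-EM hybrid than a fully Bayesian Gibbs sampler; names and defaults are hypothetical.

```python
import math
import random


def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def estimate_theta(xs, zs, k):
    """Point estimate of (weights, means) from current assignments, like EM's M step."""
    weights, means = [], []
    for j in range(k):
        members = [x for x, z in zip(xs, zs) if z == j]
        weights.append(max(len(members), 1) / len(xs))  # keep empty components alive
        means.append(sum(members) / len(members) if members else 0.0)
    return weights, means


def gibbs(xs, k, n_sweeps=30, sigma=1.0, seed=0):
    """Resample each z_i given x_i and the current theta; re-estimate theta once per pass."""
    rng = random.Random(seed)
    zs = [rng.randrange(k) for _ in xs]  # start with random assignments
    for _ in range(n_sweeps):
        weights, means = estimate_theta(xs, zs, k)
        order = list(range(len(xs)))
        rng.shuffle(order)  # visit the latent variables in random order
        for i in order:
            # P(z_i = j | x_i, theta) is proportional to p(x_i | z_i = j, theta) * P(z_i = j)
            probs = [w * normal_pdf(xs[i], m, sigma) for w, m in zip(weights, means)]
            zs[i] = rng.choices(range(k), weights=probs)[0]
    return zs, estimate_theta(xs, zs, k)[1]


if __name__ == "__main__":
    data_rng = random.Random(1)
    xs = [data_rng.gauss(-2.0, 1.0) for _ in range(200)] + [data_rng.gauss(3.0, 1.0) for _ in range(200)]
    zs, means = gibbs(xs, k=2)
    print(sorted(means))
```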


Important Ideas in Bayesian Models

- Generative models

- Consideration of multiple models in parallel

- Inference

- prediction via model averaging

- importance of priors

- explaining away

- Learning

- distinction from inference not always clear cut

- parameter vs. structure learning

- Bayesian Occam's razor: trade-off between model simplicity and fit to data (next class)
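Explaining away can be seen in a tiny network solved by exact enumeration. In this hypothetical sketch, two independent causes A and B each have prior 0.1, and the effect E occurs exactly when A or B is true. Observing E raises belief in A; additionally observing B explains E and drops belief in A back to its prior.

```python
from itertools import product


def explaining_away(p_a=0.1, p_b=0.1):
    """Return (P(A | E), P(A | E, B)) for a hypothetical network in which
    independent causes A and B produce effect E, with E = A or B."""
    # Enumerate all worlds (a, b, e) with their joint probabilities.
    worlds = []
    for a, b in product([True, False], repeat=2):
        prob = (p_a if a else 1 - p_a) * (p_b if b else 1 - p_b)
        worlds.append((a, b, a or b, prob))

    def cond(query, evidence):
        """P(query | evidence) by summing the probabilities of matching worlds."""
        num = sum(p for a, b, e, p in worlds if evidence(a, b, e) and query(a, b, e))
        den = sum(p for a, b, e, p in worlds if evidence(a, b, e))
        return num / den

    p_a_given_e = cond(lambda a, b, e: a, lambda a, b, e: e)
    p_a_given_e_b = cond(lambda a, b, e: a, lambda a, b, e: e and b)
    return p_a_given_e, p_a_given_e_b


if __name__ == "__main__":
    print(explaining_away())
```

Here $P(A \mid E) = 0.1/0.19 \approx 0.53$, while $P(A \mid E, B) = 0.1$.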


Important Technical Issues

- Representing structured data

- grammars

- relational schemas (e.g., paper authors, topics)

- Hierarchical models

- different levels of abstraction

- Nonparametric models

- flexible models that grow in complexity as the data justify

- Approximate inference

- Markov chain Monte Carlo

- particle filters

- variational approximations