Maximum Entropy PowerPoint PPT Presentation

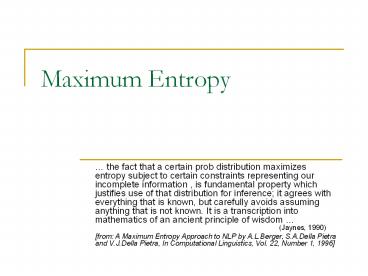

Title: Maximum Entropy

1

Maximum Entropy

- "... the fact that a certain probability distribution maximizes entropy subject to certain constraints representing our incomplete information, is the fundamental property which justifies use of that distribution for inference; it agrees with everything that is known, but carefully avoids assuming anything that is not known. It is a transcription into mathematics of an ancient principle of wisdom ..." (Jaynes, 1990)

- From "A Maximum Entropy Approach to NLP" by A. L. Berger, S. A. Della Pietra and V. J. Della Pietra, in Computational Linguistics, Vol. 22, Number 1, 1996

2

Example

- Let us see how an expert would translate the English word "in" into Italian.

- in → {in, dentro, di, <0>, a}

- If the translator always selects from this list, then P(in) + P(dentro) + P(di) + P(<0>) + P(a) = 1

- If there is no preference, P(·) = 1/5

- Suppose we notice that the translator chooses dentro or di in 30% of the cases. This changes our probabilities to

- P(dentro) + P(di) = 0.3 and

- P(in) + P(di) + P(dentro) + P(<0>) + P(a) = 1

3

Example cont.

- This will change the distribution as follows (the most uniform p satisfying these constraints):

- P(dentro) = 3/20 and P(di) = 3/20

- P(in) = P(<0>) = P(a) = 7/30

- Suppose we inspect the data further and note another interesting fact: in half of the cases the expert chooses either in or di. So

- P(dentro) + P(di) = 0.3

- P(in) + P(di) + P(dentro) + P(<0>) + P(a) = 1

- P(in) + P(di) = 0.5

- Which p is the most uniform in this case?
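The question on this slide can be explored numerically. The sketch below is not part of the original slides. It assumes that uniformity is measured by entropy, and it uses the fact that the three constraints leave a single free parameter, t = P(di), with the unconstrained words <0> and a sharing the remaining mass equally. Entropy is concave in t, so a ternary search is enough. The function names are illustrative.

```python
import math


def entropy(p):
    """Shannon entropy (in nats) of a distribution given as a dict."""
    return -sum(q * math.log(q) for q in p.values() if q > 0)


def distribution(t):
    """Distribution satisfying all three constraints, parameterized by t = P(di)."""
    # Remaining mass 1 - P(in) - P(dentro) - P(di) = 0.2 + t, split equally
    # between the two words that no constraint distinguishes.
    rest = (1.0 - 0.3 - 0.5 + t) / 2.0
    return {"in": 0.5 - t, "dentro": 0.3 - t, "di": t, "<0>": rest, "a": rest}


def most_uniform(lo=0.0, hi=0.3, steps=200):
    """Ternary search for the t in [0, 0.3] that maximizes the entropy."""
    for _ in range(steps):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if entropy(distribution(m1)) < entropy(distribution(m2)):
            lo = m1
        else:
            hi = m2
    return distribution((lo + hi) / 2)


p = most_uniform()
for word, prob in p.items():
    print(f"P({word}) = {prob:.4f}")
```

Setting the derivative of the entropy to zero gives t² - 1.8t + 0.3 = 0, so the search should settle near P(di) ≈ 0.186. The answer is no longer obvious by inspection, which is what motivates a general method.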

4

Example - motivation

- How can we measure the uniformity of a model?

- Even if we answer the previous question, how do we determine the parameters of such a model?

- Maximum Entropy answers both questions: model everything that is known and assume nothing about what is unknown.

5

Aim

- Construct a statistical model of the process that generated the training sample, which is summarized by the empirical distribution $\tilde P(x, y)$

- P(y|x): given a context x, the probability that the system will output y.

6

Feature: "in" is translated as "a"
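In the formulation of Berger et al. (1996), a feature of this kind is a binary indicator function of the context x and the output y. A sketch, assuming the context x is simply the English word being translated:

$$
f(x, y) =
\begin{cases}
1 & \text{if } x = \text{in and } y = \text{a} \\
0 & \text{otherwise}
\end{cases}
$$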

7

The expected value of f with respect to the empirical distribution $\tilde P(x, y)$ is exactly the statistic we are interested in. The expected value is $\tilde P(f) = \sum_{x,y} \tilde P(x, y)\, f(x, y)$

8

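The expected value of f with respect to the model P(y|x) is $P(f) = \sum_{x,y} \tilde P(x)\, P(y|x)\, f(x, y)$, where $\tilde P(x)$ is the empirical distribution of contexts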

9

Constraint: the expected values of the model and of the training sample must be the same, $P(f) = \tilde P(f)$
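The two expected values and the constraint can be made concrete on a small sample. The sketch below is an illustration only: the training sample is hypothetical (ten made-up translations of "in"), the model expectation follows the convention of Berger et al. (1996) of weighting contexts by their empirical frequency, and the function names are not from the slides.

```python
from collections import Counter

OUTCOMES = ["in", "dentro", "di", "<0>", "a"]

# Hypothetical training sample of (context, translation) pairs.
SAMPLE = (
    [("in", "in")] * 3
    + [("in", "dentro")]
    + [("in", "di")] * 2
    + [("in", "<0>")]
    + [("in", "a")] * 3
)


def f(x, y):
    """Indicator feature: "in" is translated as "a"."""
    return 1.0 if x == "in" and y == "a" else 0.0


def empirical_expectation(feature, sample):
    """Expected value of the feature under the empirical distribution."""
    return sum(feature(x, y) for x, y in sample) / len(sample)


def model_expectation(feature, sample, model):
    """Expected value of the feature under the conditional model P(y|x)."""
    context_counts = Counter(x for x, _ in sample)
    n = len(sample)
    return sum(
        (count / n) * sum(model(y, x) * feature(x, y) for y in OUTCOMES)
        for x, count in context_counts.items()
    )


def uniform_model(y, x):
    """A model with no preference: every translation is equally likely."""
    return 1.0 / len(OUTCOMES)


observed = empirical_expectation(f, SAMPLE)
expected = model_expectation(f, SAMPLE, uniform_model)
print(f"observed = {observed:.2f}, expected under uniform model = {expected:.2f}")
```

On this made-up sample the uniform model violates the constraint, since the two values differ, so its parameters would have to be adjusted until they agree.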

10

What does uniform mean?

- The mathematical measure of the uniformity of a conditional distribution P(y|x) is provided by the conditional entropy $H(Y|X) = -\sum_{x,y} P(x, y) \log P(y|x)$, here marked as H(P), where P(x, y) is the joint probability of x and y.
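A minimal sketch of this measure, assuming the joint distribution is given as a dictionary from (x, y) pairs to probabilities and using natural logarithms. The function name and the two example distributions are illustrative, not from the slides.

```python
import math
from collections import defaultdict


def conditional_entropy(joint):
    """H(Y|X) = -sum over (x, y) of P(x, y) * log P(y|x), in nats."""
    marginal = defaultdict(float)
    for (x, _), p in joint.items():
        marginal[x] += p
    return -sum(
        p * math.log(p / marginal[x]) for (x, _), p in joint.items() if p > 0
    )


words = ["in", "dentro", "di", "<0>", "a"]

# One context, no preference among the five translations.
uniform = {("in", y): 1 / 5 for y in words}

# One context, always the same translation.
certain = {("in", "in"): 1.0}

print(conditional_entropy(uniform))
print(conditional_entropy(certain))
```

The uniform distribution reaches the largest possible value for five outcomes, log 5, while the deterministic one has entropy zero.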

11

The Maximum Entropy Principle

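- From the set of models whose expected feature values equal the observed ones, $P(f_i) = \tilde P(f_i)$, select the model with maximum entropy: $P^* = \arg\max_P H(P)$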

12

Maximizing the Entropy

- Solved with Lagrange multipliers, one multiplier $\lambda_i$ per constraint
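The formulas for this step are not preserved here. As a sketch following the derivation in Berger et al. (1996), and assuming the notation $\tilde P$ for empirical quantities, the constrained problem is turned into the Lagrangian

$$
\Lambda(P, \lambda) = H(P) + \sum_i \lambda_i \left( P(f_i) - \tilde P(f_i) \right)
$$

Maximizing over P for fixed $\lambda$ gives a model of exponential form, with one weight per feature:

$$
P_\lambda(y|x) = \frac{1}{Z_\lambda(x)} \exp\Big( \sum_i \lambda_i f_i(x, y) \Big),
\qquad
Z_\lambda(x) = \sum_y \exp\Big( \sum_i \lambda_i f_i(x, y) \Big)
$$

The remaining task is to find the values $\lambda_i$ for which this model satisfies the constraints.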

13

The Algorithm 1

- Input: features, empirical distribution

- Output: optimal parameter values

14

Step 2a.

- For constant feature counts
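The update formula for this step is not preserved here. Assuming the algorithm is iterative scaling as in Berger et al. (1996), a constant feature count M (the sum of all feature values is M for every outcome) gives the closed-form update $\Delta\lambda_i = \frac{1}{M} \log \frac{\tilde P(f_i)}{P_\lambda(f_i)}$. The sketch below applies it to the translation example with a single context, so x is dropped. The slack feature that makes the counts constant, the iteration count, and all names are assumptions made for illustration.

```python
import math

OUTCOMES = ["in", "dentro", "di", "<0>", "a"]

# Binary features taken from the translation example.
FEATURES = [
    lambda y: 1.0 if y in ("dentro", "di") else 0.0,
    lambda y: 1.0 if y in ("in", "di") else 0.0,
]
TARGETS = [0.3, 0.5]  # observed expected values of the two features
M = 2.0  # constant total feature count per outcome


def feature_vector(y):
    """Feature values for y, plus a slack feature so that they sum to M."""
    values = [f(y) for f in FEATURES]
    return values + [M - sum(values)]


def model(lambdas):
    """Exponential model P(y) proportional to exp(sum_i lambda_i * f_i(y))."""
    scores = {
        y: math.exp(sum(l * v for l, v in zip(lambdas, feature_vector(y))))
        for y in OUTCOMES
    }
    z = sum(scores.values())
    return {y: s / z for y, s in scores.items()}


def iterative_scaling(iterations=2000):
    """Repeat the constant-count update for all parameters at once."""
    targets = TARGETS + [M - sum(TARGETS)]  # slack target follows from the others
    lambdas = [0.0] * len(targets)  # step 1: start from the uniform model
    for _ in range(iterations):
        p = model(lambdas)
        for i, target in enumerate(targets):
            expected = sum(p[y] * feature_vector(y)[i] for y in OUTCOMES)
            lambdas[i] += math.log(target / expected) / M  # step 2a and 2b
    return model(lambdas)


p = iterative_scaling()
for word, prob in p.items():
    print(f"P({word}) = {prob:.4f}")
```

When the feature counts are not constant, the update has no closed form and each $\Delta\lambda_i$ has to be found numerically, for example with Newton's method.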

15

The Algorithm 1 Revisited

- Input: features, empirical distribution

- Output: optimal parameter values

16

The Algorithm 2 Feature Selection

- Input: collection F of candidate features, empirical distribution $\tilde P(x, y)$

- Output: set S of active features and a model P incorporating these features