Title: CHAPTER 1: INTRODUCTION

1

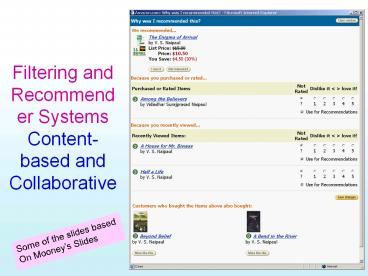

Filtering and Recommender Systems: Content-based and Collaborative

Some of the slides are based on Mooney's slides

2

Personalization

- Recommenders are instances of personalization software.
- Personalization concerns adapting to the individual needs, interests, and preferences of each user.
- Includes
  - Recommending
  - Filtering
  - Predicting (e.g. form or calendar appt. completion)
- From a business perspective, it is viewed as part of Customer Relationship Management (CRM).

3

Feedback Prediction/Recommendation

- Traditional IR has a single user, probably working in single-shot mode
  - Relevance feedback
- Web search engines have
  - Users working continually
    - User profiling
    - Profile is a model of the user
    - (and also relevance feedback)
  - Many users
    - Collaborative filtering
    - Propagate user preferences to other users

You know this one

4

Recommender Systems in Use

- Systems for recommending items (e.g. books, movies, CDs, web pages, newsgroup messages) to users based on examples of their preferences.
- Many on-line stores provide recommendations (e.g. Amazon, CDNow).
- Recommenders have been shown to substantially increase sales at on-line stores.

5

Feedback Detection

Non-Intrusive

- Click certain pages in a certain order while ignoring most pages.
- Read some clicked pages longer than other clicked pages.
- Save/print certain clicked pages.
- Follow some links in clicked pages to reach more pages.
- Buy items / put them in wish lists or shopping carts.

Intrusive

- Explicitly ask users to rate items/pages.

6

Content-based vs. Collaborative Recommendation

7

Collaborative Filtering

Correlation analysis here is similar to the association clusters analysis!

8

Collaborative Filtering Method

- Weight all users with respect to similarity with the active user.
- Select a subset of the users (neighbors) to use as predictors.
- Normalize ratings and compute a prediction from a weighted combination of the selected neighbors' ratings.
- Present items with highest predicted ratings as recommendations.

9

Similarity Weighting

- Typically use the Pearson correlation coefficient between the ratings of the active user, $a$, and another user, $u$.

$r_a$ and $r_u$ are the ratings vectors for the $m$ items rated by both $a$ and $u$; $r_{i,j}$ is user $i$'s rating for item $j$.

Where did you see this stuff?

Association clusters
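As a rough illustration of the similarity weight, here is a minimal Python sketch of the Pearson correlation restricted to co-rated items. The dict-of-ratings layout, the function name `pearson`, the sample users, and the choice to return 0.0 for degenerate cases are all assumptions made for this example, not something the slides specify.

```python
from math import sqrt

def pearson(ratings_a, ratings_u):
    """Pearson correlation over the items rated by both users.

    ratings_a, ratings_u: dicts mapping item id -> rating (assumed layout).
    Returns 0.0 when fewer than two items are co-rated or when either
    user's co-rated ratings have zero variance (an assumed convention).
    """
    common = sorted(set(ratings_a) & set(ratings_u))
    m = len(common)
    if m < 2:
        return 0.0
    mean_a = sum(ratings_a[i] for i in common) / m
    mean_u = sum(ratings_u[i] for i in common) / m
    covar = sum((ratings_a[i] - mean_a) * (ratings_u[i] - mean_u) for i in common) / m
    sd_a = sqrt(sum((ratings_a[i] - mean_a) ** 2 for i in common) / m)
    sd_u = sqrt(sum((ratings_u[i] - mean_u) ** 2 for i in common) / m)
    if sd_a == 0 or sd_u == 0:
        return 0.0
    return covar / (sd_a * sd_u)

# Hypothetical users: bob rates like alice (shifted down by one), carol rates in reverse.
alice = {"A": 5, "B": 3, "C": 1, "D": 4}
bob = {"A": 4, "B": 2, "C": 0, "E": 5}
carol = {"A": 1, "B": 3, "C": 5}
```

Note that only items A, B, and C enter the computation for alice and bob; D and E are rated by one user only and are ignored.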

10

Covariance and Standard Deviation

- Covariance

- Standard Deviation
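The formulas for this slide are not present in the text above. As a sketch, assuming the slide used the standard population definitions over the $m$ co-rated items (with $\bar{r}_x$ the mean of user $x$'s ratings on those items, a symbol introduced here), they would read:

$$
\mathrm{covar}(r_a, r_u) = \frac{1}{m}\sum_{i=1}^{m} (r_{a,i} - \bar{r}_a)(r_{u,i} - \bar{r}_u),
\qquad
\sigma_{r_x} = \sqrt{\frac{1}{m}\sum_{i=1}^{m} (r_{x,i} - \bar{r}_x)^2},
\qquad
\bar{r}_x = \frac{1}{m}\sum_{i=1}^{m} r_{x,i}
$$

The Pearson correlation between $a$ and $u$ is then $\mathrm{covar}(r_a, r_u) / (\sigma_{r_a}\,\sigma_{r_u})$.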

11

Significance Weighting

- Important not to trust correlations based on very few co-rated items.
- Include significance weights, $s_{a,u}$, based on the number of co-rated items, $m$.
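The slide does not say how $s_{a,u}$ depends on $m$. One common heuristic, shown here purely as an assumption, is to scale the correlation linearly until a cutoff number of co-rated items is reached. The cutoff of 50 and both function names are illustrative choices.

```python
def significance_weight(m, cutoff=50):
    """s_{a,u}: 1.0 once more than `cutoff` items are co-rated, else m / cutoff.

    The linear ramp and the default cutoff of 50 are assumptions for this sketch.
    """
    return 1.0 if m > cutoff else m / cutoff

def similarity_weight(correlation, m, cutoff=50):
    """w_{a,u}: the correlation scaled down when it rests on few co-rated items."""
    return significance_weight(m, cutoff) * correlation
```

With this choice, a correlation of 0.8 computed from 25 co-rated items is halved to 0.4, while the same correlation computed from 100 items is kept as is.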

12

Neighbor Selection

- For a given active user, $a$, select correlated users to serve as the source of predictions.
- The standard approach is to use the most similar $n$ users, $u$, based on similarity weights, $w_{a,u}$.
- An alternate approach is to include all users whose similarity weight is above a given threshold.
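Both selection strategies are short enough to sketch directly. The following assumes the similarity weights for the active user are already available as a dict from user id to $w_{a,u}$; the function names and the sample weights are hypothetical.

```python
def top_n_neighbors(weights, n):
    """Return the n users with the highest similarity weight, most similar first."""
    ranked = sorted(weights.items(), key=lambda pair: pair[1], reverse=True)
    return [user for user, _ in ranked[:n]]

def threshold_neighbors(weights, threshold):
    """Return every user whose similarity weight is strictly above the threshold."""
    return [user for user, w in weights.items() if w > threshold]

# Hypothetical similarity weights w_{a,u} for one active user.
weights = {"u1": 0.9, "u2": 0.1, "u3": 0.6, "u4": -0.4}
```

The top-n variant always yields a fixed number of neighbors, even if some are only weakly similar; the threshold variant guarantees a minimum similarity but may return very few neighbors, or none.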

13

Rating Prediction

- Predict a rating, $p_{a,i}$, for each item $i$, for active user, $a$, by using the $n$ selected neighbor users, $u \in \{1, 2, \ldots, n\}$.
- To account for users' different ratings levels, base predictions on differences from a user's average rating.
- Weight users' ratings contribution by their similarity to the active user.
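The prediction formula itself is not in the text above. A common form consistent with these bullets, stated here as an assumption, is $p_{a,i} = \bar{r}_a + \sum_u w_{a,u}\,(r_{u,i} - \bar{r}_u) \,/\, \sum_u |w_{a,u}|$, where $\bar{r}_x$ is user $x$'s average rating. A minimal sketch follows; the triple layout and the fallback to the active user's mean are illustrative choices.

```python
def predict_rating(mean_a, neighbors):
    """Predict the active user's rating for one item.

    mean_a: the active user's average rating.
    neighbors: list of (w_au, r_ui, mean_u) triples, one per selected neighbor
               who rated the item (assumed layout).
    Falls back to mean_a when there is no usable neighbor (assumed convention).
    """
    norm = sum(abs(w) for w, _, _ in neighbors)
    if norm == 0:
        return mean_a
    # Each neighbor contributes how far above or below their own average they rated.
    offset = sum(w * (r - mean_u) for w, r, mean_u in neighbors)
    return mean_a + offset / norm
```

For example, with an active-user mean of 3.0, one neighbor of weight 1.0 who rated the item one point above their own mean, and one of weight 0.5 who rated it one point below, the prediction is 3.0 + 0.5 / 1.5.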

14

Problems with Collaborative Filtering

- Cold Start: there need to be enough other users already in the system to find a match.
- Sparsity: if there are many items to be recommended, even if there are many users, the user/ratings matrix is sparse, and it is hard to find users that have rated the same items.
- First Rater: cannot recommend an item that has not been previously rated.
  - New items
  - Esoteric items
- Popularity Bias: cannot recommend items to someone with unique tastes.
  - Tends to recommend popular items.
  - WHAT DO YOU MEAN YOU DON'T CARE FOR BRITNEY SPEARS, YOU DUNDERHEAD?

15

October 28th

- Exam lament (I mean discussion)
- Project 1 due date
- Continuation of Recommender Systems

16

Content-Based Recommending

- Recommendations are based on information on the content of items rather than on other users' opinions.
- Uses a machine learning algorithm to induce a profile of the user's preferences from examples based on a featural description of content.
- Lots of systems

17

Advantages of Content-Based Approach

- No need for data on other users.
  - No cold-start or sparsity problems.
- Able to recommend to users with unique tastes.
- Able to recommend new and unpopular items.
  - No first-rater problem.
- Can provide explanations of recommended items by listing content-features that caused an item to be recommended.
- Well-known technology: the entire field of Classification Learning is at (y)our disposal!

18

October 30th

- NRA Tucson Board Member Todd Rathner was critical of UA's ban on firearms, saying the policy puts students and faculty at risk to dangerous individuals, such as the student who shot three UA College of Nursing instructors before committing suicide. "They have made the UA an unarmed-victims zone," he said.
- Tucson Citizen, October 30th, 2002

19

Disadvantages of Content-Based Method

- Requires content that can be encoded as meaningful features.
- Users' tastes must be represented as a learnable function of these content features.
- Unable to exploit quality judgments of other users.
  - Unless these are somehow included in the content features.

20

Primer on Classification Learning, FAST

You can learn more about this in:

- CSE 471 Intro to AI
- CSE 575 Datamining
- EEE 511 Neural Networks

21

Many uses of Classification Learning in IR/Web Search

- Learn user profiles
- Classify documents into categories based on their contents
  - Useful in
    - Focused crawling
    - Topic-sensitive page rank

22

A classification learning example: predicting when Russell will wait for a table

Similar to book preferences, predicting credit card fraud, and predicting when people are likely to respond to junk mail.

23

Uses different biases in predicting Russell's waiting habits

- Decision trees: examples are used to learn the topology and the order of questions
- K-nearest neighbors
- Association rules: examples are used to learn the support and confidence of association rules
  - If patrons=full and day=Friday then wait (0.3/0.7)
  - If wait>60 and reservation=no then wait (0.4/0.9)
- SVMs
- Neural nets: examples are used to learn the topology and the edge weights
- Naïve Bayes (Bayes net learning): examples are used to learn the topology and the CPTs

24

Mirror, mirror, on the wall: which learning bias is the best of all?

Well, there is no such thing, silly!

- Each bias makes it easier to learn some patterns and harder (or impossible) to learn others.
  - A line fitter can fit the best line to the data very fast, but won't know what to do if the data doesn't fall on a line.
  - A curve fitter can fit lines as well as curves, but takes longer to fit lines than a line fitter.
- Different types of bias classes (decision trees, NNs, etc.) provide different ways of naturally carving up the space of all possible hypotheses.

So a more reasonable question is:

- What is the bias class that has a specialization corresponding to the type of patterns that underlie my data?
- In this bias class, what is the most restrictive bias that can still capture the true pattern in the data?

- Decision trees can capture all Boolean functions, but are faster at capturing conjunctive Boolean functions.
- Neural nets can capture all Boolean or real-valued functions, but are faster at capturing linearly separable functions.
- Bayesian learning can capture all probabilistic dependencies, but is faster at capturing single-level dependencies (naïve Bayes classifier).

25

Fitting test cases vs. predicting future cases: the BIG TENSION.

[Figure: three panels labeled 1, 2, and 3]

Why not the 3rd?

26

Naïve Bayesian Classification

- Problem: classify a given example $E$ into one of the classes $C_1, C_2, \ldots, C_n$.
- $E$ has $k$ attributes $A_1, A_2, \ldots, A_k$, and each attribute can take $d$ different values.
- Bayes classification: assign $E$ to the class $C_i$ that maximizes $P(C_i \mid E)$.
  - $P(C_i \mid E) = P(E \mid C_i)\, P(C_i) / P(E)$
  - $P(C_i)$ and $P(E)$ are a priori knowledge (or can be easily extracted from the set of data).
- Estimating $P(E \mid C_i)$ is harder.
  - Requires $P(A_1 = v_1, A_2 = v_2, \ldots, A_k = v_k \mid C_i)$.
  - Assuming $d$ values per attribute, we will need $n\,d^k$ probabilities.
- Naïve Bayes assumption: assume all attributes are independent given the class, so $P(E \mid C_i) = \prod_{j=1}^{k} P(A_j = v_j \mid C_i)$.
- The assumption is BOGUS, but it seems to WORK (and needs only $n \cdot d \cdot k$ probabilities).

27

Estimating the probabilities for NBC

- Given an example $E$ described as $A_1 = v_1, A_2 = v_2, \ldots, A_k = v_k$, we want to compute the class of $E$.
  - Calculate $P(C_i \mid A_1 = v_1, A_2 = v_2, \ldots, A_k = v_k)$ for all classes $C_i$ and say that the class of $E$ is the one for which $P(\cdot)$ is maximum.
  - $P(C_i \mid A_1 = v_1, A_2 = v_2, \ldots, A_k = v_k) = \prod_{j} P(A_j = v_j \mid C_i)\, P(C_i) \,/\, P(A_1 = v_1, A_2 = v_2, \ldots, A_k = v_k)$
- Given a set of $N$ training examples that have already been classified into $n$ classes $C_i$:
  - Let $\#(C_i)$ be the number of examples that are labeled as $C_i$.
  - Let $\#(C_i, A_j = v_j)$ be the number of examples labeled as $C_i$ that have attribute $A_j$ set to value $v_j$.
  - $P(C_i) = \#(C_i) / N$
  - $P(A_j = v_j \mid C_i) = \#(C_i, A_j = v_j) \,/\, \#(C_i)$
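The counting scheme above translates almost line for line into code. The following is a minimal sketch, not a production classifier: the function names, the `(attributes, label)` example layout, and the six toy examples are all made up for illustration, and no smoothing is applied.

```python
from collections import Counter, defaultdict

def train_counts(examples):
    """Collect #(C_i) and #(C_i, A_j = v_j) from labeled examples.

    examples: list of (attributes dict, class label) pairs (assumed layout).
    """
    class_counts = Counter()
    joint_counts = defaultdict(Counter)  # label -> Counter over (attribute, value)
    for attrs, label in examples:
        class_counts[label] += 1
        for attr, value in attrs.items():
            joint_counts[label][(attr, value)] += 1
    return class_counts, joint_counts

def classify(attrs, class_counts, joint_counts):
    """Return the class maximizing P(C_i) * prod_j P(A_j = v_j | C_i).

    P(E) is the same for every class, so it is dropped from the comparison.
    """
    n_total = sum(class_counts.values())
    best_label, best_score = None, -1.0
    for label, count in class_counts.items():
        score = count / n_total
        for attr, value in attrs.items():
            score *= joint_counts[label][(attr, value)] / count
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy data, loosely in the spirit of the restaurant example.
examples = [
    ({"patrons": "some", "hungry": "yes"}, "wait"),
    ({"patrons": "some", "hungry": "no"}, "wait"),
    ({"patrons": "full", "hungry": "yes"}, "wait"),
    ({"patrons": "full", "hungry": "no"}, "leave"),
    ({"patrons": "none", "hungry": "no"}, "leave"),
    ({"patrons": "full", "hungry": "yes"}, "leave"),
]
class_counts, joint_counts = train_counts(examples)
```

Because the estimates are raw frequencies, a single attribute value never seen with a class drives that class's score to zero.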

28

Example

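$P(\text{willwait=yes}) = 6/12 = 0.5$

$P(\text{Patrons=full} \mid \text{willwait=yes}) = 2/6 = 0.333$

$P(\text{Patrons=some} \mid \text{willwait=yes}) = 4/6 = 0.667$

Similarly we can show that $P(\text{Patrons=full} \mid \text{willwait=no}) = 0.667$

$$
P(\text{willwait=yes} \mid \text{Patrons=full}) = \frac{P(\text{Patrons=full} \mid \text{willwait=yes})\, P(\text{willwait=yes})}{P(\text{Patrons=full})} = k \times 0.333 \times 0.5
$$

$$
P(\text{willwait=no} \mid \text{Patrons=full}) = k \times 0.667 \times 0.5
$$

where $k = 1 / P(\text{Patrons=full})$.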
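The constant $k$ does not need to be looked up separately: since the two posteriors must sum to 1, it can be recovered by normalizing. The short sketch below redoes the arithmetic with exact fractions, using only the numbers on this slide (and the prior for "no" that the slide's 0.5 factor implies); the variable names are illustrative.

```python
from fractions import Fraction

prior_yes = Fraction(6, 12)        # P(willwait=yes)
prior_no = Fraction(6, 12)         # P(willwait=no), the 0.5 used on the slide
full_given_yes = Fraction(2, 6)    # P(Patrons=full | willwait=yes)
full_given_no = Fraction(4, 6)     # P(Patrons=full | willwait=no)

# Unnormalized scores: likelihood times prior for each class.
score_yes = full_given_yes * prior_yes
score_no = full_given_no * prior_no

# The scores sum to P(Patrons=full), so k is the reciprocal of that sum.
k = 1 / (score_yes + score_no)

posterior_yes = k * score_yes      # P(willwait=yes | Patrons=full)
posterior_no = k * score_no        # P(willwait=no  | Patrons=full)
```

Here $k$ works out to 2, so with a full restaurant the classifier favors not waiting, with probability 2/3 against 1/3.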

29

Using Naïve Bayes for Text Classification (Bag of Words model)

- What are the features in text?
- Use the unigram model
  - Assume that words from a fixed vocabulary $V$ appear in the document $D$ at different positions (assume $D$ has $L$ words).
  - $P(D \mid C)$ is $P(p_1 = w_1, p_2 = w_2, \ldots, p_L = w_L \mid C)$
  - Assume that the words' appearance probabilities are independent of each other.
  - $P(D \mid C)$ is $P(p_1 = w_1 \mid C)\, P(p_2 = w_2 \mid C) \cdots P(p_L = w_L \mid C)$
  - Assume that word occurrence probability is INDEPENDENT of its position in the document.
  - $P(p_1 = w_1 \mid C) = P(p_2 = w_1 \mid C) = \cdots = P(p_L = w_1 \mid C)$
- Use m-estimates: set $p$ to $1/V$ and $m$ to $V$ (where $V$ is the size of the vocabulary).
  - $P(w_k \mid C_i) = \dfrac{\#(w_k, C_i) + 1}{w(C_i) + V}$
  - $\#(w_k, C_i)$ is the number of times $w_k$ appears in the documents classified into class $C_i$.
  - $w(C_i)$ is the total number of words in all documents classified into class $C_i$.
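A minimal sketch of the bag-of-words classifier described above, using the add-one estimate $(\#(w_k, C_i) + 1) / (w(C_i) + V)$. Summing log probabilities instead of multiplying raw probabilities, skipping words outside the vocabulary, the function names, and the four toy documents are all assumptions made for this example.

```python
import math
from collections import Counter

def train_text(docs):
    """docs: list of (list of words, class label) pairs (assumed layout)."""
    vocab = set()
    word_counts = {}        # label -> Counter of word occurrences, #(w_k, C_i)
    doc_counts = Counter()  # label -> number of training documents
    for words, label in docs:
        doc_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return vocab, word_counts, doc_counts

def log_score(words, label, vocab, word_counts, doc_counts):
    """log P(C_i) + sum over positions of log P(w | C_i), with add-one smoothing."""
    total_docs = sum(doc_counts.values())
    total_words = sum(word_counts[label].values())  # w(C_i)
    score = math.log(doc_counts[label] / total_docs)
    for w in words:
        if w in vocab:  # words outside the fixed vocabulary are skipped
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
    return score

def classify_text(words, vocab, word_counts, doc_counts):
    """Return the class with the highest log score."""
    return max(doc_counts, key=lambda c: log_score(words, c, vocab, word_counts, doc_counts))

# Hypothetical toy corpus of tokenized reviews.
docs = [
    (["great", "plot", "great", "cast"], "like"),
    (["boring", "plot"], "dislike"),
    (["great", "fun"], "like"),
    (["boring", "dull", "cast"], "dislike"),
]
vocab, word_counts, doc_counts = train_text(docs)
```

Working in log space avoids numerical underflow on long documents, since a product of many small probabilities quickly rounds to zero.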

30

Happy Deepawali!

4th Nov, 2002.

31

Using M-estimates to improve probability estimates

- The simple frequency-based estimation of $P(A_j = v_j \mid C_i)$ can be inaccurate, especially when the true value is close to zero and the number of training examples is small (so the probability that your examples don't contain rare cases is quite high).
- Solution: use the M-estimate
  - $P(A_j = v_j \mid C_i) = \dfrac{\#(C_i, A_j = v_j) + m\,p}{\#(C_i) + m}$
  - $p$ is the prior probability of $A_j$ taking the value $v_j$.
    - If we don't have any background information, assume uniform probability (that is, $1/d$ if $A_j$ can take $d$ values).
  - $m$ is a constant, called the "equivalent sample size".
    - If we believe that our sample set is large enough, we can keep $m$ small. Otherwise, keep it large.
    - Essentially we are augmenting the $\#(C_i)$ normal samples with $m$ more virtual samples drawn according to the prior probability on how $A_j$ takes values.
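The M-estimate is a one-line formula, sketched below with a hypothetical function name. The usage note assumes, for illustration only, that the Patrons attribute has three possible values (so $p = 1/3$) and that no positive training example has Patrons=none.

```python
def m_estimate(joint_count, class_count, p, m):
    """M-estimate of P(A_j = v_j | C_i).

    joint_count: #(C_i, A_j = v_j)
    class_count: #(C_i)
    p: prior probability of the attribute value (1/d for a uniform prior)
    m: equivalent sample size (number of virtual samples)
    """
    return (joint_count + m * p) / (class_count + m)
```

With 6 positive examples and none of them having Patrons=none, the raw frequency is 0/6 = 0, which would veto the class outright. Calling `m_estimate(0, 6, 1/3, 3)` instead gives 1/9, a small but nonzero probability. Setting `m` to 0 recovers the plain frequency estimate.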

32

Extensions to Naïve Bayes idea

- Vector of Bags model
  - E.g. books have several different fields that are all text
    - Authors, description, ...
  - A word appearing in one field is different from the same word appearing in another.
  - Want to keep each bag different: a vector of $m$ bags.
- Additional useful terms
  - Odds ratio
    - $P(\text{rel} \mid \text{example}) \,/\, P(\neg\text{rel} \mid \text{example})$
    - An example is positive if the odds ratio is $> 1$.
  - Strength of a keyword
    - $\log\left[ P(w \mid \text{rel}) \,/\, P(w \mid \neg\text{rel}) \right]$
    - We can summarize a user's profile in terms of the words that have strength above some threshold.
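As a small illustration of keyword strength, the sketch below ranks words by $\log[P(w \mid \text{rel}) / P(w \mid \neg\text{rel})]$ and keeps those above a threshold. The function names, the probabilities, and the threshold are made up for the example; the probabilities are assumed to be nonzero (for instance, smoothed estimates).

```python
import math

def keyword_strength(p_w_rel, p_w_nonrel):
    """Log ratio of a word's probability in relevant vs. non-relevant items."""
    return math.log(p_w_rel / p_w_nonrel)

def profile_keywords(word_probs, threshold):
    """Return the words whose strength exceeds the threshold, strongest first.

    word_probs: dict word -> (P(w | rel), P(w | not rel)), both nonzero.
    """
    strengths = {w: keyword_strength(pr, pn) for w, (pr, pn) in word_probs.items()}
    strong = [w for w, s in strengths.items() if s > threshold]
    return sorted(strong, key=lambda w: strengths[w], reverse=True)

# Hypothetical per-word probabilities for one user's profile.
word_probs = {
    "robot": (0.04, 0.005),
    "love": (0.01, 0.03),
    "space": (0.03, 0.01),
    "the": (0.06, 0.06),
}
```

A word that is equally likely in both classes, like "the" here, has strength 0 and says nothing about the user's taste, while a negative strength marks a word that signals dislike.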

33

How Well (and WHY) DOES NBC WORK?

- The naïve Bayes classifier is darned easy to implement.
- Good learning speed, classification speed
- Modest space storage
- Supports incrementality
  - Recommendations are re-done as more attribute values of the new item become known.
- It seems to work very well in many scenarios.
  - Peter Norvig, the director of Machine Learning at Google, said, when asked what sort of technology they use: "Naïve Bayes".
- But WHY?
  - Domingos/Pazzani 1996 showed that NBC has a much wider range of applicability than previously thought (despite using the independence assumption).
  - Classification accuracy is different from probability estimate accuracy.

34

Combining Content and Collaboration

- Content-based and collaborative methods have complementary strengths and weaknesses.
- Combine methods to obtain the best of both.
- Various hybrid approaches
  - Apply both methods and combine recommendations.
  - Use collaborative data as content.
  - Use content-based predictor as another collaborator.
  - Use content-based predictor to complete collaborative data.

35

Movie Domain

- EachMovie Dataset (Compaq Research Labs)
  - Contains user ratings for movies on a 0-5 scale.
  - 72,916 users (avg. 39 ratings each).
  - 1,628 movies.
  - Sparse user-ratings matrix (2.6% full).
- Crawled Internet Movie Database (IMDb)
  - Extracted content for titles in EachMovie.
- Basic movie information
  - Title, Director, Cast, Genre, etc.
- Popular opinions
  - User comments, Newspaper and Newsgroup reviews, etc.

36

Content-Boosted Collaborative Filtering

EachMovie

IMDb

37

Content-Boosted CF - I

38

Content-Boosted CF - II

[Figure labels: User Ratings Matrix, Content-Based Predictor, Pseudo User Ratings Matrix]

- Compute pseudo user ratings matrix
  - Full matrix approximates actual full user ratings matrix
- Perform CF
  - Using Pearson corr. between pseudo user-rating vectors
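The first step, building the pseudo user ratings matrix, can be sketched as follows: actual ratings are kept, and every missing entry is filled by a content-based prediction. This is only an outline under stated assumptions; the nested-dict layout, the function names, and especially `mean_predictor` (a stand-in that simply returns the user's average rating) are hypothetical and are not the content-based predictor used in the original work.

```python
def pseudo_ratings(actual, items, content_predict):
    """Build a dense pseudo user ratings matrix.

    actual: dict user -> dict item -> rating (sparse, assumed layout).
    items: list of all item ids.
    content_predict: callable (user, item) -> predicted rating (hypothetical hook
                     where a real content-based predictor would be plugged in).
    """
    return {
        user: {
            item: rated[item] if item in rated else content_predict(user, item)
            for item in items
        }
        for user, rated in actual.items()
    }

# Hypothetical sparse ratings.
actual = {"ann": {"m1": 5, "m2": 3}, "ben": {"m2": 4}}
items = ["m1", "m2", "m3"]

def mean_predictor(user, item):
    """Placeholder predictor: the user's mean rating, ignoring item content."""
    ratings = actual[user].values()
    return sum(ratings) / len(ratings)

full = pseudo_ratings(actual, items, mean_predictor)
```

Every row of `full` now covers every item, so correlations between users can be computed over all items rather than only the few that both users happened to rate.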

39

Conclusions

- Recommending and personalization are important approaches to combating information overload.
- Machine Learning is an important part of systems for these tasks.
- Collaborative filtering has problems.
- Content-based methods address these problems (but have problems of their own).
- Integrating both is best.