Simple Null Hypothesis

1

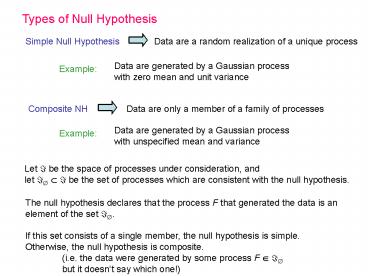

Types of Null Hypothesis

Simple Null Hypothesis: the data are a random realization of a unique process.

Example: the data are generated by a Gaussian process with zero mean and unit variance.

Composite NH: the data are generated by some member of a family of processes.

Example: the data are generated by a Gaussian process with unspecified mean and variance.

Let $\Omega$ be the space of processes under consideration, and let $\Omega_0 \subseteq \Omega$ be the set of processes that are consistent with the null hypothesis.

The null hypothesis declares that the process $F$ that generated the data is an element of the set $\Omega_0$. If this set consists of a single member, the null hypothesis is simple. Otherwise, the null hypothesis is composite (i.e., the data were generated by some process $F \in \Omega_0$, but the hypothesis doesn't say which one!).

2

Types of Errors

Type I Error

The hypothesis is rejected, but it is actually true (false positive).

Type II Error

The hypothesis is accepted, but it is actually false (false negative).

Given that the NH is actually true, the probability of making a Type I error is the significance level $\alpha$ of the test. We want this level (or size) to be as low as possible (typically $\alpha \le 0.05$).

Given that the NH is actually false, the probability of making a Type II error is $\beta$, and the power of the test is $1-\beta$. We want $\beta$ to be as low as possible, i.e., the power $1-\beta$ to be as high as possible.

3

Choice of Discriminating Statistic (T)

When the NH is composite, T should be pivotal.

What is a pivotal statistic? One whose distribution is the same for all members $F$ of the family $\Omega_0$ of processes consistent with the chosen NH.

Why a pivotal T? If T is pivotal, it does not matter which $F \in \Omega_0$ is used as the basis for generating realizations; all serve equally well.

In practice, however, most discriminating statistics used to investigate low-dimensional dynamical nonlinearity are non-pivotal. For such statistics, the method of constrained realizations is preferable to typical realizations.

4

There are infinitely many possible discriminating statistics! But what guidelines should govern the choice?

The primary requirement: the discriminating statistic should not be derived directly from the properties of the data that the surrogates preserve, such as the histogram, autocorrelation, and power spectrum (second-order properties).

Why so? These are linear properties, and surrogates, by definition, preserve the linear properties of the data, so a statistic built from them cannot distinguish the data from the surrogates.

Choose a statistic that

1. provides the maximum statistical power,
2. is possibly related to some deterministic structure (e.g., continuity, smoothness, differentiability),
3. addresses the predictability properties of the process, or
4. checks the temporal asymmetry of the data.

5

Typical vs. Constrained Realizations

Data: chaotic Henon model

Typical realizations: AR-surrogates with model order 6 (AIC criterion)

Discriminating statistic

A(1): autocorrelation at lag 1

FT-surrogates correctly reject the NH, but AR-surrogates do not. Across AR-surrogates, the nuisance parameter A(1) varies from one realization to the next, which inflates the variance of the discriminating statistic; FT-surrogates have a much smaller spread.
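As a minimal sketch of a constrained realization, the Python code below (assuming NumPy; the function names `henon` and `ft_surrogate` are our own) generates Henon data and one FT (phase-randomized) surrogate. Keeping the Fourier amplitudes and randomizing only the phases fixes the sample power spectrum, and therefore the circular sample autocorrelation, exactly for every surrogate, rather than only in distribution as AR-surrogates do.

```python
import numpy as np


def henon(n, a=1.4, b=0.3, discard=1000, seed=None):
    """Return n samples of the x-coordinate of the Henon map."""
    rng = np.random.default_rng(seed)
    x, y = rng.uniform(-0.1, 0.1, size=2)
    out = np.empty(n)
    for i in range(n + discard):
        x, y = 1.0 - a * x * x + y, b * x
        if i >= discard:  # drop the transient
            out[i - discard] = x
    return out


def ft_surrogate(x, seed=None):
    """FT surrogate: same Fourier amplitudes as x, uniformly random phases."""
    rng = np.random.default_rng(seed)
    n = len(x)
    spectrum = np.fft.rfft(x)
    phases = rng.uniform(0.0, 2.0 * np.pi, size=spectrum.shape)
    phases[0] = 0.0          # keep the zero-frequency term (the mean) unchanged
    if n % 2 == 0:
        phases[-1] = 0.0     # the Nyquist term must stay real for even n
    return np.fft.irfft(spectrum * np.exp(1j * phases), n=n)


data = henon(1024, seed=0)
surrogate = ft_surrogate(data, seed=1)
```

Calling `ft_surrogate` with different seeds yields the ensemble against which the discriminating statistic of `data` is compared.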

6

Size and Power of the Test

Power and size are estimated as the fraction of trials, out of 1000, in which the null hypothesis is rejected at the $\alpha = 0.05$ level.

(Figure panels: FT-surrogates, AR-surrogates)

Constrained realizations are more accurate (the size of the test stays close to the nominal $\alpha$) and more powerful than typical realizations.
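The size estimate above can be sketched as follows. This is a minimal illustration under our own assumptions: a one-sided rank test with $M = 1/\alpha - 1 = 19$ FT-surrogates (so the nominal size is $1/(M+1) = \alpha$), a hypothetical time-asymmetry statistic, and a linear Gaussian AR(1) generator for which the NH is true. Passing a generator for which the NH is false makes the same rejection fraction an estimate of the power.

```python
import numpy as np


def ft_surrogate(x, rng):
    """Phase-randomized surrogate with the same Fourier amplitudes as x."""
    spectrum = np.fft.rfft(x)
    phases = rng.uniform(0.0, 2.0 * np.pi, size=spectrum.shape)
    phases[0] = 0.0
    if len(x) % 2 == 0:
        phases[-1] = 0.0
    return np.fft.irfft(spectrum * np.exp(1j * phases), n=len(x))


def time_asymmetry(x):
    """Illustrative discriminating statistic: <(x[t+1] - x[t])**3>."""
    return float(np.mean(np.diff(x) ** 3))


def rejects(x, stat, rng, alpha=0.05):
    """One-sided rank test: reject if stat(x) exceeds the statistic of every surrogate.

    With M = 1/alpha - 1 surrogates, the nominal size is 1/(M + 1) = alpha.
    """
    m = int(round(1.0 / alpha)) - 1
    t_data = stat(x)
    return all(t_data > stat(ft_surrogate(x, rng)) for _ in range(m))


def rejection_fraction(generate, stat, trials=1000, alpha=0.05, seed=0):
    """Fraction of trials rejecting the NH: the size if the NH is true, else the power."""
    rng = np.random.default_rng(seed)
    hits = sum(rejects(generate(rng), stat, rng, alpha) for _ in range(trials))
    return hits / trials


def ar1(rng, n=512, phi=0.6, burn=100):
    """Linear Gaussian AR(1) realization, for which the NH is true."""
    e = rng.standard_normal(n + burn)
    x = np.zeros(n + burn)
    for t in range(1, n + burn):
        x[t] = phi * x[t - 1] + e[t]
    return x[burn:]
```

For example, `rejection_fraction(ar1, time_asymmetry)` should come out near 0.05 if the surrogate test has the correct size.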

7

Another Example: High-Dimensional Chaotic Time Series

Data: sum of five realizations of the Henon map, plus additive Gaussian noise

AR-surrogates: model order 8

Discriminating statistic

FT-surrogates detect the nonlinearity, but AR-surrogates do not.

8

Discriminating Statistic vs. Nuisance Parameters

(Figure legend: x, AR-surrogates, FT-surrogates)

T is nonpivotal, i.e., the discriminating statistic depends on the nuisance parameters (here, the autocorrelations at the first two lags).

9

Local Flow

Kaplan & Glass (1992) Phys. Rev. Lett.

For a chaotic (deterministic) system, the direction of motion is a function of position in phase space. Determinism also implies that points that are now close in phase space do not diverge very much over sufficiently short times. Over longer times, however, sensitive dependence on initial conditions starts to separate nearby trajectories.

- Reconstruct the state space with suitable embedding parameters.
- Coarse-grain (partition) the state space into boxes.
- Each grid box $j$ contains $n_j$ points with time indices $t_{j,k}$, $k = 1, 2, \ldots, n_j$.
- For each point, the displacement over a given translation horizon $t_p$ is estimated as $\Delta x_{j,k} = x(t_{j,k}+t_p) - x(t_{j,k})$.
- The average displacement of the points in each box yields a family of displacement magnitudes $L_n^d$ for each embedding dimension $d$ and value of $n = n_j$.
- The expectation value of $L_n^d$ for a random system is $c_d/\sqrt{n_j}$, where $c_d = (2/d)^{1/2}\,\Gamma\big((d+1)/2\big)/\Gamma(d/2)$.
- Averaging over all values of $n$ at a given embedding dimension gives the statistic $\Lambda$.

For a deterministic system, $\Lambda \approx 1$, while for a random walk, $\Lambda \approx 0$.
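The random-system reference value $c_d/\sqrt{n}$ can be checked numerically. The Python sketch below (assuming NumPy; the function names are our own) assumes that each displacement is first normalized to unit length, so that for a random system the box average is the mean of $n$ isotropic random unit vectors in $d$ dimensions. For large $n$ that mean is approximately Gaussian with covariance $I/(dn)$, and its expected length approaches $c_d/\sqrt{n}$.

```python
import math

import numpy as np


def c_d(d):
    """c_d = (2/d)**0.5 * Gamma((d + 1)/2) / Gamma(d/2)."""
    return math.sqrt(2.0 / d) * math.gamma((d + 1) / 2.0) / math.gamma(d / 2.0)


def random_expectation(n, d):
    """Expected length of the average of n random unit vectors (large-n limit)."""
    return c_d(d) / math.sqrt(n)


def mean_resultant_length(n, d, trials=20000, seed=0):
    """Monte Carlo: average length of the mean of n isotropic unit vectors in d dims."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal((trials, n, d))
    v /= np.linalg.norm(v, axis=2, keepdims=True)   # project onto the unit sphere
    return float(np.linalg.norm(v.mean(axis=1), axis=1).mean())
```

For $d = 1$ this reduces to $c_1 = \sqrt{2/\pi}$, the familiar mean absolute displacement of a $\pm 1$ random walk scaled by $1/n$.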

10

Local Dispersion Method

Wayland et al. (1993) Phys. Rev. Lett.

Choose a reference vector $x_0$ and find its $k$ nearest neighbors $x_1, \ldots, x_k$. Let $y_0, \ldots, y_k$ denote the images of these vectors. The translation differences between the vectors and their images are $v_j = y_j - x_j$. Compute their average

$$\bar v = \frac{1}{k+1}\sum_{j=0}^{k} v_j,$$

and then the translation error

$$e_{trans} = \frac{1}{k+1}\sum_{j=0}^{k}\frac{\lVert v_j - \bar v\rVert^2}{\lVert \bar v\rVert^2}.$$

The translation error measures the normalized spread of the displacements around each reference vector, relative to the average displacement.

Choose $N_{ref}$ reference vectors and compute the translation error for each. The average (or median) of these values provides a robust measure of the underlying deterministic structure. For a deterministic series, the $v_j$ are nearly equal and $e_{trans}$ is small. For a stochastic series, $e_{trans}$ is large.
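A minimal Python sketch of the translation error, assuming NumPy, a forward delay embedding, Euclidean distances, images defined as the delay vectors `horizon` steps later, and hypothetical function names. It returns the median over randomly chosen reference vectors, the robust option mentioned above.

```python
import numpy as np


def delay_embed(x, d, tau):
    """Rows are delay vectors [x_t, x_{t+tau}, ..., x_{t+(d-1)tau}]."""
    n = len(x) - (d - 1) * tau
    return np.column_stack([x[i * tau:i * tau + n] for i in range(d)])


def translation_error(x, d=3, tau=1, k=4, n_ref=200, horizon=1, seed=0):
    """Median translation error e_trans over n_ref randomly chosen reference vectors."""
    rng = np.random.default_rng(seed)
    emb = delay_embed(np.asarray(x, dtype=float), d, tau)
    pts, imgs = emb[:-horizon], emb[horizon:]   # vectors x_j and their images y_j
    errors = []
    for r in rng.choice(len(pts), size=n_ref, replace=False):
        dist = np.linalg.norm(pts - pts[r], axis=1)
        dist[r] = np.inf                          # the reference is not its own neighbour
        idx = np.concatenate(([r], np.argsort(dist)[:k]))  # x_0 plus k nearest neighbours
        v = imgs[idx] - pts[idx]                  # translation vectors v_j = y_j - x_j
        v_bar = v.mean(axis=0)
        spread = np.mean(np.sum((v - v_bar) ** 2, axis=1))
        errors.append(spread / np.sum(v_bar ** 2))
    return float(np.median(errors))
```

Comparing `translation_error` for the data with its values for a set of surrogates gives the local-dispersion test.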

11

Simple Nonlinear Prediction

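Choose a zeroth-order nonlinear predictor, i.e., fit local constant maps to the attractor.

For each reference vector $x_r$, find a fixed number $k$ of neighbors $x_j$, $j = 1, \ldots, k$.

Then the predicted value for prediction horizon $t_p$ is the average of the neighbors' images,

$$\hat x_{r+t_p} = \frac{1}{k}\sum_{j=1}^{k} x_{j+t_p}.$$

The prediction error is

$$\sigma_{pred} = \big\langle (\hat x_{r+t_p} - x_{r+t_p})^2 \big\rangle^{1/2}.$$

If we use the mean $\langle x\rangle$ as the prediction, the error will be

$$\sigma_{mean} = \big\langle (\langle x\rangle - x_{r+t_p})^2 \big\rangle^{1/2}.$$

The normalized prediction error is then $NPE = \sigma_{pred}/\sigma_{mean}$.

For a deterministic series, NPE is close to 0; for a stochastic series, NPE is close to 1.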
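The zeroth-order predictor and its NPE can be sketched in Python as follows. Assumptions of ours: NumPy, a forward delay embedding, Euclidean distances, hypothetical function names, and an out-of-sample split in which the first half of the delay vectors serves as the neighbor library and the second half as reference vectors.

```python
import numpy as np


def delay_embed(x, d, tau):
    """Rows are delay vectors [x_t, x_{t+tau}, ..., x_{t+(d-1)tau}]."""
    n = len(x) - (d - 1) * tau
    return np.column_stack([x[i * tau:i * tau + n] for i in range(d)])


def normalized_prediction_error(x, d=2, tau=1, k=5, tp=1):
    """NPE of a local constant predictor (first half = library, second half = test)."""
    x = np.asarray(x, dtype=float)
    emb = delay_embed(x, d, tau)
    last = (d - 1) * tau
    vecs = emb[:len(emb) - tp]          # vectors that have a value t_p steps ahead
    future = x[last + tp:]              # value t_p steps after each vector's last coordinate
    half = len(vecs) // 2
    lib_v, lib_f = vecs[:half], future[:half]
    test_v, test_f = vecs[half:], future[half:]
    preds = np.empty(len(test_v))
    for i, v in enumerate(test_v):
        dist = np.linalg.norm(lib_v - v, axis=1)
        nn = np.argpartition(dist, k)[:k]   # indices of the k nearest library vectors
        preds[i] = lib_f[nn].mean()         # local constant prediction
    sigma_pred = np.sqrt(np.mean((preds - test_f) ** 2))
    sigma_mean = np.sqrt(np.mean((lib_f.mean() - test_f) ** 2))  # predict with the mean
    return float(sigma_pred / sigma_mean)
```

Using separate library and test halves keeps a point from being predicted by itself; other choices, such as leave-one-out with a temporal exclusion window, are equally reasonable.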

12

Kaplan's δ-ε

Kaplan (1994) Physica D

This method examines the trajectories of points in the embedding space for exceptional events that track each other closely when translated. In other words, if two points are close together on a trajectory, the images of the points some short time later are more likely to be close together if the system is deterministic than if it is not.

Let the distance between a pair of vectors be $\delta_{i,j} = \lVert x_i - x_j \rVert$ (as usual, you can exclude pairs with $|i-j| < \tau_c$, the correlation time).

The distance between the images of the vectors after $k$ time steps is $\varepsilon_{i,j} = \lVert x_{i+k} - x_{j+k} \rVert$.

The average separation of the images, for pairs whose initial separation falls in a bin around $r$, is $E(r) = \langle \varepsilon_{i,j} \rangle$ over pairs with $r \le \delta_{i,j} < r + \Delta r$.

Stochastic systems are expected to have values of $\varepsilon_{i,j}$ that are independent of the previous separations $\delta_{i,j}$, so $E(r)$ vs. $r$ tends to be a line with zero slope. For deterministic systems, $E(r)$ is small for small $r$ and increases with $r$ up to a maximum that depends on the size of the trajectory.
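A minimal Python sketch of the δ-ε profile, assuming NumPy, Euclidean distances, a random subsample of pairs, and hypothetical function names. As a convenience of ours, the δ axis is divided into equal-count bins rather than fixed-width bins of size $\Delta r$.

```python
import numpy as np


def delay_embed(x, d, tau):
    """Rows are delay vectors [x_t, x_{t+tau}, ..., x_{t+(d-1)tau}]."""
    n = len(x) - (d - 1) * tau
    return np.column_stack([x[i * tau:i * tau + n] for i in range(d)])


def delta_epsilon(x, d=1, tau=1, k=1, tc=10, n_bins=20, n_pairs=20000, seed=0):
    """Return (r, E): mean delta and mean epsilon in equal-count bins of delta."""
    rng = np.random.default_rng(seed)
    emb = delay_embed(np.asarray(x, dtype=float), d, tau)
    n = len(emb) - k                               # vectors that have an image k steps later
    i = rng.integers(0, n, size=n_pairs)
    j = rng.integers(0, n, size=n_pairs)
    keep = np.abs(i - j) > tc                      # drop temporally correlated pairs
    i, j = i[keep], j[keep]
    delta = np.linalg.norm(emb[i] - emb[j], axis=1)
    eps = np.linalg.norm(emb[i + k] - emb[j + k], axis=1)
    edges = np.quantile(delta, np.linspace(0.0, 1.0, n_bins + 1))
    which = np.clip(np.searchsorted(edges, delta, side="right") - 1, 0, n_bins - 1)
    r = np.array([delta[which == b].mean() for b in range(n_bins)])
    E = np.array([eps[which == b].mean() for b in range(n_bins)])
    return r, E
```

For a deterministic series the first entries of `E` are well below the rest, while for white noise `E` stays roughly flat across all bins.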

13

Examples Simulated Time Series

Chang et al. (1995) Chaos

- Henon Map

- Lorenz Flow

- Mackey-Glass Equation

Add in-band or colored noise to each

deterministic time series.

14


15

Discriminating Statistic

- Local flow: the value of $\Lambda$ should be greater than for the surrogates.
- Local dispersion: the value of $\langle e_{trans}\rangle$ should be smaller than for the surrogates.
- δ-ε profile: the ε values for small δ should be smaller than for the surrogates.
- Local prediction: the value of NPE should be smaller than for the surrogates.

Choice of Embedding Parameters

Two suggestions:

- Use a proper embedding procedure to obtain valid $d$ and $\tau$.
- Choose parameters that give a limited spread of values across different realizations of the same process, even if the embedding is not strictly valid at these parameters.

16

Example Lorenz Flow, 25% Noise

(Figure panels: data and surrogates, plotted against $t_p$ and $d$)

17

Example Henon Map

18

Example Lorenz Flow

19

Example Mackey-Glass System

20

Measure of Smoothness

- Find the directional vectors $X_t = x_{t+1} - x_t$.
- Find the angle between successive directional vectors.
- Compute the smoothness index $s$.
- The lower the value of $s$, the higher the degree of smoothness.
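The first two steps can be sketched in Python, assuming NumPy and an already reconstructed state space `emb` with one vector per row; the function name is our own. The sketch stops at the sequence of angles, from which the smoothness index $s$ is then computed.

```python
import numpy as np


def successive_angles(emb):
    """Angles (radians) between successive directional vectors X_t = x_{t+1} - x_t."""
    emb = np.asarray(emb, dtype=float)
    X = np.diff(emb, axis=0)                 # directional vectors, one per row
    a, b = X[:-1], X[1:]
    cos = np.sum(a * b, axis=1) / (np.linalg.norm(a, axis=1) * np.linalg.norm(b, axis=1))
    return np.arccos(np.clip(cos, -1.0, 1.0))  # clip guards against rounding past +/-1


t = np.linspace(0.0, 2.0 * np.pi, 100, endpoint=False)
circle = np.column_stack([np.cos(t), np.sin(t)])
angles = successive_angles(circle)
```

For this uniformly sampled circle every angle equals $2\pi/100$, the smoothest possible case; for a noisy trajectory the angles scatter widely.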

21

Example Chaotic Rössler Equation

State space plot

Angles between Successive vectors

22

Example Chaotic Rössler Equation

Original: s = 0.01 (panels: histogram, angle variation, power spectrum)

Surrogate: s = 0.39 (panels: histogram, angle variation, power spectrum)

23

Paluš (1995) Physica D

Redundancy

- It measures how much repetition of information occurs in the measurements.
- Low-dimensional deterministic dynamics produce repeatable and distinct patterns, and hence redundancy, because measurements made in the past provide information about the present and future states.
- For random or stochastic dynamics, repetition happens only by chance! Subsequent measurements provide fresh, new information.

If $p_r$ is the probability that the time series takes a value between $x$ and $x + r$, then

$$H(1,r) = -\sum p_r \log_2 p_r,$$

where the sum runs over all bins of width $r$. $H(1,r)$ is the average number of bits needed to describe a single value of the time series with precision $r$, i.e., the Shannon entropy. Let $H(d,r)$ be the average number of bits needed to describe a sequence of $d$ values (i.e., the number of bits in a $d$-dimensional embedding).

24

In general,

$$H(d,r) \le d\,H(1,r),$$

because describing a sequence of $d$ values cannot take more bits than describing $d$ individual values. The difference is the redundancy

$$R(d,r) = d\,H(1,r) - H(d,r).$$

The block entropy can also be defined through the correlation sum $C_d(r)$ as $H_2(d,r) = -\log C_d(r)$, which gives the redundancy $R_2(d,r) = d\,H_2(1,r) - H_2(d,r)$. In practice, the following formula is used to estimate redundancy
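A minimal Python sketch of $R_2(d,r)$ from correlation sums, assuming NumPy, the maximum norm, natural logarithms rather than bits, and hypothetical helper names. For independent values, $C_d(r) \approx C_1(r)^d$ under the maximum norm, so $R_2 \approx 0$; dynamical dependence between successive values makes $R_2$ positive.

```python
import numpy as np


def delay_embed(x, d, tau):
    """Rows are delay vectors [x_t, x_{t+tau}, ..., x_{t+(d-1)tau}]."""
    n = len(x) - (d - 1) * tau
    return np.column_stack([x[i * tau:i * tau + n] for i in range(d)])


def correlation_sum(emb, r):
    """Fraction of distinct pairs of rows closer than r in the maximum norm."""
    n = len(emb)
    close = 0
    for i in range(n - 1):
        dist = np.max(np.abs(emb[i + 1:] - emb[i]), axis=1)
        close += np.count_nonzero(dist < r)
    return close / (n * (n - 1) / 2)


def redundancy_r2(x, d, r, tau=1):
    """R_2(d, r) = d * H_2(1, r) - H_2(d, r), with H_2(d, r) = -ln C_d(r)."""
    x = np.asarray(x, dtype=float)
    h1 = -np.log(correlation_sum(delay_embed(x, 1, tau), r))
    hd = -np.log(correlation_sum(delay_embed(x, d, tau), r))
    return float(d * h1 - hd)
```

The pairwise loop is O(N²), which is fine for a few thousand points; longer series would need a tree-based neighbor search.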

25

Approximate Entropy

Pincus (1991) PNAS

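- Construct a multidimensional state space from each signal: $\mathbf{x}_t = [x_t, x_{t-\tau}, x_{t-2\tau}, \ldots, x_{t-(d-1)\tau}]$.
- Find, for each vector $\mathbf{x}_i$, the fraction of vectors within distance $r$ (maximum norm): $C_i^d(r) = \#\{j : \lVert \mathbf{x}_j - \mathbf{x}_i\rVert_\infty \le r\} / N_d$, where $N_d = N - (d-1)\tau$ is the number of vectors.
- Compute $\Phi^d(r) = \frac{1}{N_d}\sum_{i} \ln C_i^d(r)$.
- Approximate entropy: $\mathrm{ApEn}(d,r,N) = \Phi^d(r) - \Phi^{d+1}(r)$.
- The lower the value of ApEn, the higher the degree of recurrence.
- Practical choice: $d = 2$, $r = 0.15 \times$ standard deviation (or three times the mean noise level).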
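The recipe above can be sketched in Python, assuming NumPy, $\tau = 1$ (as in Pincus's original definition), the maximum norm, self-matches included, and a function name of our own.

```python
import numpy as np


def apen(x, d=2, r=None):
    """Approximate entropy ApEn(d, r, N) with tau = 1, maximum norm, self-matches included."""
    x = np.asarray(x, dtype=float)
    if r is None:
        r = 0.15 * np.std(x)                 # the practical choice quoted above

    def phi(m):
        n = len(x) - m + 1
        emb = np.column_stack([x[i:i + n] for i in range(m)])
        # C_i^m(r): fraction of vectors within r of vector i (vector i itself counts)
        c = np.array([np.mean(np.max(np.abs(emb - v), axis=1) <= r) for v in emb])
        return np.mean(np.log(c))

    return float(phi(d) - phi(d + 1))
```

Because ApEn is biased and depends on $d$, $r$, and $N$, compare values only at identical settings, e.g., the data and its surrogates with the same `d`, `r`, and length.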

26

1. ApEn is a nonnegative and biased quantity. Thus, ApEn values should always be compared using the same parameter settings.
2. For a stochastic process, the K-S entropy is infinite, but ApEn is finite.
3. For any two systems A and B, the following typically holds:
(a) If $h_1(A) < h_1(B)$, then $\mathrm{ApEn}(d,r,N)(A) < \mathrm{ApEn}(d,r,N)(B)$.
(b) If $\mathrm{ApEn}(d,r,N)(A) < \mathrm{ApEn}(d,r,N)(B)$, then $\mathrm{ApEn}(d_1,r_1,N)(A) < \mathrm{ApEn}(d_1,r_1,N)(B)$.

27

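Barahona & Poon (1996) Nature

Polynomial-Based Prediction

A Volterra-based approach, but with a closed loop (feedback).

Model with degree $d$ and memory $\kappa$:

$$x_n^{calc} = a_0 + a_1 x_{n-1} + \cdots + a_\kappa x_{n-\kappa} + a_{\kappa+1} x_{n-1}^2 + a_{\kappa+2} x_{n-1}x_{n-2} + \cdots + a_{M-1} x_{n-\kappa}^d = \sum_{m=0}^{M-1} a_m z_m(n)$$

The functional basis $z_m(n)$ is composed of all the distinct combinations of the embedding-space coordinates $(x_{n-1}, x_{n-2}, \ldots, x_{n-\kappa})$ up to degree $d$, with total dimension $M = (\kappa+d)!/(d!\,\kappa!)$.

The short-term prediction power of the model is then measured by the one-step-ahead prediction error

$$\varepsilon(r)^2 = \frac{\sum_n \big(x_n^{calc}(r) - x_n\big)^2}{\sum_n \big(x_n - \langle x\rangle\big)^2}.$$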

28

The best model $(\kappa_{opt}, d_{opt})$ is found by minimizing the information criterion

$$C(r) = \log \varepsilon(r) + r/N,$$

where $r \in [1, M]$ is the number of polynomial terms of the truncated Volterra expansion for a given parameter set $(\kappa, d)$, and $N$ is the length of the series.

In practice:

- Obtain the best linear model by searching for the $\kappa_{lin}$ that minimizes $C(r)$ with $d = 1$.
- Repeat with increasing $\kappa$ and $d > 1$ to obtain the best nonlinear model.
- Nonlinear determinism is indicated if (i) $d_{opt} > 1$ and (ii) the best nonlinear model is a better predictor than both the best linear model and the best linear and nonlinear models obtained from the surrogates.
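A minimal Python sketch of the closed-loop Volterra fit, assuming NumPy, ordinary least squares, and hypothetical function names. For simplicity it evaluates $C(r)$ only for the full model with $r = M$ terms (and uses the number of fitted targets for $N$), rather than scanning all truncations $r \in [1, M]$.

```python
import itertools
import math

import numpy as np


def volterra_design(x, kappa, degree):
    """Columns z_m(n): all monomials of (x_{n-1}, ..., x_{n-kappa}) up to `degree`."""
    rows = len(x) - kappa
    lags = np.column_stack([x[kappa - l:kappa - l + rows] for l in range(1, kappa + 1)])
    cols = [np.ones(rows)]                                  # constant term a_0
    for deg in range(1, degree + 1):
        for combo in itertools.combinations_with_replacement(range(kappa), deg):
            cols.append(np.prod(lags[:, list(combo)], axis=1))
    return np.column_stack(cols), x[kappa:]


def information_criterion(x, kappa, degree):
    """C(r) = log(eps(r)) + r/N for the full model with r = M terms."""
    Z, target = volterra_design(np.asarray(x, dtype=float), kappa, degree)
    coef, *_ = np.linalg.lstsq(Z, target, rcond=None)
    resid = Z @ coef - target
    eps2 = np.sum(resid ** 2) / np.sum((target - target.mean()) ** 2)
    return 0.5 * np.log(eps2) + Z.shape[1] / len(target)   # log(eps) = 0.5 * log(eps^2)
```

The design matrix has exactly $M = (\kappa+d)!/(d!\,\kappa!)$ columns, the number of monomials of degree at most $d$ in $\kappa$ variables.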

29

Example Chaotic ecological model with strong

periodic component

NH3 laser pulsations

Intensity of a star

30

Practical Remarks

1. Impulse Noise

A sudden spike or impulse has a damaging effect on surrogates.

An impulse has a white (flat) power spectrum, so after phase randomization its power is spread throughout the surrogate as white noise.

Recipe: remove these spikes if they are not of interest.

31

2. Endpoint Mismatch

Any standard FFT program assumes that the time series is periodic, with the available data sequence being just one period of an assumed infinite time series. This means that the end points of the time series are assumed to be equal. If they are not, a discontinuity results, which introduces spurious high-frequency power into the power spectrum.

Recipe: choose a subset of the time series such that the end points match.

Example: $x_n = 1.9x_{n-1} - 0.9001x_{n-2} + \eta_n$

(Figure panels: effect of endpoint mismatch; after removal of endpoint mismatch)
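A minimal Python sketch of the recipe, using the AR(2) example above with Gaussian $\eta_n$ (our assumption). Assuming NumPy and hypothetical function names, it searches all start positions for a segment of fixed length whose first and last values are closest; a refinement could also match the slopes at the two ends.

```python
import numpy as np


def ar2(n, seed=0, burn=1000):
    """x_n = 1.9 x_{n-1} - 0.9001 x_{n-2} + eta_n with Gaussian white eta_n."""
    rng = np.random.default_rng(seed)
    eta = rng.standard_normal(n + burn)
    x = np.zeros(n + burn)
    for t in range(2, n + burn):
        x[t] = 1.9 * x[t - 1] - 0.9001 * x[t - 2] + eta[t]
    return x[burn:]


def matched_segment(x, length):
    """Contiguous segment of the given length whose end points are closest in value."""
    x = np.asarray(x, dtype=float)
    starts = np.arange(len(x) - length + 1)
    jump = np.abs(x[starts] - x[starts + length - 1])   # end-point mismatch per start
    s = int(np.argmin(jump))
    return s, x[s:s + length]


x = ar2(4096)
start, segment = matched_segment(x, 3600)
```

Surrogates are then generated from `segment` instead of the full series, so the implicit periodic continuation has no jump at the boundary.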

32

3. Slow Drifts

If a trend is present in the signal, the phase-randomized surrogate looks quite different from the original signal.

Recipe: 1. Remove the trend. 2. Compute the surrogate of the detrended series. 3. Add the trend back.
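The three-step recipe can be sketched in Python. Assumptions of ours: NumPy, a polynomial trend fitted by least squares (`np.polyfit`), and an FT surrogate of the residual; the function name is hypothetical.

```python
import numpy as np


def detrended_ft_surrogate(x, degree=1, seed=None):
    """Fit a polynomial trend, phase-randomize the residual, then add the trend back."""
    x = np.asarray(x, dtype=float)
    t = np.arange(len(x))
    trend = np.polyval(np.polyfit(t, x, degree), t)   # step 1: estimate the trend
    spectrum = np.fft.rfft(x - trend)                  # step 2: FT surrogate of the residual
    rng = np.random.default_rng(seed)
    phases = rng.uniform(0.0, 2.0 * np.pi, size=spectrum.shape)
    phases[0] = 0.0
    if len(x) % 2 == 0:
        phases[-1] = 0.0
    resid_surr = np.fft.irfft(spectrum * np.exp(1j * phases), n=len(x))
    return resid_surr + trend, trend                   # step 3: add the trend back
```

The surrogate keeps both the trend and the amplitude spectrum of the detrended residual, so it no longer differs from the data merely because of the drift.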

33

4. Padding

A common approach when computing the FFT of a time series is to pad the data sequence with zeros so that its length is a power of 2. But the padded zeros introduce structure into the surrogate that is not present in the original data, which is likely to increase the rate of false positives.

Recipe: use a Fourier transform program that can be applied to the exact length of the data, or truncate the data sequence to the right length (e.g., a power of 2).

34

Multivariate Surrogates

Prichard & Theiler (1996) Physica D

Suppose we have $M$ simultaneously measured time series $x_1(t), x_2(t), \ldots, x_M(t)$, and let $X_1(f), X_2(f), \ldots, X_M(f)$ be their Fourier transforms.

The cross-correlation between the $j$th and $k$th time series is

$$C_{jk}(\tau) = \langle x_j(t)\,x_k(t+\tau)\rangle.$$

For a linear Gaussian multidimensional process, all relevant information about the process is given by these cross-correlations.

Now, the cross-spectrum will be

$$X_j^*(f)\,X_k(f) = A_j(f)\,A_k(f)\,e^{i[\phi_k(f) - \phi_j(f)]},$$

where $A(f)$ is the Fourier amplitude and $\phi(f)$ is the phase angle.

35

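We need to preserve (i) the linear autocorrelations and (ii) the linear cross-correlations.

Add the same random phase sequence $\psi(f)$ to $\phi_j(f)$ for $j = 1, \ldots, M$; the phase differences $\phi_k(f) - \phi_j(f)$, and hence the cross-spectra, are unchanged.

Then the surrogates can be obtained by

$$\tilde x_j(t) = \mathcal{F}^{-1}\big\{A_j(f)\,e^{i[\phi_j(f) + \psi(f)]}\big\}.$$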
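A minimal Python sketch of multivariate surrogates, assuming NumPy, real-valued series stored as the rows of an $(M, N)$ array, and a function name of our own. One phase vector $\psi(f)$ is drawn and broadcast over all channels, which leaves every amplitude spectrum and every cross-spectrum unchanged.

```python
import numpy as np


def multivariate_ft_surrogate(xs, seed=None):
    """xs has shape (M, N): M simultaneously measured series of length N.

    The same random phase psi(f) is added to every channel, so each channel keeps its
    amplitude spectrum and every cross-spectrum X_j^* X_k is unchanged.
    """
    xs = np.asarray(xs, dtype=float)
    n = xs.shape[1]
    spectra = np.fft.rfft(xs, axis=1)
    rng = np.random.default_rng(seed)
    psi = rng.uniform(0.0, 2.0 * np.pi, size=spectra.shape[1])
    psi[0] = 0.0                     # keep the means
    if n % 2 == 0:
        psi[-1] = 0.0                # Nyquist terms stay real
    return np.fft.irfft(spectra * np.exp(1j * psi), n=n, axis=1)  # psi broadcasts over channels
```

Drawing an independent phase vector per channel instead would preserve each autocorrelation but destroy the cross-correlations, which corresponds to surrogates without spatial correlations.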

36

Example Chromospheric Oscillations

Bhattacharya et al. (2001) Sol. Phys.

Surrogates without Spatial Correlations

Surrogates with Spatial Correlations

(Each panel compares the data with the surrogates.)

37

Casdagli (1992) J. R. Stat. Soc.

DVS Algorithm

1. Normalize the time series to zero mean and unit variance.
2. Divide the time series into two parts: (a) a training (fitting) set to estimate the model coefficients, and (b) a test (out-of-sample) set to evaluate the model. $N_f$ is the number of points in the fitting set and $N_t$ the number of points in the test set.
3. Choose $T$ (prediction horizon) and $d$ (embedding dimension).
4. Choose a test delay vector $x_i$ ($i > N_f$) for a $T$-step-ahead prediction task. Compute the distances $d_{ij}$ of the test vector $x_i$ from the training vectors $x_j$ for all $j$ such that $(d-1)\tau < j < i - T$.
5. Order the distances $d_{ij}$ and find the $k$ nearest neighbors $x_{j(1)}$ through $x_{j(k)}$ of $x_i$.

38

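6. The state $T$ steps ahead of $x_i$ is then predicted with the model

$$\hat x_{i+T} = a_0 + \sum_{m=1}^{d} a_m\, x_{i-(m-1)\tau},$$

where the prediction coefficients $a_0, a_1, \ldots, a_d$ are estimated by least squares from the $k$ nearest neighbors, i.e., by fitting $x_{j(l)+T} \approx a_0 + \sum_{m=1}^{d} a_m\, x_{j(l)-(m-1)\tau}$ for $l = 1, \ldots, k$. This is equivalent to a locally linear autoregressive model of order $d$ fitted to the $k$ nearest neighbors of the test point. Vary $k$ in the range $2(d+1) \le k \le N_f - T - (d-1)\tau$.

7. Compute the prediction error $e_i = \hat x_{i+T} - x_{i+T}$.

8. Repeat steps 4-7 as $i+T$ runs through the test set, and compute the mean absolute forecasting error

$$E_d(k) = \frac{1}{N_t}\sum_i \lvert e_i \rvert.$$

9. Vary $d$ and plot $E_d(k)$ as a function of the number of nearest neighbors $k$. Such a family of curves is called a DVS plot.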
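The steps above can be sketched in Python for one point of a DVS plot. Assumptions of ours: NumPy, Euclidean distances, a default split of the series into equal fitting and test halves, neighbors drawn only from training vectors whose targets $x_{j+T}$ lie inside the fitting set, and a hypothetical function name.

```python
import numpy as np


def dvs_error(x, d, k, tau=1, T=1, n_fit=None):
    """Mean absolute out-of-sample error E_d(k) of a local linear predictor."""
    x = np.asarray(x, dtype=float)
    x = (x - x.mean()) / x.std()                      # step 1: zero mean, unit variance
    n_fit = len(x) // 2 if n_fit is None else n_fit   # step 2: training / test split
    lags = np.arange(d) * tau
    # delay vector ending at index i: [x_i, x_{i-tau}, ..., x_{i-(d-1)tau}]
    train_idx = np.arange((d - 1) * tau, n_fit - T)   # targets x_{j+T} stay in the fitting set
    test_idx = np.arange(max(n_fit, (d - 1) * tau), len(x) - T)
    train_vecs = x[train_idx[:, None] - lags]
    train_targets = x[train_idx + T]
    k = min(k, len(train_idx))                        # k = all vectors -> global linear model
    errors = []
    for i in test_idx:
        v = x[i - lags]
        dist = np.linalg.norm(train_vecs - v, axis=1)  # step 4: distances d_ij
        nn = np.argsort(dist)[:k]                      # step 5: k nearest neighbours
        A = np.column_stack([np.ones(k), train_vecs[nn]])
        coef, *_ = np.linalg.lstsq(A, train_targets[nn], rcond=None)  # step 6
        pred = coef[0] + v @ coef[1:]
        errors.append(abs(pred - x[i + T]))           # step 7: |e_i|
    return float(np.mean(errors))                     # step 8: E_d(k)
```

Evaluating `dvs_error` over a grid of `k` for several `d` and plotting the results gives the DVS family of curves.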

39

Example FIR Laser Data

(Figure: DVS plots, $E_d(k)$ vs. $k$)

40

Tokuda et al. (2002) Chaos

A Variant of DVS Plot

Here, one can estimate the in-sample prediction, i.e., using the whole data set. Let $\hat x_t$ be the predicted value of the original $x_t$, and $e_t = x_t - \hat x_t$ the residual error. The signal-to-noise ratio (SNR) is then computed from the signal $x_t$ and the residual $e_t$.

Here, noise refers to the unpredictable components within the data.

41

When $k$ is small, prediction accuracy is sensitive to the noise level ⇒ low SNR. With increasing $k$, prediction performance improves because the noise is averaged out while the nonlinear structure is still well modeled by the local linear predictor. At an intermediate value of $k$, the SNR reaches its maximum. With further increase of $k$, the model approaches a global linear predictor, which may be a bad model for a nonlinear process ⇒ low SNR. The degree of nonlinearity is related to the difference between the optimal nonlinear prediction and the global linear prediction.

42

Example Irregular Animal Vocalizations

Scream of Juvenile Macaque

DVS plot Juvenile Macaque

Call of Mother Macaque

DVS plot Mature Macaque

43

Surrogate Analysis DVS plot

Juvenile's scream

Mother's call