Title: METRICS

1

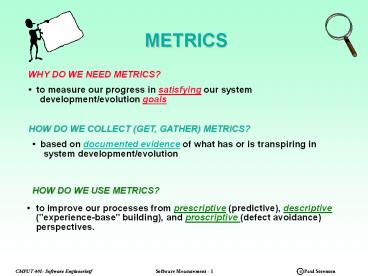

METRICS

WHY DO WE NEED METRICS?

to measure our progress in satisfying our system development/evolution goals

HOW DO WE COLLECT (GET, GATHER) METRICS?

based on documented evidence of what has transpired or is transpiring in system development/evolution

HOW DO WE USE METRICS?

to improve our processes from prescriptive (predictive), descriptive ("experience-base" building), and proscriptive (defect avoidance) perspectives.

2

IMPORTANT ASPECTS OF METRICS IN MANAGING QUALITY

In the SEI Maturity Model, the primary difference between Level 5 (optimizing processes) and lower levels is that tests are conducted during the process and not at the end.

Both the V-System Development Model and the Spiral Model support planned testing and continuous verification and validation. So do certain well-defined, well-controlled approaches to prototyping.

While project management tools (e.g., MS Project, Autoplan) are useful in assisting the project manager, they cannot be viewed as the only tools. Why?

For system testing, experience indicates that the best indicators focus on defect discovery and tracking.

3

Deciding on Software Metrics

BASED HEAVILY ON ROBERT GRADY'S BOOK Practical Software Metrics for Project Management and Process Improvement

What are the goals in developing successful software systems?

We wish to develop systems that ...

meet customer needs

are reliable (perform well)

are cost effective to build and evolve

meet the customer's desired schedule

4

GOAL/QUESTION/METRIC PARADIGM

A GOAL ELABORATION PROCESS DUE TO BASILI, ET AL. AT UNIV. OF MARYLAND (SEL PROJECT)

Goal1 ... Goaln

5

GOAL DEVELOPMENT

6

QUESTION GENERATION

Q1 What are the attributes of customer satisfaction?

Q2 What are the key indicators of customer satisfaction?

Q3 What aspects result in customer satisfaction?

Q4 How satisfied are the customers?

Q5 How do we compare with the competition?

Q6 How many problems are affecting customers?

Q7 How long does it take to fix a problem? (compared to customer expectation)

Q8 How does installing a fix affect the customer?

Q9 How many customers are affected by the problem? (by how much?)

Q10 Where are the bottlenecks?

7

Q1 What are the attributes of customer satisfaction?

Functionality, Usability, Reliability, Performance, Supportability (FURPS)

8

Q2-Q5 Sources of Customer Needs

Surveys

Define survey goals > questions (at least one per FURPS attribute) > how data will be analyzed and results presented. State or graph sample conclusions.

Test the survey and your data analysis before sending it out

Ask questions requiring simple answers, preferably quantitative or yes/no

Keep it short (one to two pages)

Make it very easy to return

Interviews

Generally more accurate and informative, but time-consuming and could be subject to bias.

15

MEASUREMENT PROGRAM PLANNING

Figure: FURPS planned versus actual tracking, plotted by week (from Fig. 4-3, Grady, Practical Software Metrics)

16

Q6 How many problems are affecting customers?

EXAMPLE METRICS

Incoming defect rate

Open critical and serious defects

Break/fix ratio

Post release defect density
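
Two of the metrics above can be sketched in a few lines. This is only an illustration: the `Defect` record, its field names, and the severity labels are assumptions made for the example, not definitions from Grady's book. KNCSS is taken to mean thousands of non-comment source statements.

```python
from dataclasses import dataclass


@dataclass
class Defect:
    # Hypothetical record layout, assumed for this sketch only.
    severity: str              # e.g. "critical", "serious", "medium", "low"
    is_open: bool              # True while the defect is unresolved
    found_after_release: bool  # True if reported after the product shipped


def open_critical_and_serious(defects):
    """Count defects that are still open and rated critical or serious."""
    return sum(
        1 for d in defects
        if d.is_open and d.severity in ("critical", "serious")
    )


def post_release_defect_density(defects, ncss):
    """Post-release defects per KNCSS (thousand non-comment source statements)."""
    if ncss <= 0:
        raise ValueError("ncss must be positive")
    post_release = sum(1 for d in defects if d.found_after_release)
    return post_release / (ncss / 1000.0)


# Made-up sample data, purely illustrative.
defects = [
    Defect("critical", True, True),
    Defect("serious", False, True),
    Defect("low", True, False),
    Defect("serious", True, False),
]

print(open_critical_and_serious(defects))            # 2
print(post_release_defect_density(defects, 50_000))  # 0.04
```

Incoming defect rate and break/fix ratio are left out because their exact definitions are not given on the slide.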

17

CRITICAL/SERIOUS PROBLEMS TRACKING

18

DEFECT CLOSURE REPORTING AND ANALYSIS

19

DEFECT IDENTIFICATION AND TRACKING

Figure: defects identified and defects remaining open (based on Fig. 7-5 Defects identified/remaining open)
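
A "defects remaining open" curve can be derived from two weekly series: defects identified and defects closed. The sketch below assumes that is how the data is recorded; the function name and the sample numbers are invented for illustration and are not taken from Fig. 7-5.

```python
from itertools import accumulate


def remaining_open(found_per_week, closed_per_week):
    """Defects still open at the end of each week.

    Computed as cumulative defects identified minus cumulative defects closed.
    """
    if len(found_per_week) != len(closed_per_week):
        raise ValueError("both series must cover the same weeks")
    cumulative_found = accumulate(found_per_week)
    cumulative_closed = accumulate(closed_per_week)
    return [f - c for f, c in zip(cumulative_found, cumulative_closed)]


# Made-up weekly counts, purely illustrative.
found = [5, 8, 6, 3, 1]
closed = [1, 4, 7, 5, 3]

print(remaining_open(found, closed))  # [4, 8, 7, 5, 3]
```

A curve that peaks and then falls steadily, as in this sample, suggests that closure is outpacing discovery.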

20

PREDICTIVE MODELS

Kohoutek's model

21

RELEASE CRITERIA

TESTING STANDARDS SHOULD INCLUDE AGREED-TO GOALS BASED ON THE FOLLOWING CRITERIA

breadth: testing coverage of user-accessible and internal functions

depth: branch coverage testing

reliability: continuous hours of operation under stress; measured ability to recover gracefully

defect density (actual and/or predicted) at release

22

RELEASE DATES (BASED ON DEFECTS)

Figure: number of defects (critical/serious and critical) and defects per KNCSS by month, plotted against the defect target, with the projected and actual release dates marked (based on Fig. 7-8 Critical/serious defects)

23

POST-RELEASE DEFECTS AND CUSTOMER SATISFACTION

Figure: service requests per KNCSS (3-month moving average) over months 0 to 12, comparing the average of certified products, the worst certified product, and products not certified or that did not meet certification (based on Fig. 7-9 Postrelease incoming SRs, service requests)
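
The 3-month moving average used in this kind of chart can be sketched as follows. The function names, the code size, and the monthly counts are assumptions made for the example; they are not data from Fig. 7-9.

```python
def service_requests_per_kncss(sr_counts, ncss):
    """Normalize monthly service-request counts by code size in KNCSS."""
    if ncss <= 0:
        raise ValueError("ncss must be positive")
    kncss = ncss / 1000.0
    return [count / kncss for count in sr_counts]


def moving_average(values, window=3):
    """Trailing moving average; the first value appears once a full window exists."""
    if window <= 0:
        raise ValueError("window must be positive")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Made-up monthly service-request counts for a 30 KNCSS product.
monthly_srs = [30, 90, 60, 30, 0]
rates = service_requests_per_kncss(monthly_srs, 30_000)

print(rates)                  # [1.0, 3.0, 2.0, 1.0, 0.0]
print(moving_average(rates))  # [2.0, 2.0, 1.0]
```

Averaging over three months smooths out single-month spikes, so the trend after release is easier to compare across products.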

24

METRICS SELECTION

GOAL ELABORATION PROCESS

In reality, we seldom develop a new metric from scratch; we usually adopt an existing one.

examine "Bang" and "function point" approaches to be applied at the requirements analysis phase

we have examined fan-in/fan-out and Henry and Kafura's INF (Info Flow Measure) at the design stage

examine McCabe's cyclomatic complexity measure for intra-module complexity (applies at both the implementation and testing phases)

Diagram: Goal1 elaborates into subgoals (SG1.1, Subgoal1.2, SG1.3); Subgoal1.2 into questions (Q1.2.1, Question1.2.2, Q1.2.3); Question1.2.2 into metrics (M1.2.2.1, Metric1.2.2.2, M1.2.2.3) drawn from the known "metrics base".
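
The goal-to-question-to-metric elaboration can be held in a small tree structure. The sketch below is one possible representation, not a format defined by the GQM paradigm: the class names are hypothetical, the subgoal level is omitted for brevity, and attaching Q6 and Q7 to the goal "meet customer needs" is an assumption made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Question:
    text: str
    metrics: list = field(default_factory=list)  # names of adopted metrics


@dataclass
class Goal:
    text: str
    questions: list = field(default_factory=list)

    def all_metrics(self):
        """Every distinct metric reachable from this goal, in first-seen order."""
        seen = []
        for question in self.questions:
            for metric in question.metrics:
                if metric not in seen:
                    seen.append(metric)
        return seen

    def unanswered(self):
        """Questions that have no metric selected yet."""
        return [q.text for q in self.questions if not q.metrics]


goal = Goal(
    "Meet customer needs",
    [
        Question(
            "How many problems are affecting customers?",
            [
                "Incoming defect rate",
                "Open critical and serious defects",
                "Break/fix ratio",
                "Post release defect density",
            ],
        ),
        Question("How long does it take to fix a problem?"),
    ],
)

print(len(goal.all_metrics()))  # 4
print(goal.unanswered())        # ['How long does it take to fix a problem?']
```

Listing the questions that still lack a metric shows where an existing metric has yet to be adopted.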

25

McCABE'S CYCLOMATIC COMPLEXITY

Programs are viewed as directed graphs that

show control flow.
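
The slide does not state the formula, but McCabe's measure is commonly written as V(G) = E - N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components of the control-flow graph. A minimal sketch, assuming the graph is supplied as a list of directed edges (the node names are invented for the example):

```python
def cyclomatic_complexity(edges, components=1):
    """V(G) = E - N + 2P for a control-flow graph given as (source, target) edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


# Straight-line code: three statements in sequence, no decisions.
linear = [("s1", "s2"), ("s2", "s3")]

# An if/else followed by a while loop.
branchy = [
    ("entry", "if"),
    ("if", "then"),
    ("if", "else"),
    ("then", "loop"),
    ("else", "loop"),
    ("loop", "body"),
    ("body", "loop"),
    ("loop", "exit"),
]

print(cyclomatic_complexity(linear))   # 1
print(cyclomatic_complexity(branchy))  # 3
```

The second graph has two decision points (the `if` and the loop test), which matches the shortcut "number of decisions plus one".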

26

PROBLEMS WITH McCABE CC

It was primarily designed for FORTRAN; it does not apply so well to newer languages that are less procedural and/or more dynamic in nature (e.g., how is exception handling dealt with?).

CC(G) = 1 remains true for any size of linear code.

CC is insensitive to the software structure. Several researchers have demonstrated that CC can increase when applying generally accepted techniques to improve program structure. Evangelist ('83) shows that only 2 out of 26 of Kernighan and Plauger's rules result in decreases to CC.

All decisions have a uniform weight regardless of the depth of nesting or relationship with other decisions.

Empirical studies show mixed success with using McCabe's CC.

Best suited for test coverage analysis and intra-module complexity.
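
Two of these criticisms can be demonstrated with a rough counter built on Python's standard `ast` module. This is an approximation assumed for illustration ("decision points plus one"), not McCabe's original tooling or a complete implementation. The choice of which node types count as decisions is also an assumption.

```python
import ast

# Node types treated as decision points in this sketch (an assumption).
DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def approx_cc(source):
    """Approximate cyclomatic complexity as (number of decision points) + 1."""
    decisions = 0
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # "a and b and c" adds two extra short-circuit branches.
            decisions += len(node.values) - 1
    return decisions + 1


# 200 assignments in a row: long, but no decisions at all.
linear = "\n".join(f"x{i} = {i}" for i in range(200))

sequential = """
if a:
    p = 1
if b:
    q = 1
if c:
    r = 1
"""

nested = """
if a:
    if b:
        if c:
            r = 1
"""

print(approx_cc(linear))      # 1
print(approx_cc(sequential))  # 4
print(approx_cc(nested))      # 4
```

The 200-line linear program scores 1, and the sequential and nested versions score the same even though the nested one is harder to reason about.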