
1

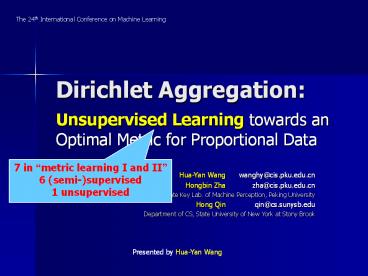

Dirichlet Aggregation: Unsupervised Learning towards an Optimal Metric for Proportional Data

The 24th International Conference on Machine Learning

Metric learning I and II: 7 in total, 6 (semi-)supervised, 1 unsupervised

Hua-Yan Wang (wanghy_at_cis.pku.edu.cn) and Hongbin Zha (zha_at_cis.pku.edu.cn)
State Key Lab. of Machine Perception, Peking University

Hong Qin (qin_at_cs.sunysb.edu)
Department of CS, State University of New York at Stony Brook

Presented by Hua-Yan Wang

2

Outline

- Introduction
  - The metric learning problem
  - Related work
- Dirichlet aggregation
  - Basic idea
  - Setting
  - Approach
- Experiment results
- Conclusion

3

Introduction

- We need a metric or a (dis)similarity measure in many machine learning tasks:
  - Classification (kNN, SVM, ...)
  - Clustering (k-means, ...)
  - Information retrieval
- Why do we have to learn a metric?
  - A simple metric does not work well because of the sophisticated latent structure underlying the data space.
  - These structures arise from a generative process in a latent variable space (which is sometimes hard to model).
  - Metric learning can be viewed as trying to find evidence about the latent structure directly in the data space, without saying anything about the latent variables.

4

Introduction

- Two different settings:
  - (Semi-)supervised metric learning
    - Relative comparisons or category labels of data points are given as constraints that the target metric should satisfy.
  - Unsupervised metric learning (our case)
    - We exploit the distribution patterns of the unlabelled data points.
    - Highly related to non-linear dimensionality reduction.

5

Related work (Lebanon, UAI 03, PAMI 06)

Pull-back metrics

Fisher information metric

The maps are scalings of individual dimensions.
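The formulas on this slide did not survive extraction. As a rough illustration of the related-work idea only, here is a minimal Python sketch of a pull-back of the Fisher information metric. It assumes (from our reading of Lebanon's work, not from this slide) the dimension-scaling family F_lambda(x)_i = x_i * lambda_i / sum_j x_j * lambda_j, and uses the standard closed form 2 * arccos(sum_i sqrt(p_i * q_i)) for the Fisher geodesic distance on the simplex. All function names are hypothetical.

```python
import math
from typing import Sequence


def fisher_geodesic(p: Sequence[float], q: Sequence[float]) -> float:
    """Geodesic distance of the Fisher information metric on the simplex.

    The map p -> 2*sqrt(p) sends the simplex onto part of a sphere of radius 2,
    so the distance is 2 * arccos(sum_i sqrt(p_i * q_i)).
    """
    bc = sum(math.sqrt(pi * qi) for pi, qi in zip(p, q))
    return 2.0 * math.acos(min(1.0, bc))  # clamp guards against round-off above 1


def scale_map(x: Sequence[float], lam: Sequence[float]) -> list:
    """Assumed scaling map F_lambda(x)_i = x_i * lam_i / sum_j x_j * lam_j (lam_i > 0)."""
    z = sum(xi * li for xi, li in zip(x, lam))
    return [xi * li / z for xi, li in zip(x, lam)]


def pullback_distance(x: Sequence[float], y: Sequence[float], lam: Sequence[float]) -> float:
    """Pull-back distance: the Fisher geodesic between the scaled points."""
    return fisher_geodesic(scale_map(x, lam), scale_map(y, lam))


# Usage: scaling the dimensions changes the distance between the same two points.
x, y = [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]
print(pullback_distance(x, y, [1.0, 1.0, 1.0]), pullback_distance(x, y, [4.0, 1.0, 1.0]))
```

With all lambda_i equal the map is the identity, so the pull-back distance reduces to the plain Fisher geodesic distance.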

6

Dirichlet aggregation (basic idea)

- A common basic idea of unsupervised metric learning and non-linear dimensionality reduction:
  - Geodesics should go through dense data points.

[Figure: latent variable space and data space]

7

Dirichlet aggregation (basic idea)

The geodesic between two points, A and B, in the latent variable space is (approximately) the straight line connecting them, because the latent variable space can often be assumed to be quasi-Euclidean.

[Figure: points A and B in the latent variable space and in the data space]

8

Dirichlet aggregation (basic idea)

The distance measure can be viewed as a transition process moving from one point to another, i.e., changing one object into another. Note that the intermediate states on the straight line are also in the latent variable space, i.e., they represent meaningful objects in the same domain.

[Figure: points A and B in the latent variable space and in the data space]

9

Dirichlet aggregation (basic idea)

Usually, the image of the latent variable space is a sparse subset of the data space (sometimes we assume that it is a manifold). So, in the data space, using the straight line to measure the distance between two points is problematic.

[Figure: points A and B in the latent variable space and in the data space; a point on the straight line between them in the data space is marked "What's this?!"]

10

Dirichlet aggregation (basic idea)

This is because some intermediate states might have no preimage in the latent variable space, i.e., they do not represent meaningful objects, so the transition process along the straight line is meaningless.

[Figure: points A and B in the latent variable space and in the data space; a point on the straight line between them in the data space is marked "What's this?!"]

11

Dirichlet aggregation (basic idea)

As we know, the geodesic should go like this, so that all intermediate states have a preimage in the latent variable space, i.e., represent meaningful intermediate objects of the transition process.

[Figure: points A and B in the latent variable space and in the data space]

12

Dirichlet aggregation (basic idea)

In other words, the real length of the blue path could be much longer than that of the red path when projected back to the latent variable space. However, finding a real geodesic (such as the red path) in the data space is difficult in most cases, because the data are noisy and the manifold assumption is sometimes violated. In our approach, we adopt a more flexible version of this basic idea: paths going through dense data points should be relatively shorter than paths going through sparse data points.

[Figure: points A and B in the latent variable space and in the data space, with a blue path and a red path]
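The principle that geodesics should go through dense data points is the same one used in Isomap-style non-linear dimensionality reduction, where a geodesic is approximated by the shortest path in a k-nearest-neighbour graph. The sketch below illustrates that standard technique only; it is not the Dirichlet aggregation method (which warps the simplex through EMD instead), and `knn_graph_geodesic` and the choice of k are illustrative assumptions.

```python
import heapq

import numpy as np


def knn_graph_geodesic(points: np.ndarray, k: int, src: int, dst: int) -> float:
    """Shortest-path length from src to dst in a symmetric k-nearest-neighbour graph.

    Each point is linked to its k nearest neighbours (Euclidean), with edge weight
    equal to the Euclidean length, so paths can only hop between nearby points.
    """
    n = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    adj = [dict() for _ in range(n)]
    for i in range(n):
        for j in np.argsort(dist[i])[1 : k + 1]:  # position 0 is the point itself
            j = int(j)
            adj[i][j] = adj[j][i] = float(dist[i, j])
    # Dijkstra's algorithm over the neighbourhood graph.
    best = [float("inf")] * n
    best[src] = 0.0
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            return d
        if d > best[u]:
            continue  # stale heap entry
        for v, w in adj[u].items():
            if d + w < best[v]:
                best[v] = d + w
                heapq.heappush(heap, (best[v], v))
    return float("inf")  # dst is unreachable: the graph is disconnected


# Usage: 50 points on a unit semicircle. The straight line between the endpoints
# has length 2, but the graph path must follow the curve, so it is close to pi.
theta = np.linspace(0.0, np.pi, 50)
arc = np.stack([np.cos(theta), np.sin(theta)], axis=1)
print(np.linalg.norm(arc[0] - arc[-1]), knn_graph_geodesic(arc, k=2, src=0, dst=49))
```

On noisy data or with a poorly chosen k, such graph paths can short-circuit across sparse regions, which matches the slide's remark that finding a real geodesic in the data space is difficult.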

13

Dirichlet aggregation (setting)

We consider unlabelled data points in a simplex (normalized histograms). Each vertex corresponds to one dimension of the vector space.
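To make the setting concrete, here is a minimal sketch of mapping raw count vectors (e.g. word counts) onto the simplex by row normalization; `to_simplex` is a hypothetical helper name. The vertices of the simplex are then the one-hot histograms, one per dimension.

```python
import numpy as np


def to_simplex(counts) -> np.ndarray:
    """Row-normalize non-negative count vectors into normalized histograms.

    Each non-empty row then lies on the probability simplex (entries >= 0, sum 1).
    Rows with zero total count are left as all zeros, since they have no histogram.
    """
    counts = np.asarray(counts, dtype=float)
    totals = counts.sum(axis=1, keepdims=True)
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)


# Usage: two documents over a 4-word vocabulary; each vertex is one-hot.
hist = to_simplex([[3, 1, 0, 0], [0, 0, 2, 2]])
vertices = np.eye(4)  # vertex i = all mass on dimension i
print(hist)
```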

14

Dirichlet aggregation (approach)

Bag-of-words representation with 4 words. Documents are represented as normalized histograms (points in the 3-simplex). Many documents mention both economy and market a lot. Many documents mention both terrain and geography a lot. But relatively few documents mention both economy and terrain a lot.

[Figure: 3-simplex with vertices economy, terrain, market, and geography]

15

Dirichlet aggregation (approach)

Consider documents A, B, and C. AB should be shorter than BC, because AB goes through dense data points, whereas BC goes through sparse data points.

[Figure: documents A, B, and C in the 3-simplex with vertices economy, terrain, market, and geography]

16

Dirichlet aggregation (approach)

So the simplex should be warped like this. To implement such a warping, we first infer affinities between simplex vertices (by fitting a distribution parameterized by vertex affinities), then induce a metric on the simplex from these affinities (the earth mover's distance (EMD) with ground distances derived from the vertex affinities).

[Figure: warped simplex with documents A, B, and C]

17

Dirichlet aggregation (approach)

The Dirichlet distribution: each parameter is associated with one vertex (dimension).
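The density formula on this slide was lost in extraction. For reference, the standard Dirichlet log-density is sketched below in plain Python (`dirichlet_logpdf` is an illustrative name). Parameter alpha_i multiplies log x_i, which is why each parameter is tied to one vertex: a larger alpha_i pulls probability mass toward vertex i.

```python
import math
from typing import Sequence


def dirichlet_logpdf(x: Sequence[float], alpha: Sequence[float]) -> float:
    """Log-density of Dir(alpha) at a point x in the interior of the simplex.

    p(x | alpha) = Gamma(sum_i alpha_i) / prod_i Gamma(alpha_i) * prod_i x_i^(alpha_i - 1)
    """
    if len(x) != len(alpha):
        raise ValueError("x and alpha must have the same length")
    log_norm = math.lgamma(sum(alpha)) - sum(math.lgamma(a) for a in alpha)
    return log_norm + sum((a - 1.0) * math.log(xi) for xi, a in zip(x, alpha))


# Usage: Dir(1, 1, 1) is uniform on the 2-simplex, with density Gamma(3) = 2.
print(math.exp(dirichlet_logpdf([0.2, 0.3, 0.5], [1.0, 1.0, 1.0])))
```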

18

Dirichlet aggregation (approach)

To gain more flexibility, we use a Dirichlet mixture model with N equally weighted components, whose parameters form a symmetric matrix.
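The slide does not spell out how the components are built from the symmetric matrix. The sketch below assumes, as our reading of the following slides (parameters corresponding to simplex edges), that component i is Dir(A[i, :]), i.e. row i of a symmetric N x N matrix A, with weight 1/N, where N is both the number of components and the number of dimensions. This is a hypothetical reconstruction, not the paper's definition, and the function name is illustrative.

```python
import math

import numpy as np


def dirichlet_aggregation_logpdf(x, A) -> float:
    """Log-density of an equally weighted Dirichlet mixture built from a symmetric matrix.

    ASSUMPTION: component i is Dir(A[i, :]) and every component has weight 1/N.
    """
    A = np.asarray(A, dtype=float)
    if not np.allclose(A, A.T):
        raise ValueError("A must be symmetric")
    logx = np.log(np.asarray(x, dtype=float))
    comp = np.array([
        math.lgamma(row.sum()) - sum(math.lgamma(a) for a in row) + float((row - 1.0) @ logx)
        for row in A
    ])
    m = comp.max()  # log-sum-exp for numerical stability
    return float(m + np.log(np.exp(comp - m).sum()) - np.log(len(A)))


# Usage: with all entries equal to 1, every component is uniform, so the mixture is too.
print(math.exp(dirichlet_aggregation_logpdf([0.2, 0.3, 0.5], np.ones((3, 3)))))
```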

19

Dirichlet aggregation (approach)

The parameters correspond to edges of the simplex

(affinities between vertices).

20

Dirichlet aggregation (approach)

Consider a toy example like this. The parameters

associated with edge 1-2 and edge 3-4 exhibit

higher values than other off-diagonal elements.

21

Dirichlet aggregation (approach)

1000 points sampled from the Dirichlet

aggregation distribution with these parameters.
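A toy sampler in the spirit of this slide, under an explicit assumption: component i is Dir(A[i, :]) (row i of the symmetric matrix A) and is chosen with probability 1/N. The matrix values are made up; only the pattern (larger entries for edges 1-2 and 3-4 than for the other off-diagonal elements) follows the slide. `sample_dirichlet_aggregation` is a hypothetical name.

```python
import numpy as np


def sample_dirichlet_aggregation(A, n: int, seed: int = 0) -> np.ndarray:
    """Draw n points from an equally weighted Dirichlet mixture defined by A.

    ASSUMPTION: component i is Dir(A[i, :]) and is picked with probability 1/N.
    """
    rng = np.random.default_rng(seed)
    A = np.asarray(A, dtype=float)
    comps = rng.integers(len(A), size=n)  # pick a component uniformly for each point
    return np.stack([rng.dirichlet(A[c]) for c in comps])


# Hypothetical toy parameters: edges 1-2 and 3-4 get larger values.
A = np.array([
    [1.0, 5.0, 0.5, 0.5],
    [5.0, 1.0, 0.5, 0.5],
    [0.5, 0.5, 1.0, 5.0],
    [0.5, 0.5, 5.0, 1.0],
])
X = sample_dirichlet_aggregation(A, 1000)
# Most points concentrate near edge 1-2 or edge 3-4 of the simplex.
near_edges = (X[:, 0] + X[:, 1] > 0.7) | (X[:, 2] + X[:, 3] > 0.7)
print(near_edges.mean())
```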

22

Dirichlet aggregation (approach)

Parameters are estimated by a simple iterative algorithm:
- E-step: each data point is assigned a weight (probability) w.r.t. each Dirichlet component.
- M-step: the parameters of each Dirichlet component are re-estimated from the re-weighted data points.

The distribution we devised yields a pattern that closely resembles the imaginary one we discussed earlier.
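A generic EM-style sketch of the two steps described above, for an equally weighted Dirichlet mixture. The M-step uses weighted moment matching (for a Dirichlet with total concentration s, Var(x_j) = m_j(1 - m_j)/(s + 1)), which is our own simple stand-in: the slides do not say how each component is re-estimated, and this sketch does not enforce the symmetric parameter matrix. Function names are hypothetical.

```python
import math

import numpy as np


def component_logpdf(X: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Dirichlet log-density of every row of X (points inside the simplex)."""
    log_norm = math.lgamma(alpha.sum()) - sum(math.lgamma(a) for a in alpha)
    return log_norm + np.log(X) @ (alpha - 1.0)


def fit_dirichlet_mixture(X, n_components: int, n_iter: int = 50, seed: int = 0, eps: float = 1e-6):
    """Fit an equally weighted Dirichlet mixture; returns (alphas, responsibilities)."""
    rng = np.random.default_rng(seed)
    # Keep points strictly inside the simplex so that log(x) is finite.
    X = np.clip(np.asarray(X, dtype=float), eps, None)
    X = X / X.sum(axis=1, keepdims=True)
    alphas = 1.0 + rng.random((n_components, X.shape[1]))
    resp = np.full((len(X), n_components), 1.0 / n_components)
    for _ in range(n_iter):
        # E-step: responsibilities; the equal weights 1/K cancel after normalizing.
        logp = np.stack([component_logpdf(X, a) for a in alphas], axis=1)
        logp -= logp.max(axis=1, keepdims=True)
        resp = np.exp(logp)
        resp /= resp.sum(axis=1, keepdims=True)
        # M-step: weighted moment matching for each component.
        for k in range(n_components):
            w = resp[:, k] / max(resp[:, k].sum(), eps)
            mean = w @ X
            var = w @ (X - mean) ** 2
            s = float(np.mean(mean * (1.0 - mean) / np.maximum(var, eps))) - 1.0
            alphas[k] = np.maximum(max(s, eps) * mean, eps)
    return alphas, resp


# Usage on synthetic data drawn from two different Dirichlet distributions.
rng = np.random.default_rng(1)
X = np.vstack([rng.dirichlet([20.0, 2.0, 2.0], 300), rng.dirichlet([2.0, 2.0, 20.0], 300)])
alphas, resp = fit_dirichlet_mixture(X, n_components=2)
print(np.round(alphas, 1))
```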

23

Dirichlet aggregation (approach)

- Define a metric on the simplex as the EMD (Rubner et al., 2000) with the log of the learned vertex affinities as ground distances.

The EMD (earth mover's distance) between two histograms is defined as the effort needed to make them identical by transporting mass among histogram bins. Distances between histogram bins are called ground distances. For example, if the ground distance between bin 1 and bin 2 is larger than that between bin 1 and bin 3, the left histogram would be closer to the top-right histogram than to the bottom-right histogram, as measured by EMD.

[Figure: three histograms over bins 1, 2, 3 (left, top right, bottom right)]
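A minimal EMD sketch that solves the transportation linear program with `scipy.optimize.linprog`. The slide derives ground distances from the log of the learned vertex affinities; that exact transform is not reproduced here, so the 3-bin ground-distance matrix below is hand-picked to mirror the slide's example (bin 1 farther from bin 2 than from bin 3), and the histograms are hypothetical stand-ins for the figure.

```python
import numpy as np
from scipy.optimize import linprog


def emd(p, q, ground) -> float:
    """Earth mover's distance between two normalized histograms.

    Solves min sum_ij f_ij * ground[i, j] subject to sum_j f_ij = p_i,
    sum_i f_ij = q_j and f_ij >= 0 (both histograms sum to 1).
    """
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    n, m = len(p), len(q)
    A_eq = np.zeros((n + m, n * m))  # flow f_ij is stored at index i * m + j
    for i in range(n):
        A_eq[i, i * m:(i + 1) * m] = 1.0  # mass leaving bin i of p
    for j in range(m):
        A_eq[n + j, j::m] = 1.0  # mass arriving at bin j of q
    res = linprog(np.asarray(ground, dtype=float).ravel(), A_eq=A_eq,
                  b_eq=np.concatenate([p, q]), bounds=(0, None), method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return float(res.fun)


# Hypothetical ground distances: d(1, 2) = 2 is larger than d(1, 3) = 1.
ground = np.array([[0.0, 2.0, 1.0], [2.0, 0.0, 1.0], [1.0, 1.0, 0.0]])
left, top_right, bottom_right = [1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]
print(emd(left, top_right, ground), emd(left, bottom_right, ground))
```

Because all mass in `left` sits in bin 1, moving it to bin 3 is cheaper than moving it to bin 2, so `left` ends up closer to `top_right`, as in the slide's example.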

24

Experiment results

- Reuters corpus
  - 12897 docs, 19881 unique index words, 90 categories
  - Topic-histogram representation obtained by LDA (Blei et al., 2003)
- Caltech image database
  - 2233 images, 4 categories
  - SIFT descriptors (128-D) clustered into 2000 visual words; visual-topic histogram representation obtained by LDA
  - SIFT descriptors clustered into 100 visual words; visual-word histogram representation

25

Experiment results

Reuters corpus: precision-recall curves, 50-topic representation obtained by LDA.

Reuters corpus: representation dimension vs. performance.

26

Experiment results

Caltech image database: precision-recall curves, 40-visual-topic representation obtained by LDA.

Caltech image database: representation dimension vs. performance.

27

Experiment results

Caltech image database: 100-visual-word representation.

28

Conclusion

- Advantages
  - Unsupervised (a large training set is easy to collect)
  - Flexible (handles correlations among dimensions)
  - Global (a single unified distribution fits all observations)
- Limitations
  - Intuitive, but needs more solid theoretical support
  - Parameter estimation and EMD computation are time-consuming

29

Thanks for your attention!