Overfit PowerPoint PPT Presentations

All Time

Recommended

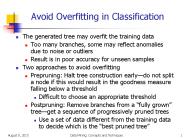

Avoid Overfitting in Classification. The generated tree may overfit the training data: too many branches, some of which may reflect anomalies due to noise or outliers.

The spline has a better fit because it tends to overfit. Potential subsequent tests: a lack-of-fit (LOF) test of the spring and alternative models, and an LOF test of the alternative and spline models ...

Forecasting Stock Prices. Walkrich Investments: Neural ... Plot of training data and validation data over time (BP training, or EA/PSO generations), marking the point labelled "Starting to overfit" ...

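The training/validation curves in this entry describe the usual early-stopping idea: keep the weights from the point where validation error bottomed out, and stop once it keeps rising. A minimal Python sketch, assuming per-epoch validation errors are already available; `early_stopping_epoch` and its `patience` parameter (how many non-improving epochs to tolerate) are hypothetical names, not taken from the slides.

```python
def early_stopping_epoch(val_errors, patience=2):
    """Return the index of the epoch whose weights should be kept.

    Scans validation errors in order and stops once `patience`
    consecutive epochs pass without a new minimum.
    """
    best_epoch, best_error = 0, float("inf")
    epochs_without_improvement = 0
    for epoch, error in enumerate(val_errors):
        if error < best_error:
            best_epoch, best_error = epoch, error
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation error keeps rising: the model is starting to overfit
    return best_epoch


# Validation error falls, then rises as the network starts to overfit.
errors = [0.50, 0.40, 0.35, 0.36, 0.38, 0.40]
print(early_stopping_epoch(errors))  # 2
```

A larger `patience` tolerates noisy validation curves at the cost of training a few extra epochs.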

Recommend items based on past transactions of users. Specific data ... Can easily overfit, sensitive to regularization. Need to separate main effects...

Post-pruning: fully grow the tree (allowing it to overfit the data) and then ... Nodes are pruned iteratively, always choosing the node whose removal most ...

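One common post-pruning variant, reduced-error pruning (Mitchell 1997, Chapter 3), fits this description: tentatively replace each internal node by a leaf labelled with its majority class, keep the replacement that gives the best validation accuracy, and stop once every candidate would make accuracy worse. A minimal sketch; the `Node` class, the toy tree, and the validation examples are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    label: str                        # majority class of the training examples at this node
    attribute: Optional[str] = None   # None marks a leaf
    children: Dict[str, "Node"] = field(default_factory=dict)


def predict(node, example):
    while node.attribute is not None:
        child = node.children.get(example.get(node.attribute))
        if child is None:             # unseen attribute value: use the majority label
            return node.label
        node = child
    return node.label


def accuracy(tree, data):
    return sum(predict(tree, x) == y for x, y in data) / len(data)


def internal_nodes(node):
    if node.attribute is not None:
        yield node
        for child in node.children.values():
            yield from internal_nodes(child)


def reduced_error_prune(tree, validation):
    """Repeatedly turn into a leaf the internal node whose removal gives the
    highest validation accuracy; stop when every candidate would lower it."""
    current = accuracy(tree, validation)
    while True:
        best_node, best_acc = None, -1.0
        for node in list(internal_nodes(tree)):
            saved = (node.attribute, node.children)
            node.attribute, node.children = None, {}   # tentatively prune
            acc = accuracy(tree, validation)
            node.attribute, node.children = saved      # undo
            if acc > best_acc:
                best_node, best_acc = node, acc
        if best_node is None or best_acc < current:
            return tree
        best_node.attribute, best_node.children = None, {}
        current = best_acc


# Hypothetical fully grown tree; the "wind" split under "rain" fits noise.
tree = Node("yes", "outlook", {
    "sunny": Node("no", "humidity", {"high": Node("no"), "normal": Node("yes")}),
    "rain": Node("yes", "wind", {"weak": Node("yes"), "strong": Node("no")}),
})
validation = [
    ({"outlook": "sunny", "humidity": "high", "wind": "weak"}, "no"),
    ({"outlook": "sunny", "humidity": "normal", "wind": "weak"}, "yes"),
    ({"outlook": "rain", "humidity": "high", "wind": "strong"}, "yes"),
    ({"outlook": "rain", "humidity": "normal", "wind": "weak"}, "yes"),
]
reduced_error_prune(tree, validation)
print(accuracy(tree, validation))  # 1.0
```

Here the spurious "wind" node is pruned (validation accuracy rises from 0.75 to 1.0), while the useful "humidity" split survives because removing it would lower accuracy.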

Decision Trees: Definition; Mechanism; Splitting Function; Issues in Decision-Tree Learning; Avoiding overfitting through pruning; Numeric and missing attributes

Separate test set to evaluate the use of pruning ... No bookkeeping on how to reorganize the tree if the root node is pruned. Improves readability ...

Daria Sorokina, Rich Caruana, Mirek Riedewald. Additive Groves of Regression Trees ...

Decision Trees. Example training table (attribute values such as Cloudy, Rainy, Cold, Warm, High, Normal, Weak, Cool, Change) and an example tree with nodes Humidity (Normal, High), Sky, and AirTemp ...

A decision tree is a tree where each node of the tree is associated with an ... Decision trees represent a disjunction of conjunctions of constraints on the ...

RIPPER: Fast Effective Rule Induction. Machine Learning 2003. Merlin Holzapfel & Martin Schmidt ... usually better than decision-tree learners ... representable ...

$\mathrm{Recall} = TP / (TP + FN)$, $F_1 = 2\,\mathrm{Prec} \cdot \mathrm{Rec} / (\mathrm{Prec} + \mathrm{Rec})$. ICML ... CARCASS (50) 30: beef, pork, meat, dollar, chicago. SOY-MEAL (13) 3: meal, soymeal, soybean ...

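Precision, recall, and F1 are simple ratios of confusion-matrix counts. A minimal sketch, assuming the standard definition Prec = TP / (TP + FP), which the snippet does not show; the function name and the example counts are made up for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from true-positive, false-positive,
    and false-negative counts; undefined ratios are reported as 0.0."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Illustrative counts: 30 correct positives, 10 false alarms, 20 misses.
p, r, f = precision_recall_f1(tp=30, fp=10, fn=20)
print(p, r, round(f, 4))  # 0.75 0.6 0.6667
```

F1 is the harmonic mean of precision and recall, so it stays low unless both are reasonably high.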

Review of: Yoav Freund and Robert E. Schapire, 'A Short Introduction to ...'; Michael Collins, 'Discriminative Reranking for Natural Language Parsing', ICML 2000 ...

Let $S$ be a set of examples from $c$ classes, where $p_i$ is the proportion of examples of $S$ belonging ... Intuitively, the smaller the entropy, the purer the partition ...

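The entropy referred to here is usually defined as $\mathrm{Entropy}(S) = -\sum_{i=1}^{c} p_i \log_2 p_i$. A minimal sketch computing it from per-class counts; the function name and the example counts are illustrative.

```python
import math


def entropy(counts):
    """Entropy(S) = -sum_i p_i * log2(p_i) = sum_i p_i * log2(1 / p_i),
    where p_i is the proportion of examples in S that belong to class i.
    Classes with no examples contribute 0."""
    total = sum(counts)
    return sum((c / total) * math.log2(total / c) for c in counts if c > 0)


print(entropy([7, 7]))              # 1.0: evenly split between two classes
print(entropy([14, 0]))             # 0.0: a pure partition
print(round(entropy([9, 5]), 3))    # 0.94
```

Writing each term as p log2(1/p) keeps every term non-negative, so a pure partition returns 0.0 rather than -0.0.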

Discriminatively Trained Markov Model for Sequence Classification. Oksana Yakhnenko, Adrian Silvescu, Vasant Honavar. Artificial Intelligence Research Lab

Instances are represented by discrete attribute-value pairs (though the basic ... are related as follows: the more disorderly a set, the more information is ...

Artificial Intelligence 7: Decision Trees. Yoshimasa Tsuruoka, Japan Advanced Institute of Science and Technology (JAIST). Outline: What is a decision tree?

Chapter 3: Decision Tree Learning. Introduction; Decision Tree Representation; Appropriate Problems for Decision Tree Learning; Basic Algorithm ...

| PowerPoint PPT presentation | free to view

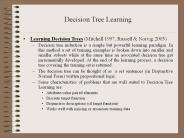

Decision Tree Learning. Learning Decision Trees (Mitchell 1997; Russell & Norvig 2003). Decision tree induction is a simple but powerful learning paradigm.

Decision Trees, Nearest Neighbor/IBL, Genetic Algorithms, Rule ... Stacking, Gating/Mixture of Experts, Bagging, Boosting, Wagging, Mimicking, Combinations ...

Biological learning system (brain): complex network of ... Arrow: negated gradient at one point, giving the direction of steepest descent along the surface. Derivation of the rule ...

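The "negated gradient" picture corresponds to a gradient-descent training rule. A minimal sketch, assuming a linear unit $o = \mathbf{w} \cdot \mathbf{x}$ with squared error $E(\mathbf{w}) = \tfrac{1}{2}\sum_d (t_d - o_d)^2$, whose negated gradient gives the batch update $\Delta w_i = \eta \sum_d (t_d - o_d)\, x_{i,d}$; the learning rate, epoch count, and toy data are illustrative choices, not values from the slides.

```python
def train_linear_unit(examples, eta=0.05, epochs=2000):
    """Batch gradient descent on E(w) = 1/2 * sum_d (t_d - o_d)^2
    for a linear unit o = w . x (x[0] = 1 acts as the bias input)."""
    n_weights = len(examples[0][0])
    w = [0.0] * n_weights
    for _ in range(epochs):
        delta = [0.0] * n_weights
        for x, t in examples:
            o = sum(wi * xi for wi, xi in zip(w, x))
            for i in range(n_weights):
                delta[i] += eta * (t - o) * x[i]   # step along the negated gradient
        w = [wi + di for wi, di in zip(w, delta)]
    return w


# Noise-free targets t = 1 + 2x, with x[0] = 1 as the bias input.
data = [([1.0, x], 1.0 + 2.0 * x) for x in (0.0, 1.0, 2.0, 3.0)]
w = train_linear_unit(data)
print([round(wi, 3) for wi in w])  # [1.0, 2.0]
```

The learning rate must be small enough for the steps not to overshoot; for this data, values much above 0.1 make the iteration diverge.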

The Models of Function Approximator. The Radial Basis ... ganglion cells. The Topology of RBF: feature vectors x1, x2, ..., xn as inputs; hidden units; outputs y1, ..., ym ...

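The RBF topology (inputs x1 ... xn, a hidden layer of radial units, outputs y1 ... ym) amounts to a short forward pass. A minimal sketch, assuming Gaussian basis functions $\phi_j(\mathbf{x}) = \exp(-\lVert \mathbf{x} - \mathbf{c}_j \rVert^2 / 2\sigma_j^2)$ and linear output units $y_k = \sum_j w_{kj}\,\phi_j(\mathbf{x})$; the centers, widths, and weights are made-up values, and training them is not shown.

```python
import math


def rbf_forward(x, centers, widths, weights):
    """Forward pass of a radial basis function network.

    Hidden unit j responds with phi_j = exp(-||x - c_j||^2 / (2 * sigma_j^2)),
    largest when x sits on its center; output k is sum_j weights[k][j] * phi_j.
    """
    phi = [
        math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2 * s ** 2))
        for c, s in zip(centers, widths)
    ]
    return [sum(w_kj * p for w_kj, p in zip(w_k, phi)) for w_k in weights]


centers = [[0.0, 0.0], [1.0, 1.0]]   # two hidden units in a 2-D input space
widths = [0.5, 0.5]
weights = [[1.0, -1.0]]              # one output unit
print(rbf_forward([0.0, 0.0], centers, widths, weights))
```

Each hidden unit responds strongly only near its own center, which is the local, "on-center" behaviour the slides attribute to RBFs; far from every center all outputs decay toward zero.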

... any continuous multivariate function, to any desired degree of accuracy ... Single-Layer Perceptrons as Universal Approximators: hidden units ...

Title: PowerPoint Presentation. Author: Gheorghe Tecuci. Last modified by: Gheorghe Tecuci. Created Date: 10/16/2000 12:50:33 AM.

Properties of RBFs: on-center, off-surround ... visual cortex; ganglion cells. The Topology of RBF: feature vectors x1, x2, ..., xn as inputs; outputs y1, ..., ym ...

Borrowed some of his slides for the 'Neural Networks' and 'Computation in Neural Networks' courses ... Paolo Frasconi, University of Florence ...

Predictive Automatic Relevance Determination by Expectation Propagation. Alan Qi, Thomas P. Minka ... Where is a cumulative distribution function for a ...

A Tree to Predict C-Section Risk. Learned from medical records of 1000 women ... Local minima... Statistically-based search choices. Robust to noisy data...

Tree fragment: blond, then eyes (blue, brown). Disjunction of conjunctions (one conjunct per path to a + node) ... Hair: blond (2+, 2-), red (1+, 0-), dark (0+, 3 ...

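The Hair counts are enough to compute the information gain of splitting on Hair. A minimal sketch, assuming the stripped signs read blond (2+, 2-) and red (1+, 0-), the truncated dark entry reads (0+, 3-), and using the standard definition $\mathrm{Gain}(S, A) = \mathrm{Entropy}(S) - \sum_v \frac{|S_v|}{|S|}\,\mathrm{Entropy}(S_v)$.

```python
import math


def entropy(pos, neg):
    """Two-class entropy of a set with `pos` positive and `neg` negative examples."""
    total = pos + neg
    return sum((c / total) * math.log2(total / c) for c in (pos, neg) if c > 0)


def information_gain(partition):
    """Gain = Entropy(S) - sum_v |S_v|/|S| * Entropy(S_v), where `partition`
    maps each attribute value v to its (positive, negative) counts."""
    pos = sum(p for p, _ in partition.values())
    neg = sum(n for _, n in partition.values())
    total = pos + neg
    remainder = sum((p + n) / total * entropy(p, n) for p, n in partition.values())
    return entropy(pos, neg) - remainder


# Counts for Hair: blond (2+, 2-), red (1+, 0-), dark (0+, 3-).
hair = {"blond": (2, 2), "red": (1, 0), "dark": (0, 3)}
print(round(information_gain(hair), 3))  # 0.454
```

Only the blond branch stays impure (entropy 1 over half the examples), so the gain is Entropy(3+, 5-) minus 0.5.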

Knowledge Discovery & Data Mining: Classification. UCLA CS240A, Winter 2002. Notes from a tutorial presented at EDBT 2000 by Fosca Giannotti and Dino Pedreschi.

If you use the full posterior over parameter settings, overfitting disappears! ... But it is not economical and it makes silly predictions. ...

Research supported in part by grants from the National Science Foundation (IIS ...

Data Mining (and Machine Learning). DM Lecture 6: Similarity and Distance

Classification and Supervised Learning. Credits: Hand, Mannila and Smyth; Cook and Swayne; Padhraic Smyth's notes; Shawndra Hill's notes. Outline: Supervised Learning ...

Weight decay involves adding an extra term to the cost function that penalizes ... Is there a way to determine the weight-decay coefficient automatically? ...

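A sketch of the extra term, assuming the common squared-weight penalty: minimizing $E(w) + \tfrac{\lambda}{2} w^2$ adds $\lambda w$ to the gradient, so every step also shrinks the weight toward zero. The one-weight regression, the data, and the $\lambda$ values are illustrative; choosing $\lambda$ automatically, the slide's open question, is not addressed here.

```python
def fit_with_weight_decay(xs, ts, lam, eta=0.01, epochs=1000):
    """Fit o = w * x by gradient descent on
    E(w) = 1/2 * sum_d (t_d - w * x_d)^2 + lam / 2 * w^2."""
    w = 0.0
    for _ in range(epochs):
        grad_error = -sum((t - w * x) * x for x, t in zip(xs, ts))
        grad_penalty = lam * w          # derivative of the weight-decay term
        w -= eta * (grad_error + grad_penalty)
    return w


xs = [1.0, 2.0, 3.0]
ts = [2.0, 4.0, 6.0]                    # noise-free data with slope 2
print(round(fit_with_weight_decay(xs, ts, lam=0.0), 3))   # 2.0
print(round(fit_with_weight_decay(xs, ts, lam=14.0), 3))  # 1.0
```

For this model the minimizer is $w^* = \sum_d x_d t_d / (\sum_d x_d^2 + \lambda) = 28 / (14 + \lambda)$, so $\lambda = 14$ halves the fitted slope: a larger coefficient trades training fit for smaller weights.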

Parameters (weights w or a, threshold b) ... A function of the parameters of the ... Shave off unnecessary parameters of your models. The Power of Amnesia ...

Machine Learning by Tom Mitchell, 1997, Chapter 3 ... (chi-square test used by Quinlan at first, later abandoned in favor of post-pruning) ...

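A chi-square stopping test asks whether a split's class distribution differs from what chance would produce. A minimal sketch of the Pearson statistic for a split, applied to the Hair counts blond (2+, 2-), red (1+, 0-), dark (0+, 3-); the critical value 5.991 (alpha = 0.05, 2 degrees of freedom) is a standard table value. With counts this small the chi-square approximation is unreliable, so this is illustrative only, and it assumes every row and column total is non-zero.

```python
def chi_square_split(table):
    """Pearson chi-square statistic and degrees of freedom for a split.

    `table` has one row per branch (attribute value) and one column per class:
    chi2 = sum over cells of (observed - expected)^2 / expected, where
    expected = row_total * column_total / grand_total.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    chi2 = 0.0
    for row, r_tot in zip(table, row_totals):
        for observed, c_tot in zip(row, col_totals):
            expected = r_tot * c_tot / grand
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(col_totals) - 1)
    return chi2, dof


# Rows: hair = blond, red, dark; columns: (+, -).
chi2, dof = chi_square_split([[2, 2], [1, 0], [0, 3]])
CRITICAL_0_05_DOF_2 = 5.991            # chi-square table value, alpha = 0.05, 2 dof
print(round(chi2, 3), dof, chi2 > CRITICAL_0_05_DOF_2)  # 3.733 2 False
```

On this tiny sample the split does not reach significance, which illustrates why a significance-based stopping rule can halt growth too early and why post-pruning on held-out data became the preferred alternative.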

(hair = red) ∨ [(hair = blond) & (eyes = blue) ... Example row: short, blond, brown, -. 1. Find the attribute that maximizes the ... Hair: blond (2+, 2-), red (1+, 0-), dark (0+, 3 ...

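Reading a tree as a disjunction of conjunctions is mechanical: every root-to-leaf path that ends in a positive leaf contributes one conjunction of its tests. A minimal sketch over a hypothetical tree shaped like the one these fragments suggest (hair at the root, eyes tested under blond); the tuple-based tree encoding is an assumption, and the output uses "OR" and "&" rather than logic symbols.

```python
def positive_rules(tree, target="+", path=()):
    """Collect one conjunction of (attribute, value) tests per root-to-leaf
    path that ends in `target`; the tree is the disjunction of these rules.

    A tree is either a class label (leaf) or a pair (attribute, {value: subtree}).
    """
    if not isinstance(tree, tuple):
        return [list(path)] if tree == target else []
    attribute, branches = tree
    rules = []
    for value, subtree in branches.items():
        rules += positive_rules(subtree, target, path + ((attribute, value),))
    return rules


def to_formula(rules):
    """Render rules as a disjunction of bracketed conjunctions."""
    return " OR ".join(
        "[" + " & ".join(f"({a} = {v})" for a, v in rule) + "]" for rule in rules
    )


# Hypothetical tree: hair at the root, eyes tested only for blond hair.
tree = ("hair", {
    "red": "+",
    "blond": ("eyes", {"blue": "+", "brown": "-"}),
    "dark": "-",
})
rules = positive_rules(tree)
print(to_formula(rules))  # [(hair = red)] OR [(hair = blond) & (eyes = blue)]
```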

Case study: Bag CART, FLDA, DLDA, DQDA ... Bagging: R package 'ipred'. Random forest: R package 'randomForest' ...

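The slides point to the R packages for bagging and random forests. As an independent illustration of the bagging idea only (not of those packages), here is a minimal Python sketch: each model is trained on a bootstrap sample drawn with replacement, and predictions are combined by majority vote. It uses a hypothetical one-threshold "stump" on 1-D inputs as the base learner instead of CART; the data and `n_models` are illustrative.

```python
import random
from collections import Counter


def train_stump(xs, ys):
    """Fit a one-threshold classifier on 1-D inputs by minimizing training errors."""
    best = None
    for t in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((right if x >= t else left) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: right if x >= t else left


def bagging(xs, ys, n_models=25, seed=0):
    """Train each model on a bootstrap sample (n draws with replacement)
    and predict by majority vote."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(train_stump([xs[i] for i in idx], [ys[i] for i in idx]))

    def predict(x):
        return Counter(model(x) for model in models).most_common(1)[0][0]

    return predict


xs = list(range(10))
ys = [0] * 5 + [1] * 5
predict = bagging(xs, ys)
print([predict(x) for x in (0, 9)])  # [0, 1]
```

Averaging over bootstrap replicates mainly reduces variance, which is why bagging helps most with unstable, easily overfit learners such as fully grown trees.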

if hair_colour='blonde' then if weight='average' then ... Calculating the Disorder of the 'blondes'. The first term of the sum: D(S_blonde) ...

Can be used to predict the outcome in a new situation ... To be informed of, ascertain; to receive instruction. Difficult to measure. Trivial for computers ...

Joint work with T. Minka, Z. Ghahramani, M. Szummer, and R. W. Picard. Motivation ... Approximate a probability distribution by simpler parametric terms (Minka 2001) ...

Risk of overfitting by optimizing hyperparameters. Predictive ARD by expectation propagation (EP) ... of relevance or support vectors on the breast cancer dataset ...

Classification and Regression Trees. Pierre Geurts. Stochastic methods (Prof. L. Wehenkel) ... Goal: from the database, find a function f of the inputs that ...

Data-Oriented Parsing. Remko Scha. Institute for Logic, Language and Computation ... live on this paradoxical slope to which it is doomed by the evanescence of its ...

Handling Continuous-Valued Attributes. Handling Missing Attribute Values. Decision Trees ... attribute as the root, create a branch for each of the values the ...

Extension of joint work with John Langford, TTI Chicago (COLT 2004) ... Standard conversion method from to : logistic (sigmoid) transformation. For each and , set ...

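The logistic (sigmoid) transformation maps any real-valued score into the interval (0, 1). A minimal sketch; which quantities the slide converts between was lost in extraction, so applying it to a raw classifier score here is an assumption.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z)), split by sign to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)               # z < 0, so exp(z) cannot overflow
    return e / (1.0 + e)


print(sigmoid(0.0))                                  # 0.5
print([round(sigmoid(s), 3) for s in (-2.0, 2.0)])   # [0.119, 0.881]
```

The function is symmetric, sigmoid(-z) = 1 - sigmoid(z), so a score of 0 maps to 0.5 and the sign of the score decides which side of 0.5 the output falls on.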

We study Bayesian and Minimum Description Length (MDL) inference in ... Our inconsistency result also holds for (various incarnations of) MDL learning algorithm ...

Non-Symbolic AI, Lecture 9: Data mining using techniques such as Genetic Algorithms and Neural Networks. Looking for statistically significant patterns hidden ...

Examples are represented by attribute-value pairs ... Define the classes and attributes in the .names file (labor-neg.names): Good, bad. Duration: continuous. ...

If we have a big data set that needs a complicated model, the full Bayesian ... random collection of one-dimensional datapoints (except for nasty special cases) ...

XX denotes a row with very extreme X values. Values of Predictors for New Observations ... Temperature (x1) degrees Fahrenheit. Pressure (x2) pounds per ...

Title: CSIS 0323 Advanced Database Systems, Spring 2003. Author: hkucsis. Created Date: 1/18/2003 8:56:22 PM. Document presentation format: On-screen Show.

... Discovery in Databases (KDD) is an emerging area that considers the process of ... composed of arbiters that are computed in a bottom-up, binary-tree fashion. ...

Build a decision tree. Each node represents a test. Training instances are split at ... Fewest nodes? Which trees are the best predictors of unseen data? ...

... greedy FS & regularization. Classical Bayesian feature ... Regularization: use sparse prior to enhance the sparsity of a trained predictor (classifier) ...
